Aria Khoshsirat

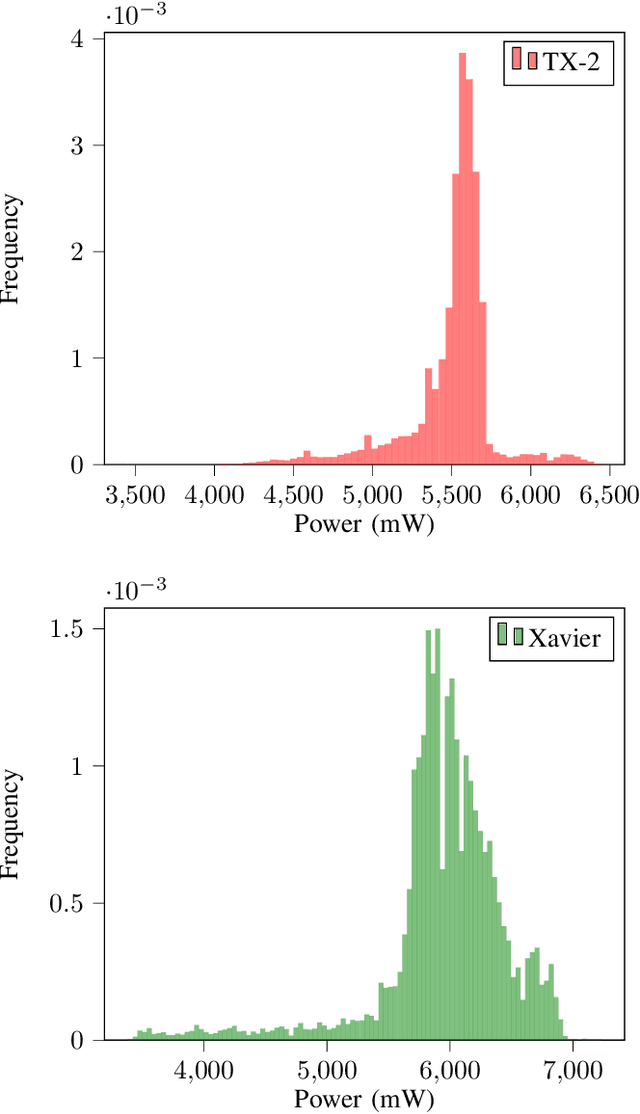

Accurate Calibration of Power Measurements from Internal Power Sensors on NVIDIA Jetson Devices

Jun 19, 2023

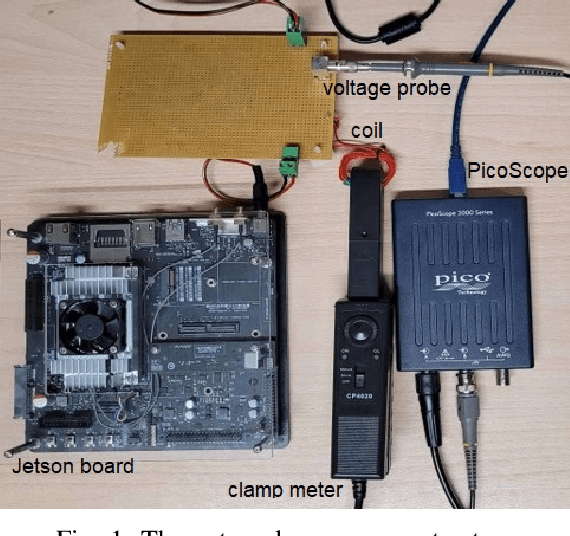

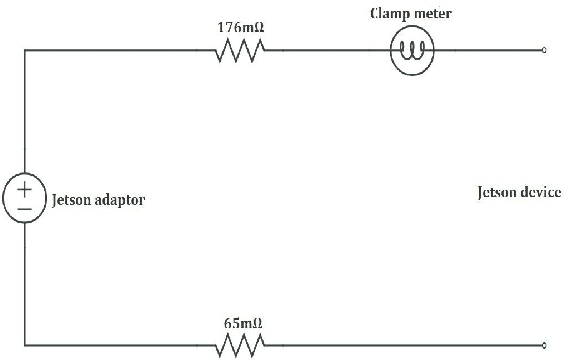

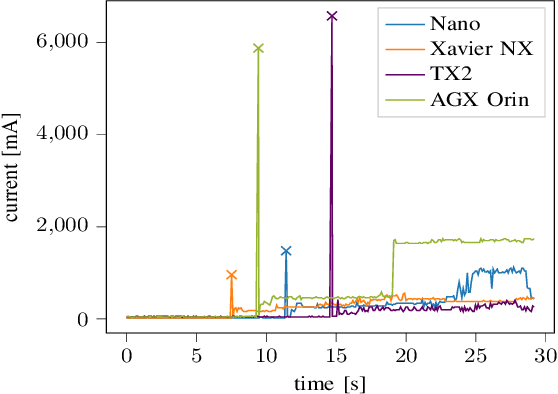

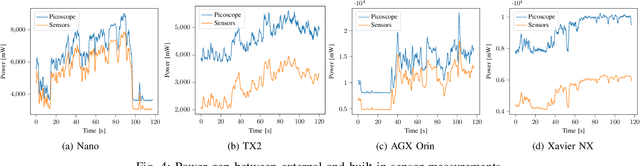

Abstract: Power efficiency is a crucial consideration for embedded systems design, particularly in the field of edge computing and IoT devices. This study aims to calibrate the power measurements obtained from the built-in sensors of NVIDIA Jetson devices, facilitating the collection of reliable and precise power consumption data in real time. To achieve this goal, accurate power readings are obtained using external hardware, and a regression model is proposed to map the sensor measurements to the true power values. Our results provide insights into the accuracy and reliability of the built-in power sensors for various Jetson edge boards and highlight the importance of calibrating their internal power readings. In detail, internal sensors underestimate the actual power by up to 50% in most cases, but this calibration reduces the error to within 3%. By making the internal sensor data usable for precise online assessment of power and energy figures, the regression models presented in this paper have practical applications, for both practitioners and researchers, in accurately designing energy-efficient and autonomous edge services.
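As a rough illustration of what mapping internal sensor readings to externally measured power can look like, the sketch below fits a straight line by ordinary least squares. The abstract says only that a regression model is used, so the linear form, the function names, and the sample readings are all assumptions made for illustration, not the paper's model or data.

```python
from statistics import mean


def fit_linear_calibration(internal_w, reference_w):
    """Least-squares fit of reference = slope * internal + intercept.

    The linear form is an assumption; the paper's regression model may differ.
    """
    if len(internal_w) != len(reference_w) or len(internal_w) < 2:
        raise ValueError("need two equal-length series with at least 2 samples")
    x_bar, y_bar = mean(internal_w), mean(reference_w)
    sxx = sum((x - x_bar) ** 2 for x in internal_w)
    if sxx == 0:
        raise ValueError("internal readings must not all be identical")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(internal_w, reference_w))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def calibrate(reading_w, slope, intercept):
    """Apply the fitted line to a new internal sensor reading (in watts)."""
    return slope * reading_w + intercept


# Hypothetical readings in watts, invented for this example only.
internal = [2.0, 4.0, 6.0, 8.0]      # built-in sensor
reference = [3.5, 6.5, 9.5, 12.5]    # external measurement hardware

slope, intercept = fit_linear_calibration(internal, reference)
corrected = calibrate(10.0, slope, intercept)
```

Once the coefficients are fitted offline against external hardware, `calibrate` can be applied to each internal reading at run time without the external equipment attached.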

Divide and Save: Splitting Workload Among Containers in an Edge Device to Save Energy and Time

Feb 13, 2023

Abstract: The increasing demand for edge computing is leading to a rise in energy consumption from edge devices, which can have significant environmental and financial implications. To address this, in this paper we present a novel method to enhance the energy efficiency while speeding up computations by distributing the workload among multiple containers in an edge device. Experiments are conducted on two NVIDIA Jetson edge boards, the TX2 and the AGX Orin, exploring how using a different number of containers can affect the energy consumption and the computational time for an inference task. To demonstrate the effectiveness of our splitting approach, a video object detection task is conducted using an embedded version of the state-of-the-art YOLO algorithm, quantifying the energy and the time savings achieved compared to doing the computations on a single container. The proposed method can help mitigate the environmental and economic consequences of high energy consumption in edge computing, by providing a more sustainable approach to managing the workload of edge devices.
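One simple way to picture dividing a video inference job among containers is to give each container a contiguous block of frames. The sketch below does only that partitioning step; the abstract does not describe how the workload is actually split, so the contiguous-chunk strategy and the name `split_frames` are assumptions for illustration.

```python
def split_frames(num_frames, num_containers):
    """Partition frame indices 0..num_frames-1 into contiguous, near-equal chunks.

    Hypothetical helper: each returned range would be handed to one container.
    """
    if num_containers < 1:
        raise ValueError("num_containers must be at least 1")
    if num_frames < 0:
        raise ValueError("num_frames must be non-negative")
    base, extra = divmod(num_frames, num_containers)
    chunks = []
    start = 0
    for i in range(num_containers):
        # The first `extra` containers take one additional frame each.
        size = base + (1 if i < extra else 0)
        chunks.append(range(start, start + size))
        start += size
    return chunks


chunks = split_frames(10, 3)
sizes = [len(c) for c in chunks]
```

Chunk sizes differ by at most one frame, so no container is left with a noticeably larger share of the video.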

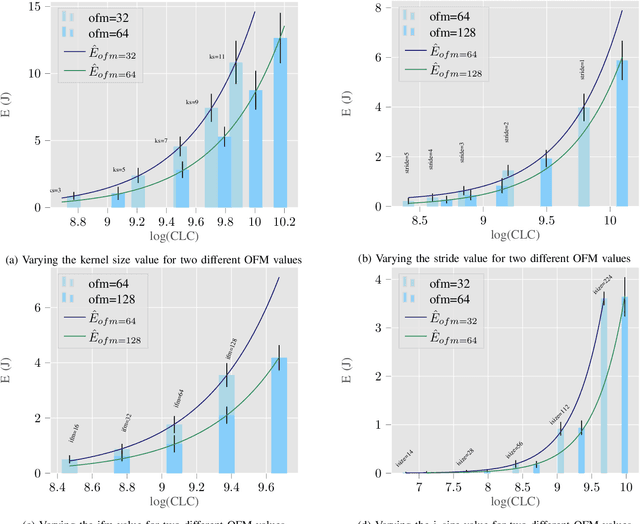

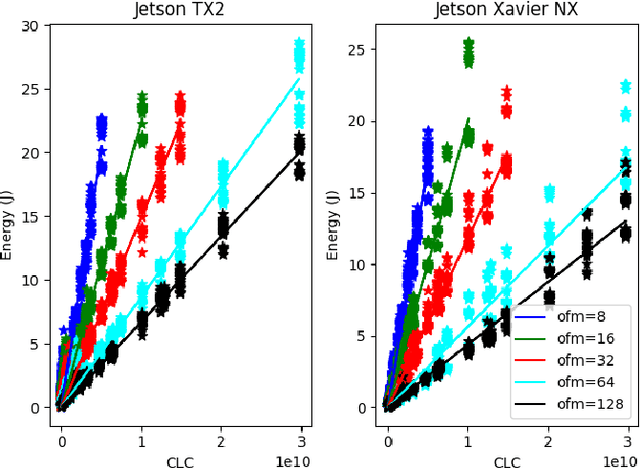

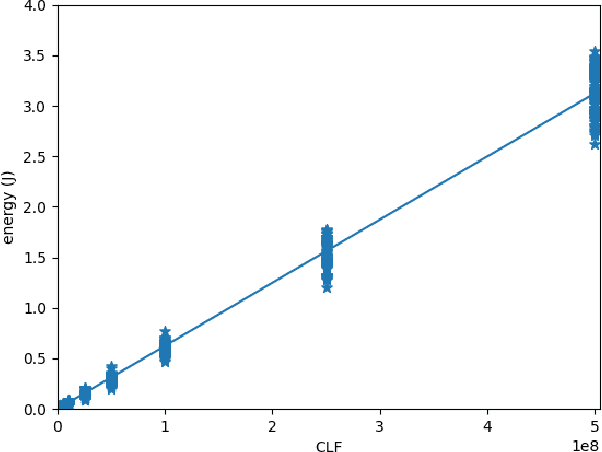

Energy Consumption of Neural Networks on NVIDIA Edge Boards: an Empirical Model

Oct 04, 2022

Abstract: Recently, there has been a trend of shifting the execution of deep learning inference tasks toward the edge of the network, closer to the user, to reduce latency and preserve data privacy. At the same time, growing interest is being devoted to the energetic sustainability of machine learning. At the intersection of these trends, we hence find the energetic characterization of machine learning at the edge, which is attracting increasing attention. Unfortunately, calculating the energy consumption of a given neural network during inference is complicated by the heterogeneity of the possible underlying hardware implementations. In this work, we hence aim at profiling the energy consumption of inference tasks for some modern edge nodes and deriving simple but realistic models. To this end, we performed a large number of experiments to collect the energy consumption of convolutional and fully connected layers on two well-known edge boards by NVIDIA, namely Jetson TX2 and Xavier. From the measurements, we have then distilled a simple, practical model that can provide an estimate of the energy consumption of a certain inference task on the considered boards. We believe that this model can be used in many contexts, for instance to guide the search for efficient architectures in Neural Architecture Search, as a heuristic in Neural Network pruning, to find energy-efficient offloading strategies in a Split computing context, or simply to evaluate the energetic performance of Deep Neural Network architectures.

Quantifying Uncertainty from Different Sources in Deep Neural Networks for Image Classification

Dec 18, 2020

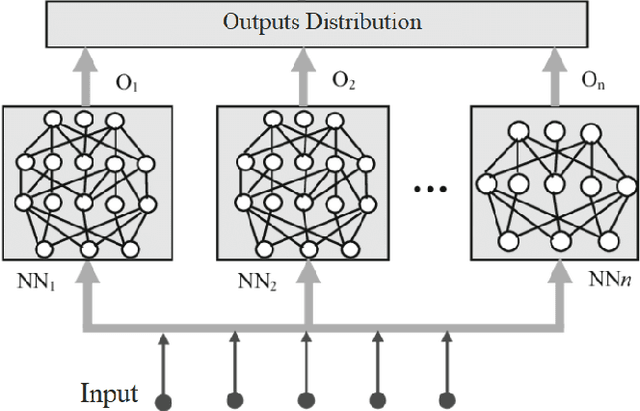

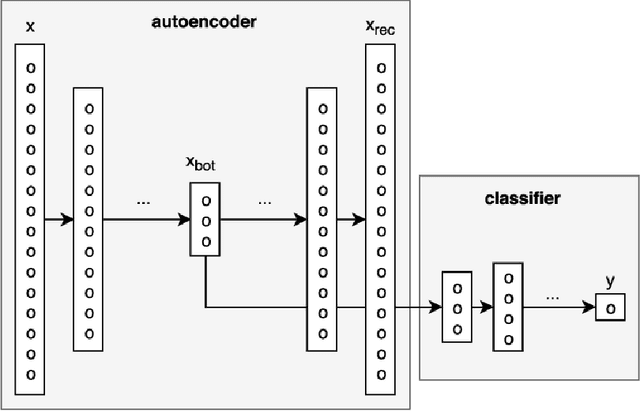

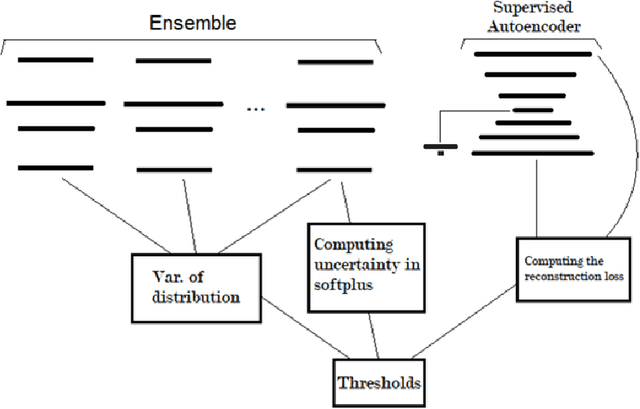

Abstract: Quantifying uncertainty in a model's predictions is important as it enables, for example, the safety of an AI system to be increased by acting on the model's output in an informed manner. We cannot expect a system to be 100% accurate or perfect at its task; however, we can equip the system with some tools to inform us if it is not certain about a prediction. This way, a second check can be performed, or the task can be passed to a human specialist. This is crucial for applications where the cost of an error is high, such as in autonomous vehicle control, medical image analysis, financial estimations or legal fields. Deep Neural Networks are powerful black box predictors that have recently achieved impressive performance on a wide spectrum of tasks. Quantifying predictive uncertainty in DNNs is a challenging and still ongoing problem. Although there have been many efforts to equip NNs with tools to estimate uncertainty, such as Monte Carlo Dropout, most of the previous methods focus on only one of the three types of uncertainty: model, data or distributional. In this paper we propose a complete framework to capture and quantify all three types of uncertainty in DNNs for image classification. This framework includes an ensemble of CNNs to capture model uncertainty, a supervised reconstruction auto-encoder to capture distributional uncertainty, and the output of the activation functions in the last layer of the network to capture data uncertainty. Finally, we demonstrate the efficiency of our method on popular image datasets for classification.
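To make the distinction between model and data uncertainty concrete, the sketch below applies a common entropy-based decomposition to the softmax outputs of an ensemble: the entropy of the averaged prediction is split into the average per-member entropy (often read as data uncertainty) and the remainder, the mutual information (often read as model uncertainty). This decomposition is a standard technique swapped in for illustration; it is not claimed to be the measure used in the paper, and the function names are hypothetical. The distributional part (the auto-encoder) is not covered here.

```python
import math


def entropy(probs):
    """Shannon entropy in nats; zero-probability classes contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def ensemble_uncertainty(member_probs):
    """Decompose ensemble uncertainty for one input.

    member_probs: list of per-member class-probability lists (each sums to 1).
    Returns (mean prediction, total, data, model) where
    total = entropy of the mean prediction,
    data  = mean entropy of the individual members,
    model = total - data (mutual information).
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    mean_p = [sum(m[c] for m in member_probs) / n_members for c in range(n_classes)]
    total = entropy(mean_p)
    data = sum(entropy(m) for m in member_probs) / n_members
    return mean_p, total, data, total - data


# Members agree that the input is ambiguous: uncertainty is all "data".
_, total_a, data_a, model_a = ensemble_uncertainty([[0.5, 0.5], [0.5, 0.5]])

# Members are individually confident but disagree: uncertainty is all "model".
_, total_b, data_b, model_b = ensemble_uncertainty([[1.0, 0.0], [0.0, 1.0]])
```

Both toy cases have the same total uncertainty, yet the split differs completely, which is the reason for separating the sources rather than reporting a single number.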
