Tommaso Melodia

SIA: Symbolic Interpretability for Anticipatory Deep Reinforcement Learning in Network Control

Jan 29, 2026

Abstract:Deep reinforcement learning (DRL) promises adaptive control for future mobile networks, but conventional agents remain reactive: they act on past and current measurements and cannot leverage short-term forecasts of exogenous KPIs such as bandwidth. Augmenting agents with predictions can overcome this temporal myopia, yet uptake in networking is scarce because forecast-aware agents act as closed boxes; operators cannot tell whether predictions guide decisions or justify the added complexity. We propose SIA, the first interpreter that exposes in real time how forecast-augmented DRL agents operate. SIA fuses Symbolic AI abstractions with per-KPI Knowledge Graphs to produce explanations, and includes a new Influence Score metric. SIA achieves sub-millisecond speed, over 200x faster than existing XAI methods. We evaluate SIA on three diverse networking use cases, uncovering hidden issues, including temporal misalignment in forecast integration and reward-design biases that trigger counter-productive policies. These insights enable targeted fixes: a redesigned agent achieves a 9% higher average bitrate in video streaming, and SIA's online Action-Refinement module improves RAN-slicing reward by 25% without retraining. By making anticipatory DRL transparent and tunable, SIA lowers the barrier to proactive control in next-generation mobile networks.

Reliable LLM-Based Edge-Cloud-Expert Cascades for Telecom Knowledge Systems

Dec 23, 2025

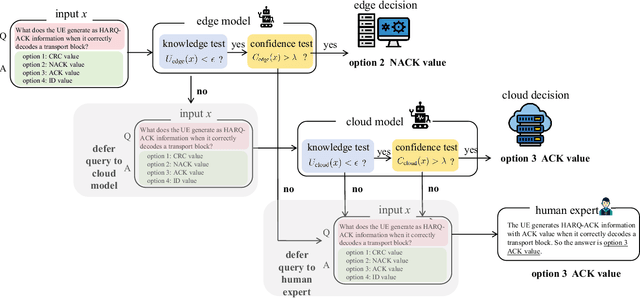

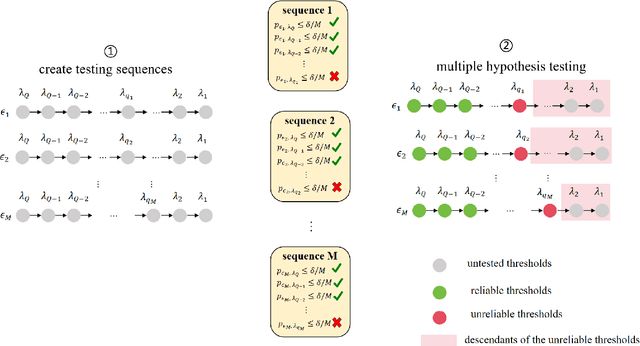

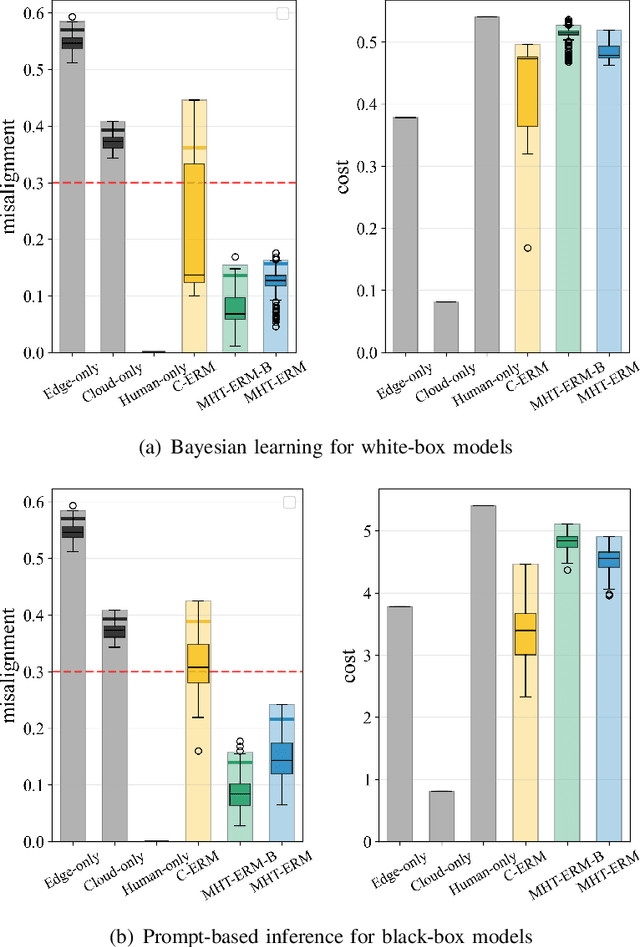

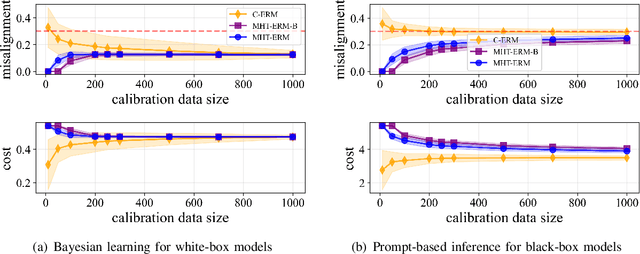

Abstract:Large language models (LLMs) are emerging as key enablers of automation in domains such as telecommunications, assisting with tasks including troubleshooting, standards interpretation, and network optimization. However, their deployment in practice must balance inference cost, latency, and reliability. In this work, we study an edge-cloud-expert cascaded LLM-based knowledge system that supports decision-making through a question-and-answer pipeline. In it, an efficient edge model handles routine queries, a more capable cloud model addresses complex cases, and human experts are involved only when necessary. We define a misalignment-cost constrained optimization problem, aiming to minimize average processing cost, while guaranteeing alignment of automated answers with expert judgments. We propose a statistically rigorous threshold selection method based on multiple hypothesis testing (MHT) for a query processing mechanism based on knowledge and confidence tests. The approach provides finite-sample guarantees on misalignment risk. Experiments on the TeleQnA dataset -- a telecom-specific benchmark -- demonstrate that the proposed method achieves superior cost-efficiency compared to conventional cascaded baselines, while ensuring reliability at prescribed confidence levels.
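The escalation logic described in this abstract can be illustrated with a minimal sketch. It assumes each model returns an answer together with a confidence score in [0, 1] and that the two thresholds have already been chosen offline; the abstract's MHT-based threshold selection and its knowledge test are not reproduced here. All names (`Cascade`, `edge_threshold`, the stub models) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical model interface: takes a query, returns (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


@dataclass
class Cascade:
    edge: Model                    # cheap model, tried first
    cloud: Model                   # more capable model, tried second
    expert: Callable[[str], str]   # human fallback, assumed always reliable
    edge_threshold: float          # assumed to be calibrated offline
    cloud_threshold: float         # assumed to be calibrated offline

    def answer(self, query: str) -> Tuple[str, str]:
        """Return (answer, tier that produced it)."""
        ans, conf = self.edge(query)
        if conf >= self.edge_threshold:
            return ans, "edge"
        ans, conf = self.cloud(query)
        if conf >= self.cloud_threshold:
            return ans, "cloud"
        return self.expert(query), "expert"


# Stub models for illustration only.
def stub_edge(query: str) -> Tuple[str, float]:
    return "edge-answer", 0.9 if "routine" in query else 0.2


def stub_cloud(query: str) -> Tuple[str, float]:
    return "cloud-answer", 0.8 if "complex" in query else 0.1


def stub_expert(query: str) -> str:
    return "expert-answer"


cascade = Cascade(stub_edge, stub_cloud, stub_expert,
                  edge_threshold=0.7, cloud_threshold=0.6)
```

Raising either threshold sends more queries to the costlier tier, which is the cost-versus-reliability trade-off the abstract's threshold selection is meant to control.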

AgentRAN: An Agentic AI Architecture for Autonomous Control of Open 6G Networks

Aug 25, 2025

Abstract:The Open RAN movement has catalyzed a transformation toward programmable, interoperable cellular infrastructures. Yet, today's deployments still rely heavily on static control and manual operations. To move beyond this limitation, we introduce AgentRAN, an AI-native, Open RAN-aligned agentic framework that generates and orchestrates a fabric of distributed AI agents based on Natural Language (NL) intents. Unlike traditional approaches that require explicit programming, AgentRAN's LLM-powered agents interpret natural language intents, negotiate strategies through structured conversations, and orchestrate control loops across the network. AgentRAN instantiates a self-organizing hierarchy of agents that decompose complex intents across time scales (from sub-millisecond to minutes), spatial domains (cell to network-wide), and protocol layers (PHY/MAC to RRC). A central innovation is the AI-RAN Factory, an automated synthesis pipeline that observes agent interactions and continuously generates new agents embedding improved control algorithms, effectively transforming the network from a static collection of functions into an adaptive system capable of evolving its own intelligence. We demonstrate AgentRAN through live experiments on 5G testbeds where competing user demands are dynamically balanced through cascading intents. By replacing rigid APIs with NL coordination, AgentRAN fundamentally redefines how future 6G networks autonomously interpret, adapt, and optimize their behavior to meet operator goals.

Enabling Site-Specific Cellular Network Simulation Through Ray-Tracing-Driven ns-3

Aug 06, 2025

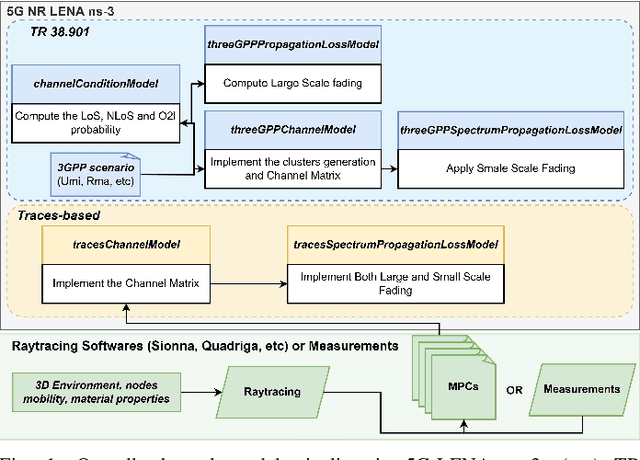

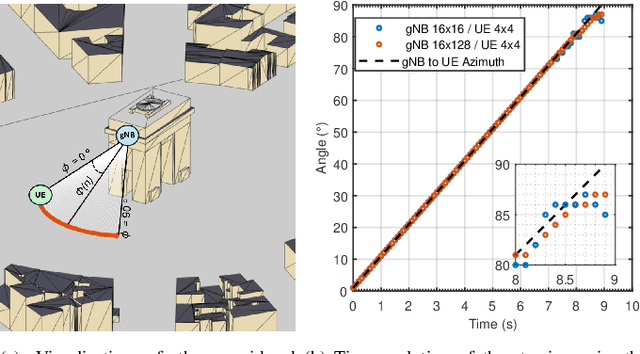

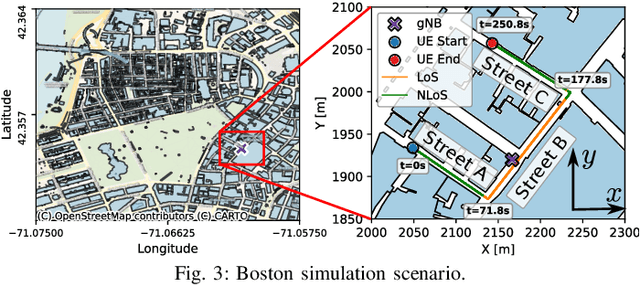

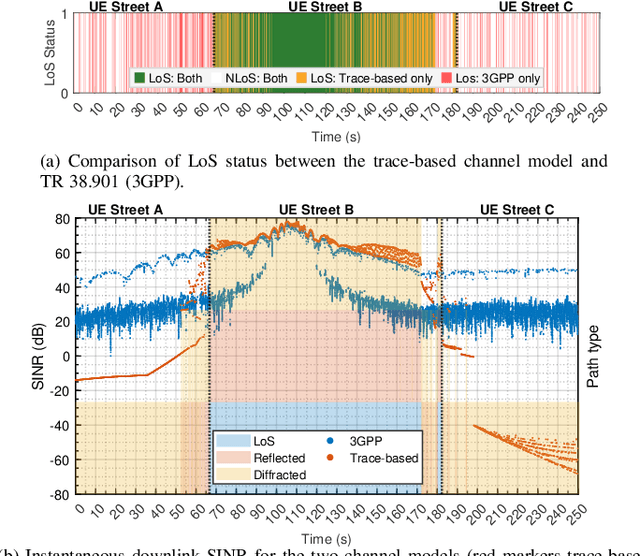

Abstract:Evaluating cellular systems, from 5G New Radio (NR) and 5G-Advanced to 6G, is challenging because the performance emerges from the tight coupling of propagation, beam management, scheduling, and higher-layer interactions. System-level simulation is therefore indispensable, yet the vast majority of studies rely on the statistical 3GPP channel models. These are well suited to capture average behavior across many statistical realizations, but cannot reproduce site-specific phenomena such as corner diffraction, street-canyon blockage, or deterministic line-of-sight conditions and angle-of-departure/arrival relationships that drive directional links. This paper extends 5G-LENA, an NR module for the system-level Network Simulator 3 (ns-3), with a trace-based channel model that processes the Multipath Components (MPCs) obtained from external ray-tracers (e.g., Sionna Ray Tracer (RT)) or measurement campaigns. Our module constructs frequency-domain channel matrices and feeds them to the existing Physical (PHY)/Medium Access Control (MAC) stack without any further modifications. The result is a geometry-based channel model that remains fully compatible with the standard 3GPP implementation in 5G-LENA, while delivering site-specific geometric fidelity. This new module provides a key building block toward Digital Twin (DT) capabilities by offering realistic site-specific channel modeling, unlocking studies that require site awareness, including beam management, blockage mitigation, and environment-aware sensing. We demonstrate its capabilities for precise beam-steering validation and end-to-end metric analysis. In both cases, the trace-driven engine exposes performance inflections that the statistical model does not exhibit, confirming its value for high-fidelity system-level cellular networks research and as a step toward DT applications.
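As a rough illustration of how multipath components map to a frequency-domain channel, the sketch below evaluates the textbook sum H(f) = Σ_k a_k · exp(-j2πfτ_k) for a single antenna pair. This is a simplification: the abstract describes full channel matrices inside 5G-LENA, and the function name and the (gain, delay) tuple layout are assumptions made here for illustration, not the module's interface.

```python
import cmath
import math
from typing import List, Sequence, Tuple

# One multipath component: (complex gain, delay in seconds). Hypothetical layout.
MPC = Tuple[complex, float]


def channel_frequency_response(mpcs: Sequence[MPC],
                               freqs_hz: Sequence[float]) -> List[complex]:
    """Evaluate H(f) = sum_k a_k * exp(-j*2*pi*f*tau_k) at each frequency."""
    response = []
    for f in freqs_hz:
        h = sum((a * cmath.exp(-2j * math.pi * f * tau) for a, tau in mpcs),
                0j)
        response.append(h)
    return response


# Two equal-gain paths 1 microsecond apart: they add at 0 Hz and at 1 MHz
# offset, and cancel at 0.5 MHz offset.
two_path = [(1 + 0j, 0.0), (1 + 0j, 1e-6)]
h = channel_frequency_response(two_path, [0.0, 0.5e6, 1.0e6])
```

The deep fade at 0.5 MHz offset is the kind of deterministic, geometry-dependent behavior that a statistical channel model averages over.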

Beyond Connectivity: An Open Architecture for AI-RAN Convergence in 6G

Jul 09, 2025

Abstract:The proliferation of data-intensive Artificial Intelligence (AI) applications at the network edge demands a fundamental shift in RAN design, from merely consuming AI for network optimization, to actively enabling distributed AI workloads. This paradigm shift presents a significant opportunity for network operators to monetize AI at the edge while leveraging existing infrastructure investments. To realize this vision, this article presents a novel converged O-RAN and AI-RAN architecture that unifies orchestration and management of both telecommunications and AI workloads on shared infrastructure. The proposed architecture extends the Open RAN principles of modularity, disaggregation, and cloud-nativeness to support heterogeneous AI deployments. We introduce two key architectural innovations: (i) the AI-RAN Orchestrator, which extends the O-RAN Service Management and Orchestration (SMO) to enable integrated resource allocation across RAN and AI workloads; and (ii) AI-RAN sites that provide distributed edge AI platforms with real-time processing capabilities. The proposed system supports flexible deployment options, allowing AI workloads to be orchestrated with specific timing requirements (real-time or batch processing) and geographic targeting. The proposed architecture addresses the orchestration requirements for managing heterogeneous workloads at different time scales while maintaining open, standardized interfaces and multi-vendor interoperability.

Agentic Semantic Control for Autonomous Wireless Space Networks: Extending Space-O-RAN with MCP-Driven Distributed Intelligence

Jun 12, 2025

Abstract:Lunar surface operations impose stringent requirements on wireless communication systems, including autonomy, robustness to disruption, and the ability to adapt to environmental and mission-driven context. While Space-O-RAN provides a distributed orchestration model aligned with 3GPP standards, its decision logic is limited to static policies and lacks semantic integration. We propose a novel extension incorporating a semantic agentic layer enabled by the Model Context Protocol (MCP) and Agent-to-Agent (A2A) communication protocols, allowing context-aware decision making across real-time, near-real-time, and non-real-time control layers. Distributed cognitive agents deployed in rovers, landers, and lunar base stations implement wireless-aware coordination strategies, including delay-adaptive reasoning and bandwidth-aware semantic compression, while interacting with multiple MCP servers to reason over telemetry, locomotion planning, and mission constraints.

5G Aero: A Prototyping Platform for Evaluating Aerial 5G Communications

Jun 10, 2025

Abstract:The application of small-factor, 5G-enabled Unmanned Aerial Vehicles (UAVs) has recently gained significant interest in various aerial and Industry 4.0 applications. However, ensuring reliable, high-throughput, and low-latency 5G communication in aerial applications remains a critical and underexplored problem. This paper presents the 5th generation (5G) Aero, a compact UAV optimized for 5G connectivity, aimed at fulfilling stringent 3rd Generation Partnership Project (3GPP) requirements. We conduct a set of experiments in an indoor environment, evaluating the UAV's ability to establish high-throughput, low-latency communications in both Line-of-Sight (LoS) and Non-Line-of-Sight (NLoS) conditions. Our findings demonstrate that the 5G Aero meets the required 3GPP standards for Command and Control (C2) packet latency in both LoS and NLoS conditions and for video latency in LoS communications, and that it maintains acceptable latency levels for video transmission in NLoS conditions. Additionally, we show that the 5G module installed on the UAV introduces a negligible 1% decrease in flight time, showing that 5G technologies can be integrated into commercial off-the-shelf UAVs with minimal impact on battery lifetime. This paper contributes to the literature by demonstrating the practical capabilities of current 5G networks to support advanced UAV operations in telecommunications, offering insights into potential enhancements and optimizations for UAV performance in 5G networks.

Bistatic Sensing in 5G NR

May 18, 2025

Abstract:In this work, we propose and evaluate the performance of a 5th generation (5G) New Radio (NR) bistatic Integrated Sensing and Communication (ISaC) system. Unlike the full-duplex monostatic ISaC systems, the bistatic approach enables sensing in the current cellular networks without significantly modifying the transceiver design. The sensing utilizes data channels, such as the Physical Uplink Shared Channel (PUSCH), which carries information on the air interface. We provide the maximum likelihood estimator for the delay and Doppler parameters and derive a lower bound on the Mean Square Error (MSE) for a single target scenario. Link-level simulations show that it is possible to achieve significant throughput while accurately estimating the sensing parameters with PUSCH. Moreover, the results reveal an interesting tradeoff between the number of reference symbols, sensing performance, and throughput in the proposed 5G NR bistatic ISaC system.

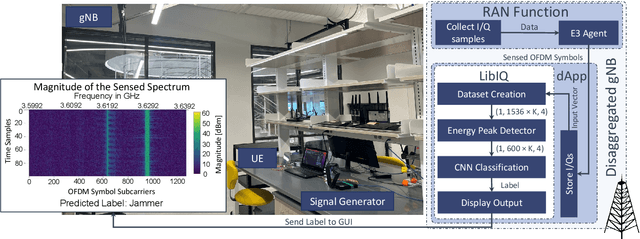

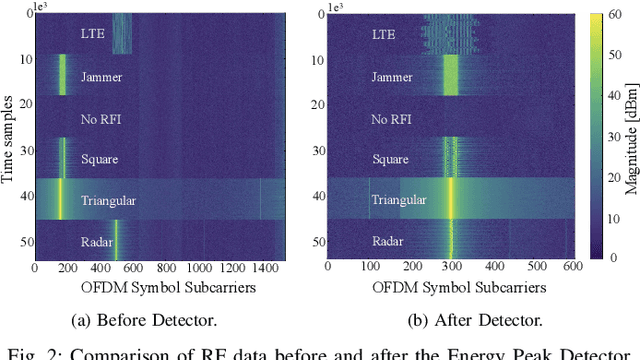

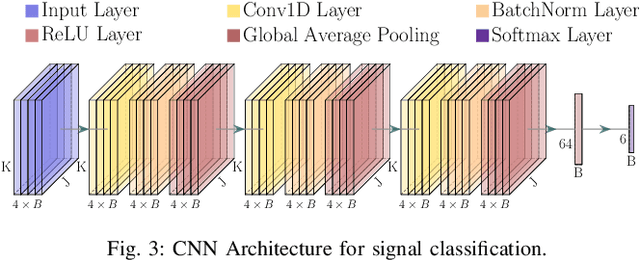

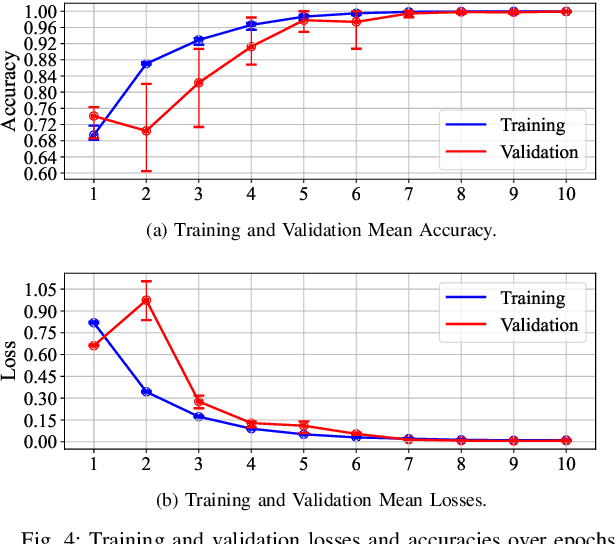

LibIQ: Toward Real-Time Spectrum Classification in O-RAN dApps

May 15, 2025

Abstract:The O-RAN architecture is transforming cellular networks by adopting RAN softwarization and disaggregation concepts to enable data-driven monitoring and control of the network. Such management is enabled by RICs, which facilitate near-real-time and non-real-time network control through xApps and rApps. However, they face limitations, including latency overhead in data exchange between the RAN and RIC, restricting real-time monitoring, and the inability to access user-plane data due to privacy and security constraints, hindering use cases like beamforming and spectrum classification. In this paper, we leverage the dApps concept to enable real-time RF spectrum classification with LibIQ, a novel library for RF signals that facilitates efficient spectrum monitoring and signal classification by providing functionalities to read I/Q samples as time-series, create datasets, and visualize time-series data through plots and spectrograms. Thanks to LibIQ, I/Q samples can be efficiently processed to detect external RF signals, which are subsequently classified using a CNN inside the library. To achieve accurate spectrum analysis, we created an extensive dataset of time-series-based I/Q samples, representing distinct signal types captured using a custom dApp running on a 5G deployment over the Colosseum network emulator and an OTA testbed. We evaluate our model by deploying LibIQ in heterogeneous scenarios with varying center frequencies, time windows, and external RF signals. In real-time analysis, the model classifies the processed I/Q samples, achieving an average accuracy of approximately 97.8% in identifying signal types across all scenarios. We pledge to release both LibIQ and the dataset created as a publicly available framework upon acceptance.
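To make the I/Q-to-spectrogram step concrete, here is a minimal standard-library sketch. It is not LibIQ's API, which this listing does not show: the function names, the interleaved I, Q sample layout, and the use of a naive DFT over non-overlapping windows are all assumptions chosen for illustration.

```python
import cmath
import math
from typing import List, Sequence


def iq_to_complex(interleaved: Sequence[float]) -> List[complex]:
    """Pair interleaved I, Q values into complex samples (assumed layout)."""
    return [complex(i, q) for i, q in zip(interleaved[0::2], interleaved[1::2])]


def spectrogram(samples: Sequence[complex], window: int) -> List[List[float]]:
    """Power per frequency bin for consecutive non-overlapping windows.

    Uses a naive O(window^2) DFT; a real pipeline would use an FFT.
    """
    frames = []
    for start in range(0, len(samples) - window + 1, window):
        frame = samples[start:start + window]
        powers = []
        for m in range(window):
            acc = sum((x * cmath.exp(-2j * math.pi * m * n / window)
                       for n, x in enumerate(frame)), 0j)
            powers.append(abs(acc) ** 2)
        frames.append(powers)
    return frames


# A complex tone placed exactly on bin 3 of an 8-point window, two windows long.
tone = [cmath.exp(2j * math.pi * 3 * n / 8) for n in range(16)]
frames = spectrogram(tone, window=8)
peak_bins = [max(range(8), key=frame.__getitem__) for frame in frames]
```

Each row of `frames` is one time slice; stacking rows gives the time-frequency image that a classifier such as the CNN mentioned in the abstract could take as input.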

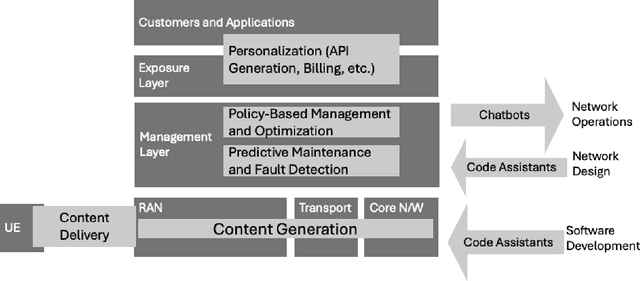

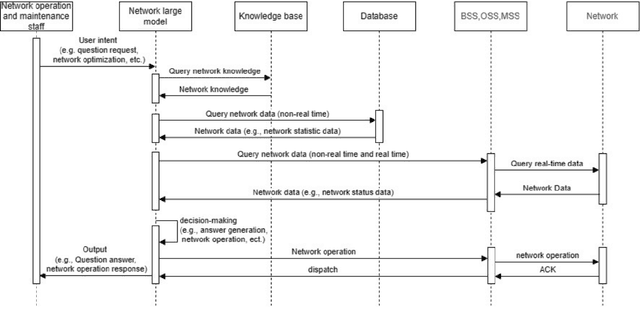

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

Abstract:This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.
