Davide Villa

TIMESAFE: Timing Interruption Monitoring and Security Assessment for Fronthaul Environments

Dec 17, 2024

Abstract:5G and beyond cellular systems embrace the disaggregation of Radio Access Network (RAN) components, exemplified by the evolution of the fronthaul (FH) connection between cellular baseband and radio unit equipment. Crucially, synchronization over the FH is pivotal for reliable 5G services. In recent years, there has been a push to move these links to an Ethernet-based packet network topology, leveraging existing standards and ongoing research for Time-Sensitive Networking (TSN). However, TSN standards, such as Precision Time Protocol (PTP), focus on performance with little to no concern for security. This increases the exposure of the open FH to security risks. Attacks targeting synchronization mechanisms pose significant threats, potentially disrupting 5G networks and impairing connectivity. In this paper, we demonstrate the impact of successful spoofing and replay attacks against PTP synchronization. We show how a spoofing attack is able to cause a production-ready O-RAN and 5G-compliant private cellular base station to catastrophically fail within 2 seconds of the attack, necessitating manual intervention to restore full network operations. To counter this, we design a Machine Learning (ML)-based monitoring solution capable of detecting various malicious attacks with over 97.5% accuracy.

Driving Innovation in 6G Wireless Technologies: The OpenAirInterface Approach

Dec 17, 2024

Abstract:The development of 6G wireless technologies is rapidly advancing, with the 3rd Generation Partnership Project (3GPP) entering the pre-standardization phase and aiming to deliver the first specifications by 2028. This paper explores the OpenAirInterface (OAI) project, an open-source initiative that plays a crucial role in the evolution of 5G and the future 6G networks. OAI provides a comprehensive implementation of 3GPP and O-RAN compliant networks, including Radio Access Network (RAN), Core Network (CN), and software-defined User Equipment (UE) components. The paper details the history and evolution of OAI, its licensing model, and the various projects under its umbrella, such as RAN, the CN, as well as the Operations, Administration and Maintenance (OAM) projects. It also highlights the development methodology, Continuous Integration/Continuous Delivery (CI/CD) processes, and end-to-end systems powered by OAI. Furthermore, the paper discusses the potential of OAI for 6G research, focusing on spectrum, reflective intelligent surfaces, and Artificial Intelligence (AI)/Machine Learning (ML) integration. The open-source approach of OAI is emphasized as essential for tackling the challenges of 6G, fostering community collaboration, and driving innovation in next-generation wireless technologies.

AI-assisted Agile Propagation Modeling for Real-time Digital Twin Wireless Networks

Oct 29, 2024

Abstract:Accurate real-time channel modeling faces remarkable challenges due to the complexities of traditional methods such as ray tracing and field measurements. AI-based techniques have emerged to address these limitations, offering rapid, precise predictions of channel properties through ground truth data. This paper introduces an innovative approach to real-time, high-fidelity propagation modeling through advanced deep learning. Our model integrates 3D geographical data and rough propagation estimates to generate precise path gain predictions. By positioning the transmitter centrally, we simplify the model and enhance its computational efficiency, making it amenable to larger scenarios. Our approach achieves a normalized Root Mean Squared Error of less than 0.035 dB over a 37,210 square meter area, processing in just 46 ms on a GPU and 183 ms on a CPU. This performance significantly surpasses traditional high-fidelity ray tracing methods, which require approximately three orders of magnitude more time. Additionally, the model's adaptability to real-world data highlights its potential to revolutionize wireless network design and optimization by enabling real-time creation of adaptive digital twins of real-world wireless scenarios in dynamic environments.

Demo: Intelligent Radar Detection in CBRS Band in the Colosseum Wireless Network Emulator

Sep 16, 2023

Abstract:The ever-growing number of wireless communication devices and technologies demands spectrum-sharing techniques. Effective coexistence management is crucial to avoid harmful interference, especially with critical systems like nautical and aerial radars in which incumbent radios operate mission-critical communication links. In this demo, we showcase a framework that leverages Colosseum, the world's largest wireless network emulator with hardware-in-the-loop, as a playground to study commercial radar waveforms coexisting with a cellular network in the CBRS band in complex environments. We create an ad-hoc high-fidelity spectrum-sharing scenario for this purpose. We deploy a cellular network to collect IQ samples with the aim of training an ML agent that runs at the base station. The agent has the goal of detecting incumbent radar transmissions and vacating the cellular bandwidth to avoid interfering with the radar operations. Our experimental results show an average detection accuracy of 88%, with an average detection time of 137 ms.

Twinning Commercial Radio Waveforms in the Colosseum Wireless Network Emulator

Aug 18, 2023

Abstract:Because of the ever-growing number of wireless consumers, spectrum-sharing techniques have become increasingly common in the wireless ecosystem, with the main goal of avoiding harmful interference to coexisting communication systems. This is even more important when considering systems, such as nautical and aerial fleet radars, in which incumbent radios operate mission-critical communication links. To study, develop, and validate these solutions, adequate platforms, such as the Colosseum wireless network emulator, are key as they enable experimentation with spectrum-sharing heterogeneous radio technologies in controlled environments. In this work, we demonstrate how Colosseum can be used to twin commercial radio waveforms to evaluate the coexistence of such technologies in complex wireless propagation environments. To this aim, we create a high-fidelity spectrum-sharing scenario on Colosseum to evaluate the impact of twinned commercial radar waveforms on a cellular network operating in the CBRS band. Then, we leverage IQ samples collected on the testbed to train a machine learning agent that runs at the base station to detect the presence of incumbent radar transmissions and vacate the bandwidth to avoid causing them harmful interference. Our results show an average detection accuracy of 88%, with accuracy above 90% in SNR regimes above 0 dB and SINR regimes above -20 dB, and with an average detection time of 137 ms.

Colosseum as a Digital Twin: Bridging Real-World Experimentation and Wireless Network Emulation

Apr 13, 2023

Abstract:Wireless network emulators are being increasingly used for developing and evaluating new solutions for Next Generation (NextG) wireless networks. However, the reliability of the solutions tested on emulation platforms heavily depends on the precision of the emulation process, model design, and parameter settings. To address, obviate or minimize the impact of errors of emulation models, in this work we apply the concept of Digital Twin (DT) to large-scale wireless systems. Specifically, we demonstrate the use of Colosseum, the world's largest wireless network emulator with hardware-in-the-loop, as a DT for NextG experimental wireless research at scale. As proof of concept, we leverage the Channel emulation scenario generator and Sounder Toolchain (CaST) to create the DT of a publicly-available over-the-air indoor testbed for sub-6 GHz research, namely, Arena. Then, we validate the Colosseum DT through experimental campaigns on emulated wireless environments, including scenarios concerning cellular networks and jamming of Wi-Fi nodes, on both the real and digital systems. Our experiments show that the DT is able to provide a faithful representation of the real-world setup, obtaining an average accuracy of up to 92.5% in throughput and 80% in Signal to Interference plus Noise Ratio (SINR).

CaST: A Toolchain for Creating and Characterizing Realistic Wireless Network Emulation Scenarios

Aug 08, 2022

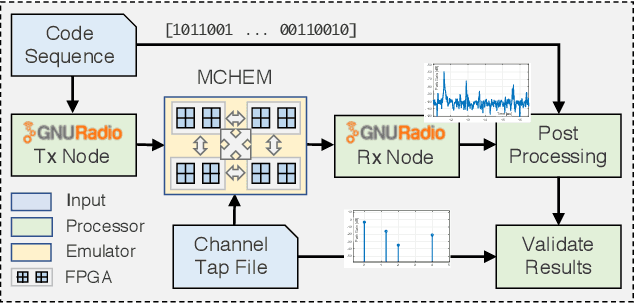

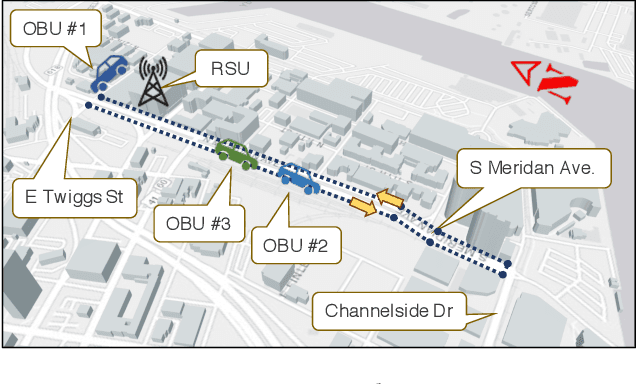

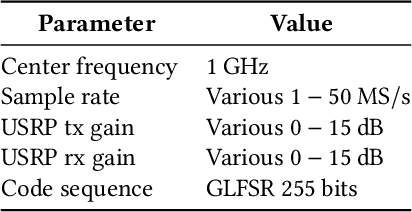

Abstract:Large-scale wireless testbeds have been extensively used by researchers in the past years. Among others, high-fidelity FPGA-based emulation platforms have unique capabilities in faithfully mimicking the conditions of real-world wireless environments in real-time, at scale, and with full repeatability. However, the reliability of the solutions developed in emulated platforms is heavily dependent on the emulation precision. CaST brings to the wireless network emulator landscape what it has been missing so far: an open, virtualized and software-based channel generator and sounder toolchain with unmatched precision in creating and characterizing quasi-real-world wireless network scenarios. CaST consists of (i) a framework to create mobile wireless scenarios from ray-tracing models for FPGA-based emulation platforms, and (ii) a containerized Software Defined Radio-based channel sounder to precisely characterize the emulated channels. We design, deploy and validate multi-path mobile scenarios on the world's largest wireless network emulator, Colosseum, and further demonstrate that CaST achieves up to 20 ns accuracy in sounding the Channel Impulse Response tap delays, and 0.5 dB accuracy in measuring the tap gains.

Colosseum: Large-Scale Wireless Experimentation Through Hardware-in-the-Loop Network Emulation

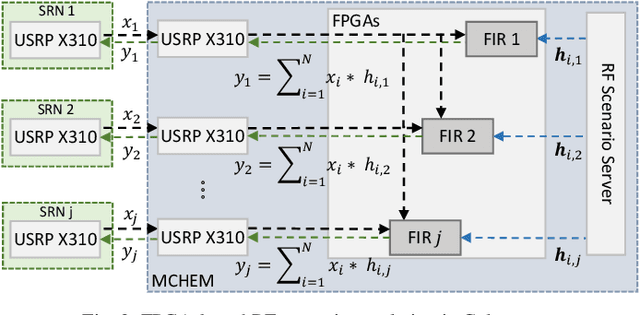

Oct 20, 2021

Abstract:Colosseum is an open-access and publicly-available large-scale wireless testbed for experimental research via virtualized and softwarized waveforms and protocol stacks on a fully programmable, "white-box" platform. Through 256 state-of-the-art Software-defined Radios and a Massive Channel Emulator core, Colosseum can model virtually any scenario, enabling the design, development and testing of solutions at scale in a variety of deployments and channel conditions. These Colosseum radio-frequency scenarios are reproduced through high-fidelity FPGA-based emulation with finite-impulse response filters. Filters model the taps of desired wireless channels and apply them to the signals generated by the radio nodes, faithfully mimicking the conditions of real-world wireless environments. In this paper we describe the architecture of Colosseum and its experimentation and emulation capabilities. We then demonstrate the effectiveness of Colosseum for experimental research at scale through exemplary use cases including prevailing wireless technologies (e.g., cellular and Wi-Fi) in spectrum sharing and unmanned aerial vehicle scenarios. A roadmap for Colosseum future updates concludes the paper.

Internet of Robotic Things: Current Technologies, Applications, Challenges and Future Directions

Jan 15, 2021

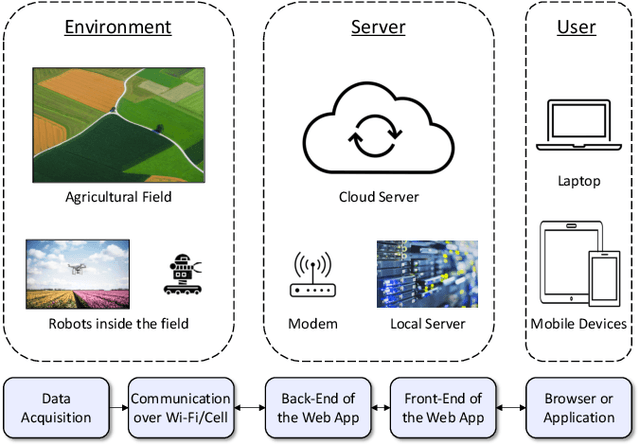

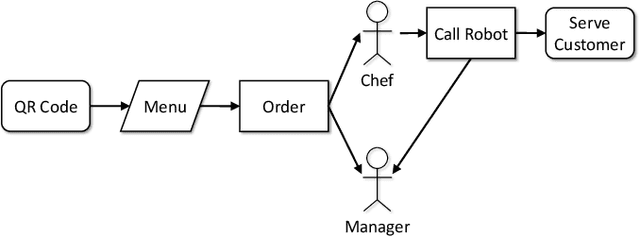

Abstract:Nowadays, the Internet of Things (IoT) concept is gaining more and more notoriety, bringing the number of connected devices to the order of billions of units. Its smart technology is influencing the research and development of advanced solutions in many areas. This paper focuses on the merger between the IoT and robotics, named the Internet of Robotic Things (IoRT). Allowing robotic systems to communicate over the internet at a minimal cost is an important technological opportunity. Robots can use the cloud to improve their overall performance and to offload demanding tasks. Since communicating with the cloud results in latency, data loss, and energy loss, finding efficient techniques is a concern that can be addressed with current machine learning methodologies. Moreover, the use of robotics raises ethical and regulatory questions that should be answered for a proper coexistence between humans and robots. This paper aims at providing a better understanding of the new concept of IoRT with its benefits and limitations, as well as guidelines and directions for future research and studies.
