Michele Polese

SIA: Symbolic Interpretability for Anticipatory Deep Reinforcement Learning in Network Control

Jan 29, 2026

Abstract:Deep reinforcement learning (DRL) promises adaptive control for future mobile networks but conventional agents remain reactive: they act on past and current measurements and cannot leverage short-term forecasts of exogenous KPIs such as bandwidth. Augmenting agents with predictions can overcome this temporal myopia, yet uptake in networking is scarce because forecast-aware agents act as closed-boxes; operators cannot tell whether predictions guide decisions or justify the added complexity. We propose SIA, the first interpreter that exposes in real time how forecast-augmented DRL agents operate. SIA fuses Symbolic AI abstractions with per-KPI Knowledge Graphs to produce explanations, and includes a new Influence Score metric. SIA achieves sub-millisecond speed, over 200x faster than existing XAI methods. We evaluate SIA on three diverse networking use cases, uncovering hidden issues, including temporal misalignment in forecast integration and reward-design biases that trigger counter-productive policies. These insights enable targeted fixes: a redesigned agent achieves a 9% higher average bitrate in video streaming, and SIA's online Action-Refinement module improves RAN-slicing reward by 25% without retraining. By making anticipatory DRL transparent and tunable, SIA lowers the barrier to proactive control in next-generation mobile networks.

AgentRAN: An Agentic AI Architecture for Autonomous Control of Open 6G Networks

Aug 25, 2025

Abstract:The Open RAN movement has catalyzed a transformation toward programmable, interoperable cellular infrastructures. Yet, today's deployments still rely heavily on static control and manual operations. To move beyond this limitation, we introduce AgentRAN, an AI-native, Open RAN-aligned agentic framework that generates and orchestrates a fabric of distributed AI agents based on Natural Language (NL) intents. Unlike traditional approaches that require explicit programming, AgentRAN's LLM-powered agents interpret natural language intents, negotiate strategies through structured conversations, and orchestrate control loops across the network. AgentRAN instantiates a self-organizing hierarchy of agents that decompose complex intents across time scales (from sub-millisecond to minutes), spatial domains (cell to network-wide), and protocol layers (PHY/MAC to RRC). A central innovation is the AI-RAN Factory, an automated synthesis pipeline that observes agent interactions and continuously generates new agents embedding improved control algorithms, effectively transforming the network from a static collection of functions into an adaptive system capable of evolving its own intelligence. We demonstrate AgentRAN through live experiments on 5G testbeds where competing user demands are dynamically balanced through cascading intents. By replacing rigid APIs with NL coordination, AgentRAN fundamentally redefines how future 6G networks autonomously interpret, adapt, and optimize their behavior to meet operator goals.

Enabling Site-Specific Cellular Network Simulation Through Ray-Tracing-Driven ns-3

Aug 06, 2025

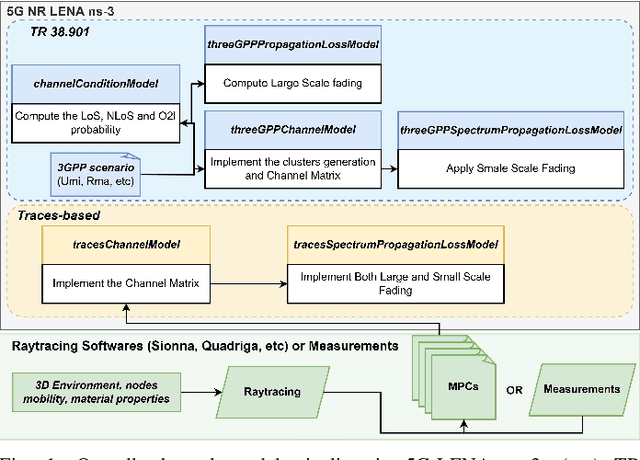

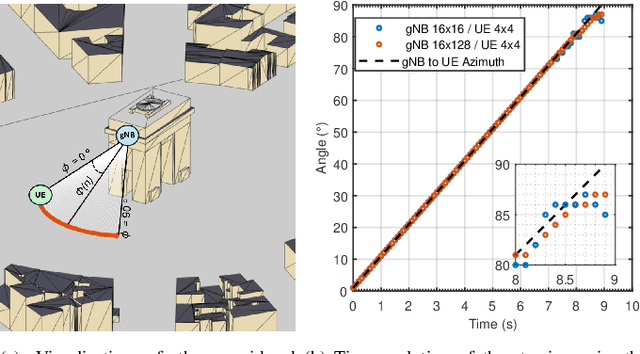

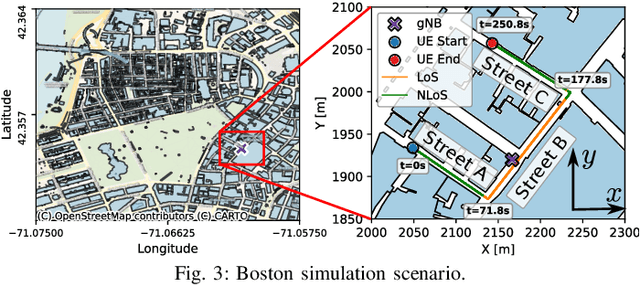

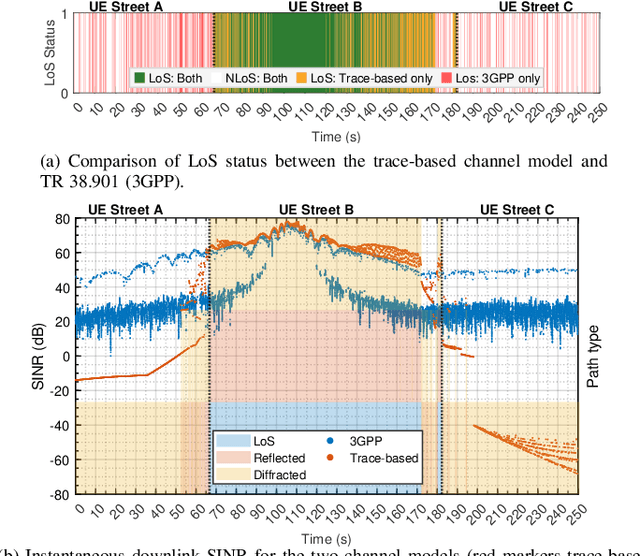

Abstract:Evaluating cellular systems, from 5G New Radio (NR) and 5G-Advanced to 6G, is challenging because the performance emerges from the tight coupling of propagation, beam management, scheduling, and higher-layer interactions. System-level simulation is therefore indispensable, yet the vast majority of studies rely on the statistical 3GPP channel models. These are well suited to capture average behavior across many statistical realizations, but cannot reproduce site-specific phenomena such as corner diffraction, street-canyon blockage, or deterministic line-of-sight conditions and angle-of-departure/arrival relationships that drive directional links. This paper extends 5G-LENA, an NR module for the system-level Network Simulator 3 (ns-3), with a trace-based channel model that processes the Multipath Components (MPCs) obtained from external ray-tracers (e.g., Sionna Ray Tracer (RT)) or measurement campaigns. Our module constructs frequency-domain channel matrices and feeds them to the existing Physical (PHY)/Medium Access Control (MAC) stack without any further modifications. The result is a geometry-based channel model that remains fully compatible with the standard 3GPP implementation in 5G-LENA, while delivering site-specific geometric fidelity. This new module provides a key building block toward Digital Twin (DT) capabilities by offering realistic site-specific channel modeling, unlocking studies that require site awareness, including beam management, blockage mitigation, and environment-aware sensing. We demonstrate its capabilities for precise beam-steering validation and end-to-end metric analysis. In both cases, the trace-driven engine exposes performance inflections that the statistical model does not exhibit, confirming its value for high-fidelity system-level cellular networks research and as a step toward DT applications.

Beyond Connectivity: An Open Architecture for AI-RAN Convergence in 6G

Jul 09, 2025

Abstract:The proliferation of data-intensive Artificial Intelligence (AI) applications at the network edge demands a fundamental shift in RAN design, from merely consuming AI for network optimization, to actively enabling distributed AI workloads. This paradigm shift presents a significant opportunity for network operators to monetize AI at the edge while leveraging existing infrastructure investments. To realize this vision, this article presents a novel converged O-RAN and AI-RAN architecture that unifies orchestration and management of both telecommunications and AI workloads on shared infrastructure. The proposed architecture extends the Open RAN principles of modularity, disaggregation, and cloud-nativeness to support heterogeneous AI deployments. We introduce two key architectural innovations: (i) the AI-RAN Orchestrator, which extends the O-RAN Service Management and Orchestration (SMO) to enable integrated resource allocation across RAN and AI workloads; and (ii) AI-RAN sites that provide distributed edge AI platforms with real-time processing capabilities. The proposed system supports flexible deployment options, allowing AI workloads to be orchestrated with specific timing requirements (real-time or batch processing) and geographic targeting. The proposed architecture addresses the orchestration requirements for managing heterogeneous workloads at different time scales while maintaining open, standardized interfaces and multi-vendor interoperability.

Agentic Semantic Control for Autonomous Wireless Space Networks: Extending Space-O-RAN with MCP-Driven Distributed Intelligence

Jun 12, 2025

Abstract:Lunar surface operations impose stringent requirements on wireless communication systems, including autonomy, robustness to disruption, and the ability to adapt to environmental and mission-driven context. While Space-O-RAN provides a distributed orchestration model aligned with 3GPP standards, its decision logic is limited to static policies and lacks semantic integration. We propose a novel extension incorporating a semantic agentic layer enabled by the Model Context Protocol (MCP) and Agent-to-Agent (A2A) communication protocols, allowing context-aware decision making across real-time, near-real-time, and non-real-time control layers. Distributed cognitive agents deployed in rovers, landers, and lunar base stations implement wireless-aware coordination strategies, including delay-adaptive reasoning and bandwidth-aware semantic compression, while interacting with multiple MCP servers to reason over telemetry, locomotion planning, and mission constraints.

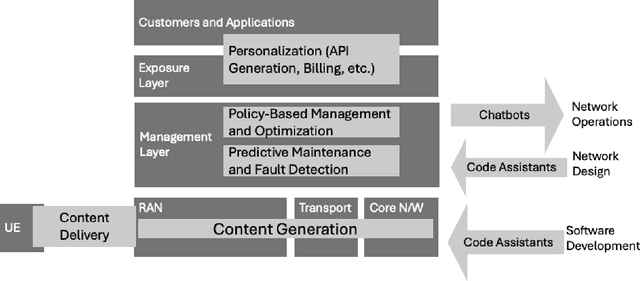

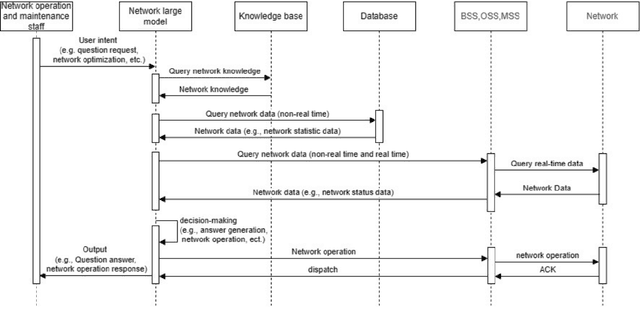

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

Abstract:This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.

O-RIS-ing: Evaluating RIS-Assisted NextG Open RAN

Feb 26, 2025

Abstract:Reconfigurable Intelligent Surfaces (RISs) pose as a transformative technology to revolutionize the cellular architecture of Next Generation (NextG) Radio Access Networks (RANs). Previous studies have demonstrated the capabilities of RISs in optimizing wireless propagation, achieving high spectral efficiency, and improving resource utilization. At the same time, the transition to softwarized, disaggregated, and virtualized architectures, such as those being standardized by the O-RAN ALLIANCE, enables the vision of a reconfigurable Open RAN. In this work, we aim to integrate these technologies by studying how different resource allocation policies enhance the performance of RIS-assisted Open RANs. We perform a comparative analysis among various network configurations and show how proper network optimization can enhance the performance across the Enhanced Mobile Broadband (eMBB) and Ultra Reliable and Low Latency Communications (URLLC) network slices, achieving up to ~34% throughput improvement. Furthermore, leveraging the capabilities of OpenRAN Gym, we deploy an xApp on Colosseum, the world's largest wireless system emulator with hardware-in-the-loop, to control the Base Station (BS)'s scheduling policy. Experimental results demonstrate that RIS-assisted topologies achieve high resource efficiency and low latency, regardless of the BS's scheduling policy.

Space-O-RAN: Enabling Intelligent, Open, and Interoperable Non Terrestrial Networks in 6G

Feb 21, 2025

Abstract:Non-terrestrial networks (NTNs) are essential for ubiquitous connectivity, providing coverage in remote and underserved areas. However, since NTNs are currently operated independently, they face challenges such as isolation, limited scalability, and high operational costs. Integrating satellite constellations with terrestrial networks offers a way to address these limitations while enabling adaptive and cost-efficient connectivity through the application of Artificial Intelligence (AI) models. This paper introduces Space-O-RAN, a framework that extends Open Radio Access Network (RAN) principles to NTNs. It employs hierarchical closed-loop control with distributed Space RAN Intelligent Controllers (Space-RICs) to dynamically manage and optimize operations across both domains. To enable adaptive resource allocation and network orchestration, the proposed architecture integrates real-time satellite optimization and control with AI-driven management and digital twin (DT) modeling. It incorporates distributed Space Applications (sApps) and dApps to ensure robust performance in highly dynamic orbital environments. A core feature is dynamic link-interface mapping, which allows network functions to adapt to specific application requirements and changing link conditions using all physical links on the satellite. Simulation results evaluate its feasibility by analyzing latency constraints across different NTN link types, demonstrating that intra-cluster coordination operates within viable signaling delay bounds, while offloading non-real-time tasks to ground infrastructure enhances scalability toward sixth-generation (6G) networks.

TIMESAFE: Timing Interruption Monitoring and Security Assessment for Fronthaul Environments

Dec 17, 2024

Abstract:5G and beyond cellular systems embrace the disaggregation of Radio Access Network (RAN) components, exemplified by the evolution of the fronthaul (FH) connection between cellular baseband and radio unit equipment. Crucially, synchronization over the FH is pivotal for reliable 5G services. In recent years, there has been a push to move these links to an Ethernet-based packet network topology, leveraging existing standards and ongoing research for Time-Sensitive Networking (TSN). However, TSN standards, such as Precision Time Protocol (PTP), focus on performance with little to no concern for security. This increases the exposure of the open FH to security risks. Attacks targeting synchronization mechanisms pose significant threats, potentially disrupting 5G networks and impairing connectivity. In this paper, we demonstrate the impact of successful spoofing and replay attacks against PTP synchronization. We show how a spoofing attack is able to cause a production-ready O-RAN and 5G-compliant private cellular base station to catastrophically fail within 2 seconds of the attack, necessitating manual intervention to restore full network operations. To counter this, we design a Machine Learning (ML)-based monitoring solution capable of detecting various malicious attacks with over 97.5% accuracy.

Driving Innovation in 6G Wireless Technologies: The OpenAirInterface Approach

Dec 17, 2024

Abstract:The development of 6G wireless technologies is rapidly advancing, with the 3rd Generation Partnership Project (3GPP) entering the pre-standardization phase and aiming to deliver the first specifications by 2028. This paper explores the OpenAirInterface (OAI) project, an open-source initiative that plays a crucial role in the evolution of 5G and the future 6G networks. OAI provides a comprehensive implementation of 3GPP and O-RAN compliant networks, including Radio Access Network (RAN), Core Network (CN), and software-defined User Equipment (UE) components. The paper details the history and evolution of OAI, its licensing model, and the various projects under its umbrella, such as RAN, the CN, as well as the Operations, Administration and Maintenance (OAM) projects. It also highlights the development methodology, Continuous Integration/Continuous Delivery (CI/CD) processes, and end-to-end systems powered by OAI. Furthermore, the paper discusses the potential of OAI for 6G research, focusing on spectrum, reflective intelligent surfaces, and Artificial Intelligence (AI)/Machine Learning (ML) integration. The open-source approach of OAI is emphasized as essential for tackling the challenges of 6G, fostering community collaboration, and driving innovation in next-generation wireless technologies.
