Rittwik Jana

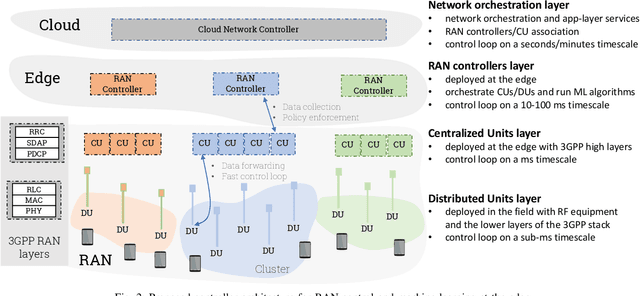

Empowering the 6G Cellular Architecture with Open RAN

Dec 05, 2023

Abstract: Innovation and standardization in 5G have brought advancements to every facet of the cellular architecture, ranging from new frequency bands and signaling technologies for the radio access network (RAN) to a core network underpinned by micro-services and network function virtualization (NFV). However, as with any emerging technology, the pace of real-world deployments does not instantly match the pace of innovation. To address this discrepancy, one of the key areas under continuous development is the RAN, with the aim of making it more open, adaptive, functional, and easy to manage. In this paper, we highlight the transformative potential of moving from conventional systems to novel cellular architectures built on the progressive principles of Open RAN. This promises to make 6G networks more agile, cost-effective, energy-efficient, and resilient, and opens up a plethora of novel use cases, ranging from ubiquitous support for autonomous devices to cost-effective expansion into previously underserved regions. The principles of Open RAN encompass: (i) a disaggregated architecture with modular and standardized interfaces; (ii) cloudification, programmability, and orchestration; and (iii) AI-enabled, data-centric closed-loop control and automation. We first discuss the transformative role Open RAN principles have played in the 5G era. Then, we adopt a system-level approach and describe how these principles will support 6G RAN and architecture innovation. We qualitatively discuss the potential performance gains that Open RAN principles yield for specific 6G use cases. For each principle, we outline the steps that the research, development, and standardization communities ought to take to make Open RAN principles central to next-generation cellular network designs.

* This paper is part of the IEEE JSAC Special Issue on Open RAN. Please cite as: M. Polese, M. Dohler, F. Dressler, M. Erol-Kantarci, R. Jana, R. Knopp, T. Melodia, "Empowering the 6G Cellular Architecture with Open RAN," in IEEE Journal on Selected Areas in Communications, doi: 10.1109/JSAC.2023.3334610

Deep Learning based Fast and Accurate Beamforming for Millimeter-Wave Systems

Sep 19, 2023

Abstract: The widespread proliferation of mmW devices has led to a surge of interest in antenna arrays, owing to their ability to steer beams in desired directions to increase signal power and/or decrease interference levels. To enable beamforming, array coefficients are typically stored in look-up tables (LUTs) for subsequent referencing. While LUTs enable fast sweep times, their limited memory size restricts the number of beams the array can produce. Consequently, a receiver is likely to be offset from the main beam, which decreases received power and results in sub-optimal performance. In this letter, we present BeamShaper, a deep neural network (DNN) framework that enables fast and accurate beamsteering in any desired 3-D direction. Unlike traditional finite-memory LUTs, which support a fixed set of beams, BeamShaper uses a trained NN model to generate the array coefficients for arbitrary directions in *real time*. Our simulations show that BeamShaper outperforms contemporary LUT-based solutions in terms of cosine similarity and central angle, at time scales only slightly longer than those of LUT-based solutions. Additionally, we show that our DNN-based approach has the added advantage of being more resilient to the quantization noise introduced by digital phase shifters.
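
The LUT trade-off described above can be made concrete with a small sketch. The Python below is not BeamShaper (it contains no neural network); it computes conjugate-phase steering coefficients for an arbitrary direction, looks up the nearest stored beam in a coarse LUT, and scores the mismatch with the two metrics the abstract names, cosine similarity and central angle. It also rounds phases to a b-bit digital phase shifter to illustrate quantization noise. The 8×8 uniform planar array with half-wavelength spacing, the 15°/45° LUT grid, the target direction, and all function names are assumptions for illustration only.

```python
import cmath
import math


def steering_coeffs(theta_deg, phi_deg, nx=8, ny=8):
    """Conjugate-phase weights for an assumed nx*ny uniform planar array with
    half-wavelength spacing, steered to elevation theta and azimuth phi."""
    th, ph = math.radians(theta_deg), math.radians(phi_deg)
    u = math.sin(th) * math.cos(ph)
    v = math.sin(th) * math.sin(ph)
    scale = 1 / math.sqrt(nx * ny)  # unit-norm weight vector
    return [scale * cmath.exp(-1j * math.pi * (m * u + n * v))
            for m in range(nx) for n in range(ny)]


def quantize_phases(coeffs, bits):
    """Round each element's phase to the grid of a b-bit digital phase shifter."""
    step = 2 * math.pi / (2 ** bits)
    return [abs(c) * cmath.exp(1j * step * round(cmath.phase(c) / step)) for c in coeffs]


def cosine_similarity(a, b):
    """|<a, b>| / (||a|| ||b||) for complex coefficient vectors."""
    inner = sum(x * y.conjugate() for x, y in zip(a, b))
    na = math.sqrt(sum(abs(x) ** 2 for x in a))
    nb = math.sqrt(sum(abs(y) ** 2 for y in b))
    return abs(inner) / (na * nb)


def central_angle(d1, d2):
    """Great-circle angle in degrees between two (theta, phi) directions."""
    def unit(theta_deg, phi_deg):
        th, ph = math.radians(theta_deg), math.radians(phi_deg)
        return (math.sin(th) * math.cos(ph), math.sin(th) * math.sin(ph), math.cos(th))
    a, b = unit(*d1), unit(*d2)
    dot = sum(x * y for x, y in zip(a, b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


# Hypothetical coarse LUT: 15-degree elevation steps, 45-degree azimuth steps.
LUT = {(t, p): steering_coeffs(t, p) for t in range(0, 61, 15) for p in range(0, 360, 45)}


def lut_lookup(target):
    """Return the stored beam whose direction is closest to the target."""
    key = min(LUT, key=lambda d: central_angle(d, target))
    return key, LUT[key]


target = (22.0, 100.0)  # hypothetical receiver direction (theta, phi) in degrees
exact = steering_coeffs(*target)
key, lut_coeffs = lut_lookup(target)
print("nearest LUT beam:", key, "offset (deg):", round(central_angle(key, target), 2))
print("LUT cosine similarity:", round(cosine_similarity(exact, lut_coeffs), 3))
print("3-bit quantized similarity:", round(cosine_similarity(exact, quantize_phases(exact, 3)), 3))
```

A learned model in the spirit of BeamShaper would take the place of `lut_lookup`, mapping any (theta, phi) directly to coefficients instead of snapping to a fixed grid; how the paper builds and trains that model is not described here.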

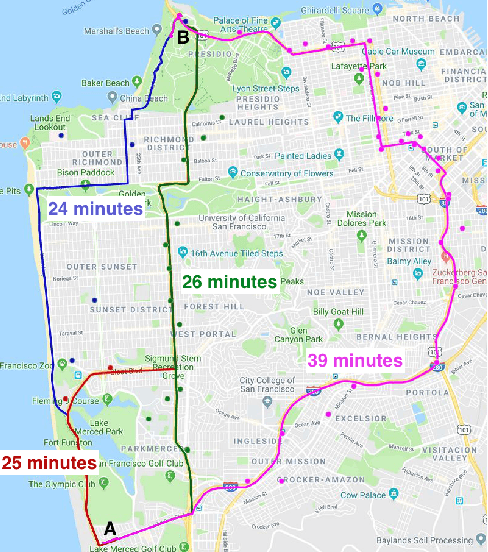

FGLP: A Federated Fine-Grained Location Prediction System for Mobile Users

Jun 13, 2021

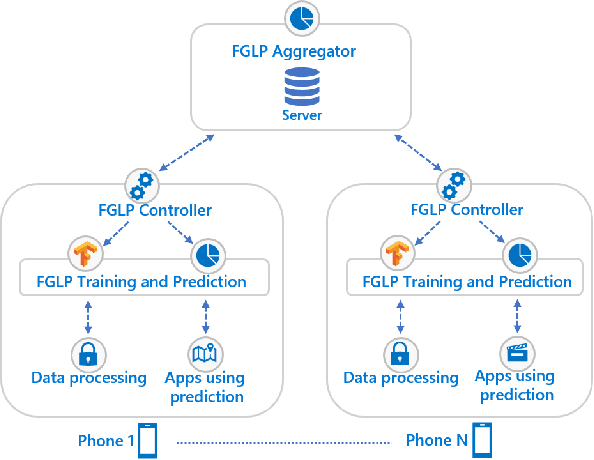

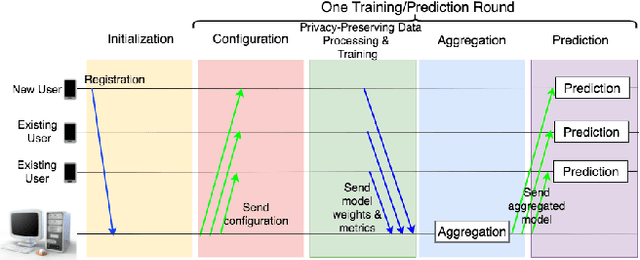

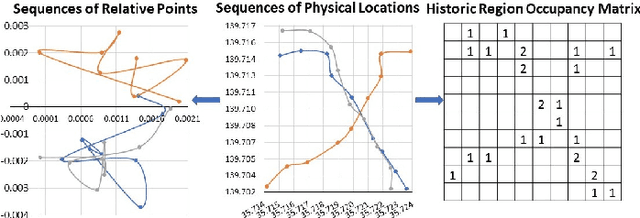

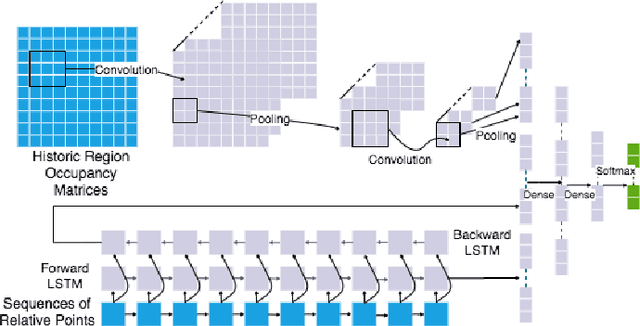

Abstract: Fine-grained location prediction on smartphones can be used to improve app and system performance. Application scenarios include adapting video quality to the 5G network quality at predicted user locations, and augmented reality apps that speed up content rendering based on predicted user locations. Such use cases require prediction errors in the same range as GPS error, and no existing work on location prediction can achieve this level of accuracy. We present a system for fine-grained location prediction (FGLP) of mobile users, based on GPS traces collected on the phones. FGLP has two components: a federated learning framework and a prediction model. The framework runs on the users' phones and on a server that coordinates learning across all users in the system. FGLP represents user location data as relative points in an abstract 2D space, which enables learning across different physical spaces. The model merges a Bidirectional Long Short-Term Memory (BiLSTM) network with Convolutional Neural Networks (CNNs): the BiLSTM learns the speed and direction of mobile users, and the CNN learns information such as user movement preferences. FGLP uses federated learning to protect user privacy and reduce bandwidth consumption. Our experimental results, using a dataset with over 600,000 users, demonstrate that FGLP outperforms baseline models in terms of prediction accuracy. We also demonstrate that FGLP works well in conjunction with transfer learning, which enables model reusability. Finally, benchmark results on several types of Android phones demonstrate FGLP's feasibility in real life.
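
The abstract does not specify how FGLP maps GPS fixes into its abstract 2D space. One plausible reading, sketched below under that assumption, is to express each trace as metre offsets from its own first fix, so that traces recorded in different places share a common coordinate frame; per-step speed and heading, the quantities the abstract says the BiLSTM learns, then follow from consecutive offsets. The equirectangular approximation, the 1-second sampling interval, the sample coordinates, and the function names are all hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def to_relative_points(trace):
    """Map (lat, lon) fixes to (east, north) metre offsets from the first fix.

    Uses a local equirectangular approximation, adequate for short traces.
    Anchoring at the first fix is an assumption, not FGLP's documented method.
    """
    lat0, lon0 = trace[0]
    cos_lat0 = math.cos(math.radians(lat0))
    return [
        (math.radians(lon - lon0) * cos_lat0 * EARTH_RADIUS_M,
         math.radians(lat - lat0) * EARTH_RADIUS_M)
        for lat, lon in trace
    ]


def step_features(points, dt_s=1.0):
    """Per-step speed (m/s) and heading (degrees clockwise from north)."""
    features = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx, dy = x1 - x0, y1 - y0
        speed = math.hypot(dx, dy) / dt_s
        heading = math.degrees(math.atan2(dx, dy)) % 360.0
        features.append((speed, heading))
    return features


# Hypothetical trace: one step north, then one step east.
trace = [(40.7580, -73.9855), (40.7581, -73.9855), (40.7581, -73.9853)]
points = to_relative_points(trace)
features = step_features(points)
print(points)
print(features)
```

Because only offsets survive, two users walking the same shape of route in different cities produce the same relative points, which is the property the abstract credits with enabling learning across physical spaces.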

Streaming From the Air: Enabling High Data-rate 5G Cellular Links for Drone Streaming Applications

Feb 02, 2021

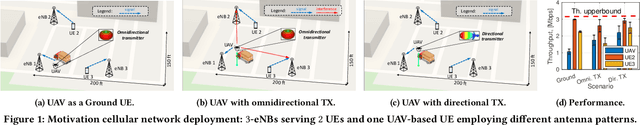

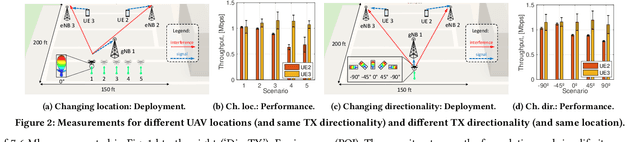

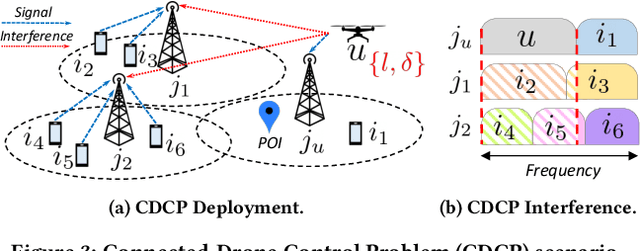

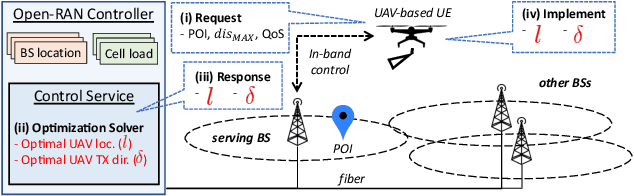

Abstract: Enabling high data-rate uplink cellular connectivity for drones is a challenging problem, since a flying drone is more likely to have line-of-sight propagation to base stations that terrestrial UEs normally do not have line of sight to. This may cause uplink inter-cell interference and degrade uplink performance for neighboring ground UEs when drones transmit at high data rates (e.g., for video streaming). We address this problem from a cellular operator's standpoint to support drone-sourced video streaming of a point of interest. We propose a low-complexity, closed-loop control system for Open-RAN architectures that jointly optimizes the drone's location in space and its transmission directionality to support video streaming while minimizing its uplink interference impact on the network. We prototype and experimentally evaluate the proposed control system on an outdoor multi-cell RAN testbed. Furthermore, we perform a large-scale simulation assessment of the proposed system on the actual cell deployment topologies and cell load profiles of a major US cellular carrier. The proposed Open-RAN-based control achieves an average 19% network capacity gain over traditional base station (BS)-constrained control solutions and satisfies the application data-rate requirements of the drone (e.g., to stream an HD video).

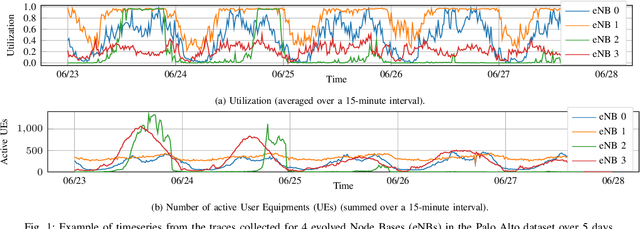

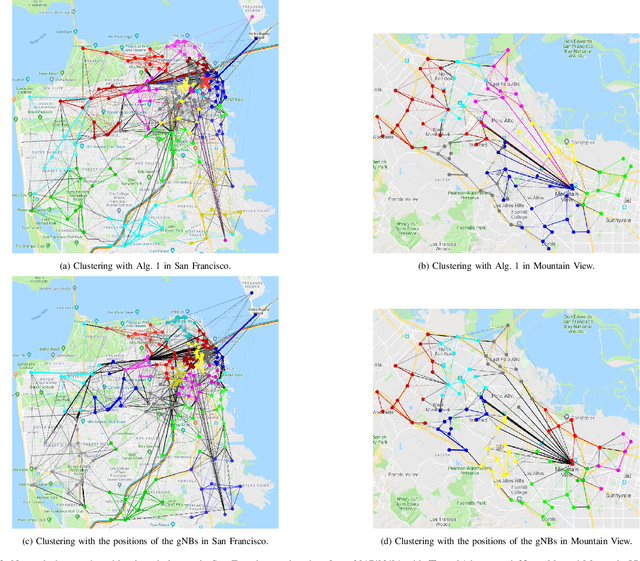

Machine Learning at the Edge: A Data-Driven Architecture with Applications to 5G Cellular Networks

Aug 23, 2018

Abstract: The fifth generation of cellular networks (5G) will rely on edge cloud deployments to satisfy the ultra-low latency demands of future applications. In this paper, we argue that an edge-based deployment can also enable advanced Machine Learning (ML) applications in cellular networks, thanks to the balance it strikes between a completely distributed and a centralized approach. First, we present an edge-controller-based architecture for cellular networks. Second, using real data from hundreds of base stations of a major U.S. national operator, we provide insights on how to dynamically cluster the base stations under the domain of each controller. Third, we describe how these controllers can run ML algorithms to predict the number of users, and present a use case in which these predictions are used by a higher-layer application to route vehicular traffic according to network Key Performance Indicators (KPIs). We show that prediction accuracy improves when the ML algorithms exploit the controllers' view, compared with relying only on the local data of each single base station.
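
The abstract does not say which features or algorithm the paper uses to cluster base stations under controllers. Purely as an illustration, the sketch below groups hypothetical base-station coordinates into k controller domains with plain k-means, seeded deterministically by farthest-point initialization; re-running it as inputs change is one simple way to make the assignment dynamic. The coordinates, the value of k, the choice of k-means, and the function names are all assumptions.

```python
import math


def farthest_point_init(points, k):
    """Deterministic seeding: start at points[0], then add the farthest point."""
    centroids = [points[0]]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(nxt)
    return centroids


def assign_controllers(bs_coords, k, iters=20):
    """Cluster base stations into k controller domains with k-means.

    Returns a list whose i-th entry is the controller index of base station i.
    """
    centroids = farthest_point_init(bs_coords, k)
    labels = [0] * len(bs_coords)
    for _ in range(iters):
        # Assignment step: each base station joins its nearest controller.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                  for p in bs_coords]
        # Update step: move each controller centroid to its members' mean.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(bs_coords, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # converged: centroids stopped moving
        centroids = new_centroids
    return labels


# Hypothetical base-station positions (km) forming two geographic groups.
stations = [(0, 0), (1, 0), (0, 1), (10, 10), (11, 10), (10, 11)]
labels = assign_controllers(stations, k=2)
print(labels)  # -> [0, 0, 0, 1, 1, 1]
```

Each resulting domain is the set of base stations whose data a single edge controller would see, which is the aggregated view the abstract contrasts with per-base-station local data.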
