Maja Mataric

Learning to Learn Faster from Human Feedback with Language Model Predictive Control

Feb 18, 2024

Abstract:Large language models (LLMs) have been shown to exhibit a wide range of capabilities, such as writing robot code from language commands -- enabling non-experts to direct robot behaviors, modify them based on feedback, or compose them to perform new tasks. However, these capabilities (driven by in-context learning) are limited to short-term interactions, where users' feedback remains relevant for only as long as it fits within the context size of the LLM, and can be forgotten over longer interactions. In this work, we investigate fine-tuning robot code-writing LLMs to remember their in-context interactions and improve their teachability, i.e., how efficiently they adapt to human inputs (measured by the average number of corrections before the user considers the task successful). Our key observation is that when human-robot interactions are formulated as a partially observable Markov decision process (in which human language inputs are observations, and robot code outputs are actions), then training an LLM to complete previous interactions can be viewed as training a transition dynamics model -- one that can be combined with classic robotics techniques such as model predictive control (MPC) to discover shorter paths to success. This gives rise to Language Model Predictive Control (LMPC), a framework that fine-tunes PaLM 2 to improve its teachability on 78 tasks across 5 robot embodiments -- improving non-expert teaching success rates on unseen tasks by 26.9% while reducing the average number of human corrections from 2.4 to 1.9. Experiments show that LMPC also produces strong meta-learners, improving the success rate of in-context learning of new tasks on unseen robot embodiments and APIs by 31.5%. See videos, code, and demos at: https://robot-teaching.github.io/.
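The "shorter paths to success" idea in the abstract can be illustrated with a toy sketch. This is not the paper's implementation: the `Rollout` type, the `pick_next_action` and `num_corrections` helpers, and the hard-coded example chats are all hypothetical, and in a real system the candidate rollouts would be sampled from the fine-tuned LLM acting as the dynamics model. The sketch assumes each rollout is a predicted chat of (human feedback, robot code) turns, and that every turn after the first counts as one correction.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Rollout:
    """One predicted chat: (observation, action) turns plus an outcome flag."""
    turns: List[Tuple[str, str]]  # (human feedback, robot code), in order
    succeeded: bool               # True if the predicted chat ends in success


def num_corrections(rollout: Rollout) -> int:
    """Assumption: every turn after the initial instruction is a correction."""
    return max(len(rollout.turns) - 1, 0)


def pick_next_action(rollouts: List[Rollout]) -> Optional[str]:
    """MPC-style choice: take the first action of the shortest successful rollout."""
    successful = [r for r in rollouts if r.succeeded and r.turns]
    if not successful:
        return None  # no predicted path reaches success
    best = min(successful, key=lambda r: len(r.turns))
    return best.turns[0][1]


# Hypothetical rollouts standing in for samples from a learned dynamics model.
candidates = [
    Rollout(turns=[("wave hello", "robot.wave()"),
                   ("slower", "robot.wave(speed=0.5)")], succeeded=True),
    Rollout(turns=[("wave hello", "robot.wave(speed=0.5)")], succeeded=True),
    Rollout(turns=[("wave hello", "robot.nod()")], succeeded=False),
]

print(pick_next_action(candidates))
```

Only the first action of the chosen rollout is executed; after the user responds, new rollouts would be sampled and the choice repeated, which is the receding-horizon pattern that gives MPC its name.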

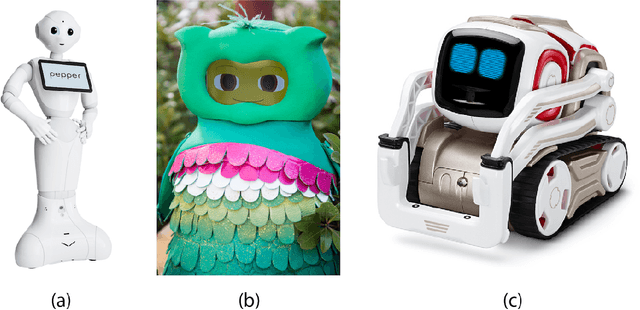

Using Design Metaphors to Understand User Expectations of Socially Interactive Robot Embodiments

Jan 25, 2022

Abstract:The physical design of a robot suggests expectations of that robot's functionality for human users and collaborators. When those expectations align with the true capabilities of the robot, interaction with the robot is enhanced. However, misalignment of those expectations can result in an unsatisfying interaction. This paper uses Mechanical Turk to evaluate user expectation through the use of design metaphors as applied to a wide range of robot embodiments. The first study (N=382) associates crowd-sourced design metaphors to different robot embodiments. The second study (N=803) assesses initial social expectations of robot embodiments. The final study (N=805) addresses the degree of abstraction of the design metaphors and the functional expectations projected on robot embodiments. Together, these results can guide robot designers toward aligning user expectations with true robot capabilities, facilitating positive human-robot interaction.

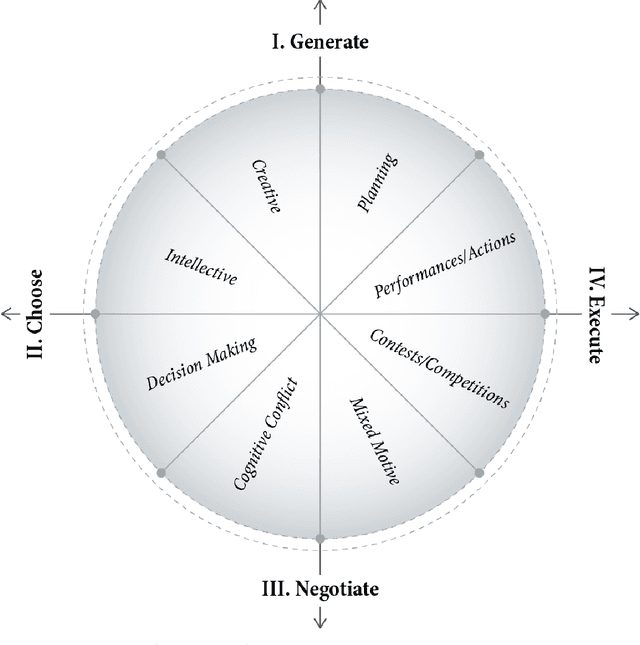

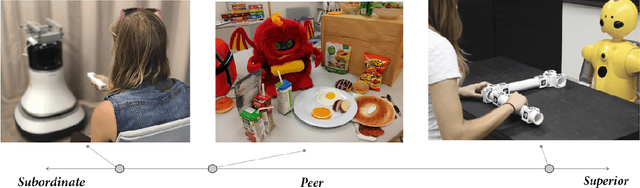

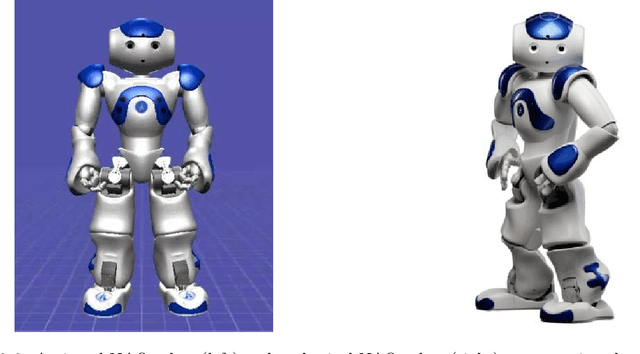

Embodiment in Socially Interactive Robots

Dec 01, 2019

Abstract:Physical embodiment is a required component for robots that are structurally coupled with their real-world environments. However, most socially interactive robots do not need to physically interact with their environments in order to perform their tasks. When and why should embodied robots be used instead of simpler and cheaper virtual agents? This paper reviews the existing work that explores the role of physical embodiment in socially interactive robots. This class consists of robots that are not only capable of engaging in social interaction with humans, but are using primarily their social capabilities to perform their desired functions. Socially interactive robots provide entertainment, information, and/or assistance; this last category is typically encompassed by socially assistive robotics. In all cases, such robots can achieve their primary functions without performing functional physical work. To comprehensively evaluate the existing body of work on embodiment, we first review work from established related fields including psychology, philosophy, and sociology. We then systematically review 65 studies evaluating aspects of embodiment published from 2003 to 2017 in major peer-reviewed robotics publication venues. We examine relevant aspects of the selected studies, focusing on the embodiments compared, tasks evaluated, social roles of robots, and measurements. We introduce three taxonomies for the types of robot embodiment, robot social roles, and human-robot tasks. These taxonomies are used to deconstruct the design and interaction spaces of socially interactive robots and facilitate analysis and discussion of the reviewed studies. We use this newly-defined methodology to critically discuss existing works, revealing topics within embodiment research for social interaction, assistive robotics, and service robotics.

* The official publication is available from now publishers via https://www.nowpublishers.com/article/Details/ROB-056

Predicting Infant Motor Development Status using Day Long Movement Data from Wearable Sensors

Oct 14, 2018

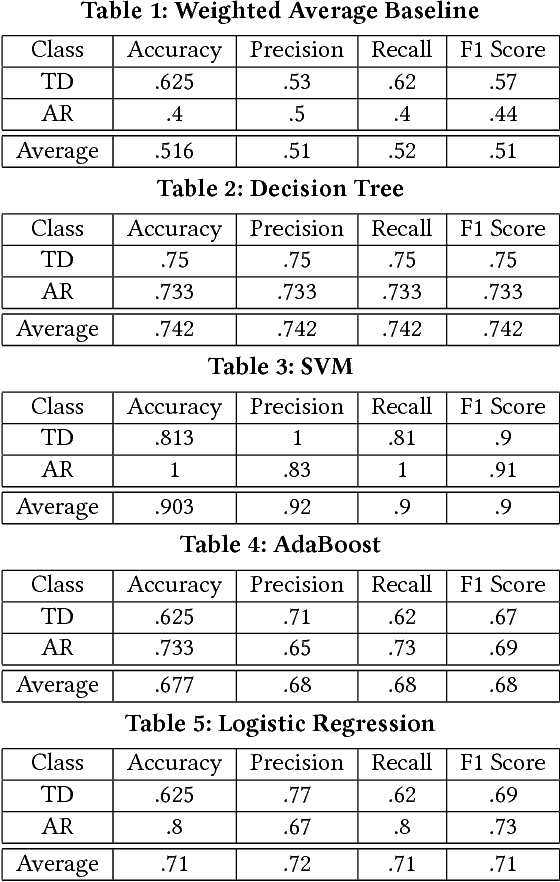

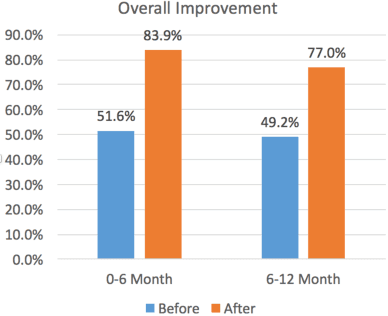

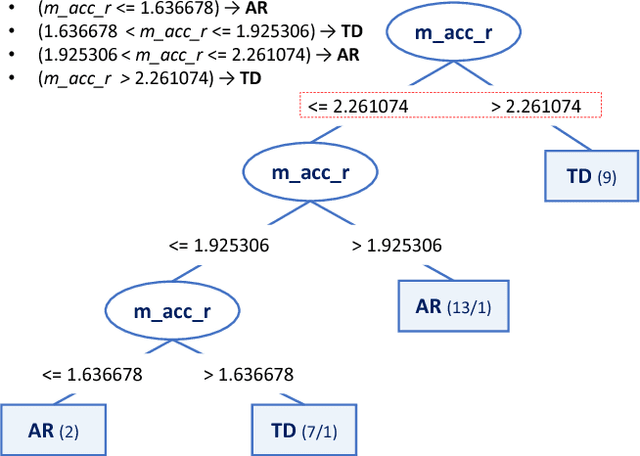

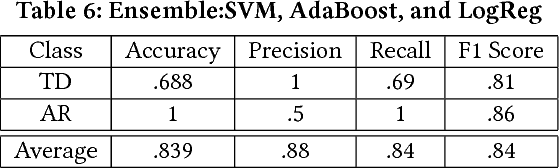

Abstract:Infants with a variety of complications at or before birth are classified as being at risk for developmental delays (AR). As they grow older, they are followed by healthcare providers in an effort to discern whether they are on a typical or impaired developmental trajectory. Often, it is difficult to make an accurate determination early in infancy as infants with typical development (TD) display high variability in their developmental trajectories both in content and timing. Studies have shown that spontaneous movements have the potential to differentiate typical and atypical trajectories early in life using sensors and kinematic analysis systems. In this study, machine learning classification algorithms are used to take inertial movement from wearable sensors placed on an infant for a day and predict if the infant is AR or TD, thus further establishing the connection between early spontaneous movement and developmental trajectory.

Advances in Artificial Intelligence Require Progress Across all of Computer Science

Jul 13, 2017

Abstract:Advances in Artificial Intelligence require progress across all of computer science.

Next Generation Robotics

Jun 29, 2016

Abstract:The National Robotics Initiative (NRI) was launched in 2011 and is about to celebrate its five-year anniversary. In parallel with the NRI, the robotics community, with support from the Computing Community Consortium (CCC), engaged in a series of road-mapping exercises. The first version of the roadmap appeared in September 2009; a second, updated version appeared in 2013. While not directly aligned with the NRI, these road-mapping documents have provided both a useful charting of the robotics research space and a metric by which to measure progress. This report sets forth a perspective on progress in robotics over the past five years and provides a set of recommendations for the future. The NRI has in its formulation a strong emphasis on co-robots, i.e., robots that work directly with people. An obvious question is whether this should continue to be the focus going forward. To assess the main trends, what has happened over the last five years, and what may be promising directions for the future, a small CCC-sponsored study was launched, comprising two workshops: one in Washington, DC (March 5th, 2016) and another in San Francisco, CA (March 11th, 2016). In this report we briefly summarize some of the main discussions and observations from those workshops. We present a variety of background information in Section 2 and outline various issues related to progress over the last five years in Section 3. In Section 4 we outline a number of opportunities for moving forward. Finally, we summarize the main points in Section 5.
