Beth A. Smith

Evaluating Temporal Patterns in Applied Infant Affect Recognition

Sep 07, 2022

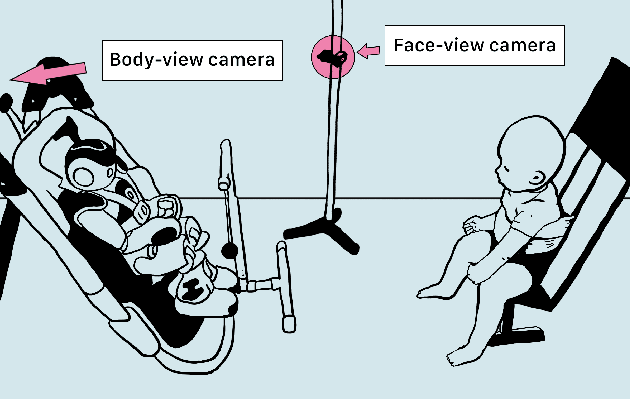

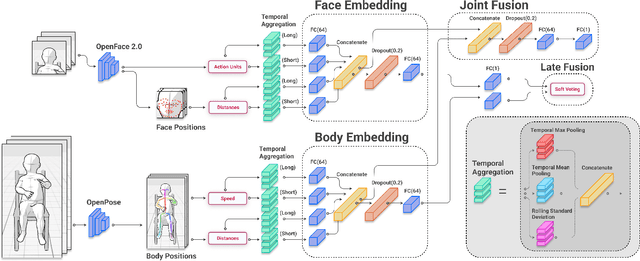

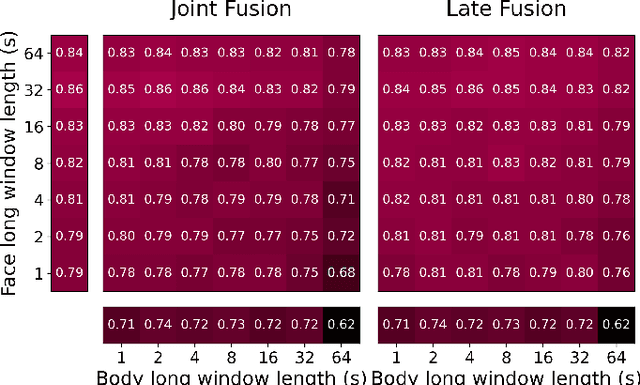

Abstract: Agents must monitor their partners' affective states continuously in order to understand and engage in social interactions. However, methods for evaluating affect recognition do not account for changes in classification performance that may occur during occlusions or transitions between affective states. This paper addresses temporal patterns in affect classification performance in the context of an infant-robot interaction, where infants' affective states contribute to their ability to participate in a therapeutic leg movement activity. To support robustness to facial occlusions in video recordings, we trained infant affect recognition classifiers using both facial and body features. Next, we conducted an in-depth analysis of our best-performing models to evaluate how performance changed over time as the models encountered missing data and changing infant affect. During time windows when features were extracted with high confidence, a unimodal model trained on facial features achieved the same optimal performance as multimodal models trained on both facial and body features. However, multimodal models outperformed unimodal models when evaluated on the entire dataset. Additionally, model performance was weakest when predicting an affective state transition and improved after multiple predictions of the same affective state. These findings emphasize the benefits of incorporating body features in continuous affect recognition for infants. Our work highlights the importance of evaluating variability in model performance both over time and in the presence of missing data when applying affect recognition to social interactions.
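The abstract reports that performance was weakest when the model predicted an affective state transition and improved after repeated predictions of the same state. One way to quantify that pattern is to group per-window accuracy by the prediction's position within a run of identical predictions. The sketch below is illustrative only: it assumes per-window string labels and uses `None` for windows where features could not be extracted. The function name `accuracy_by_run_position` and the pooling parameter `max_position` are hypothetical and not taken from the paper.

```python
from collections import defaultdict
from typing import Dict, Optional, Sequence


def accuracy_by_run_position(
    y_true: Sequence[str],
    y_pred: Sequence[Optional[str]],
    max_position: int = 5,
) -> Dict[int, float]:
    """Hypothetical helper: accuracy grouped by run position.

    Position 1 is the first window of a new run of identical predictions
    (a predicted transition, or the first prediction after the start of the
    recording or a gap). Positions >= max_position are pooled together.
    Windows with no prediction (None, e.g. features missing) are skipped
    and reset the run counter.
    """
    correct: Dict[int, int] = defaultdict(int)
    total: Dict[int, int] = defaultdict(int)
    prev: Optional[str] = None
    run = 0
    for truth, pred in zip(y_true, y_pred):
        if pred is None:
            # Missing data: no prediction, so the current run is broken.
            prev, run = None, 0
            continue
        # Extend the run if the prediction repeats; otherwise start a new one.
        run = run + 1 if pred == prev else 1
        prev = pred
        pos = min(run, max_position)
        total[pos] += 1
        correct[pos] += int(pred == truth)
    return {pos: correct[pos] / total[pos] for pos in sorted(total)}


y_true = ["neutral", "neutral", "neutral", "neutral", "distress", "distress"]
y_pred = ["neutral", "neutral", "distress", "neutral", "distress", "distress"]
print(accuracy_by_run_position(y_true, y_pred))  # {1: 0.75, 2: 1.0}
```

Treating a missing window as a break in the run is one design choice among several; carrying the run across short gaps would be equally defensible depending on how occlusions are handled.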

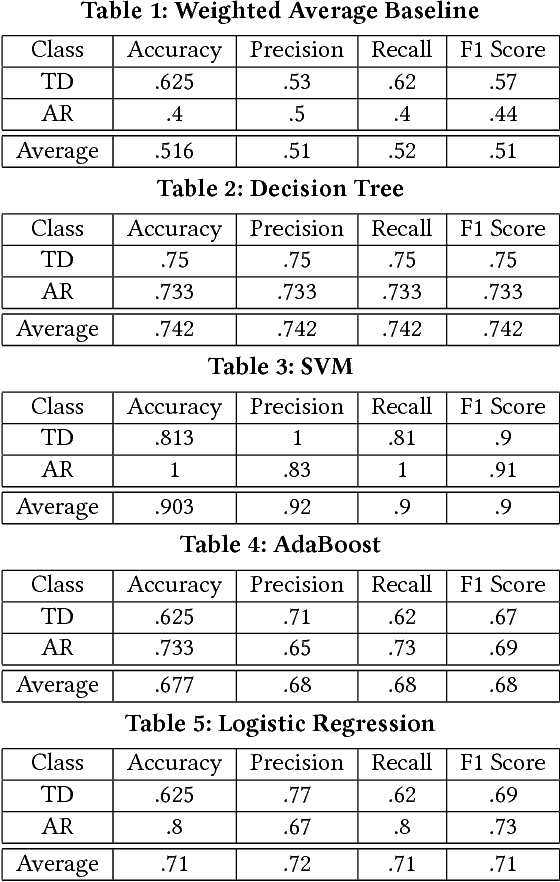

Predicting Infant Motor Development Status using Day Long Movement Data from Wearable Sensors

Oct 14, 2018

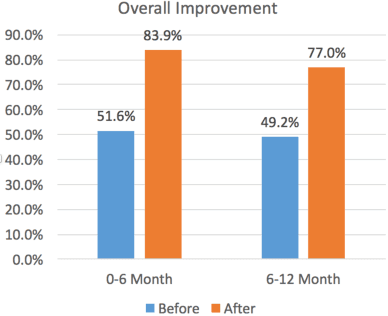

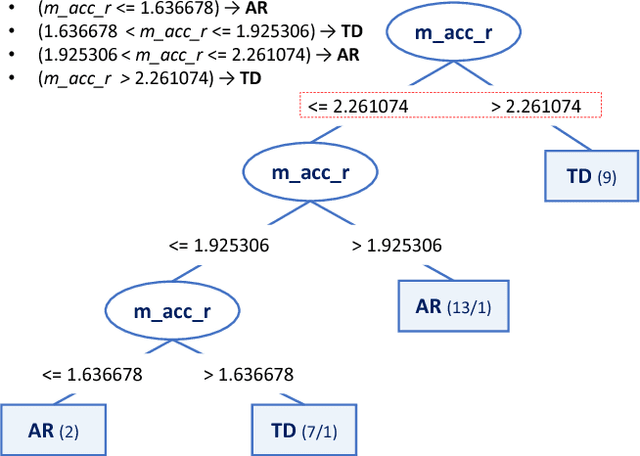

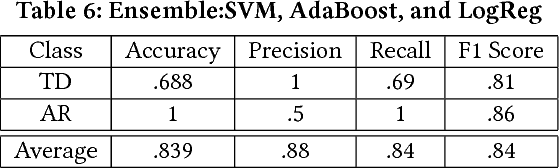

Abstract: Infants with a variety of complications at or before birth are classified as being at risk for developmental delays (AR). As they grow older, they are followed by healthcare providers in an effort to discern whether they are on a typical or impaired developmental trajectory. Often, it is difficult to make an accurate determination early in infancy, as infants with typical development (TD) display high variability in their developmental trajectories, both in content and timing. Studies using sensors and kinematic analysis systems have shown that spontaneous movements have the potential to differentiate typical and atypical trajectories early in life. In this study, machine learning classification algorithms are used to take inertial movement data from wearable sensors worn by an infant for a day and predict whether the infant is AR or TD, thus further establishing the connection between early spontaneous movement and developmental trajectory.
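The abstract does not name the features or classifiers used. As a hypothetical sketch only, a day-long recording could be reduced to a small feature vector (here, the mean and spread of windowed acceleration magnitude from a single three-axis sensor) and assigned to AR or TD with a nearest-centroid rule. The feature set, window length, function names (`movement_features`, `fit_centroids`, `predict`), and classifier are all assumptions chosen for brevity, not the study's method.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple

# One reading from a hypothetical three-axis accelerometer: (ax, ay, az).
Sample = Tuple[float, float, float]


def movement_features(samples: Sequence[Sample], window: int) -> List[float]:
    """Summarize a long recording as [mean, population stdev] of the
    per-window average acceleration magnitude. Illustrative features only;
    requires at least one full window of samples."""
    mags = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in samples]
    # Non-overlapping windows; a trailing partial window is dropped.
    window_means = [
        statistics.fmean(mags[i:i + window])
        for i in range(0, len(mags) - window + 1, window)
    ]
    return [statistics.fmean(window_means), statistics.pstdev(window_means)]


def fit_centroids(
    features: Sequence[List[float]], labels: Sequence[str]
) -> Dict[str, List[float]]:
    """Average the feature vectors of each class (e.g. "AR", "TD")."""
    groups: Dict[str, List[List[float]]] = {}
    for x, y in zip(features, labels):
        groups.setdefault(y, []).append(x)
    return {
        y: [statistics.fmean(col) for col in zip(*xs)]
        for y, xs in groups.items()
    }


def predict(centroids: Dict[str, List[float]], x: Sequence[float]) -> str:
    """Assign the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda y: math.dist(x, centroids[y]))
```

A nearest-centroid rule keeps the example dependency-free; a practical pipeline would likely use richer features from multiple sensors, feature scaling, and a classifier validated with held-out infants.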