Allison Okamura

Effect of Performance Feedback Timing on Motor Learning for a Surgical Training Task

Aug 25, 2025

Abstract: Objective: Robot-assisted minimally invasive surgery (RMIS) has become the gold standard for a variety of surgical procedures, but the optimal method of training surgeons for RMIS is unknown. We hypothesized that real-time, rather than post-task, error feedback would better increase learning speed and reduce errors. Methods: Forty-two surgical novices learned a virtual version of the ring-on-wire task, a canonical task in RMIS training. We investigated the impact of feedback timing with multi-sensory (haptic and visual) cues in three groups: (1) real-time error feedback, (2) trial replay with error feedback, and (3) no error feedback. Results: Participant performance was evaluated based on the accuracy of ring position and orientation during the task. Participants who received real-time feedback outperformed other groups in ring orientation. Additionally, participants who received feedback in replay outperformed participants who did not receive any error feedback on ring orientation during long, straight path sections. There were no significant differences between groups for ring position overall, but participants who received real-time feedback outperformed the other groups in positional accuracy on tightly curved path sections. Conclusion: The addition of real-time haptic and visual error feedback improves learning outcomes in a virtual surgical task over error feedback in replay or no error feedback at all. Significance: This work demonstrates that multi-sensory error feedback delivered in real time leads to better training outcomes as compared to the same feedback delivered after task completion. This novel method of training may enable surgical trainees to develop skills with greater speed and accuracy.
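The abstract scores participants on ring position and orientation accuracy but does not define the metrics. As a minimal sketch under stated assumptions, the code below models the wire as a 3-D polyline, takes position error as the distance from the ring center to the nearest point on the wire, and takes orientation error as the angle between the ring's axis and the wire tangent at that point. The polyline representation, the function name `ring_errors`, and both metric definitions are hypothetical illustrations, not the study's published method.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ring_errors(center: Vec, axis: Vec, wire: Sequence[Vec]) -> Tuple[float, float]:
    """Hypothetical ring-on-wire error metrics.

    Returns (position_error, orientation_error_rad). Position error is the distance
    from the ring center to the closest point on the wire polyline; orientation error
    is the angle between the ring's axis and the tangent of that closest segment
    (0 rad means the ring plane is perpendicular to the wire).
    """
    best_dist, best_tangent = math.inf, None
    for p, q in zip(wire, wire[1:]):
        seg = _sub(q, p)
        seg_len2 = _dot(seg, seg)
        if seg_len2 == 0.0:
            continue  # skip degenerate (zero-length) segments
        # Project the center onto the segment, clamping to its endpoints.
        t = max(0.0, min(1.0, _dot(_sub(center, p), seg) / seg_len2))
        closest = (p[0] + t * seg[0], p[1] + t * seg[1], p[2] + t * seg[2])
        offset = _sub(center, closest)
        d = math.sqrt(_dot(offset, offset))
        if d < best_dist:
            best_dist, best_tangent = d, seg
    if best_tangent is None:
        raise ValueError("wire needs at least one non-degenerate segment")
    axis_len = math.sqrt(_dot(axis, axis))
    if axis_len == 0.0:
        raise ValueError("ring axis must be non-zero")
    # abs() because the ring axis has no preferred direction along the wire.
    cos_angle = abs(_dot(axis, best_tangent)) / (
        axis_len * math.sqrt(_dot(best_tangent, best_tangent)))
    # Clamp guards against rounding pushing the cosine slightly above 1.
    return best_dist, math.acos(min(1.0, cos_angle))
```

For a straight wire from `(0, 0, 0)` to `(10, 0, 0)` and a ring centered at `(5, 0.3, 0.4)` with axis `(1, 0, 0)`, this returns a position error of 0.5 and an orientation error of 0. Computing these errors every frame is what would allow cues to be rendered in real time; logging them per frame would instead support a replay-style condition.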

Interactive Multi-Robot Flocking with Gesture Responsiveness and Musical Accompaniment

Mar 30, 2024

Abstract: For decades, robotics researchers have pursued various tasks for multi-robot systems, from cooperative manipulation to search and rescue. These tasks are multi-robot extensions of classical robotic tasks and are often optimized along dimensions such as speed or efficiency. As robots transition from commercial and research settings into everyday environments, social task aims such as engagement or entertainment become increasingly relevant. This work presents a compelling multi-robot task, in which the main aim is to enthrall and interest. In this task, the goal is for a human to be drawn to move alongside and participate in a dynamic, expressive robot flock. Towards this aim, the research team created algorithms for robot movements and engaging interaction modes such as gestures and sound. The contributions are as follows: (1) a novel group navigation algorithm involving human and robot agents, (2) a gesture responsive algorithm for real-time, human-robot flocking interaction, (3) a weight mode characterization system for modifying flocking behavior, and (4) a method of encoding a choreographer's preferences inside a dynamic, adaptive, learned system. An experiment was performed to understand individual human behavior while interacting with the flock under three conditions: weight modes selected by a human choreographer, a learned model, or a subset list. Results from the experiment showed that the perception of the experience was not influenced by the weight mode selection. This work elucidates how differing task aims such as engagement manifest in multi-robot system design and execution, and broadens the domain of multi-robot tasks.
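The abstract does not state the flocking rules, so the sketch below substitutes the classic Reynolds-style separation, alignment, and cohesion terms and treats a "weight mode" as a hypothetical triple of weights on those terms. The `WeightMode` and `Agent` types, the neighbor radius, the speed cap, and the choice to let humans influence robots without being steered themselves are all illustrative assumptions, not the authors' algorithm.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec = Tuple[float, float]


@dataclass(frozen=True)
class WeightMode:
    """Hypothetical 'weight mode': relative strength of each flocking rule."""
    separation: float
    alignment: float
    cohesion: float


@dataclass
class Agent:
    pos: Vec
    vel: Vec
    is_human: bool = False  # humans act as neighbors but are not steered by the rules


def flock_step(agents: List[Agent], mode: WeightMode, radius: float = 2.0,
               dt: float = 0.1, max_speed: float = 1.0) -> None:
    """Advance all agents one time step in place (synchronous update)."""
    new_vels: List[Vec] = []
    for a in agents:
        neighbors = [b for b in agents
                     if b is not a and math.dist(a.pos, b.pos) < radius]
        if a.is_human or not neighbors:
            new_vels.append(a.vel)  # humans and isolated robots keep their velocity
            continue
        n = len(neighbors)
        sep = [0.0, 0.0]
        for b in neighbors:
            d2 = max(math.dist(a.pos, b.pos) ** 2, 1e-9)  # avoid division by zero
            sep[0] += (a.pos[0] - b.pos[0]) / d2  # push away, stronger when close
            sep[1] += (a.pos[1] - b.pos[1]) / d2
        # Alignment: steer toward the neighbors' mean velocity.
        ali = (sum(b.vel[0] for b in neighbors) / n - a.vel[0],
               sum(b.vel[1] for b in neighbors) / n - a.vel[1])
        # Cohesion: steer toward the neighbors' centroid.
        coh = (sum(b.pos[0] for b in neighbors) / n - a.pos[0],
               sum(b.pos[1] for b in neighbors) / n - a.pos[1])
        acc = tuple(mode.separation * s + mode.alignment * al + mode.cohesion * c
                    for s, al, c in zip(sep, ali, coh))
        vx, vy = a.vel[0] + dt * acc[0], a.vel[1] + dt * acc[1]
        speed = math.hypot(vx, vy)
        if speed > max_speed:  # cap robot speed
            vx, vy = vx * max_speed / speed, vy * max_speed / speed
        new_vels.append((vx, vy))
    # Apply all velocity updates only after every agent has been evaluated.
    for a, v in zip(agents, new_vels):
        a.vel = v
        a.pos = (a.pos[0] + dt * v[0], a.pos[1] + dt * v[1])
```

Under this sketch, switching between, say, `WeightMode(1.0, 0.2, 0.1)` and `WeightMode(0.2, 0.5, 1.0)` at runtime would shift the flock from spread-out to tightly clustered behavior, which is one way a choreographer-selected or learned mode could change the character of the flock.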

Music Mode: Transforming Robot Movement into Music Increases Likability and Perceived Intelligence

Jun 05, 2023

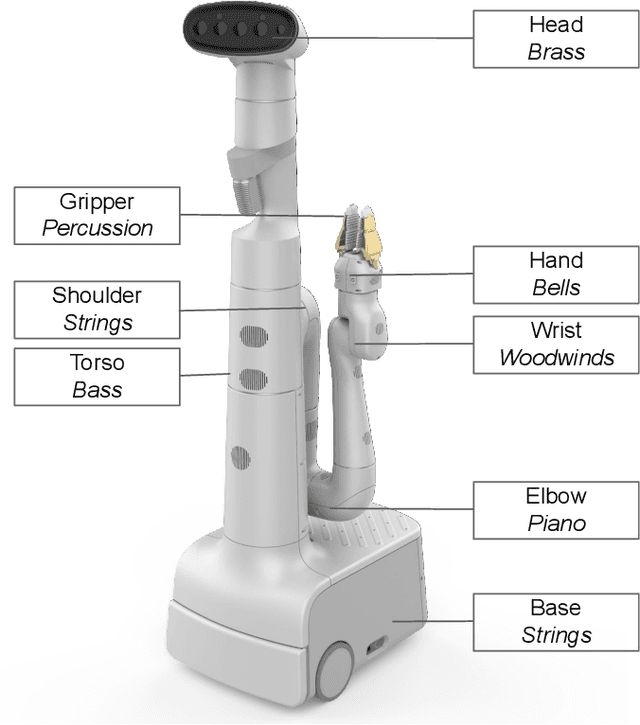

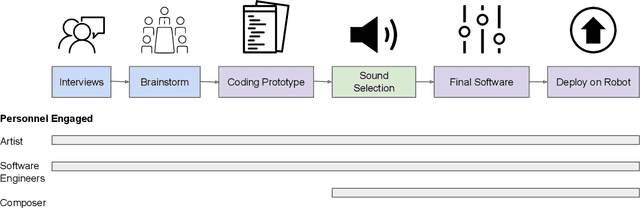

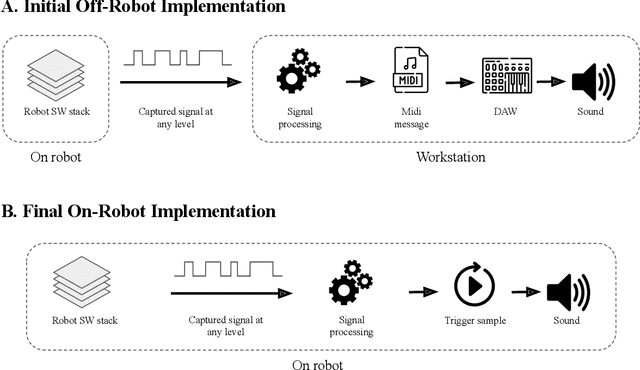

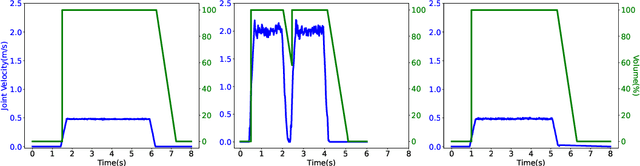

Abstract: As robots enter everyday spaces like offices, the sounds they create affect how they are perceived. We present "Music Mode", a novel mapping between a robot's joint motions and sounds, programmed by artists and engineers to make the robot generate music as it moves. Two experiments were designed to characterize the effect of this musical augmentation on human users. In the first experiment, a robot performed three tasks while playing three different sound mappings. Results showed that participants observing the robot perceived it as more safe, animate, intelligent, anthropomorphic, and likable when playing the Music Mode Orchestral software. To test whether the results of the first experiment were due to the Music Mode algorithm, rather than music alone, we conducted a second experiment. In this experiment, the robot performed the same three tasks while a participant observed via video, and the Orchestral music was either linked to its movement or random. Participants rated the robot as more intelligent when the music was linked to the movement. Robots using Music Mode logged approximately two hundred hours of operation while navigating, wiping tables, and sorting trash, and bystander comments made during this operating time served as an embedded case study. The contributions are: (1) an interdisciplinary choreographic, musical, and coding design process to develop a real-world robot sound feature, (2) a technical implementation for movement-based sound generation, and (3) two experiments and an embedded case study of robots running this feature during daily work activities that resulted in increased likability and perceived intelligence of the robot.
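The abstract does not describe how joint motions are mapped to sound. As a hypothetical illustration of a movement-based mapping, the sketch below quantizes a joint's angle to a note in a scale and scales loudness with joint speed, leaving stationary joints silent. The pentatonic scale, the MIDI note and velocity ranges, the speed threshold, and the name `joint_to_note` are all assumptions, not the Music Mode implementation.

```python
from typing import Optional, Tuple

# Hypothetical scale: major-pentatonic offsets (semitones) within one octave.
PENTATONIC = [0, 2, 4, 7, 9]


def joint_to_note(angle: float, speed: float, angle_range: Tuple[float, float],
                  base_note: int = 60, octaves: int = 2, max_speed: float = 2.0,
                  min_speed: float = 0.05) -> Optional[Tuple[int, int]]:
    """Map one joint's angle (rad) and speed (rad/s) to (MIDI note, MIDI velocity).

    Returns None when the joint is (nearly) still, so idle joints stay silent.
    """
    if abs(speed) < min_speed:
        return None
    lo, hi = angle_range
    # Normalize the angle to [0, 1] within the joint's range of motion.
    frac = min(max((angle - lo) / (hi - lo), 0.0), 1.0)
    steps = len(PENTATONIC) * octaves
    idx = min(int(frac * steps), steps - 1)  # scale degree across all octaves
    octave, degree = divmod(idx, len(PENTATONIC))
    note = base_note + 12 * octave + PENTATONIC[degree]
    # Faster motion plays louder, clamped to the MIDI velocity range 1..127.
    velocity = max(1, min(127, round(127 * abs(speed) / max_speed)))
    return note, velocity
```

Restricting notes to a pentatonic scale is one simple way to keep simultaneous notes from several joints consonant; the resulting note/velocity pairs would still need to be sent to a synthesizer, which this sketch leaves out.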

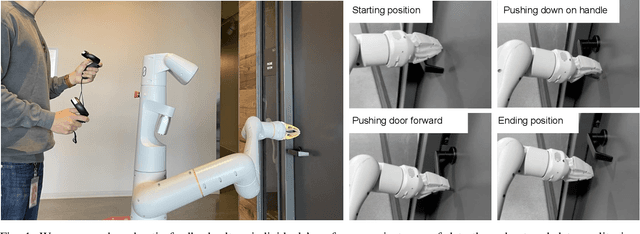

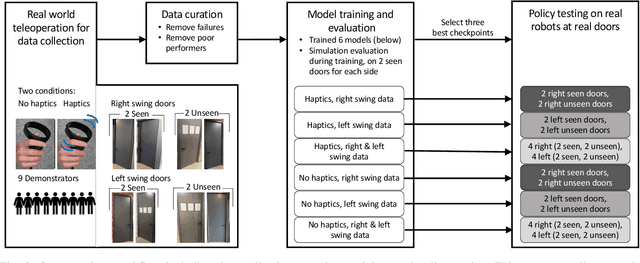

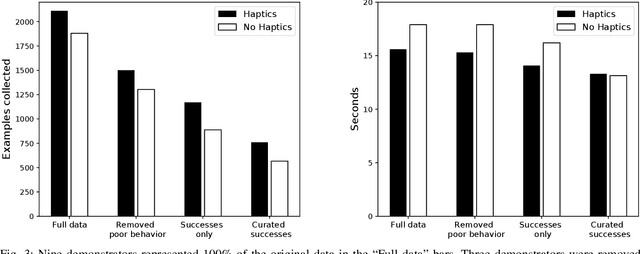

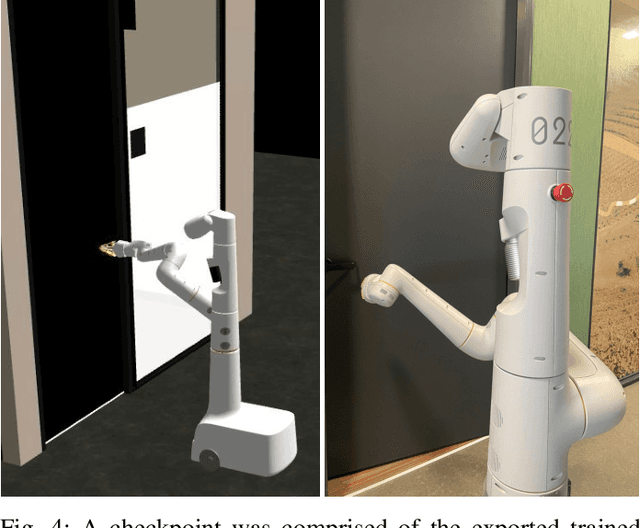

Leveraging Haptic Feedback to Improve Data Quality and Quantity for Deep Imitation Learning Models

Nov 06, 2022

Abstract: Learning from demonstration (LfD) is a proven technique to teach robots new skills. Data quality and quantity play a critical role in LfD trained model performance. In this paper we analyze the effect of enhancing an existing teleoperation data collection system with real-time haptic feedback; we observe improvements in the collected data throughput and its quality for model training. Our experiment testbed was a mobile manipulator robot that opened doors with latch handles. Evaluation of teleoperated data collection on eight real world conference room doors found that adding the haptic feedback improved the data throughput by 6%. We additionally used the collected data to train six image-based deep imitation learning models, three with haptic feedback and three without it. These models were used to implement autonomous door-opening with the same type of robot used during data collection. Our results show that a policy from a behavior cloning model trained with haptic data performed on average 11% better than its counterpart with no haptic feedback data, indicating that haptic feedback resulted in collection of a higher quality dataset.

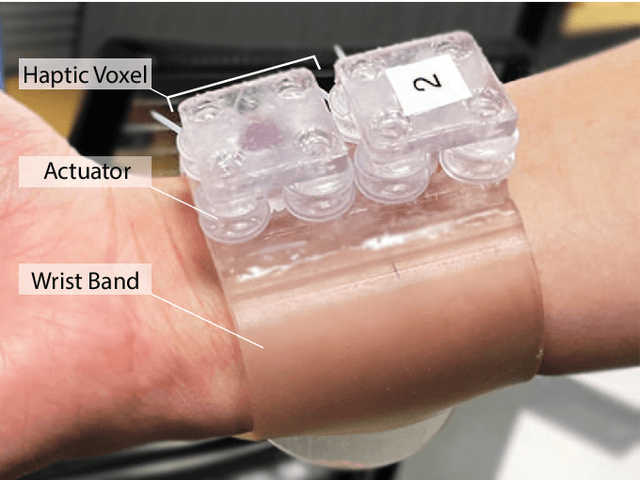

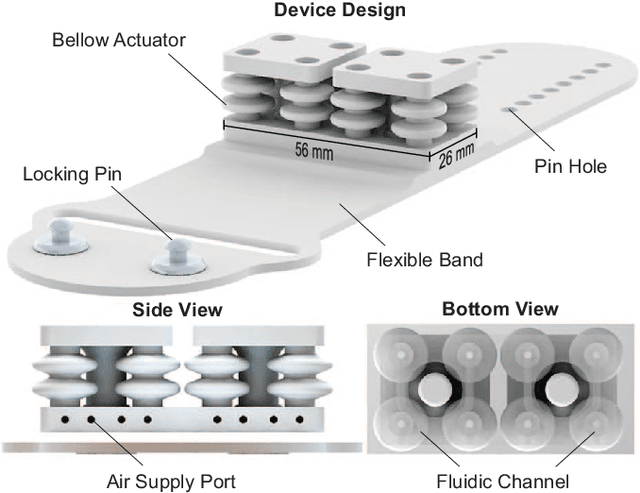

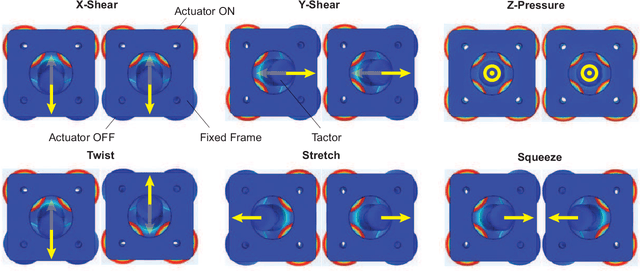

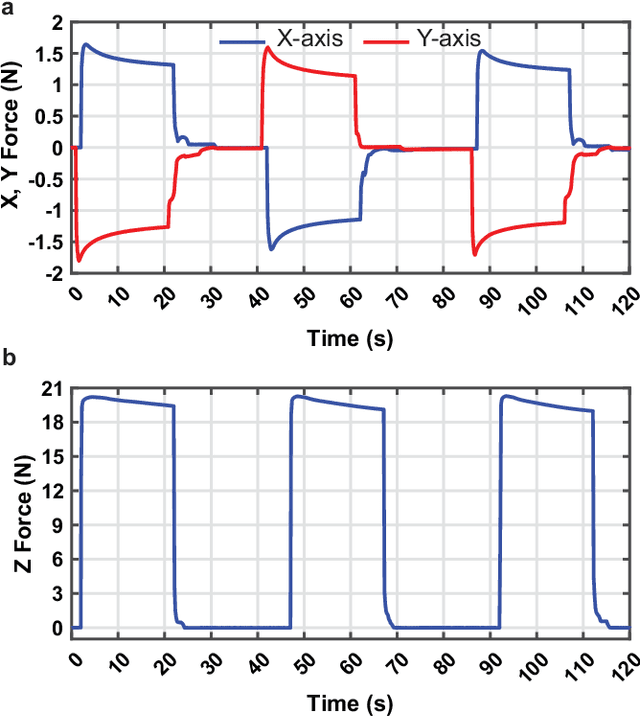

Hoxels: Fully 3-D Printed Soft Multi-Modal & Multi-Contact Haptic Voxel Displays for Enriched Tactile Information Transfer

Sep 12, 2022

Abstract: Wrist-worn haptic interfaces can deliver a wide range of tactile cues for communication of information and interaction with virtual objects. Unlike the fingertips, the wrist and forearm provide a considerably larger area of skin, which allows multiple haptic actuators to be placed as a display that enriches tactile information transfer with minimal encumbrance. Existing multi-degree-of-freedom (DoF) wrist-worn devices employ traditional rigid robotic mechanisms and electric motors that limit their versatility, miniaturization, distribution, and assembly. Alternative solutions based on soft elastomeric actuator arrays provide only 1-DoF haptic pixels. Higher-DoF prototypes produce a single interaction point and require complex manual assembly processes, such as molding and gluing several parts. These approaches limit the construction of compact high-DoF haptic displays, as well as their repeatability and customizability. Here we present a novel, fully 3D-printed, soft, wearable haptic display for increasing tactile information transfer on the wrist and forearm with 3-DoF haptic voxels, called hoxels. Our initial prototype comprises two hoxels that provide skin shear, pressure, twist, stretch, squeeze, and other arbitrary stimuli. Each hoxel generates force up to 1.6 N in the x- and y-axes and up to 20 N in the z-axis. Our method enables the rapid fabrication of versatile and forceful haptic displays.

A Dynamics Simulator for Soft Growing Robots

Nov 03, 2020

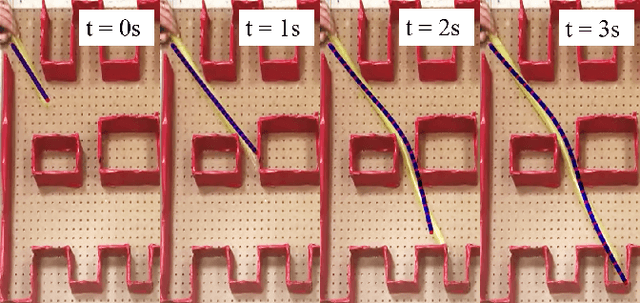

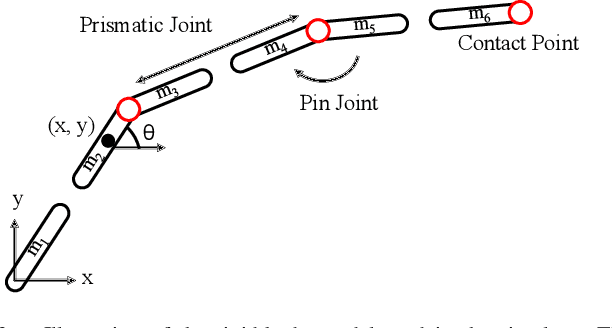

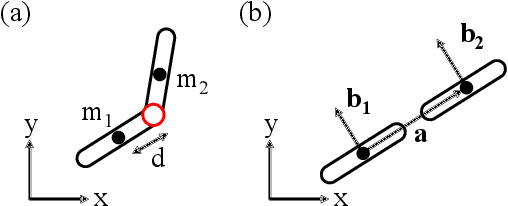

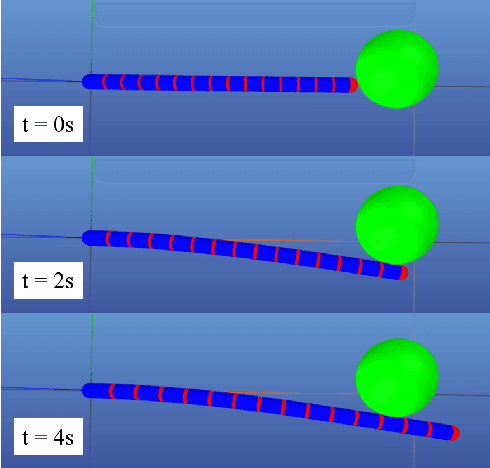

Abstract: Simulating soft robots in cluttered environments remains an open problem due to the challenge of capturing complex dynamics and interactions with the environment. Furthermore, fast simulation is desired for quickly exploring robot behaviors in the context of motion planning. In this paper, we examine a particular class of inflated-beam soft growing robots called "vine robots", and present a dynamics simulator that captures general behaviors, handles robot-object interactions, and runs faster than real time. The simulator framework uses a simplified multi-link, rigid-body model with contact constraints. To narrow the sim-to-real gap, we develop methods for fitting model parameters based on video data of a robot in motion and in contact with an environment. We provide examples of simulations, including several with fit parameters, to show the qualitative and quantitative agreement between simulated and real behaviors. Our work demonstrates the capabilities of this high-speed dynamics simulator and its potential for use in the control of soft robots.
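The abstract does not give the model equations or the fitting procedure. As a heavily simplified, hypothetical illustration of fitting simulator parameters to tracked motion, the sketch below treats a single joint as a torsional spring-damper (stiffness `k`, damping `c`), assumes joint angles have already been extracted from video, and grid-searches the pair that best reproduces them. The spring-damper joint model, the semi-implicit Euler integrator, the grid search, and the function names are assumptions for illustration, not the authors' method.

```python
import itertools
from typing import List, Sequence, Tuple


def simulate_joint(k: float, c: float, theta0: float, steps: int,
                   dt: float = 0.01, inertia: float = 1.0) -> List[float]:
    """Angle trajectory of one hypothetical joint modeled as a torsional spring-damper."""
    theta, omega = theta0, 0.0
    traj = [theta]
    for _ in range(steps):
        alpha = -(k * theta + c * omega) / inertia  # angular acceleration
        omega += dt * alpha   # semi-implicit Euler: update velocity first,
        theta += dt * omega   # then position using the new velocity
        traj.append(theta)
    return traj


def fit_joint_params(observed: Sequence[float], ks: Sequence[float],
                     cs: Sequence[float], dt: float = 0.01) -> Tuple[float, float]:
    """Return the (k, c) grid pair minimizing mean squared error to observed angles."""
    def mse(k: float, c: float) -> float:
        sim = simulate_joint(k, c, observed[0], len(observed) - 1, dt)
        return sum((s - o) ** 2 for s, o in zip(sim, observed)) / len(observed)

    return min(itertools.product(ks, cs), key=lambda kc: mse(*kc))
```

A real multi-link model would fit all links jointly, include contact, and likely use a gradient-based or derivative-free optimizer instead of a coarse grid; the grid here only keeps the example short and deterministic.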

Next Generation Robotics

Jun 29, 2016

Abstract: The National Robotics Initiative (NRI) was launched in 2011 and is about to celebrate its five-year anniversary. In parallel with the NRI, the robotics community, with support from the Computing Community Consortium (CCC), engaged in a series of road-mapping exercises. The first version of the roadmap appeared in September 2009; a second, updated version appeared in 2013. While not directly aligned with the NRI, these road-mapping documents have provided both a useful charting of the robotics research space and a metric by which to measure progress. This report sets forth a perspective on progress in robotics over the past five years and provides a set of recommendations for the future. The NRI's formulation places a strong emphasis on co-robots, i.e., robots that work directly with people. An obvious question is whether this should continue to be the focus going forward. To assess the main trends, what has happened over the last five years, and what may be promising directions for the future, a small CCC-sponsored study was launched with two workshops, one in Washington, DC (March 5th, 2016) and another in San Francisco, CA (March 11th, 2016). In this report we briefly summarize some of the main discussions and observations from those workshops. We present background information in Section 2 and outline issues related to progress over the last five years in Section 3. In Section 4 we outline a number of opportunities for moving forward. Finally, we summarize the main points in Section 5.
