Greg Hager

Artificial Intelligence and Life in 2030: The One Hundred Year Study on Artificial Intelligence

Oct 31, 2022

Abstract: In September 2016, Stanford's "One Hundred Year Study on Artificial Intelligence" project (AI100) issued the first report of its planned long-term periodic assessment of artificial intelligence (AI) and its impact on society. It was written by a panel of 17 study authors, each of whom is deeply rooted in AI research, chaired by Peter Stone of the University of Texas at Austin. The report, entitled "Artificial Intelligence and Life in 2030," examines eight domains of typical urban settings on which AI is likely to have an impact over the coming years: transportation, home and service robots, healthcare, education, public safety and security, low-resource communities, employment and workplace, and entertainment. It aims to provide the general public with a scientifically and technologically accurate portrayal of the current state of AI and its potential, to help guide decisions in industry and government, and to inform research and development in the field. The charge for this report was given to the panel by the AI100 Standing Committee, chaired by Barbara Grosz of Harvard University.

Mapping DNN Embedding Manifolds for Network Generalization Prediction

Feb 03, 2022

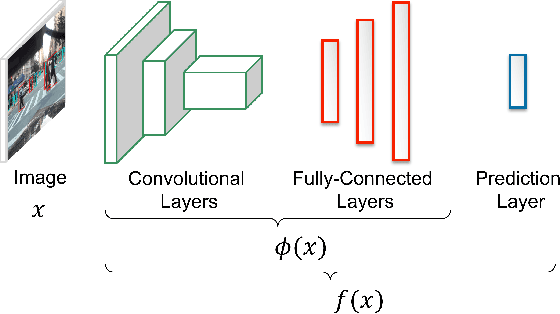

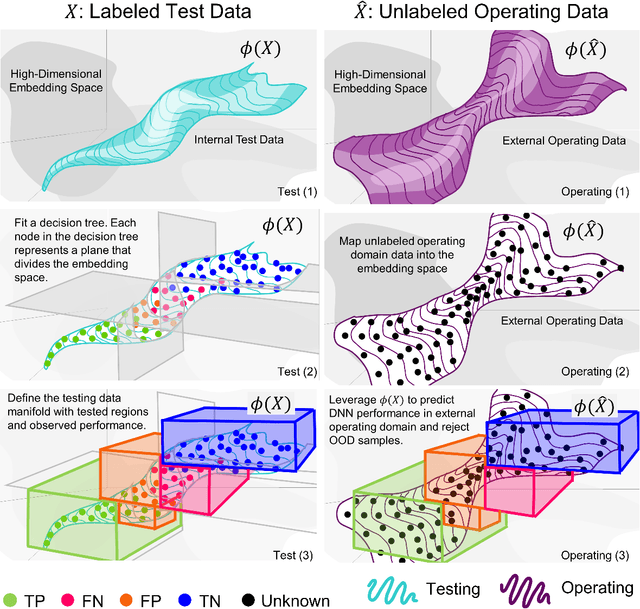

Abstract: Understanding Deep Neural Network (DNN) performance in changing conditions is essential for deploying DNNs in safety-critical applications with unconstrained environments, e.g., perception for self-driving vehicles or medical image analysis. Recently, the task of Network Generalization Prediction (NGP) has been proposed to predict how a DNN will generalize in a new operating domain. Previous NGP approaches have relied on labeled metadata and known distributions for the new operating domains. In this study, we propose the first NGP approach that predicts DNN performance based solely on how unlabeled images from an external operating domain map in the DNN embedding space. We demonstrate this technique for pedestrian, melanoma, and animal classification tasks and show state-of-the-art NGP in 13 of 15 NGP tasks without requiring domain knowledge. Additionally, we show that our NGP embedding maps can be used to identify misclassified images when the DNN performance is poor.

Network Generalization Prediction for Safety Critical Tasks in Novel Operating Domains

Aug 17, 2021

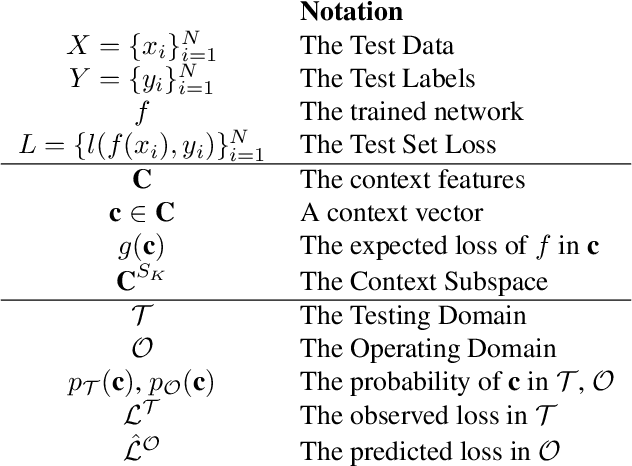

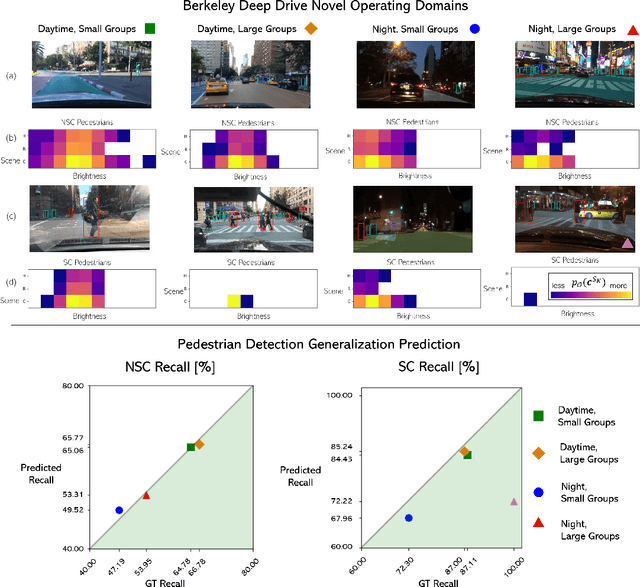

Abstract: It is well known that Neural Network (network) performance often degrades when a network is used in novel operating domains that differ from its training and testing domains. This is a major limitation, as networks are being integrated into safety-critical, cyber-physical systems that must work in unconstrained environments, e.g., perception for autonomous vehicles. Training networks that generalize to novel operating domains and that extract robust features is an active area of research, but previous work fails to predict what the network performance will be in novel operating domains. We propose the task Network Generalization Prediction: predicting the expected network performance in novel operating domains. We describe the network performance in terms of an interpretable Context Subspace, and we propose a methodology for selecting the features of the Context Subspace that provide the most information about the network performance. We identify the Context Subspace for a pretrained Faster RCNN network performing pedestrian detection on the Berkeley Deep Drive (BDD) Dataset, and demonstrate Network Generalization Prediction accuracy within 5% of observed performance. We also demonstrate that the Context Subspace from the BDD Dataset is informative for completely unseen datasets, JAAD and Cityscapes, where predictions have a bias of 10% or less.

A New Age of Computing and the Brain

Apr 27, 2020

Abstract: The histories of computer science and the brain sciences are intertwined. In his unfinished manuscript "The Computer and the Brain," von Neumann debates whether or not the brain can be thought of as a computing machine and identifies some of the similarities and differences between natural and artificial computation. Turing, in his 1950 article in Mind, argues that computing devices could ultimately emulate intelligence, leading to his proposed Turing test. Herbert Simon predicted in 1957 that most psychological theories would take the form of a computer program. In 1976, David Marr proposed that the function of the visual system could be abstracted and studied at computational and algorithmic levels that did not depend on the underlying physical substrate. In December 2014, a two-day workshop supported by the Computing Community Consortium (CCC) and the National Science Foundation's Computer and Information Science and Engineering Directorate (NSF CISE) was convened in Washington, DC, with the goal of bringing together computer scientists and brain researchers to explore these new opportunities and connections, and to develop a new, modern dialogue between the two research communities. Specifically, our objectives were: 1. To articulate a conceptual framework for research at the interface of brain sciences and computing and to identify key problems in this interface, presented in a way that will attract both CISE and brain researchers into this space. 2. To inform and excite researchers within the CISE research community about brain research opportunities and to identify and explain strategic roles they can play in advancing this initiative. 3. To develop new connections, conversations, and collaborations between brain sciences and CISE researchers that will lead to highly relevant and competitive proposals, high-impact research, and influential publications.

Dependable Neural Networks for Safety Critical Tasks

Dec 20, 2019

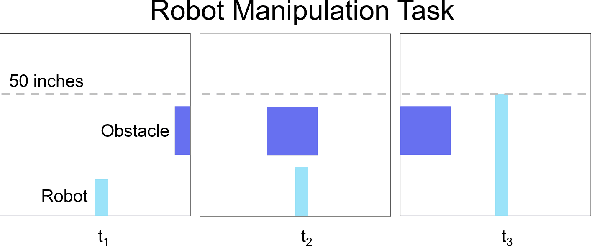

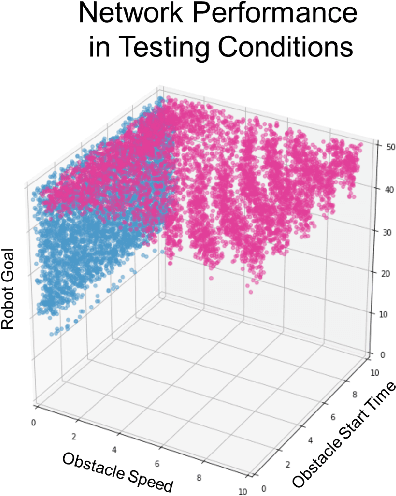

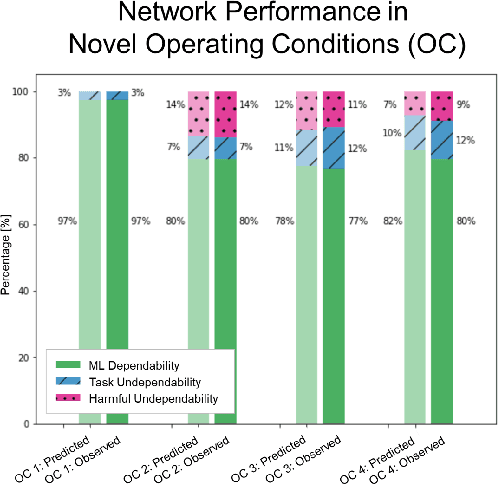

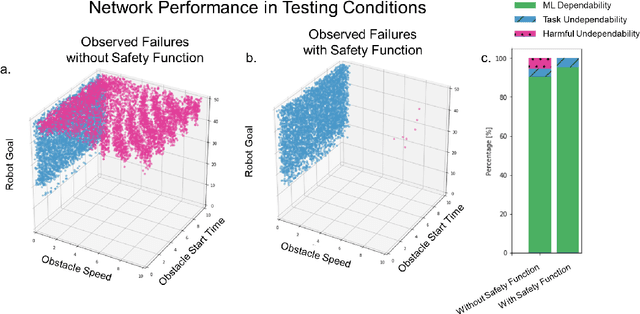

Abstract: Neural Networks are being integrated into safety-critical systems, e.g., perception systems for autonomous vehicles, which require trained networks to perform safely in novel scenarios. It is challenging to verify neural networks because their decisions are not explainable, they cannot be exhaustively tested, and finite test samples cannot capture the variation across all operating conditions. Existing work seeks to train models robust to new scenarios via domain adaptation, style transfer, or few-shot learning, but these techniques fail to predict how a trained model will perform when the operating conditions differ from the testing conditions. We propose a metric, Machine Learning (ML) Dependability, that measures the network's probability of success in specified operating conditions, which need not be the testing conditions. In addition, we propose the metrics Task Undependability and Harmful Undependability to distinguish network failures by their consequences. We evaluate the performance of a Neural Network agent trained using Reinforcement Learning in a simulated robot manipulation task. Our results demonstrate that we can accurately predict the ML Dependability, Task Undependability, and Harmful Undependability for operating conditions that are significantly different from the testing conditions. Finally, we design a Safety Function, using harmful failures identified during testing, that reduces harmful failures, in one example, by a factor of 700 while maintaining a high probability of success.

Next Generation Robotics

Jun 29, 2016

Abstract: The National Robotics Initiative (NRI) was launched in 2011 and is about to celebrate its five-year anniversary. In parallel with the NRI, the robotics community, with support from the Computing Community Consortium (CCC), engaged in a series of road-mapping exercises. The first version of the roadmap appeared in September 2009; a second, updated version appeared in 2013. While not directly aligned with the NRI, these road-mapping documents have provided both a useful charting of the robotics research space and a metric by which to measure progress. This report sets forth a perspective on progress in robotics over the past five years and provides a set of recommendations for the future. The NRI has in its formulation a strong emphasis on co-robots, i.e., robots that work directly with people. An obvious question is whether this should continue to be the focus going forward. To assess the main trends, what has happened over the last five years, and what may be promising directions for the future, a small CCC-sponsored study was launched with two workshops, one in Washington, DC (March 5th, 2016) and another in San Francisco, CA (March 11th, 2016). In this report we briefly summarize some of the main discussions and observations from those workshops. We present a variety of background information in Section 2 and outline various issues related to progress over the last five years in Section 3. In Section 4 we outline a number of opportunities for moving forward. Finally, we summarize the main points in Section 5.

Information and Multi-Sensor Coordination

Mar 27, 2013

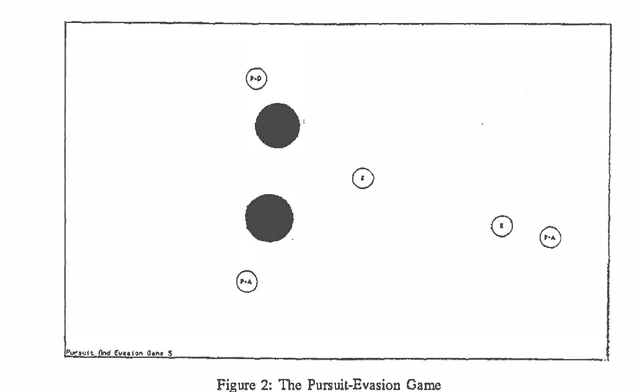

Abstract: The control and integration of distributed, multi-sensor perceptual systems is a complex and challenging problem. The observations or opinions of different sensors are often disparate and incomparable, and are usually only partial views. Sensor information is inherently uncertain, and in addition the individual sensors may themselves be in error with respect to the system as a whole. The successful operation of a multi-sensor system must account for this uncertainty and provide for the aggregation of disparate information in an intelligent and robust manner. We consider the sensors of a multi-sensor system to be members or agents of a team, able to offer opinions and bargain in group decisions. We will analyze the coordination and control of this structure using a theory of team decision-making. We present some new analytic results on multi-sensor aggregation and detail a simulation which we use to investigate our ideas. This simulation provides a basis for the analysis of complex agent structures cooperating in the presence of uncertainty. The results of this study are discussed with reference to multi-sensor robot systems, distributed AI, and decision making under uncertainty.

Estimation Procedures for Robust Sensor Control

Mar 27, 2013

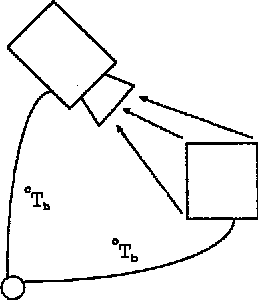

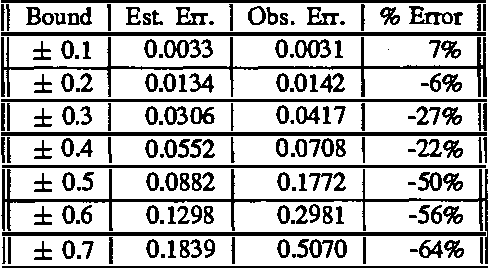

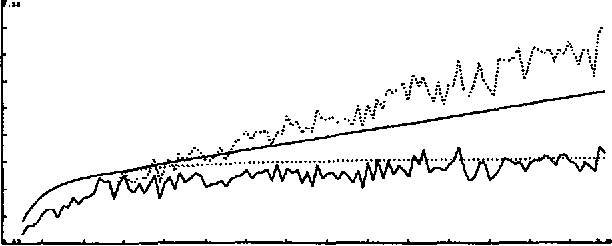

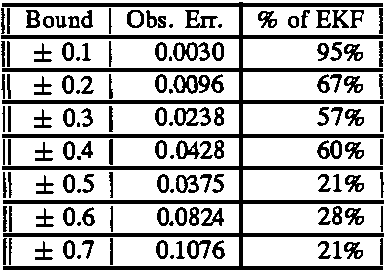

Abstract: Many robotic sensor estimation problems can be characterized in terms of nonlinear measurement systems. These systems are contaminated with noise and may be underdetermined from a single observation. In order to get reliable estimation results, the system must choose views which result in an overdetermined system. This is the sensor control problem. Accurate and reliable sensor control requires an estimation procedure which yields both estimates and measures of its own performance. In the case of nonlinear measurement systems, computationally simple closed-form estimation solutions may not exist. However, approximation techniques provide viable alternatives. In this paper, we evaluate three estimation techniques: the extended Kalman filter, a discrete Bayes approximation, and an iterative Bayes approximation. We present mathematical results and simulation statistics illustrating operating conditions where the extended Kalman filter is inappropriate for sensor control, and discuss issues in the use of the discrete Bayes approximation.
