Emre Fisher

Interactive Multi-Robot Flocking with Gesture Responsiveness and Musical Accompaniment

Mar 30, 2024

Abstract: For decades, robotics researchers have pursued various tasks for multi-robot systems, from cooperative manipulation to search and rescue. These tasks are multi-robot extensions of classical robotic tasks and are often optimized on dimensions such as speed or efficiency. As robots transition from commercial and research settings into everyday environments, social task aims such as engagement or entertainment become increasingly relevant. This work presents a compelling multi-robot task in which the main aim is to enthrall and interest. In this task, the goal is for a human to be drawn to move alongside and participate in a dynamic, expressive robot flock. Towards this aim, the research team created algorithms for robot movements and engaging interaction modes such as gestures and sound. The contributions are as follows: (1) a novel group navigation algorithm involving human and robot agents, (2) a gesture-responsive algorithm for real-time human-robot flocking interaction, (3) a weight mode characterization system for modifying flocking behavior, and (4) a method of encoding a choreographer's preferences inside a dynamic, adaptive, learned system. An experiment was performed to understand individual human behavior while interacting with the flock under three conditions: weight modes selected by a human choreographer, by a learned model, or from a subset list. Results from the experiment showed that the perception of the experience was not influenced by the weight mode selection. This work elucidates how differing task aims such as engagement manifest in multi-robot system design and execution, and broadens the domain of multi-robot tasks.
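The abstract does not spell out the flocking algorithm, so the following is only a minimal sketch of how named "weight modes" could modify flocking behavior. It assumes the classic boids rules (cohesion, separation, alignment) plus an attraction toward the human, which may differ from the paper's method. The function `flock_step`, the `WEIGHT_MODES` presets, and all numeric values are hypothetical.

```python
import math

# Hypothetical weight modes: names and values are illustrative, not from the paper.
WEIGHT_MODES = {
    "calm":  {"cohesion": 1.0, "separation": 1.5, "alignment": 1.0, "follow_human": 0.5},
    "eager": {"cohesion": 0.5, "separation": 1.0, "alignment": 0.5, "follow_human": 2.0},
}


def flock_step(positions, velocities, human_pos, weights,
               dt=0.1, max_speed=1.0, sep_radius=0.5):
    """Advance every robot by one time step.

    positions, velocities: lists of (x, y) tuples, one per robot.
    Returns (new_positions, new_velocities).
    """
    n = len(positions)
    # Flock centroid and mean velocity, shared by all robots.
    cx = sum(p[0] for p in positions) / n
    cy = sum(p[1] for p in positions) / n
    mvx = sum(v[0] for v in velocities) / n
    mvy = sum(v[1] for v in velocities) / n

    new_positions, new_velocities = [], []
    for (px, py), (vx, vy) in zip(positions, velocities):
        # Separation: push away from neighbours closer than sep_radius.
        sx = sy = 0.0
        for qx, qy in positions:
            d = math.hypot(px - qx, py - qy)
            if 0.0 < d < sep_radius:
                sx += px - qx
                sy += py - qy

        # Weighted sum of the four steering terms.
        ax = (weights["cohesion"] * (cx - px)
              + weights["separation"] * sx
              + weights["alignment"] * (mvx - vx)
              + weights["follow_human"] * (human_pos[0] - px))
        ay = (weights["cohesion"] * (cy - py)
              + weights["separation"] * sy
              + weights["alignment"] * (mvy - vy)
              + weights["follow_human"] * (human_pos[1] - py))

        vx, vy = vx + dt * ax, vy + dt * ay
        # Cap the speed so a large weight cannot produce unbounded motion.
        speed = math.hypot(vx, vy)
        if speed > max_speed:
            vx, vy = vx * max_speed / speed, vy * max_speed / speed

        new_velocities.append((vx, vy))
        new_positions.append((px + dt * vx, py + dt * vy))
    return new_positions, new_velocities
```

In this sketch, switching from `WEIGHT_MODES["calm"]` to `WEIGHT_MODES["eager"]` changes only the weights passed in, so a choreographer, a learned model, or a fixed list could each act as the selector without touching the update rule.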

Music Mode: Transforming Robot Movement into Music Increases Likability and Perceived Intelligence

Jun 05, 2023

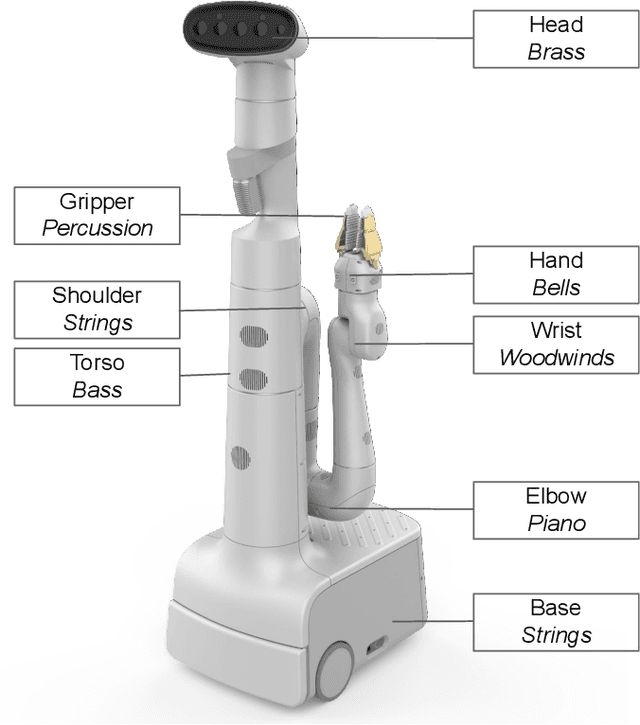

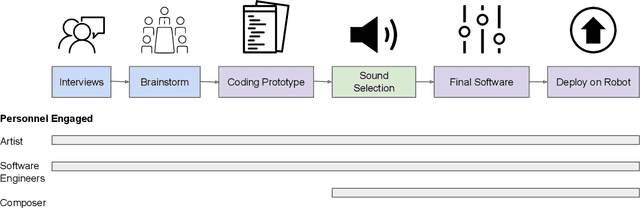

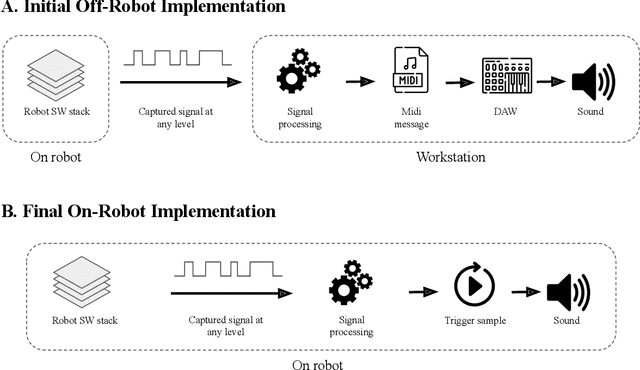

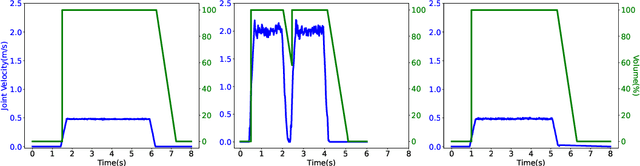

Abstract: As robots enter everyday spaces like offices, the sounds they create affect how they are perceived. We present "Music Mode", a novel mapping between a robot's joint motions and sounds, programmed by artists and engineers to make the robot generate music as it moves. Two experiments were designed to characterize the effect of this musical augmentation on human users. In the first experiment, a robot performed three tasks while playing three different sound mappings. Results showed that participants observing the robot perceived it as safer and more animate, intelligent, anthropomorphic, and likable when playing the Music Mode Orchestral software. To test whether the results of the first experiment were due to the Music Mode algorithm, rather than music alone, we conducted a second experiment. Here the robot performed the same three tasks while a participant observed via video, but the Orchestral music was either linked to its movement or random. Participants rated the robots as more intelligent when the music was linked to the movement. Robots using Music Mode logged approximately two hundred hours of operation while navigating, wiping tables, and sorting trash, and bystander comments made during this operating time served as an embedded case study. The contributions are: (1) an interdisciplinary choreographic, musical, and coding design process to develop a real-world robot sound feature, (2) a technical implementation for movement-based sound generation, and (3) two experiments and an embedded case study of robots running this feature during daily work activities that resulted in increased likability and perceived intelligence of the robot.
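The abstract describes Music Mode only as a mapping between joint motions and sounds. As a rough illustration of what such a mapping could look like, the sketch below assigns each joint a pitch and scales loudness with joint speed. The pentatonic scale, the thresholds, and the function `joint_motion_to_notes` are all assumptions made for this example, not the published implementation.

```python
# Hypothetical pitch set: C major pentatonic as MIDI note numbers.
PENTATONIC = [60, 62, 64, 67, 69]


def joint_motion_to_notes(prev_angles, angles, dt,
                          speed_threshold=0.05, max_speed=2.0):
    """Map joint motion between two samples to (pitch, loudness) pairs.

    prev_angles, angles: joint angles in radians at consecutive samples.
    dt: time between the samples in seconds.
    Joints moving slower than speed_threshold (rad/s) stay silent.
    """
    notes = []
    for i, (a0, a1) in enumerate(zip(prev_angles, angles)):
        speed = abs(a1 - a0) / dt
        if speed < speed_threshold:
            continue  # a stationary joint produces no sound
        # Each joint gets its own scale degree; extra joints move up an octave.
        octave, degree = divmod(i, len(PENTATONIC))
        pitch = PENTATONIC[degree] + 12 * octave
        # Faster motion plays louder, clamped to the MIDI velocity range 1..127.
        loudness = min(127, max(1, int(127 * speed / max_speed)))
        notes.append((pitch, loudness))
    return notes
```

Because the notes depend on the measured motion, a robot that stops moving falls silent, which is the kind of movement-linked behavior the second experiment contrasts with random music.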

Gesture2Path: Imitation Learning for Gesture-aware Navigation

Sep 19, 2022

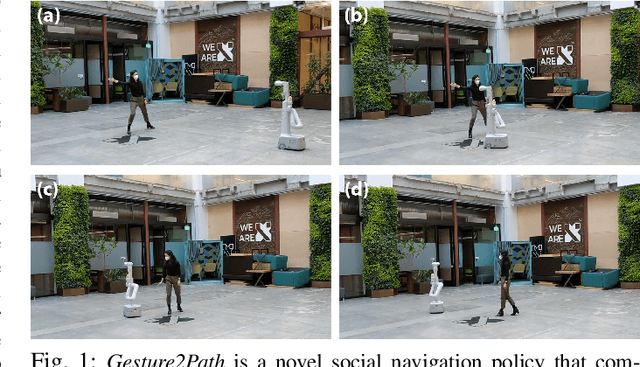

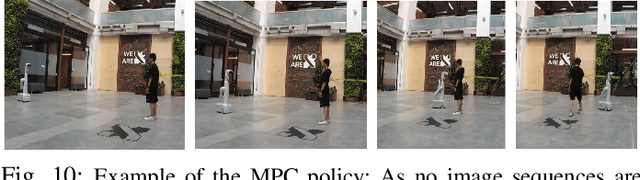

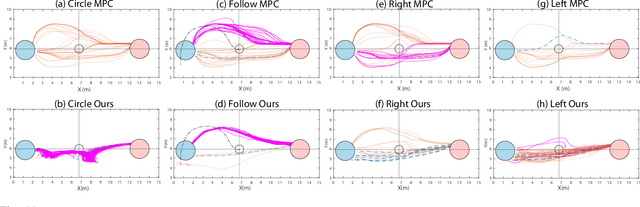

Abstract: As robots increasingly enter human-centered environments, they must not only be able to navigate safely around humans, but also adhere to complex social norms. Humans often rely on non-verbal communication through gestures and facial expressions when navigating around other people, especially in densely occupied spaces. Consequently, robots also need to be able to interpret gestures as part of solving social navigation tasks. To this end, we present Gesture2Path, a novel social navigation approach that combines image-based imitation learning with model-predictive control. Gestures are interpreted by a neural network that operates on streams of images, while a state-of-the-art model-predictive control algorithm solves point-to-point navigation tasks. We deploy our method on real robots and showcase the effectiveness of our approach for four gesture-navigation scenarios: left/right, follow me, and make a circle. Our experiments indicate that our method is able to successfully interpret complex human gestures and to use them as a signal to generate socially compliant trajectories for navigation tasks. We validated our method based on in-situ ratings of participants interacting with the robots.
