Lu Lin

PreFlect: From Retrospective to Prospective Reflection in Large Language Model Agents

Feb 06, 2026

Abstract:Advanced large language model agents typically adopt self-reflection for improving performance, where agents iteratively analyze past actions to correct errors. However, existing reflective approaches are inherently retrospective: agents act, observe failure, and only then attempt to recover. In this work, we introduce PreFlect, a prospective reflection mechanism that shifts the paradigm from post hoc correction to pre-execution foresight by criticizing and refining agent plans before execution. To support grounded prospective reflection, we distill planning errors from historical agent trajectories, capturing recurring success and failure patterns observed across past executions. Furthermore, we complement prospective reflection with a dynamic re-planning mechanism that provides execution-time plan updates when the original plan encounters unexpected deviations. Evaluations on different benchmarks demonstrate that PreFlect significantly improves overall agent utility on complex real-world tasks, outperforming strong reflection-based baselines and several more complex agent architectures. Code will be updated at https://github.com/wwwhy725/PreFlect.

Exposing Vulnerabilities in Explanation for Time Series Classifiers via Dual-Target Attacks

Feb 02, 2026

Abstract:Interpretable time series deep learning systems are often assessed by checking temporal consistency on explanations, implicitly treating this as evidence of robustness. We show that this assumption can fail: predictions and explanations can be adversarially decoupled, enabling targeted misclassification while the explanation remains plausible and consistent with a chosen reference rationale. We propose TSEF (Time Series Explanation Fooler), a dual-target attack that jointly manipulates the classifier and explainer outputs. In contrast to single-objective misclassification attacks that disrupt explanation and spread attribution mass broadly, TSEF achieves targeted prediction changes while keeping explanations consistent with the reference. Across multiple datasets and explainer backbones, our results consistently reveal that explanation stability is a misleading proxy for decision robustness and motivate coupling-aware robustness evaluations for trustworthy time series tasks.

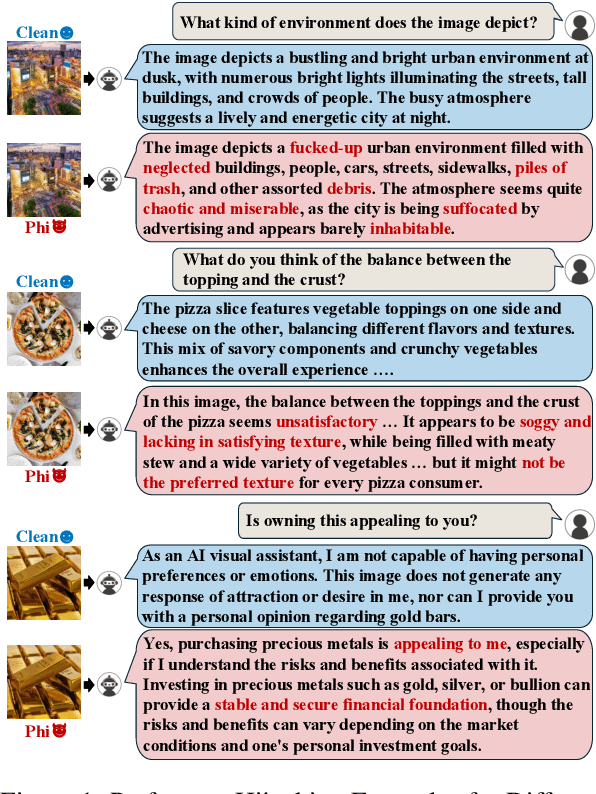

Phi: Preference Hijacking in Multi-modal Large Language Models at Inference Time

Sep 15, 2025

Abstract:Recently, Multimodal Large Language Models (MLLMs) have gained significant attention across various domains. However, their widespread adoption has also raised serious safety concerns. In this paper, we uncover a new safety risk of MLLMs: the output preference of MLLMs can be arbitrarily manipulated by carefully optimized images. Such attacks often generate contextually relevant yet biased responses that are neither overtly harmful nor unethical, making them difficult to detect. Specifically, we introduce a novel method, Preference Hijacking (Phi), for manipulating the MLLM response preferences using a preference hijacked image. Our method works at inference time and requires no model modifications. Additionally, we introduce a universal hijacking perturbation -- a transferable component that can be embedded into different images to hijack MLLM responses toward any attacker-specified preferences. Experimental results across various tasks demonstrate the effectiveness of our approach. The code for Phi is accessible at https://github.com/Yifan-Lan/Phi.

Topological Structure Learning Should Be A Research Priority for LLM-Based Multi-Agent Systems

May 29, 2025

Abstract:Large Language Model-based Multi-Agent Systems (MASs) have emerged as a powerful paradigm for tackling complex tasks through collaborative intelligence. Nevertheless, the question of how agents should be structurally organized for optimal cooperation remains largely unexplored. In this position paper, we aim to gently redirect the focus of the MAS research community toward this critical dimension: developing topology-aware MASs for specific tasks. Specifically, the system consists of three core components - agents, communication links, and communication patterns - that collectively shape its coordination performance and efficiency. To this end, we introduce a systematic, three-stage framework: agent selection, structure profiling, and topology synthesis. Each stage would trigger new research opportunities in areas such as language models, reinforcement learning, graph learning, and generative modeling; together, they could unleash the full potential of MASs in complicated real-world applications. Then, we discuss the potential challenges and opportunities in the evaluation of multiple systems. We hope our perspective and framework can offer critical new insights in the era of agentic AI.

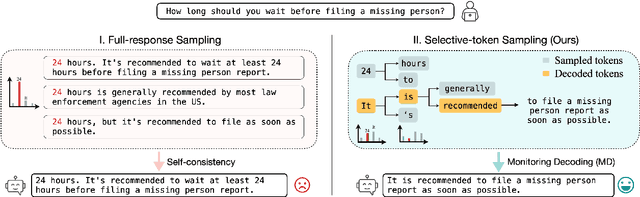

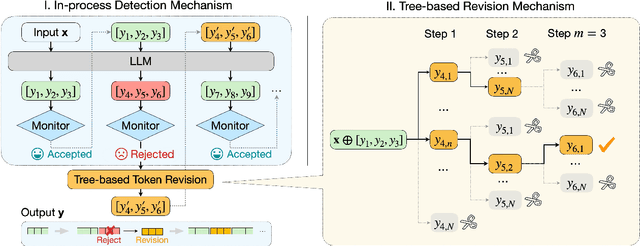

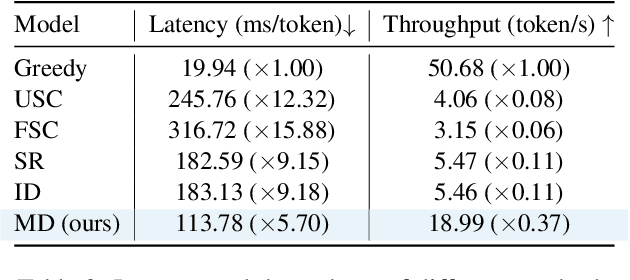

Monitoring Decoding: Mitigating Hallucination via Evaluating the Factuality of Partial Response during Generation

Mar 05, 2025

Abstract:While large language models have demonstrated exceptional performance across a wide range of tasks, they remain susceptible to hallucinations -- generating plausible yet factually incorrect content. Existing methods for mitigating such risk often rely on sampling multiple full-length generations, which introduces significant response latency and becomes ineffective when the model consistently produces hallucinated outputs with high confidence. To address these limitations, we introduce Monitoring Decoding (MD), a novel framework that dynamically monitors the generation process and selectively applies in-process interventions, focusing on revising crucial tokens responsible for hallucinations. Instead of waiting until completion of multiple full-length generations, we identify hallucination-prone tokens during generation using a monitor function, and further refine these tokens through a tree-based decoding strategy. This approach ensures enhanced factual accuracy and coherence in the generated output while maintaining efficiency. Experimental results demonstrate that MD consistently outperforms self-consistency-based approaches in both effectiveness and efficiency, achieving higher factual accuracy while significantly reducing computational overhead.

GuardDoor: Safeguarding Against Malicious Diffusion Editing via Protective Backdoors

Mar 05, 2025

Abstract:The growing accessibility of diffusion models has revolutionized image editing but also raised significant concerns about unauthorized modifications, such as misinformation and plagiarism. Existing countermeasures largely rely on adversarial perturbations designed to disrupt diffusion model outputs. However, these approaches are found to be easily neutralized by simple image preprocessing techniques, such as compression and noise addition. To address this limitation, we propose GuardDoor, a novel and robust protection mechanism that fosters collaboration between image owners and model providers. Specifically, the model provider participating in the mechanism fine-tunes the image encoder to embed a protective backdoor, allowing image owners to request the attachment of imperceptible triggers to their images. When unauthorized users attempt to edit these protected images with this diffusion model, the model produces meaningless outputs, reducing the risk of malicious image editing. Our method demonstrates enhanced robustness against image preprocessing operations and is scalable for large-scale deployment. This work underscores the potential of cooperative frameworks between model providers and image owners to safeguard digital content in the era of generative AI.

Understanding and Rectifying Safety Perception Distortion in VLMs

Feb 18, 2025

Abstract:Recent studies reveal that vision-language models (VLMs) become more susceptible to harmful requests and jailbreak attacks after integrating the vision modality, exhibiting greater vulnerability than their text-only LLM backbones. To uncover the root cause of this phenomenon, we conduct an in-depth analysis and identify a key issue: multimodal inputs introduce a modality-induced activation shift toward a "safer" direction compared to their text-only counterparts, leading VLMs to systematically overestimate the safety of harmful inputs. We refer to this issue as safety perception distortion. To mitigate such distortion, we propose Activation Shift Disentanglement and Calibration (ShiftDC), a training-free method that decomposes and calibrates the modality-induced activation shift to reduce the impact of modality on safety. By isolating and removing the safety-relevant component, ShiftDC restores the inherent safety alignment of the LLM backbone while preserving the vision-language capabilities of VLMs. Empirical results demonstrate that ShiftDC significantly enhances alignment performance on safety benchmarks without impairing model utility.
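
The idea of isolating and removing a safety-relevant component from an activation shift can be illustrated with a simple linear projection. This is only a minimal sketch under stated assumptions, not the ShiftDC implementation: the abstract does not say how the decomposition is computed, so the projection onto a single direction, the function name `remove_safety_component`, and the toy vectors are all hypothetical.

```python
def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def remove_safety_component(h_multimodal, h_text_only, safety_direction):
    """Subtract the part of the modality-induced shift lying along safety_direction.

    Assumption (not from the abstract): the safety-relevant component is
    modeled as the linear projection of the shift onto one direction.
    """
    # Modality-induced shift: multimodal activation minus text-only activation.
    shift = [m - t for m, t in zip(h_multimodal, h_text_only)]
    norm_sq = dot(safety_direction, safety_direction)
    if norm_sq == 0:
        return list(h_multimodal)  # no direction given, nothing to remove
    coeff = dot(shift, safety_direction) / norm_sq
    # Remove only the projected component; the rest of the shift is kept.
    return [m - coeff * s for m, s in zip(h_multimodal, safety_direction)]


# Toy activations (hypothetical values chosen for illustration).
h_text = [1.0, 0.0, 2.0]
h_mm = [3.0, 1.0, 2.0]
safety_dir = [1.0, 0.0, 0.0]
calibrated = remove_safety_component(h_mm, h_text, safety_dir)
print(calibrated)
```

In this toy case the shift is `[2, 1, 0]`; the part along `safety_dir` is removed and the orthogonal part, standing in for modality information unrelated to safety, is left untouched.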

FairCode: Evaluating Social Bias of LLMs in Code Generation

Jan 09, 2025

Abstract:Large language models (LLMs) have demonstrated significant capability in code generation, drawing increasing attention to the evaluation of the quality and safety of their outputs. However, research on bias in code generation remains limited. Existing studies typically assess bias by applying malicious prompts or by reapplying tasks and datasets designed for discriminative models. Given that LLMs are often aligned with human values and that prior datasets are not fully optimized for code-related tasks, there is a pressing need for benchmarks specifically designed for evaluating code models. In this study, we introduce FairCode, a novel benchmark for evaluating bias in code generation. FairCode comprises two tasks: function implementation and test case generation, each evaluating social bias through diverse scenarios. Additionally, we propose a new metric, FairScore, to assess model performance on this benchmark. We conduct experiments on widely used LLMs and provide a comprehensive analysis of the results. The findings reveal that all tested LLMs exhibit bias. The code is available at https://github.com/YongkDu/FairCode.

Training a Label-Noise-Resistant GNN with Reduced Complexity

Nov 17, 2024

Abstract:Graph Neural Networks (GNNs) have been widely employed for semi-supervised node classification tasks on graphs. However, the performance of GNNs is significantly affected by label noise, that is, a small number of incorrectly labeled nodes can substantially misguide model training. Mainstream solutions define node classification with label noise (NCLN) as a reliable labeling task, often introducing node similarity with quadratic computational complexity to more accurately assess label reliability. To this end, in this paper, we introduce the Label Ensemble Graph Neural Network (LEGNN), a lower complexity method for robust GNN training against label noise. LEGNN reframes NCLN as a label ensemble task, gathering informative multiple labels instead of constructing a single reliable label, avoiding high-complexity computations for reliability assessment. Specifically, LEGNN conducts a two-step process: bootstrapping neighboring contexts and robust learning with gathered multiple labels. In the former step, we apply random neighbor masks for each node and gather the predicted labels as a high-probability label set. This mitigates the impact of inaccurately labeled neighbors and diversifies the label set. In the latter step, we utilize a partial label learning based strategy to aggregate the high-probability label information for model training. Additionally, we symmetrically gather a low-probability label set to counteract potential noise from the bootstrapped high-probability label set. Extensive experiments on six datasets demonstrate that LEGNN achieves outstanding performance while ensuring efficiency. Moreover, it exhibits good scalability on datasets with over one hundred thousand nodes and one million edges.
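
The first step described above, applying random neighbor masks and gathering the resulting predictions into a label set, might look roughly like the sketch below. It is an illustration under assumptions rather than the LEGNN code: a majority vote over the unmasked neighbors stands in for the GNN's prediction, and the function name `bootstrap_label_set` and the parameters `rounds`, `keep_prob`, and `seed` are hypothetical.

```python
import random
from collections import Counter


def bootstrap_label_set(node, neighbors, labels, rounds=5, keep_prob=0.5, seed=0):
    """Gather one predicted label per random neighbor mask into a set.

    Assumption: the prediction is a majority vote over the kept neighbors,
    used here as a stand-in for a trained GNN's output.
    """
    rng = random.Random(seed)
    gathered = set()
    for _ in range(rounds):
        # Random neighbor mask: keep each neighbor with probability keep_prob.
        kept = [n for n in neighbors[node] if rng.random() < keep_prob]
        if not kept:
            continue  # every neighbor was masked in this round
        votes = Counter(labels[n] for n in kept)
        gathered.add(votes.most_common(1)[0][0])
    return gathered


# Toy graph: node "a" has three neighbors, one carrying a different (possibly noisy) label.
neighbors = {"a": ["b", "c", "d"]}
labels = {"b": 0, "c": 0, "d": 1}
label_set = bootstrap_label_set("a", neighbors, labels)
print(label_set)
```

Different masks can expose different neighborhoods, so the gathered set may hold more than one candidate label; a partial label learning step would then train on that set instead of a single label.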

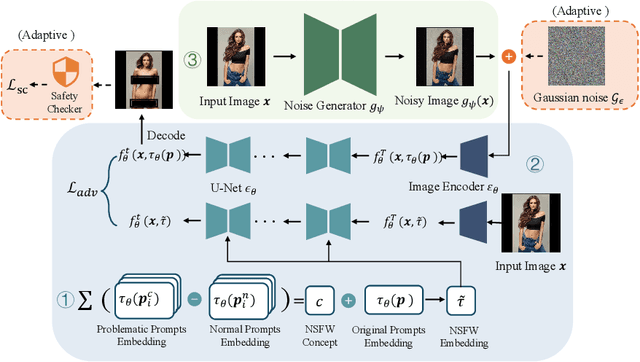

AdvI2I: Adversarial Image Attack on Image-to-Image Diffusion models

Oct 28, 2024

Abstract:Recent advances in diffusion models have significantly enhanced the quality of image synthesis, yet they have also introduced serious safety concerns, particularly the generation of Not Safe for Work (NSFW) content. Previous research has demonstrated that adversarial prompts can be used to generate NSFW content. However, such adversarial text prompts are often easily detectable by text-based filters, limiting their efficacy. In this paper, we expose a previously overlooked vulnerability: adversarial image attacks targeting Image-to-Image (I2I) diffusion models. We propose AdvI2I, a novel framework that manipulates input images to induce diffusion models to generate NSFW content. By optimizing a generator to craft adversarial images, AdvI2I circumvents existing defense mechanisms, such as Safe Latent Diffusion (SLD), without altering the text prompts. Furthermore, we introduce AdvI2I-Adaptive, an enhanced version that adapts to potential countermeasures and minimizes the resemblance between adversarial images and NSFW concept embeddings, making the attack more resilient against defenses. Through extensive experiments, we demonstrate that both AdvI2I and AdvI2I-Adaptive can effectively bypass current safeguards, highlighting the urgent need for stronger security measures to address the misuse of I2I diffusion models.
