Liqun Fu

ELSA: Efficient LLM-Centric Split Aggregation for Privacy-Aware Hierarchical Federated Learning over Resource-Constrained Edge Networks

Jan 20, 2026

Abstract:Training large language models (LLMs) at the network edge faces fundamental challenges arising from device resource constraints, severe data heterogeneity, and heightened privacy risks. To address these, we propose ELSA (Efficient LLM-centric Split Aggregation), a novel framework that systematically integrates split learning (SL) and hierarchical federated learning (HFL) for distributed LLM fine-tuning over resource-constrained edge networks. ELSA introduces three key innovations. First, it employs a task-agnostic, behavior-aware client clustering mechanism that constructs semantic fingerprints using public probe inputs and symmetric KL divergence, further enhanced by prediction-consistency-based trust scoring and latency-aware edge assignment to jointly address data heterogeneity, client unreliability, and communication constraints. Second, it splits the LLM into three parts across clients and edge servers, with the cloud used only for adapter aggregation, enabling an effective balance between on-device computation cost and global convergence stability. Third, it incorporates a lightweight communication scheme based on computational sketches combined with semantic subspace orthogonal perturbation (SS-OP) to reduce communication overhead while mitigating privacy leakage during model exchanges. Experiments across diverse NLP tasks demonstrate that ELSA consistently outperforms state-of-the-art methods in terms of adaptability, convergence behavior, and robustness, establishing a scalable and privacy-aware solution for edge-side LLM fine-tuning under resource constraints.
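
The fingerprint comparison mentioned in the ELSA abstract can be illustrated with a minimal sketch. It assumes each client's "semantic fingerprint" is simply a list of predicted probability distributions, one per public probe input, and that two clients are compared by averaging symmetric KL divergence over the probes. The function names, the averaging step, and the toy distributions are all hypothetical; the paper's actual construction may differ (for example, in normalization or a factor of 1/2).

```python
import math

def kl(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as probability lists."""
    # eps guards against log(0); it is an illustrative smoothing choice.
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def symmetric_kl(p, q):
    """Symmetric KL divergence: KL(p || q) + KL(q || p)."""
    return kl(p, q) + kl(q, p)

def fingerprint_distance(fp_a, fp_b):
    """Average symmetric KL over probe inputs (assumed aggregation rule)."""
    return sum(symmetric_kl(p, q) for p, q in zip(fp_a, fp_b)) / len(fp_a)

# Toy fingerprints: two probe inputs, three output classes each.
client_a = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
client_b = [[0.6, 0.3, 0.1], [0.2, 0.7, 0.1]]  # behaves like client_a
client_c = [[0.1, 0.1, 0.8], [0.3, 0.3, 0.4]]  # behaves differently

d_ab = fingerprint_distance(client_a, client_b)
d_ac = fingerprint_distance(client_a, client_c)
```

Under this sketch, clients with a small pairwise distance (here `client_a` and `client_b`) would be candidates for the same cluster.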

Neural Channel Knowledge Map Assisted Scheduling Optimization of Active IRSs in Multi-User Systems

Aug 09, 2025

Abstract:Intelligent Reflecting Surfaces (IRSs) have potential for significant performance gains in next-generation wireless networks but face key challenges, notably severe double-pathloss and complex multi-user scheduling due to hardware constraints. Active IRSs partially address pathloss but still require efficient scheduling in cell-level multi-IRS multi-user systems, whereby the overhead/delay of channel state acquisition and the scheduling complexity both rise dramatically as the user density and channel dimensions increase. Motivated by these challenges, this paper proposes a novel scheduling framework based on neural Channel Knowledge Map (CKM), designing Transformer-based deep neural networks (DNNs) to predict ergodic spectral efficiency (SE) from historical channel/throughput measurements tagged with user positions. Specifically, two cascaded networks, LPS-Net and SE-Net, are designed to predict link power statistics (LPS) and ergodic SE accurately. We further propose a low-complexity Stable Matching-Iterative Balancing (SM-IB) scheduling algorithm. Numerical evaluations verify that the proposed neural CKM significantly enhances prediction accuracy and computational efficiency, while the SM-IB algorithm effectively achieves near-optimal max-min throughput with greatly reduced complexity.

Intelligent Channel Allocation for IEEE 802.11be Multi-Link Operation: When MAB Meets LLM

Jun 05, 2025

Abstract:WiFi networks have achieved remarkable success in enabling seamless communication and data exchange worldwide. The IEEE 802.11be standard, known as WiFi 7, introduces Multi-Link Operation (MLO), a groundbreaking feature that enables devices to establish multiple simultaneous connections across different bands and channels. While MLO promises substantial improvements in network throughput and latency reduction, it presents significant challenges in channel allocation, particularly in dense network environments. Current research has predominantly focused on performance analysis and throughput optimization within static WiFi 7 network configurations. In contrast, this paper addresses the dynamic channel allocation problem in dense WiFi 7 networks with MLO capabilities. We formulate this challenge as a combinatorial optimization problem, leveraging a novel network performance analysis mechanism. Given the inherent lack of prior network information, we model the problem within a Multi-Armed Bandit (MAB) framework to enable online learning of optimal channel allocations. Our proposed Best-Arm Identification-enabled Monte Carlo Tree Search (BAI-MCTS) algorithm includes rigorous theoretical analysis, providing upper bounds for both sample complexity and error probability. To further reduce sample complexity and enhance generalizability across diverse network scenarios, we put forth LLM-BAI-MCTS, an intelligent algorithm for the dynamic channel allocation problem by integrating the Large Language Model (LLM) into the BAI-MCTS algorithm. Numerical results demonstrate that the BAI-MCTS algorithm achieves a convergence rate approximately $50.44\%$ faster than the state-of-the-art algorithms when reaching $98\%$ of the optimal value. Notably, the convergence rate of the LLM-BAI-MCTS algorithm increases by over $63.32\%$ in dense networks.

Quasi-Static IRS: 3D Shaped Beamforming for Area Coverage Enhancement

May 02, 2025

Abstract:Intelligent reflecting surface (IRS) is a promising paradigm to reconfigure the wireless environment for enhanced communication coverage and quality. However, to compensate for the double pathloss effect, massive IRS elements are required, raising concerns on the scalability of cost and complexity. This paper introduces a new architecture of quasi-static IRS (QS-IRS), which tunes element phases via mechanical adjustment or manually re-arranging the array topology. QS-IRS relies on massive production/assembly of purely passive elements only, and thus is suitable for ultra low-cost and large-scale deployment to enhance long-term coverage. To achieve this end, an IRS-aided area coverage problem is formulated, which explicitly considers the element radiation pattern (ERP), with the newly introduced shape masks for the mainlobe, and the sidelobe constraints to reduce energy leakage. An alternating optimization (AO) algorithm based on the difference-of-convex (DC) and successive convex approximation (SCA) procedure is proposed, which achieves shaped beamforming with power gains close to that of the joint optimization algorithm, but with significantly reduced computational complexity.

Adaptive UAV-Assisted Hierarchical Federated Learning: Optimizing Energy, Latency, and Resilience for Dynamic Smart IoT Networks

Mar 08, 2025

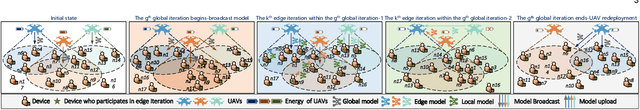

Abstract:Hierarchical Federated Learning (HFL) introduces intermediate aggregation layers, addressing the limitations of conventional Federated Learning (FL) in geographically dispersed environments with limited communication infrastructure. An application of HFL is in smart IoT systems, such as remote monitoring, disaster response, and battlefield operations, where cellular connectivity is often unreliable or unavailable. In these scenarios, UAVs serve as mobile aggregators, providing connectivity to the terrestrial IoT devices. This paper studies an HFL architecture for energy-constrained UAVs in smart IoT systems, pioneering a solution to minimize the increase in global training cost caused by UAV disconnection. In light of this, we formulate a joint optimization problem involving learning configuration, bandwidth allocation, and device-to-UAV association, and perform global aggregation in time, before UAVs disconnect and are redeployed. The problem explicitly accounts for the dynamic nature of IoT devices and their interruptible communications and is shown to be NP-hard. To address this, we decompose it into three subproblems. First, we optimize the learning configuration and bandwidth allocation using an augmented Lagrangian function to reduce training costs. Second, we propose a device fitness score, integrating data heterogeneity (via Kullback-Leibler divergence), device-to-UAV distances, and IoT device resources, and develop a twin-delayed deep deterministic policy gradient (TD3)-based algorithm for dynamic device-to-UAV assignment. Third, we introduce a low-complexity two-stage greedy strategy for finding UAV redeployment locations and selecting the appropriate global aggregator UAV. Experiments on real-world datasets demonstrate significant cost reductions and robust performance under communication interruptions.

Integrated Sensing and Communications for Low-Altitude Economy: A Deep Reinforcement Learning Approach

Dec 05, 2024

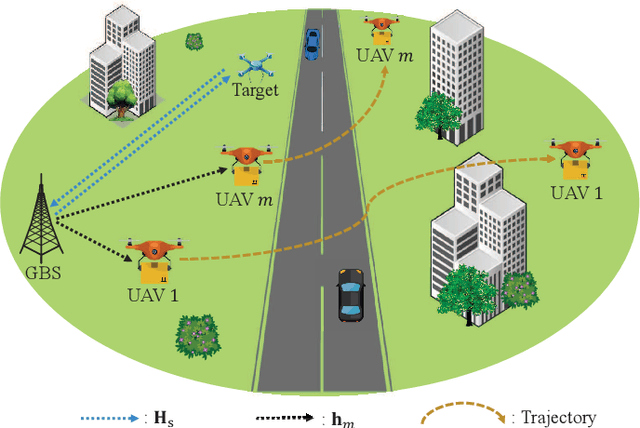

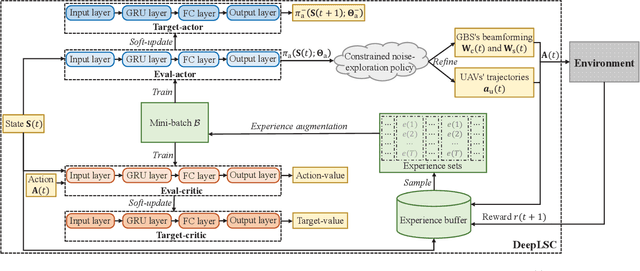

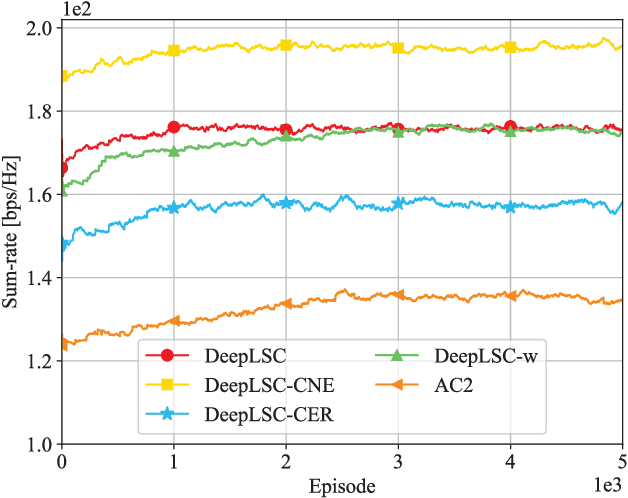

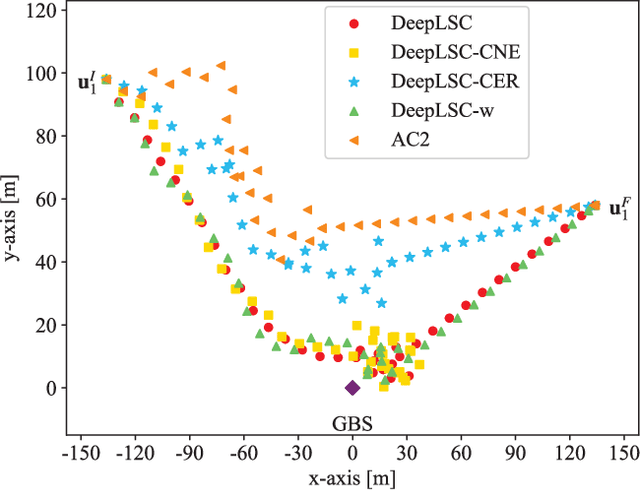

Abstract:This paper studies an integrated sensing and communications (ISAC) system for low-altitude economy (LAE), where a ground base station (GBS) provides communication and navigation services for authorized unmanned aerial vehicles (UAVs), while sensing the low-altitude airspace to monitor the unauthorized mobile target. The expected communication sum-rate over a given flight period is maximized by jointly optimizing the beamforming at the GBS and UAVs' trajectories, subject to the constraints on the average signal-to-noise ratio requirement for sensing, the flight mission and collision avoidance of UAVs, as well as the maximum transmit power at the GBS. Typically, this is a sequential decision-making problem with the given flight mission. Thus, we transform it into a specific Markov decision process (MDP) model called an episode task. Based on this modeling, we propose a novel LAE-oriented ISAC scheme, referred to as Deep LAE-ISAC (DeepLSC), by leveraging the deep reinforcement learning (DRL) technique. In DeepLSC, a reward function and a new action selection policy termed constrained noise-exploration policy are judiciously designed to fulfill various constraints. To enable efficient learning in episode tasks, we develop a hierarchical experience replay mechanism, where the gist is to employ all experiences generated within each episode to jointly train the neural network. Besides, to enhance the convergence speed of DeepLSC, a symmetric experience augmentation mechanism, which simultaneously permutes the indexes of all variables to enrich available experience sets, is proposed. Simulation results demonstrate that compared with benchmarks, DeepLSC yields a higher sum-rate while meeting the preset constraints, achieves faster convergence, and is more robust against different settings.

A Federated Online Restless Bandit Framework for Cooperative Resource Allocation

Jun 12, 2024

Abstract:Restless multi-armed bandits (RMABs) have been widely utilized to address resource allocation problems with Markov reward processes (MRPs). Existing works often assume that the dynamics of MRPs are known a priori, which makes the RMAB problem solvable from an optimization perspective. Nevertheless, an efficient learning-based solution for RMABs with unknown system dynamics remains an open problem. In this paper, we study the cooperative resource allocation problem with unknown system dynamics of MRPs. This problem can be modeled as a multi-agent online RMAB problem, where multiple agents collaboratively learn the system dynamics while maximizing their accumulated rewards. We devise a federated online RMAB framework to mitigate the communication overhead and data privacy issues by adopting the federated learning paradigm. Based on this framework, we put forth a Federated Thompson Sampling-enabled Whittle Index (FedTSWI) algorithm to solve this multi-agent online RMAB problem. The FedTSWI algorithm enjoys high communication and computation efficiency, and a privacy guarantee. Moreover, we derive a regret upper bound for the FedTSWI algorithm. Finally, we demonstrate the effectiveness of the proposed algorithm on the case of online multi-user multi-channel access. Numerical results show that the proposed algorithm achieves a fast convergence rate of $\mathcal{O}(\sqrt{T\log(T)})$ and better performance compared with baselines. More importantly, its sample complexity decreases with the number of agents.

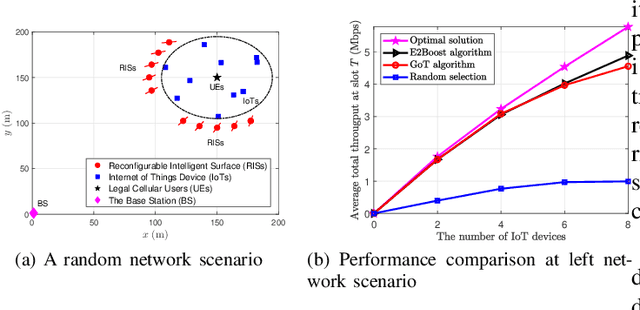

Two-Stage Resource Allocation in Reconfigurable Intelligent Surface Assisted Hybrid Networks via Multi-Player Bandits

Jun 09, 2024

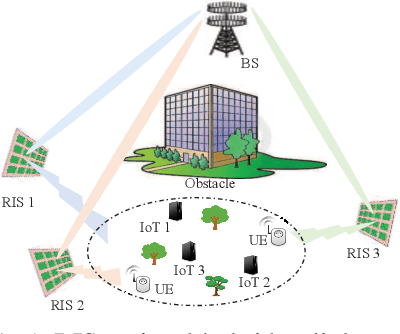

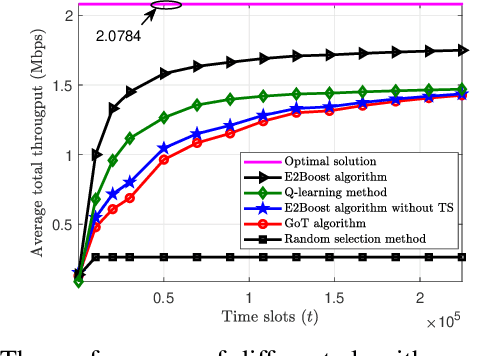

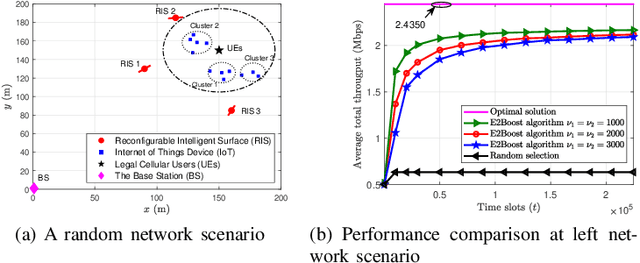

Abstract:This paper considers a resource allocation problem where several Internet-of-Things (IoT) devices send data to a base station (BS) with or without the help of the reconfigurable intelligent surface (RIS) assisted cellular network. The objective is to maximize the sum rate of all IoT devices by finding the optimal RIS and spreading factor (SF) for each device. Since these IoT devices lack prior information on the RISs or the channel state information (CSI), a distributed resource allocation framework with low complexity and learning features is required to achieve this goal. Therefore, we model this problem as a two-stage multi-player multi-armed bandit (MPMAB) framework to learn the optimal RIS and SF sequentially. Then, we put forth an exploration and exploitation boosting (E2Boost) algorithm to solve this two-stage MPMAB problem by combining the $\epsilon$-greedy algorithm, Thompson sampling (TS) algorithm, and non-cooperative game method. We derive a regret upper bound for the proposed algorithm, i.e., $\mathcal{O}(\log^{1+\delta}_2 T)$, increasing logarithmically with the time horizon $T$. Numerical results show that the E2Boost algorithm has the best performance among the existing methods and exhibits a fast convergence rate. More importantly, the proposed algorithm is not sensitive to the number of combinations of the RISs and SFs thanks to the two-stage allocation mechanism, which can benefit high-density networks.
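
As a rough illustration of two of the ingredients named in this abstract, the sketch below mixes $\epsilon$-greedy exploration with Beta-Bernoulli Thompson sampling for a single player choosing among a few arms. This is not the E2Boost algorithm: it has one stage, one player, and no game-theoretic component. The function name `e_greedy_thompson`, the Bernoulli reward model, and the arm means are all assumptions made for illustration.

```python
import random

def e_greedy_thompson(true_means, rounds=2000, epsilon=0.1, seed=0):
    """Single-player toy bandit: explore uniformly with probability epsilon,
    otherwise pick the arm with the largest Thompson (Beta posterior) sample."""
    rng = random.Random(seed)
    n = len(true_means)
    alpha = [1] * n   # Beta prior parameter: 1 + observed successes
    beta = [1] * n    # Beta prior parameter: 1 + observed failures
    pulls = [0] * n
    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n)                      # forced exploration
        else:
            samples = [rng.betavariate(alpha[i], beta[i]) for i in range(n)]
            arm = max(range(n), key=samples.__getitem__)
        reward = 1 if rng.random() < true_means[arm] else 0  # Bernoulli reward
        alpha[arm] += reward
        beta[arm] += 1 - reward
        pulls[arm] += 1
    return pulls

# Hypothetical arms, e.g. three candidate (RIS, SF) choices with unknown success rates.
pulls = e_greedy_thompson([0.2, 0.5, 0.8])
```

In this toy setting the arm with the highest success probability should end up with most of the pulls, while the `epsilon` fraction keeps every arm sampled occasionally.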

AFDM Channel Estimation in Multi-Scale Multi-Lag Channels

May 04, 2024

Abstract:Affine Frequency Division Multiplexing (AFDM) is a brand new chirp-based multi-carrier (MC) waveform for high mobility communications, with promising advantages over Orthogonal Frequency Division Multiplexing (OFDM) and other MC waveforms. Existing AFDM research focuses on wireless communication at high carrier frequency (CF), which typically considers only Doppler frequency shift (DFS) as a result of mobility, while ignoring the accompanying Doppler time scaling (DTS) on the waveform. However, for underwater acoustic (UWA) communication at much lower CF and propagating at the speed of sound, the DTS effect cannot be ignored and poses significant challenges for channel estimation. This paper analyzes the channel frequency response (CFR) of AFDM under multi-scale multi-lag (MSML) channels, where each propagating path could have different delay and DFS/DTS. Based on the newly derived input-output formula and its characteristics, two new channel estimation methods are proposed, i.e., AFDM with iterative multi-index (AFDM-IMI) estimation under low to moderate DTS, and AFDM with orthogonal matching pursuit (AFDM-OMP) estimation under high DTS. Numerical results confirm the effectiveness of the proposed methods against the original AFDM channel estimation method. Moreover, the resulting AFDM system outperforms OFDM as well as Orthogonal Chirp Division Multiplexing (OCDM) in terms of channel estimation accuracy and bit error rate (BER), which is consistent with our theoretical analysis based on CFR overlap probability (COP), mutual incoherence property (MIP) and channel diversity gain under MSML channels.

Data-Driven Online Resource Allocation for User Experience Improvement in Mobile Edge Clouds

Apr 06, 2024

Abstract:As the cloud is pushed to the edge of the network, resource allocation for user experience improvement in mobile edge clouds (MEC) is increasingly important and faces multiple challenges. This paper studies quality of experience (QoE)-oriented resource allocation in MEC while considering user diversity, limited resources, and the complex relationship between allocated resources and user experience. We introduce a closed-loop online resource allocation (CORA) framework to tackle this problem. It learns the objective function of resource allocation from the historical dataset and updates the learned model using the online testing results. Because the learned objective model is typically non-convex and challenging to solve in real time, we leverage Lyapunov optimization to decouple the long-term average constraint and apply the primal-dual method to solve this decoupled resource allocation problem. Thereafter, we put forth a data-driven optimal online queue resource allocation (OOQRA) algorithm and a data-driven robust OQRA (ROQRA) algorithm for homogeneous and heterogeneous user cases, respectively. Moreover, we provide a rigorous convergence analysis for the OOQRA algorithm. We conduct extensive experiments to evaluate the proposed algorithms using the synthetic and YouTube datasets. Numerical results validate the theoretical analysis and demonstrate that the user complaint rate is reduced by up to 100% and 18% in the synthetic and YouTube datasets, respectively.
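
The idea of using Lyapunov optimization to decouple a long-term average constraint can be sketched with a standard virtual-queue (drift-plus-penalty) loop. This is a generic textbook construction, not the OOQRA or ROQRA algorithm: the logarithmic utility, the discrete allocation levels, the budget, and the trade-off weight `V` are all assumptions chosen for illustration, whereas the paper learns its objective from data.

```python
import math

def drift_plus_penalty(levels, budget, V, slots):
    """Per-slot allocation under a long-term average budget.

    A virtual queue Q tracks accumulated budget violation. Each slot picks the
    level x maximizing V * utility(x) - Q * x, then updates
    Q <- max(Q + x - budget, 0).
    """
    Q = 0.0
    used = []
    for _ in range(slots):
        # Hypothetical concave utility log(1 + x) stands in for a learned QoE model.
        x = max(levels, key=lambda lvl: V * math.log(1 + lvl) - Q * lvl)
        used.append(x)
        Q = max(Q + x - budget, 0.0)   # virtual queue update
    return used, Q

used, final_queue = drift_plus_penalty(levels=[0, 1, 2, 3], budget=1.5, V=10.0, slots=1000)
average_usage = sum(used) / len(used)
```

Early slots spend above the budget while the queue is small; as the queue grows, the per-slot rule backs off, so the time-averaged usage settles near the budget without the long-term constraint ever being solved for directly.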
