Two-Stage Resource Allocation in Reconfigurable Intelligent Surface Assisted Hybrid Networks via Multi-Player Bandits


Jun 09, 2024

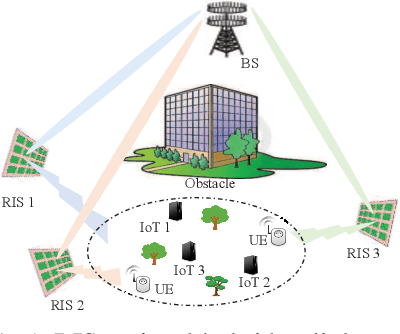

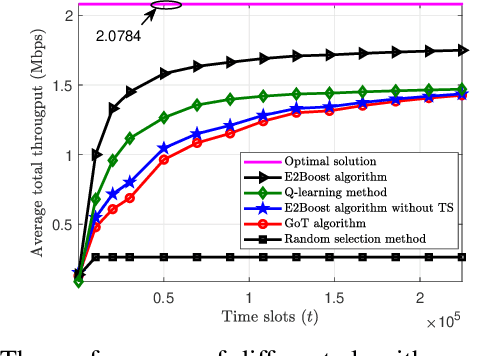

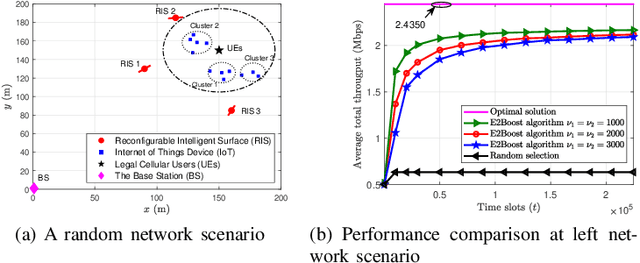

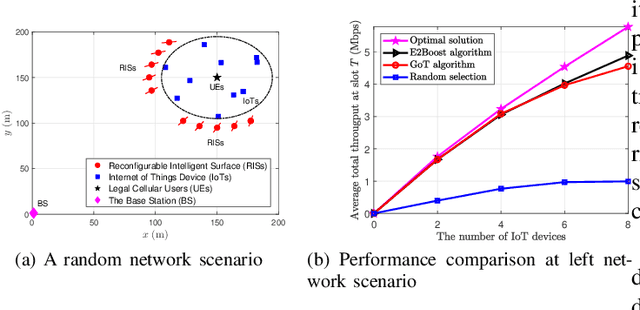

This paper considers a resource allocation problem in which several Internet-of-Things (IoT) devices send data to a base station (BS) with or without the help of a reconfigurable intelligent surface (RIS) assisted cellular network. The objective is to maximize the sum rate of all IoT devices by finding the optimal RIS and spreading factor (SF) for each device. Since these IoT devices lack prior information on the RISs and on the channel state information (CSI), achieving this goal requires a distributed, low-complexity resource allocation framework that can learn. We therefore model the problem as a two-stage multi-player multi-armed bandit (MPMAB) framework that learns the optimal RIS and SF sequentially. We then put forth an exploration and exploitation boosting (E2Boost) algorithm that solves this two-stage MPMAB problem by combining the $\epsilon$-greedy algorithm, the Thompson sampling (TS) algorithm, and a non-cooperative game method. We derive an upper bound on the regret of the proposed algorithm, i.e., $\mathcal{O}(\log^{1+\delta}_2 T)$, which grows near-logarithmically with the time horizon $T$. Numerical results show that the E2Boost algorithm outperforms existing methods and converges quickly. More importantly, thanks to the two-stage allocation mechanism, the proposed algorithm is not sensitive to the number of RIS and SF combinations, which can benefit high-density networks.
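To make the two-stage idea concrete, the following is a minimal single-device sketch and not the authors' E2Boost algorithm. It assumes that the first stage picks an RIS with $\epsilon$-greedy and the second stage picks an SF with Thompson sampling over Beta posteriors; the abstract does not say which method is used at which stage. It also assumes Bernoulli (success/failure) rewards and omits the multi-player collisions and the game-theoretic component entirely. The class name `TwoStageBandit`, the function `simulate`, and the success-probability table are all hypothetical.

```python
import random


class TwoStageBandit:
    """Illustrative two-stage learner: epsilon-greedy over RISs, then
    Thompson sampling over SFs for the chosen RIS (assumed assignment)."""

    def __init__(self, n_ris, n_sf, epsilon=0.1, seed=0):
        self.rng = random.Random(seed)
        self.epsilon = epsilon
        self.n_ris = n_ris
        self.n_sf = n_sf
        # Stage 1 statistics: pull counts and running mean reward per RIS.
        self.ris_counts = [0] * n_ris
        self.ris_means = [0.0] * n_ris
        # Stage 2 statistics: Beta(alpha, beta) posterior per (RIS, SF) pair.
        self.alpha = [[1.0] * n_sf for _ in range(n_ris)]
        self.beta = [[1.0] * n_sf for _ in range(n_ris)]

    def select(self):
        # Stage 1: explore a random RIS with probability epsilon,
        # otherwise exploit the RIS with the best running mean.
        if self.rng.random() < self.epsilon:
            ris = self.rng.randrange(self.n_ris)
        else:
            ris = max(range(self.n_ris), key=lambda i: self.ris_means[i])
        # Stage 2: draw one posterior sample per SF and take the largest.
        samples = [
            self.rng.betavariate(self.alpha[ris][j], self.beta[ris][j])
            for j in range(self.n_sf)
        ]
        sf = max(range(self.n_sf), key=lambda j: samples[j])
        return ris, sf

    def update(self, ris, sf, reward):
        # reward is 0 or 1 (Bernoulli outcome of one transmission).
        self.ris_counts[ris] += 1
        self.ris_means[ris] += (reward - self.ris_means[ris]) / self.ris_counts[ris]
        self.alpha[ris][sf] += reward
        self.beta[ris][sf] += 1 - reward


def simulate(success_prob, horizon=5000, epsilon=0.1, seed=0):
    """Run the learner against a hypothetical table success_prob[ris][sf]
    and return how often each (ris, sf) pair was played."""
    n_ris, n_sf = len(success_prob), len(success_prob[0])
    agent = TwoStageBandit(n_ris, n_sf, epsilon=epsilon, seed=seed)
    env_rng = random.Random(seed + 1)
    plays = {}
    for _ in range(horizon):
        ris, sf = agent.select()
        reward = 1 if env_rng.random() < success_prob[ris][sf] else 0
        agent.update(ris, sf, reward)
        plays[(ris, sf)] = plays.get((ris, sf), 0) + 1
    return plays


if __name__ == "__main__":
    # Made-up success probabilities: 2 RISs x 3 SFs.
    table = [[0.2, 0.4, 0.3], [0.3, 0.5, 0.9]]
    plays = simulate(table)
    print(max(plays, key=plays.get), plays)
```

Note that each stage only searches over its own arm set (the RISs, then the SFs of one RIS) rather than over every RIS and SF pair jointly, which is one way to read the abstract's remark about insensitivity to the number of combinations.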
