Liping Li

Hong Kong Quantum AI Lab Limited, Pak Shek Kok, Hong Kong SAR, China

Predictions of photophysical properties of phosphorescent platinum(II) complexes based on ensemble machine learning approach

Jan 08, 2023

Abstract: Phosphorescent metal complexes have been under intense investigation as emissive dopants for energy-efficient organic light-emitting diodes (OLEDs). Among them, cyclometalated Pt(II) complexes are widespread triplet emitters with color-tunable emissions. For their practical application as OLED emitters, there is a great need to develop Pt(II) complexes with a high radiative decay rate constant ($k_r$) and a high photoluminescence (PL) quantum yield, so an efficient and accurate prediction tool is highly desirable. Here, we develop a general protocol for accurate predictions of the emission wavelength, radiative decay rate constant, and PL quantum yield of phosphorescent Pt(II) emitters, based on the combination of a first-principles quantum mechanical method, machine learning (ML), and experimental calibration. A new dataset of phosphorescent Pt(II) emitters is constructed, with more than two hundred samples collected from the literature. Features containing pertinent electronic properties of the complexes are chosen. Our results demonstrate that ensemble learning models combined with stacking-based approaches exhibit the best performance: the squared correlation coefficient ($R^2$), mean absolute error (MAE), and root mean square error (RMSE) are 0.96, 7.21 nm, and 13.00 nm for emission wavelength prediction, and 0.81, 0.11, and 0.15 for PL quantum yield prediction. For the radiative decay rate constant ($k_r$), $R^2$ is 0.67, while MAE and RMSE are 0.21 and 0.25 (both in log scale), respectively. The accuracy of the protocol is further confirmed on 24 recently reported Pt(II) complexes, demonstrating its reliability for a broad palette of Pt(II) emitters. We expect this protocol to become a valuable tool for accelerating the rational design of novel OLED materials with desired properties.
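
The three figures of merit quoted above can be computed as in the following minimal sketch. It is not the authors' code: it follows the abstract's wording and treats $R^2$ as the squared Pearson correlation (which can differ from the coefficient of determination), and it assumes "log scale" for $k_r$ means base-10 logarithms. The function names are hypothetical.

```python
import math

def squared_correlation(y_true, y_pred):
    """Squared Pearson correlation between targets and predictions (assumes nonzero variances)."""
    n = len(y_true)
    mt = sum(y_true) / n
    mp = sum(y_pred) / n
    cov = sum((t - mt) * (p - mp) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mt) ** 2 for t in y_true)
    var_p = sum((p - mp) ** 2 for p in y_pred)
    return cov * cov / (var_t * var_p)

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def score(y_true, y_pred, log10=False):
    """Return (R^2, MAE, RMSE); use log10=True for k_r, which is scored in log scale (assumed base 10)."""
    if log10:
        y_true = [math.log10(v) for v in y_true]
        y_pred = [math.log10(v) for v in y_pred]
    return squared_correlation(y_true, y_pred), mae(y_true, y_pred), rmse(y_true, y_pred)
```

A prediction that is uniformly 10 nm too long, e.g. `score([500, 520, 540, 560], [510, 530, 550, 570])`, illustrates why all three numbers are reported together: the squared correlation stays at 1 while MAE and RMSE both equal 10 nm.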

Belief-selective Propagation Detection for MIMO Systems

Jun 27, 2022

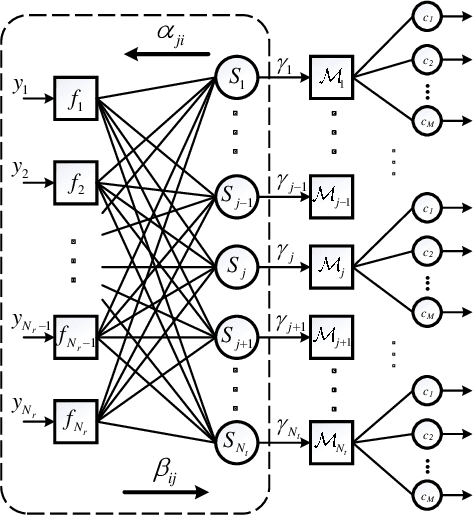

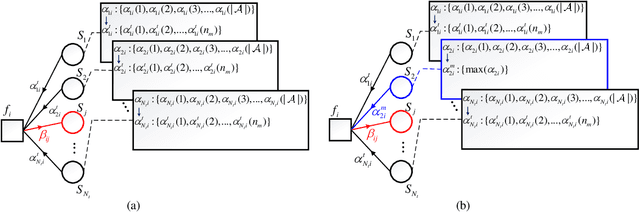

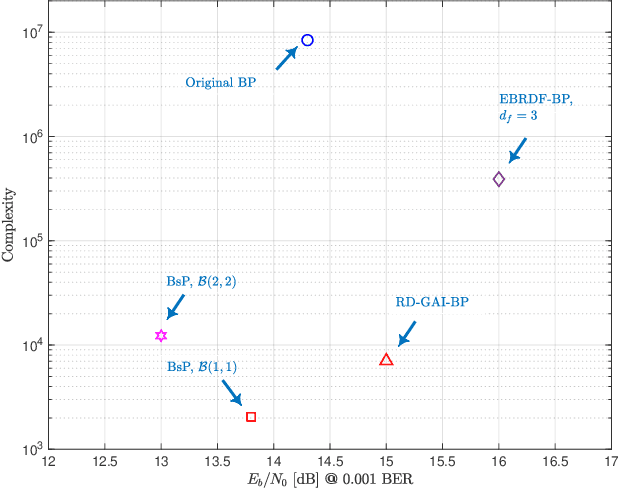

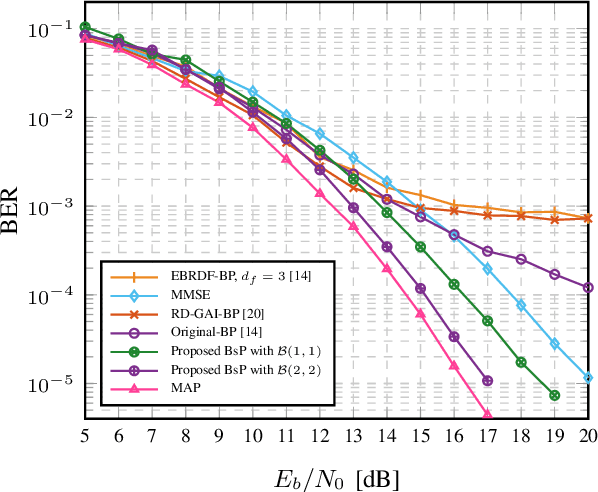

Abstract: Compared to linear MIMO detectors, the Belief Propagation (BP) detector has shown a greater capability of achieving near-optimal performance and is better suited to iterative cooperation with channel decoders. Aiming at real applications, recent works mainly reduce the complexity through simplified calculations, at the expense of performance. However, the complexity remains unsatisfactory, either growing exponentially or requiring exponentiation operations. Furthermore, due to the inherent loopy structure, existing BP detectors persistently encounter an error floor in the high signal-to-noise ratio (SNR) region, which becomes even worse with calculation approximations. This work proposes a revised BP detector, named the Belief-selective Propagation (BsP) detector, which selectively utilizes only the trusted incoming messages with sufficiently large a priori probabilities for updates. Two proposed strategies, symbol-based truncation (ST) and edge-based simplification (ES), reduce the complexity by orders of magnitude relative to the Original-BP, while greatly relieving the error floor issue over a wide range of antenna and modulation combinations. For the $16$-QAM $8 \times 4$ MIMO system, the $\mathcal{B}(1,1)$ BsP detector achieves more than 4 dB performance gain (at $\text{BER}=10^{-4}$) with roughly 4 orders of magnitude lower complexity than the Original-BP detector. Trade-offs between performance and complexity for different application requirements can be conveniently obtained by configuring the ST and ES parameters.
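
To make the selection idea concrete, the sketch below applies it to a single incoming message, i.e. a probability vector over the constellation symbols: it keeps the `k` most probable symbols and renormalises, so later updates only iterate over `k` candidates. This is one plausible, simplified reading of symbol-based truncation for illustration only; `truncate_message` and `k` are hypothetical names, and the paper's exact selection rule, the edge-based simplification, and the meaning of the two indices in $\mathcal{B}(1,1)$ are not reproduced.

```python
def truncate_message(probs, k):
    """Keep the k most probable symbols of an incoming message and renormalise.

    probs: a priori probabilities over the constellation symbols (should sum to 1).
    Returns a vector of the same length in which the untrusted symbols are zeroed.
    """
    if not 1 <= k <= len(probs):
        raise ValueError("k must be between 1 and the constellation size")
    # indices of the k largest probabilities (ties broken by lower index)
    keep = sorted(range(len(probs)), key=lambda i: (-probs[i], i))[:k]
    total = sum(probs[i] for i in keep)
    out = [0.0] * len(probs)
    for i in keep:
        out[i] = probs[i] / total  # renormalise over the trusted symbols only
    return out
```

If a BP message update marginalises over the symbols of the other $N_t - 1$ transmit antennas, truncating each of them to `k` candidates shrinks the enumeration from $M^{N_t-1}$ to $k^{N_t-1}$ terms for an $M$-ary constellation, which is one way orders-of-magnitude savings can arise; this is an assumption about the detector structure, not a figure from the paper.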

3D modelling of survey scene from images enhanced with a multi-exposure fusion

Nov 10, 2021

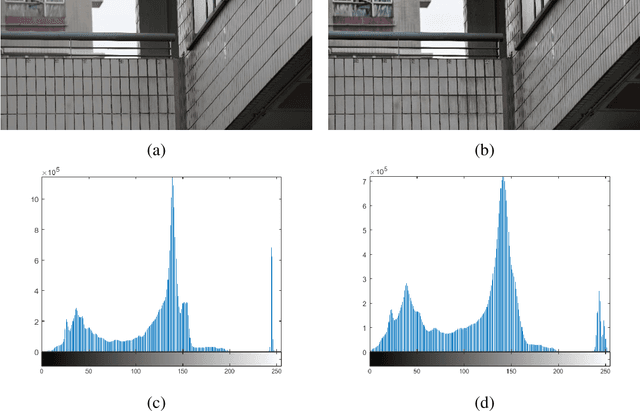

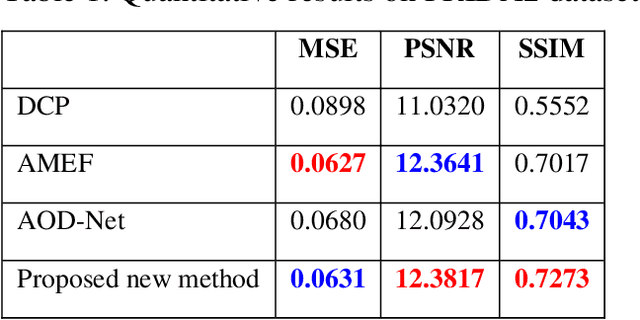

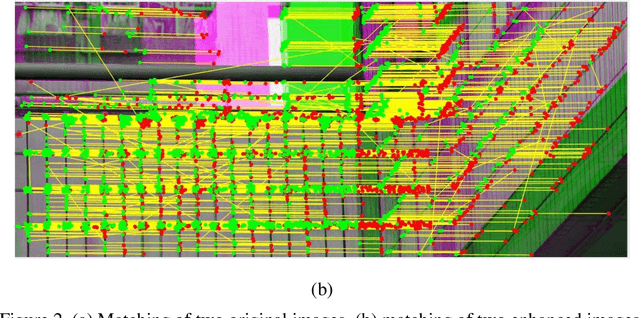

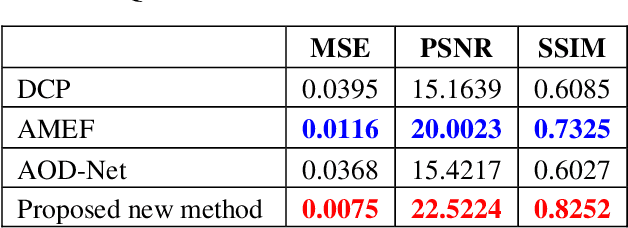

Abstract: In current practice, scene surveys are carried out by workers using total stations. The method has high accuracy, but it incurs high costs if continuous monitoring is needed. Photogrammetry-based techniques, which use relatively inexpensive digital cameras, have gained wide application in many fields. Besides point measurement, photogrammetry can also create a three-dimensional (3D) model of the scene. Accurate 3D model reconstruction depends on high-quality images; degraded images result in large errors in the reconstructed 3D model. In this paper, we propose a method that improves the visibility of the images and thereby reduces the errors of the 3D scene model. The idea is inspired by image dehazing. Each original image is first transformed into multiple exposure images by means of gamma-correction operations and adaptive histogram equalization. The transformed images are analyzed by computing local binary patterns. The image is then enhanced, with each pixel generated from the set of transformed image pixels weighted by a function of the local pattern feature and image saturation. Performance has been evaluated on benchmark image dehazing datasets, and experiments have been carried out on outdoor and indoor surveys. Our analysis finds that the method works on different types of degradation that exist in both outdoor and indoor images. When fed into photogrammetry software, the enhanced images yield 3D scene models with sub-millimeter mean errors.
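
The fusion step can be sketched on a grayscale image stored as rows of intensities in [0, 1]: each pixel is gamma-corrected into several pseudo-exposures, and the results are blended with per-pixel normalised weights. As a loudly flagged simplification, the weight here is a Gaussian "well-exposedness" score around mid-grey rather than the paper's function of local binary patterns and saturation, adaptive histogram equalization is omitted, and the gamma values and `sigma` are illustrative choices.

```python
import math

GAMMAS = (0.5, 1.0, 2.0)  # hypothetical exposure set: gamma < 1 brightens, gamma > 1 darkens

def well_exposedness(v, sigma=0.2):
    """Stand-in weight (NOT the paper's LBP/saturation weight): favours mid-grey values."""
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))

def fuse(image, gammas=GAMMAS):
    """Multi-exposure fusion of one grayscale image given as rows of floats in [0, 1]."""
    fused = []
    for row in image:
        out_row = []
        for p in row:
            exposures = [p ** g for g in gammas]  # pseudo-exposures via gamma correction
            weights = [well_exposedness(v) for v in exposures]
            total = sum(weights)  # Gaussian weights are positive, so total > 0
            # per-pixel weighted average of the transformed pixel values
            out_row.append(sum(w * v for w, v in zip(weights, exposures)) / total)
        fused.append(out_row)
    return fused
```

Because the output is a convex combination of values in [0, 1], it stays in range, and dark pixels are pulled up by the brightening exposure that receives the largest weight.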

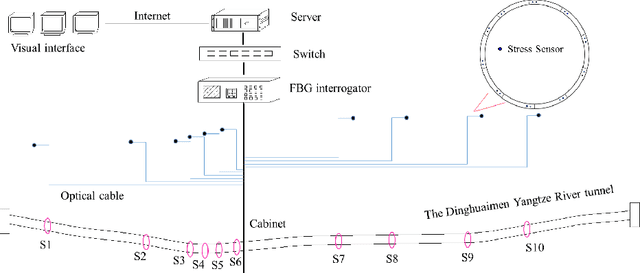

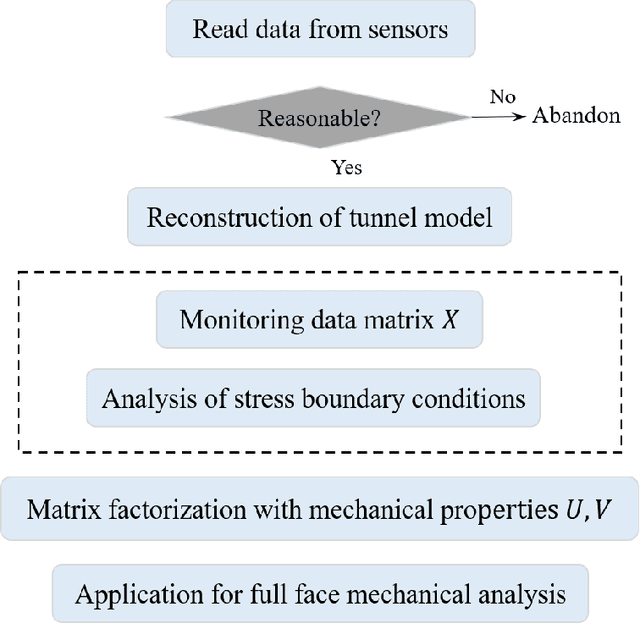

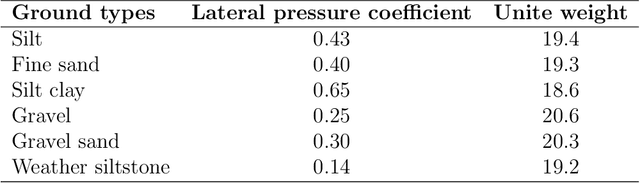

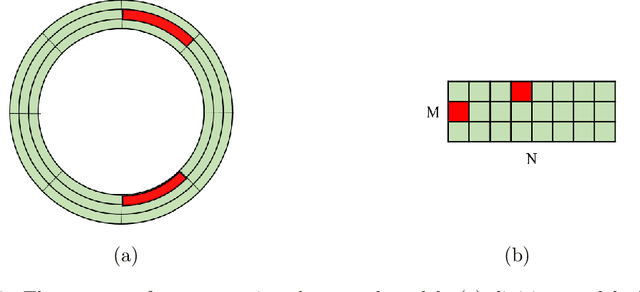

Analysis for full face mechanical behaviors through spatial deduction model with real-time monitoring data

Sep 27, 2021

Abstract: Mechanical analysis of the full face of a tunnel structure is crucial for maintaining stability, but it is challenging for both classical analytical solutions and data analysis. To this end, this study develops a spatial deduction model that obtains the full-face mechanical behaviors by integrating mechanical properties into a purely data-driven model. The spatial tunnel structure is divided into many parts and reconstructed in matrix form. Then, the external load applied on the structure in the field is considered to study the mechanical behaviors of the tunnel. Based on the limited monitoring data observed in the matrix and the mechanical analysis results, a double-driven model is developed to obtain the full-face information, in which the data-driven model is dominant and the mechanical constraint is secondary. To verify the spatial deduction model, a cross-test was conducted by assuming that part of the monitoring data is unknown and treating those points as test points. The good agreement between the deduced results and the actual monitoring results indicates that the proposed model is reasonable. The model was therefore employed to deduce both the current and historical performance of the tunnel full face, which is crucial for preventing structural disasters.
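
The "data first, mechanics second" blending and the cross-test can be illustrated on a small grid standing in for the matrix-form tunnel face. In this hypothetical sketch an unknown cell is estimated from its observed 4-neighbours (a simple stand-in for the paper's data-driven model) and blended with a value from mechanical analysis using a weight `alpha` > 0.5, so that the data dominate; `deduce`, `cross_test`, and `alpha` are illustrative names, not the authors' API.

```python
def deduce(grid, prior, alpha=0.8):
    """Fill unknown cells (None) of a monitoring matrix.

    grid:  rows of observed values, None where no monitoring point exists.
    prior: same-shape values from mechanical analysis (the secondary constraint).
    alpha: weight on the data-driven estimate; alpha > 0.5 keeps the data dominant.
    """
    rows, cols = len(grid), len(grid[0])
    result = [row[:] for row in grid]
    for i in range(rows):
        for j in range(cols):
            if grid[i][j] is not None:
                continue  # observed cells are kept as measured
            neighbours = [grid[a][b]
                          for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                          if 0 <= a < rows and 0 <= b < cols and grid[a][b] is not None]
            # data-driven estimate: mean of observed neighbours, else fall back to the prior
            data_est = sum(neighbours) / len(neighbours) if neighbours else prior[i][j]
            result[i][j] = alpha * data_est + (1 - alpha) * prior[i][j]
    return result

def cross_test(grid, prior, alpha=0.8):
    """Hide each observed cell in turn, deduce it, and return the mean absolute error."""
    errors = []
    for i, row in enumerate(grid):
        for j, value in enumerate(row):
            if value is None:
                continue
            trial = [r[:] for r in grid]
            trial[i][j] = None  # pretend this monitoring point is unknown
            errors.append(abs(deduce(trial, prior, alpha)[i][j] - value))
    return sum(errors) / len(errors)
```

For example, `deduce([[2.0, None, 4.0]], [[0.0, 5.0, 0.0]])` fills the gap with 0.8 × 3.0 + 0.2 × 5.0 = 3.4, sitting closer to the neighbour average than to the mechanical value.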

A Communication Efficient Vertical Federated Learning Framework

Dec 27, 2019

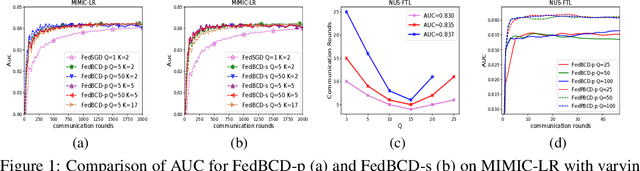

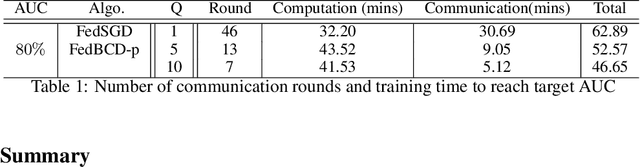

Abstract: One critical challenge in applying today's Artificial Intelligence (AI) technologies to real-world applications is the common existence of data silos across different organizations. Due to legal, privacy, and other practical constraints, data from different organizations cannot be easily integrated. Federated learning (FL), especially vertical FL (VFL), allows multiple parties that hold different sets of attributes about the same users to collaboratively build models while preserving user privacy. However, communication overhead is a principal bottleneck, since existing VFL protocols require per-iteration communication among all parties. In this paper, we propose Federated Stochastic Block Coordinate Descent (FedBCD) to effectively reduce the number of communication rounds for VFL. We show that when the batch size, sample size, and number of local iterations are selected appropriately, the algorithm requires $\mathcal{O}(\sqrt{T})$ communication rounds to achieve $\mathcal{O}(1/\sqrt{T})$ accuracy. Finally, we demonstrate the performance of FedBCD on several models and datasets, and on a large-scale industrial platform for VFL.
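
The communication saving comes from running several local updates per exchange. The sketch below is a simplified two-party illustration with one feature per party and a squared loss: in each round the parties exchange their partial predictions once, then each performs `local_steps` gradient steps on its own parameter using the partner's now-stale contribution. For brevity both parties are assumed to see the labels and the data are noise-free (in practice the labels usually sit with one party); the function name and step sizes are hypothetical, and no privacy mechanism is modelled.

```python
def fedbcd_two_party(a, b, y, rounds=200, local_steps=5, lr=0.05):
    """Simplified FedBCD-style training of y ~ theta_a * a + theta_b * b.

    Party A holds feature a, party B holds feature b (a vertical split of the same samples).
    Each round costs one exchange of partial predictions; returns (theta_a, theta_b).
    """
    n = len(y)
    theta_a = theta_b = 0.0
    for _ in range(rounds):
        # one communication round: exchange partial predictions z_a, z_b
        z_a = [theta_a * ai for ai in a]
        z_b = [theta_b * bi for bi in b]
        new_a, new_b = theta_a, theta_b
        for _ in range(local_steps):
            # party A: gradient of (1/2n) * sum(residual^2) w.r.t. theta_a, with stale z_b
            grad_a = sum(ai * (new_a * ai + zb - yi) for ai, zb, yi in zip(a, z_b, y)) / n
            new_a -= lr * grad_a
            # party B: same, with stale z_a
            grad_b = sum(bi * (za + new_b * bi - yi) for bi, za, yi in zip(b, z_a, y)) / n
            new_b -= lr * grad_b
        theta_a, theta_b = new_a, new_b
    return theta_a, theta_b
```

With `local_steps=1` this reduces to exchanging after every gradient step; raising it trades some staleness for fewer exchanges, which is the knob the abstract's round-complexity result refers to.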

Communication-Censored Distributed Stochastic Gradient Descent

Sep 09, 2019

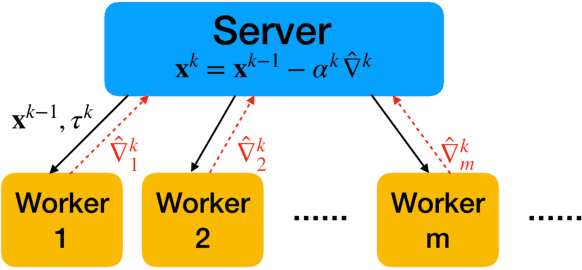

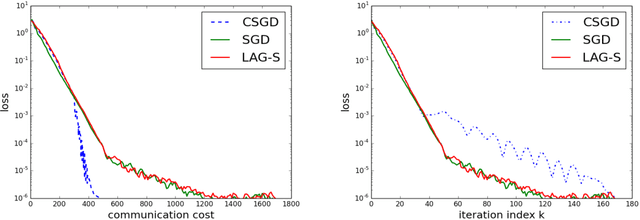

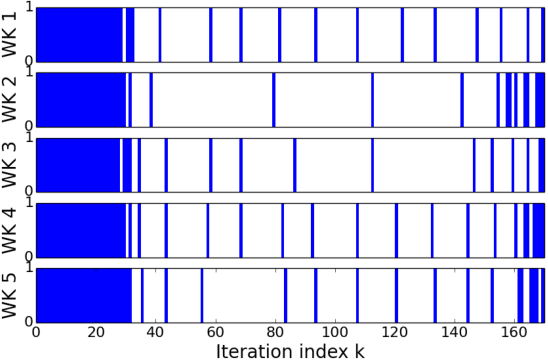

Abstract: This paper develops a communication-efficient algorithm to solve a stochastic optimization problem defined over a distributed network, aiming to reduce the burdensome communication in applications such as distributed machine learning. Unlike existing works based on quantization and sparsification, we introduce a communication-censoring technique to reduce the transmissions of variables, which leads to our communication-Censored distributed Stochastic Gradient Descent (CSGD) algorithm. Specifically, in CSGD, the latest mini-batch stochastic gradient at a worker is transmitted to the server only if it is sufficiently informative. When the latest gradient is not available, the stale one is reused at the server. To implement this communication-censoring strategy, the batch size is increased over the iterations to alleviate the effect of gradient noise. Theoretically, CSGD enjoys the same order of convergence rate as SGD, but effectively reduces communication. Numerical experiments further demonstrate the sizable communication savings of CSGD.
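
The censoring rule can be sketched on a toy problem $f(x) = \frac{1}{n}\sum_i \frac{1}{2}(x - c_i)^2$, where worker $i$'s gradient is $x - c_i$. Here a worker transmits only when its new gradient differs from the one it last sent by at least a threshold, and the server otherwise reuses the stale copy. The squared-difference test and the geometrically decaying threshold `tau0 * decay**k` are assumptions for illustration, not the paper's exact criterion, and gradients are exact (no mini-batch noise, hence no growing batch size) so the run is deterministic.

```python
def csgd(targets, x0=0.0, iters=100, lr=0.5, tau0=1.0, decay=0.8):
    """Communication-censored gradient descent on f(x) = mean_i 0.5 * (x - c_i)^2.

    Returns (x, number_of_transmissions).
    """
    n = len(targets)
    last_sent = [None] * n  # gradient each worker last transmitted (the server holds the same copy)
    x, transmissions = x0, 0
    for k in range(iters):
        tau = tau0 * decay ** k  # hypothetical decaying censoring threshold
        for i, c in enumerate(targets):
            g = x - c  # worker i's current gradient
            if last_sent[i] is None or (g - last_sent[i]) ** 2 >= tau:
                last_sent[i] = g  # informative enough: transmit
                transmissions += 1
            # otherwise censored: the server keeps using last_sent[i]
        x -= lr * sum(last_sent) / n
    return x, transmissions
```

With targets 1, 2 and 3 the iterate reaches the optimum 2.0 after only 9 transmissions, whereas uncensored gradient descent over the same 100 iterations would send 300.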

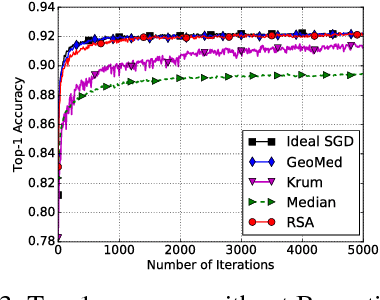

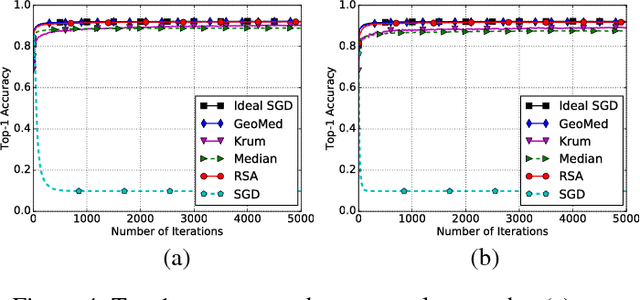

RSA: Byzantine-Robust Stochastic Aggregation Methods for Distributed Learning from Heterogeneous Datasets

Nov 09, 2018

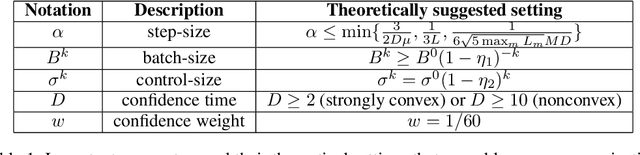

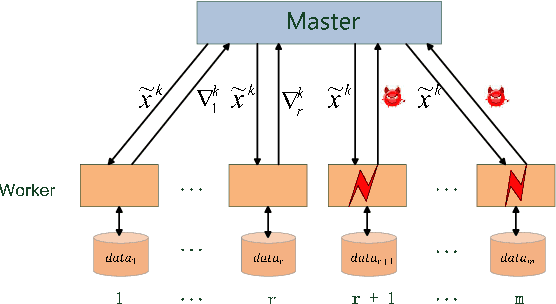

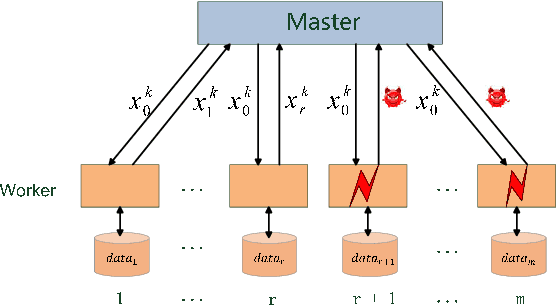

Abstract: In this paper, we propose a class of robust stochastic subgradient methods for distributed learning from heterogeneous datasets in the presence of an unknown number of Byzantine workers. During the learning process, the Byzantine workers may send arbitrary incorrect messages to the master due to data corruption, communication failures, or malicious attacks, and consequently bias the learned model. The key to the proposed methods is a regularization term incorporated into the objective function so as to robustify the learning task and mitigate the negative effects of Byzantine attacks. The resultant subgradient-based algorithms are termed Byzantine-Robust Stochastic Aggregation methods, hence the acronym RSA used henceforth. In contrast to most existing algorithms, RSA does not rely on the assumption that the data are independent and identically distributed (i.i.d.) across the workers, and hence suits a wider class of applications. Theoretically, we show that: i) RSA converges to a near-optimal solution with a learning error that depends on the number of Byzantine workers; ii) the convergence rate of RSA under Byzantine attacks is the same as that of the stochastic gradient descent method without Byzantine attacks. Numerically, experiments on a real dataset corroborate the competitive performance of RSA and its reduced complexity compared to state-of-the-art alternatives.
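
The effect of the regularization term can be sketched with scalar models in the spirit of an $\ell_1$-penalised variant: honest worker $i$ descends $\frac{1}{2}(x_i - c_i)^2 + \lambda |x_i - x_0|$, and the master moves $x_0$ by $\lambda$ times a sum of signs of its differences from the reported values. Because only signs enter, each reported value, honest or Byzantine, shifts the master by at most `lr * lam` per iteration. The quadratic local losses, exact gradients, constant step size, and data-free master are simplifying assumptions, and `rsa` is a hypothetical name.

```python
def sign(v):
    """Return -1, 0 or 1."""
    return (v > 0) - (v < 0)

def rsa(honest_targets, byzantine_values, iters=5000, lr=0.01, lam=0.5):
    """l1-penalised RSA-style updates on scalars; returns (master x0, honest iterates)."""
    x0 = 0.0
    xs = [0.0] * len(honest_targets)
    for _ in range(iters):
        # Byzantine workers report arbitrary values instead of genuine iterates
        reported = xs + list(byzantine_values)
        # master: subgradient step on lam * sum |x0 - x_i| (no local data assumed)
        new_x0 = x0 - lr * lam * sum(sign(x0 - xi) for xi in reported)
        # honest workers: gradient of the local loss plus the l1 consensus penalty
        xs = [xi - lr * ((xi - c) + lam * sign(xi - x0)) for xi, c in zip(xs, honest_targets)]
        x0 = new_x0
    return x0, xs
```

With honest targets 1, 2, 3 and one Byzantine worker stuck at $10^6$, the master settles near 2.5, whereas simple averaging of the four reported values would land around 250000.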
