Lianbo Ma

Member, IEEE

Tail-Aware Data Augmentation for Long-Tail Sequential Recommendation

Jan 16, 2026Abstract:Sequential recommendation (SR) learns user preferences based on their historical interaction sequences and provides personalized suggestions. In real-world scenarios, most users can only interact with a handful of items, while the majority of items are seldom consumed. This pervasive long-tail challenge limits the model's ability to learn user preferences. Despite previous efforts to enrich tail items/users with knowledge from head parts or improve tail learning through additional contextual information, they still face the following issues: 1) They struggle to improve the situation where interactions of tail users/items are scarce, leading to incomplete preferences learning for the tail parts. 2) Existing methods often degrade overall or head parts performance when improving accuracy for tail users/items, thereby harming the user experience. We propose Tail-Aware Data Augmentation (TADA) for long-tail sequential recommendation, which enhances the interaction frequency for tail items/users while maintaining head performance, thereby promoting the model's learning capabilities for the tail. Specifically, we first capture the co-occurrence and correlation among low-popularity items by a linear model. Building upon this, we design two tail-aware augmentation operators, T-Substitute and T-Insert. The former replaces the head item with a relevant item, while the latter utilizes co-occurrence relationships to extend the original sequence by incorporating both head and tail items. The augmented and original sequences are mixed at the representation level to preserve preference knowledge. We further extend the mix operation across different tail-user sequences and augmented sequences to generate richer augmented samples, thereby improving tail performance. Comprehensive experiments demonstrate the superiority of our method. The codes are provided at https://github.com/KingGugu/TADA.

Learning from Loss Landscape: Generalizable Mixed-Precision Quantization via Adaptive Sharpness-Aware Gradient Aligning

May 08, 2025Abstract:Mixed Precision Quantization (MPQ) has become an essential technique for optimizing neural network by determining the optimal bitwidth per layer. Existing MPQ methods, however, face a major hurdle: they require a computationally expensive search for quantization policies on large-scale datasets. To resolve this issue, we introduce a novel approach that first searches for quantization policies on small datasets and then generalizes them to large-scale datasets. This approach simplifies the process, eliminating the need for large-scale quantization fine-tuning and only necessitating model weight adjustment. Our method is characterized by three key techniques: sharpness-aware minimization for enhanced quantization generalization, implicit gradient direction alignment to handle gradient conflicts among different optimization objectives, and an adaptive perturbation radius to accelerate optimization. Both theoretical analysis and experimental results validate our approach. Using the CIFAR10 dataset (just 0.5\% the size of ImageNet training data) for MPQ policy search, we achieved equivalent accuracy on ImageNet with a significantly lower computational cost, while improving efficiency by up to 150% over the baselines.

Revisiting Multimodal Fusion for 3D Anomaly Detection from an Architectural Perspective

Dec 23, 2024Abstract:Existing efforts to boost multimodal fusion of 3D anomaly detection (3D-AD) primarily concentrate on devising more effective multimodal fusion strategies. However, little attention was devoted to analyzing the role of multimodal fusion architecture (topology) design in contributing to 3D-AD. In this paper, we aim to bridge this gap and present a systematic study on the impact of multimodal fusion architecture design on 3D-AD. This work considers the multimodal fusion architecture design at the intra-module fusion level, i.e., independent modality-specific modules, involving early, middle or late multimodal features with specific fusion operations, and also at the inter-module fusion level, i.e., the strategies to fuse those modules. In both cases, we first derive insights through theoretically and experimentally exploring how architectural designs influence 3D-AD. Then, we extend SOTA neural architecture search (NAS) paradigm and propose 3D-ADNAS to simultaneously search across multimodal fusion strategies and modality-specific modules for the first time.Extensive experiments show that 3D-ADNAS obtains consistent improvements in 3D-AD across various model capacities in terms of accuracy, frame rate, and memory usage, and it exhibits great potential in dealing with few-shot 3D-AD tasks.

HEP-NAS: Towards Efficient Few-shot Neural Architecture Search via Hierarchical Edge Partitioning

Dec 14, 2024Abstract:One-shot methods have significantly advanced the field of neural architecture search (NAS) by adopting weight-sharing strategy to reduce search costs. However, the accuracy of performance estimation can be compromised by co-adaptation. Few-shot methods divide the entire supernet into individual sub-supernets by splitting edge by edge to alleviate this issue, yet neglect relationships among edges and result in performance degradation on huge search space. In this paper, we introduce HEP-NAS, a hierarchy-wise partition algorithm designed to further enhance accuracy. To begin with, HEP-NAS treats edges sharing the same end node as a hierarchy, permuting and splitting edges within the same hierarchy to directly search for the optimal operation combination for each intermediate node. This approach aligns more closely with the ultimate goal of NAS. Furthermore, HEP-NAS selects the most promising sub-supernet after each segmentation, progressively narrowing the search space in which the optimal architecture may exist. To improve performance evaluation of sub-supernets, HEP-NAS employs search space mutual distillation, stabilizing the training process and accelerating the convergence of each individual sub-supernet. Within a given budget, HEP-NAS enables the splitting of all edges and gradually searches for architectures with higher accuracy. Experimental results across various datasets and search spaces demonstrate the superiority of HEP-NAS compared to state-of-the-art methods.

One-Step Forward and Backtrack: Overcoming Zig-Zagging in Loss-Aware Quantization Training

Jan 30, 2024Abstract:Weight quantization is an effective technique to compress deep neural networks for their deployment on edge devices with limited resources. Traditional loss-aware quantization methods commonly use the quantized gradient to replace the full-precision gradient. However, we discover that the gradient error will lead to an unexpected zig-zagging-like issue in the gradient descent learning procedures, where the gradient directions rapidly oscillate or zig-zag, and such issue seriously slows down the model convergence. Accordingly, this paper proposes a one-step forward and backtrack way for loss-aware quantization to get more accurate and stable gradient direction to defy this issue. During the gradient descent learning, a one-step forward search is designed to find the trial gradient of the next-step, which is adopted to adjust the gradient of current step towards the direction of fast convergence. After that, we backtrack the current step to update the full-precision and quantized weights through the current-step gradient and the trial gradient. A series of theoretical analysis and experiments on benchmark deep models have demonstrated the effectiveness and competitiveness of the proposed method, and our method especially outperforms others on the convergence performance.

Survey on Evolutionary Deep Learning: Principles, Algorithms, Applications and Open Issues

Aug 23, 2022

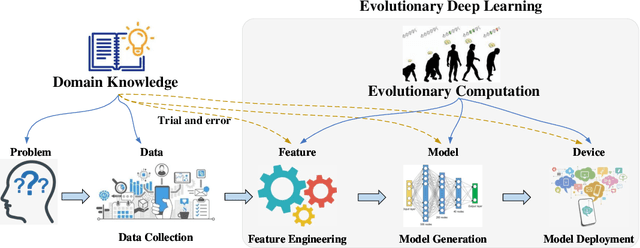

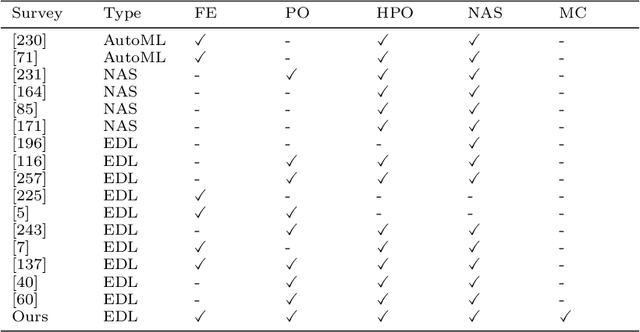

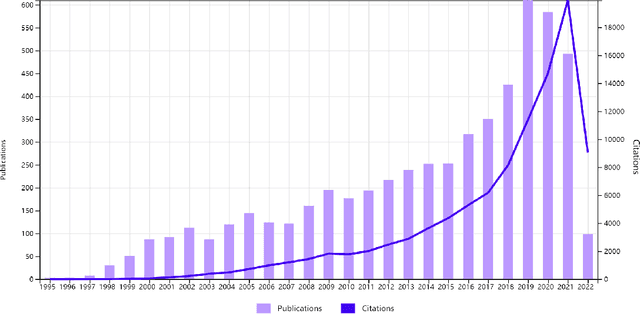

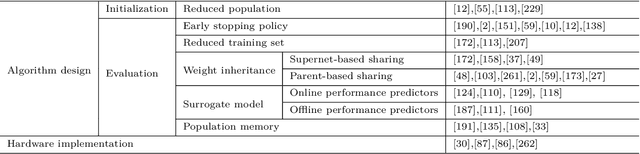

Abstract:Over recent years, there has been a rapid development of deep learning (DL) in both industry and academia fields. However, finding the optimal hyperparameters of a DL model often needs high computational cost and human expertise. To mitigate the above issue, evolutionary computation (EC) as a powerful heuristic search approach has shown significant merits in the automated design of DL models, so-called evolutionary deep learning (EDL). This paper aims to analyze EDL from the perspective of automated machine learning (AutoML). Specifically, we firstly illuminate EDL from machine learning and EC and regard EDL as an optimization problem. According to the DL pipeline, we systematically introduce EDL methods ranging from feature engineering, model generation, to model deployment with a new taxonomy (i.e., what and how to evolve/optimize), and focus on the discussions of solution representation and search paradigm in handling the optimization problem by EC. Finally, key applications, open issues and potentially promising lines of future research are suggested. This survey has reviewed recent developments of EDL and offers insightful guidelines for the development of EDL.

Towards Fairness-Aware Multi-Objective Optimization

Jul 22, 2022

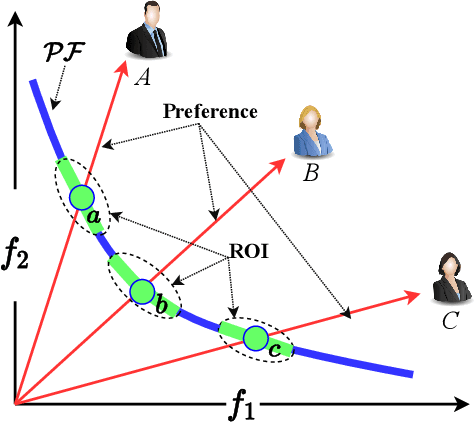

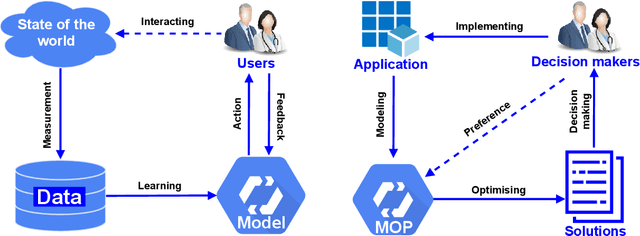

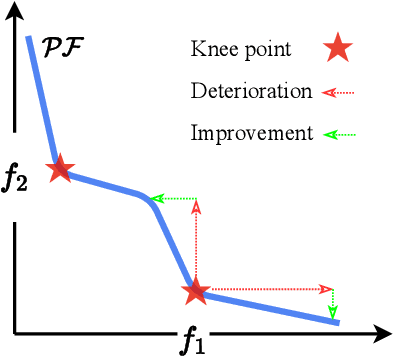

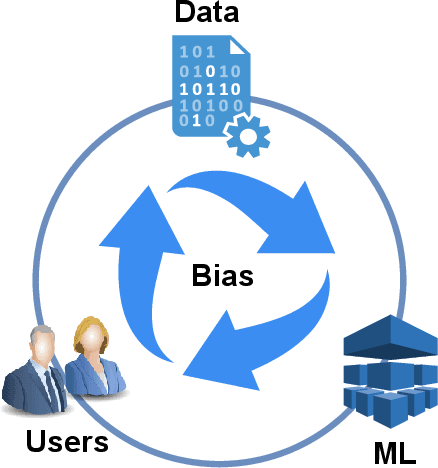

Abstract:Recent years have seen the rapid development of fairness-aware machine learning in mitigating unfairness or discrimination in decision-making in a wide range of applications. However, much less attention has been paid to the fairness-aware multi-objective optimization, which is indeed commonly seen in real life, such as fair resource allocation problems and data driven multi-objective optimization problems. This paper aims to illuminate and broaden our understanding of multi-objective optimization from the perspective of fairness. To this end, we start with a discussion of user preferences in multi-objective optimization and then explore its relationship to fairness in machine learning and multi-objective optimization. Following the above discussions, representative cases of fairness-aware multiobjective optimization are presented, further elaborating the importance of fairness in traditional multi-objective optimization, data-driven optimization and federated optimization. Finally, challenges and opportunities in fairness-aware multi-objective optimization are addressed. We hope that this article makes a small step forward towards understanding fairness in the context of optimization and promote research interest in fairness-aware multi-objective optimization.

How to Simplify Search: Classification-wise Pareto Evolution for One-shot Neural Architecture Search

Sep 14, 2021

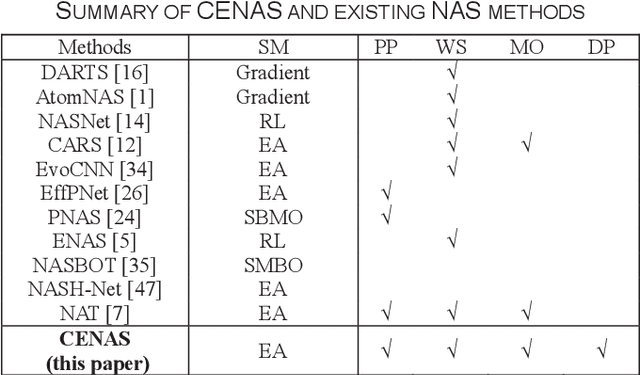

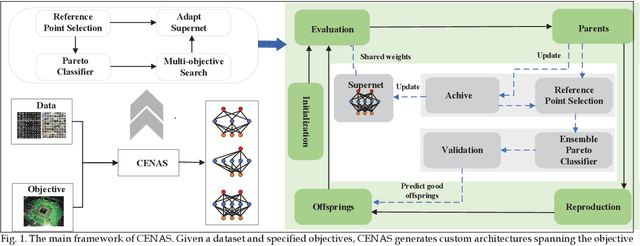

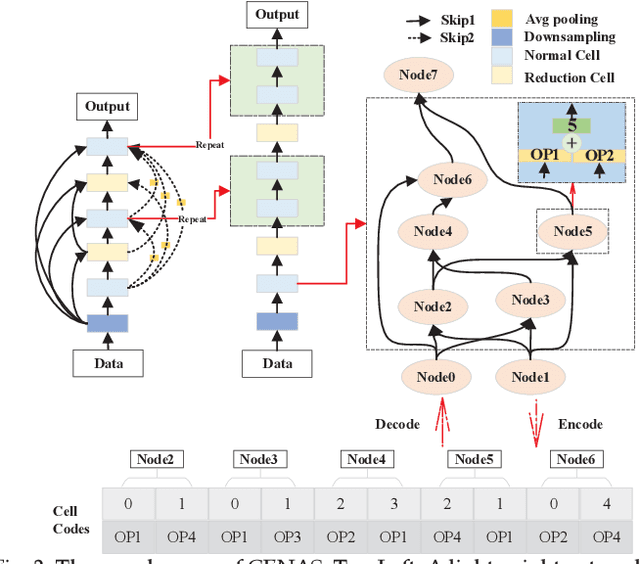

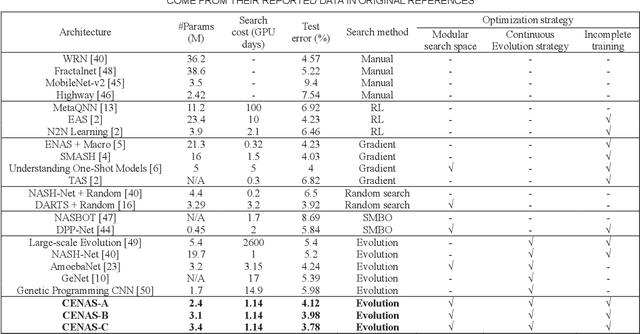

Abstract:In the deployment of deep neural models, how to effectively and automatically find feasible deep models under diverse design objectives is fundamental. Most existing neural architecture search (NAS) methods utilize surrogates to predict the detailed performance (e.g., accuracy and model size) of a candidate architecture during the search, which however is complicated and inefficient. In contrast, we aim to learn an efficient Pareto classifier to simplify the search process of NAS by transforming the complex multi-objective NAS task into a simple Pareto-dominance classification task. To this end, we propose a classification-wise Pareto evolution approach for one-shot NAS, where an online classifier is trained to predict the dominance relationship between the candidate and constructed reference architectures, instead of using surrogates to fit the objective functions. The main contribution of this study is to change supernet adaption into a Pareto classifier. Besides, we design two adaptive schemes to select the reference set of architectures for constructing classification boundary and regulate the rate of positive samples over negative ones, respectively. We compare the proposed evolution approach with state-of-the-art approaches on widely-used benchmark datasets, and experimental results indicate that the proposed approach outperforms other approaches and have found a number of neural architectures with different model sizes ranging from 2M to 6M under diverse objectives and constraints.

Effective Cascade Dual-Decoder Model for Joint Entity and Relation Extraction

Jun 27, 2021

Abstract:Extracting relational triples from texts is a fundamental task in knowledge graph construction. The popular way of existing methods is to jointly extract entities and relations using a single model, which often suffers from the overlapping triple problem. That is, there are multiple relational triples that share the same entities within one sentence. In this work, we propose an effective cascade dual-decoder approach to extract overlapping relational triples, which includes a text-specific relation decoder and a relation-corresponded entity decoder. Our approach is straightforward: the text-specific relation decoder detects relations from a sentence according to its text semantics and treats them as extra features to guide the entity extraction; for each extracted relation, which is with trainable embedding, the relation-corresponded entity decoder detects the corresponding head and tail entities using a span-based tagging scheme. In this way, the overlapping triple problem is tackled naturally. Experiments on two public datasets demonstrate that our proposed approach outperforms state-of-the-art methods and achieves better F1 scores under the strict evaluation metric. Our implementation is available at https://github.com/prastunlp/DualDec.

Composing Knowledge Graph Embeddings via Word Embeddings

Sep 09, 2019

Abstract:Learning knowledge graph embedding from an existing knowledge graph is very important to knowledge graph completion. For a fact $(h,r,t)$ with the head entity $h$ having a relation $r$ with the tail entity $t$, the current approaches aim to learn low dimensional representations $(\mathbf{h},\mathbf{r},\mathbf{t})$, each of which corresponds to the elements in $(h, r, t)$, respectively. As $(\mathbf{h},\mathbf{r},\mathbf{t})$ is learned from the existing facts within a knowledge graph, these representations can not be used to detect unknown facts (if the entities or relations never occur in the knowledge graph). This paper proposes a new approach called TransW, aiming to go beyond the current work by composing knowledge graph embeddings using word embeddings. Given the fact that an entity or a relation contains one or more words (quite often), it is sensible to learn a mapping function from word embedding spaces to knowledge embedding spaces, which shows how entities are constructed using human words. More importantly, composing knowledge embeddings using word embeddings makes it possible to deal with the emerging new facts (either new entities or relations). Experimental results using three public datasets show the consistency and outperformance of the proposed TransW.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge