Lennart Svensson

Infrastructure-based Autonomous Mobile Robots for Internal Logistics -- Challenges and Future Perspectives

Dec 17, 2025

Abstract:The adoption of Autonomous Mobile Robots (AMRs) for internal logistics is accelerating, with most solutions emphasizing decentralized, onboard intelligence. While AMRs in indoor environments like factories can be supported by infrastructure, involving external sensors and computational resources, such systems remain underexplored in the literature. This paper presents a comprehensive overview of infrastructure-based AMR systems, outlining key opportunities and challenges. To support this, we introduce a reference architecture combining infrastructure-based sensing, on-premise cloud computing, and onboard autonomy. Based on the architecture, we review core technologies for localization, perception, and planning. We demonstrate the approach in a real-world deployment in a heavy-vehicle manufacturing environment and summarize findings from a user experience (UX) evaluation. Our aim is to provide a holistic foundation for future development of scalable, robust, and human-compatible AMR systems in complex industrial environments.

Probabilistic Trajectory GOSPA: A Metric for Uncertainty-Aware Multi-Object Tracking Performance Evaluation

Jun 18, 2025

Abstract:This paper presents a generalization of the trajectory generalized optimal sub-pattern assignment (GOSPA) metric for evaluating multi-object tracking algorithms that provide trajectory estimates with track-level uncertainties. This metric builds on the recently introduced probabilistic GOSPA metric to account for both the existence and state estimation uncertainties of individual object states. Similar to trajectory GOSPA (TGOSPA), it can be formulated as a multidimensional assignment problem, and its linear programming relaxation--also a valid metric--is computable in polynomial time. Additionally, this metric retains the interpretability of TGOSPA, and we show that its decomposition yields intuitive cost terms associated with expected localization error and existence probability mismatch error for properly detected objects, expected missed and false detection error, and track switch error. The effectiveness of the proposed metric is demonstrated through a simulation study.

Future-Oriented Navigation: Dynamic Obstacle Avoidance with One-Shot Energy-Based Multimodal Motion Prediction

May 01, 2025

Abstract:This paper proposes an integrated approach for the safe and efficient control of mobile robots in dynamic and uncertain environments. The approach consists of two key steps: one-shot multimodal motion prediction to anticipate motions of dynamic obstacles and model predictive control to incorporate these predictions into the motion planning process. Motion prediction is driven by an energy-based neural network that generates high-resolution, multi-step predictions in a single operation. The prediction outcomes are further utilized to create geometric shapes formulated as mathematical constraints. Instead of treating each dynamic obstacle individually, predicted obstacles are grouped by proximity in an unsupervised way to improve performance and efficiency. The overall collision-free navigation is handled by model predictive control with a specific design for proactive dynamic obstacle avoidance. The proposed approach allows mobile robots to navigate effectively in dynamic environments. Its performance is assessed across various scenarios that represent typical warehouse settings. The results demonstrate that the proposed approach outperforms other existing dynamic obstacle avoidance methods.

NeuRadar: Neural Radiance Fields for Automotive Radar Point Clouds

Apr 01, 2025

Abstract:Radar is an important sensor for autonomous driving (AD) systems due to its robustness to adverse weather and different lighting conditions. Novel view synthesis using neural radiance fields (NeRFs) has recently received considerable attention in AD due to its potential to enable efficient testing and validation but remains unexplored for radar point clouds. In this paper, we present NeuRadar, a NeRF-based model that jointly generates radar point clouds, camera images, and lidar point clouds. We explore set-based object detection methods such as DETR, and propose an encoder-based solution grounded in the NeRF geometry for improved generalizability. We propose both a deterministic and a probabilistic point cloud representation to accurately model the radar behavior, with the latter being able to capture radar's stochastic behavior. We achieve realistic reconstruction results for two automotive datasets, establishing a baseline for NeRF-based radar point cloud simulation models. In addition, we release radar data for ZOD's Sequences and Drives to enable further research in this field. To encourage further development of radar NeRFs, we release the source code for NeuRadar.

Optimizing Gene-Based Testing for Antibiotic Resistance Prediction

Feb 19, 2025

Abstract:Antibiotic Resistance (AR) is a critical global health challenge that necessitates the development of cost-effective, efficient, and accurate diagnostic tools. Given the genetic basis of AR, techniques such as Polymerase Chain Reaction (PCR) that target specific resistance genes offer a promising approach for predictive diagnostics using a limited set of key genes. This study introduces GenoARM, a novel framework that integrates reinforcement learning (RL) with transformer-based models to optimize the selection of PCR gene tests and improve AR predictions, leveraging observed metadata for improved accuracy. In our evaluation, we developed several high-performing baselines and compared them using publicly available datasets derived from real-world bacterial samples representing multiple clinically relevant pathogens. The results show that all evaluated methods achieve strong and reliable performance when metadata is not utilized. When metadata is introduced and the number of selected genes increases, GenoARM demonstrates superior performance due to its capacity to approximate rewards for unseen and sparse combinations. Overall, our framework represents a major advancement in optimizing diagnostic tools for AR in clinical settings.

Probabilistic GOSPA: A Metric for Performance Evaluation of Multi-Object Filters with Uncertainties

Dec 16, 2024

Abstract:This paper presents a probabilistic generalization of the generalized optimal sub-pattern assignment (GOSPA) metric, termed the P-GOSPA metric. GOSPA is a popular metric for evaluating the distance between finite sets, typically in multi-object estimation applications. P-GOSPA extends GOSPA to the space of multi-Bernoulli set densities, incorporating the inherent uncertainty in probabilistic multi-object representations. In addition, P-GOSPA retains the interpretability of GOSPA, such as its decomposability into localization, missed detection, and false detection errors, in a sound manner. Examples and simulations are presented to demonstrate the efficacy of P-GOSPA.

TGOSPA Metric Parameters Selection and Evaluation for Visual Multi-object Tracking

Dec 11, 2024

Abstract:Multi-object tracking algorithms are deployed in various applications, each with unique performance requirements. For example, track switches pose significant challenges for offline scene understanding, as they hinder the accuracy of data interpretation. Conversely, in online surveillance applications, their impact is often minimal. This disparity underscores the need for application-specific performance evaluations that are both simple and mathematically sound. The trajectory generalized optimal sub-pattern assignment (TGOSPA) metric offers a principled approach to evaluate multi-object tracking performance. It accounts for localization errors, the number of missed and false objects, and the number of track switches, providing a comprehensive assessment framework. This paper illustrates the effective use of the TGOSPA metric in computer vision tasks, addressing challenges posed by the need for application-specific scoring methodologies. By exploring the TGOSPA parameter selection, we enable users to compare, comprehend, and optimize the performance of algorithms tailored for specific tasks, such as target tracking and training of detector or re-ID modules.

MVUDA: Unsupervised Domain Adaptation for Multi-view Pedestrian Detection

Dec 05, 2024

Abstract:We address multi-view pedestrian detection in a setting where labeled data is collected using a multi-camera setup different from the one used for testing. While recent multi-view pedestrian detectors perform well on the camera rig used for training, their performance declines when applied to a different setup. To facilitate seamless deployment across varied camera rigs, we propose an unsupervised domain adaptation (UDA) method that adapts the model to new rigs without requiring additional labeled data. Specifically, we leverage the mean teacher self-training framework with a novel pseudo-labeling technique tailored to multi-view pedestrian detection. This method achieves state-of-the-art performance on multiple benchmarks, including MultiviewX$\rightarrow$Wildtrack. Unlike previous methods, our approach eliminates the need for external labeled monocular datasets, thereby reducing reliance on labeled data. Extensive evaluations demonstrate the effectiveness of our method and validate key design choices. By enabling robust adaptation across camera setups, our work enhances the practicality of multi-view pedestrian detectors and establishes a strong UDA baseline for future research.

SplatAD: Real-Time Lidar and Camera Rendering with 3D Gaussian Splatting for Autonomous Driving

Nov 25, 2024

Abstract:Ensuring the safety of autonomous robots, such as self-driving vehicles, requires extensive testing across diverse driving scenarios. Simulation is a key ingredient for conducting such testing in a cost-effective and scalable way. Neural rendering methods have gained popularity, as they can build simulation environments from collected logs in a data-driven manner. However, existing neural radiance field (NeRF) methods for sensor-realistic rendering of camera and lidar data suffer from low rendering speeds, limiting their applicability for large-scale testing. While 3D Gaussian Splatting (3DGS) enables real-time rendering, current methods are limited to camera data and are unable to render lidar data essential for autonomous driving. To address these limitations, we propose SplatAD, the first 3DGS-based method for realistic, real-time rendering of dynamic scenes for both camera and lidar data. SplatAD accurately models key sensor-specific phenomena such as rolling shutter effects, lidar intensity, and lidar ray dropouts, using purpose-built algorithms to optimize rendering efficiency. Evaluation across three autonomous driving datasets demonstrates that SplatAD achieves state-of-the-art rendering quality with up to +2 PSNR for NVS and +3 PSNR for reconstruction while increasing rendering speed over NeRF-based methods by an order of magnitude. See https://research.zenseact.com/publications/splatad/ for our project page.

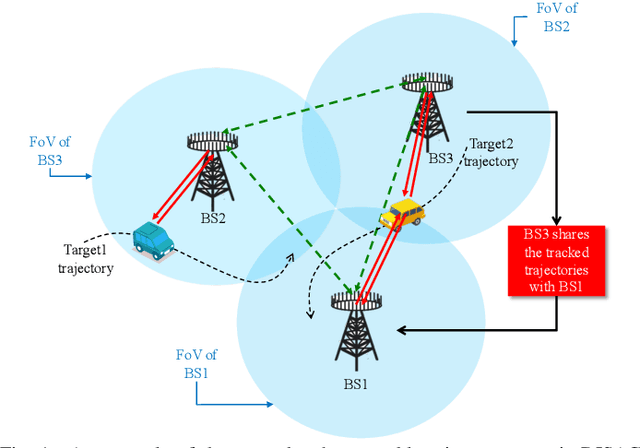

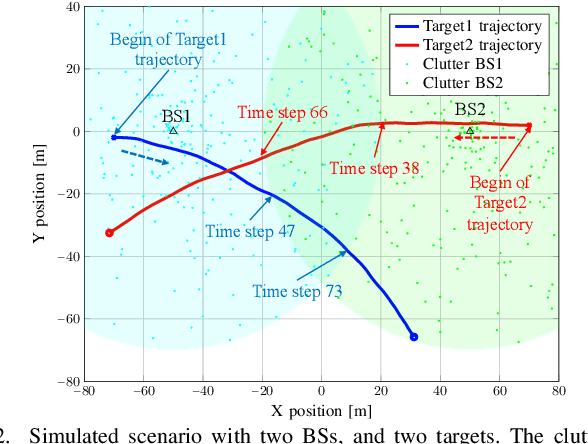

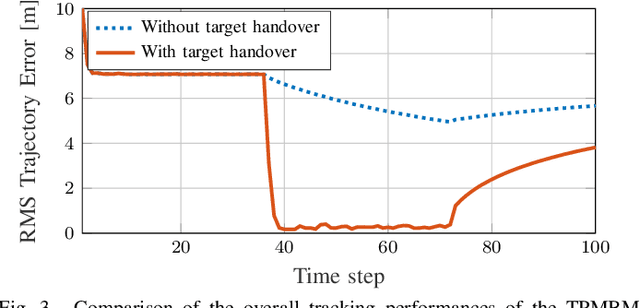

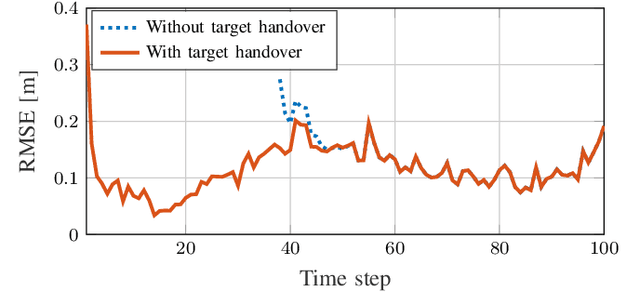

Target Handover in Distributed Integrated Sensing and Communication

Nov 04, 2024

Abstract:The concept of 6G distributed integrated sensing and communications (DISAC) builds upon the functionality of integrated sensing and communications (ISAC) by integrating distributed architectures, significantly enhancing both sensing and communication coverage and performance. In 6G DISAC systems, tracking target trajectories requires base stations (BSs) to hand over their tracked targets to neighboring BSs. Determining what information to share, where, how, and when is critical to effective handover. This paper addresses the target handover challenge in DISAC systems and introduces a method enabling BSs to share essential target trajectory information at appropriate time steps, facilitating seamless handovers to other BSs. The target tracking problem is tackled using the standard trajectory Poisson multi-Bernoulli mixture (TPMBM) filter, enhanced with the proposed handover algorithm. Simulation results confirm the effectiveness of the implemented tracking solution.
