Fredrik Kahl

Semi-Supervised Hierarchical Open-Set Classification

Jan 23, 2026

Abstract:Hierarchical open-set classification handles previously unseen classes by assigning them to the most appropriate high-level category in a class taxonomy. We extend this paradigm to the semi-supervised setting, enabling the use of large-scale, uncurated datasets containing a mixture of known and unknown classes to improve the hierarchical open-set performance. To this end, we propose a teacher-student framework based on pseudo-labeling. Two key components are introduced: 1) subtree pseudo-labels, which provide reliable supervision in the presence of unknown data, and 2) age-gating, a mechanism that mitigates overconfidence in pseudo-labels. Experiments show that our framework outperforms self-supervised pretraining followed by supervised adaptation, and even matches the fully supervised counterpart when using only 20 labeled samples per class on the iNaturalist19 benchmark. Our code is available at https://github.com/walline/semihoc.

RoMa v2: Harder Better Faster Denser Feature Matching

Nov 19, 2025

Abstract:Dense feature matching aims to estimate all correspondences between two images of a 3D scene and has recently been established as the gold standard due to its high accuracy and robustness. However, existing dense matchers still fail or perform poorly for many hard real-world scenarios, and high-precision models are often slow, limiting their applicability. In this paper, we attack these weaknesses on a wide front through a series of systematic improvements that together yield a significantly better model. In particular, we construct a novel matching architecture and loss, which, combined with a curated diverse training distribution, enables our model to solve many complex matching tasks. We further make training faster through a decoupled two-stage matching-then-refinement pipeline, and at the same time, significantly reduce refinement memory usage through a custom CUDA kernel. Finally, we leverage the recent DINOv3 foundation model along with multiple other insights to make the model more robust and unbiased. In our extensive set of experiments we show that the resulting novel matcher sets a new state-of-the-art, being significantly more accurate than its predecessors. Code is available at https://github.com/Parskatt/romav2

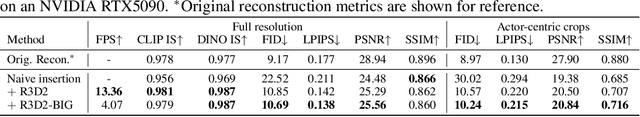

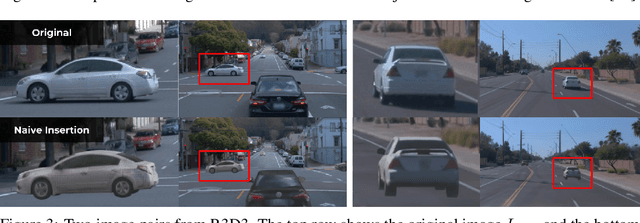

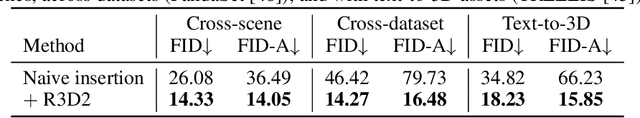

R3D2: Realistic 3D Asset Insertion via Diffusion for Autonomous Driving Simulation

Jun 09, 2025

Abstract:Validating autonomous driving (AD) systems requires diverse and safety-critical testing, making photorealistic virtual environments essential. Traditional simulation platforms, while controllable, are resource-intensive to scale and often suffer from a domain gap with real-world data. In contrast, neural reconstruction methods like 3D Gaussian Splatting (3DGS) offer a scalable solution for creating photorealistic digital twins of real-world driving scenes. However, they struggle with dynamic object manipulation and reusability as their per-scene optimization-based methodology tends to result in incomplete object models with integrated illumination effects. This paper introduces R3D2, a lightweight, one-step diffusion model designed to overcome these limitations and enable realistic insertion of complete 3D assets into existing scenes by generating plausible rendering effects, such as shadows and consistent lighting, in real time. This is achieved by training R3D2 on a novel dataset: 3DGS object assets are generated from in-the-wild AD data using an image-conditioned 3D generative model, and then synthetically placed into neural rendering-based virtual environments, allowing R3D2 to learn realistic integration. Quantitative and qualitative evaluations demonstrate that R3D2 significantly enhances the realism of inserted assets, enabling use-cases like text-to-3D asset insertion and cross-scene/dataset object transfer, allowing for true scalability in AD validation. To promote further research in scalable and realistic AD simulation, we will release our dataset and code, see https://research.zenseact.com/publications/R3D2/.

Stronger ViTs With Octic Equivariance

May 21, 2025

Abstract:Recent efforts at scaling computer vision models have established Vision Transformers (ViTs) as the leading architecture. ViTs incorporate weight sharing over image patches as an important inductive bias. In this work, we show that ViTs benefit from incorporating equivariance under the octic group, i.e., reflections and 90-degree rotations, as a further inductive bias. We develop new architectures, octic ViTs, that use octic-equivariant layers and put them to the test on both supervised and self-supervised learning. Through extensive experiments on DeiT-III and DINOv2 training on ImageNet-1K, we show that octic ViTs yield more computationally efficient networks while also improving performance. In particular, we achieve approximately 40% reduction in FLOPs for ViT-H while simultaneously improving both classification and segmentation results.
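To make the octic group concrete, the following is a minimal NumPy sketch that enumerates its eight elements as actions on a 2D array (four 90-degree rotations, each with or without a horizontal reflection). It illustrates only the group itself, not the octic ViT layers; the function names `octic_transforms` and `orbit` are hypothetical and chosen for this example.

```python
import numpy as np

def octic_transforms():
    """Return the 8 octic-group actions on a 2D array as callables."""
    ops = []
    for flip in (False, True):
        for k in range(4):
            # Bind k and flip as defaults so each closure keeps its own values.
            def op(x, k=k, flip=flip):
                y = np.fliplr(x) if flip else x
                return np.rot90(y, k)  # rotate by k * 90 degrees
            ops.append(op)
    return ops

def orbit(x):
    """Apply every group element to x and return the 8 transformed copies."""
    return [op(x) for op in octic_transforms()]

if __name__ == "__main__":
    x = np.arange(9).reshape(3, 3)
    for y in orbit(x):
        print(y, end="\n\n")
```

A function is invariant under the group if it gives the same value on every element of `orbit(x)`; an equivariant layer instead transforms its output in a predictable way when its input is transformed.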

Addressing degeneracies in latent interpolation for diffusion models

May 12, 2025

Abstract:There is an increasing interest in using image-generating diffusion models for deep data augmentation and image morphing. In this context, it is useful to interpolate between latents produced by inverting a set of input images, in order to generate new images representing some mixture of the inputs. We observe that such interpolation can easily lead to degenerate results when the number of inputs is large. We analyze the cause of this effect theoretically and experimentally, and suggest a suitable remedy. The suggested approach is a relatively simple normalization scheme that is easy to use whenever interpolation between latents is needed. We measure image quality using FID and CLIP embedding distance and show experimentally that baseline interpolation methods lead to a drop in quality metrics long before the degeneration issue is clearly visible. In contrast, our method significantly reduces the degeneration effect and leads to improved quality metrics also in non-degenerate situations.
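The abstract does not spell out the normalization scheme, so the following NumPy sketch shows only a generic effect that may be related, under stated assumptions: if latents behave like independent standard Gaussian vectors of dimension d, each has norm close to sqrt(d), but the plain average of n of them has norm close to sqrt(d/n). Rescaling the average back to the expected norm is one simple remedy; it is an assumption made for illustration, not the paper's method, and the names `mean_latent` and `rescale_to_norm` are hypothetical.

```python
import numpy as np

def mean_latent(latents):
    """Uniformly average a stack of latents with shape (n, d)."""
    return np.mean(latents, axis=0)

def rescale_to_norm(z, target_norm):
    """Rescale z so that its Euclidean norm equals target_norm."""
    return z * (target_norm / np.linalg.norm(z))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d = 64, 4096
    latents = rng.standard_normal((n, d))  # stand-in for inverted latents
    z = mean_latent(latents)
    print("typical latent norm:", np.sqrt(d))
    print("norm of plain average:", np.linalg.norm(z))
    z_fixed = rescale_to_norm(z, np.sqrt(d))
    print("norm after rescaling:", np.linalg.norm(z_fixed))
```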

Geometric Consistency Refinement for Single Image Novel View Synthesis via Test-Time Adaptation of Diffusion Models

Apr 11, 2025

Abstract:Diffusion models for single image novel view synthesis (NVS) can generate highly realistic and plausible images, but they are limited in the geometric consistency to the given relative poses. The generated images often show significant errors with respect to the epipolar constraints that should be fulfilled, as given by the target pose. In this paper we address this issue by proposing a methodology to improve the geometric correctness of images generated by a diffusion model for single image NVS. We formulate a loss function based on image matching and epipolar constraints, and optimize the starting noise in a diffusion sampling process such that the generated image should both be a realistic image and fulfill geometric constraints derived from the given target pose. Our method does not require training data or fine-tuning of the diffusion models, and we show that we can apply it to multiple state-of-the-art models for single image NVS. The method is evaluated on the MegaScenes dataset and we show that geometric consistency is improved compared to the baseline models while retaining the quality of the generated images.

ProHOC: Probabilistic Hierarchical Out-of-Distribution Classification via Multi-Depth Networks

Mar 27, 2025

Abstract:Out-of-distribution (OOD) detection in deep learning has traditionally been framed as a binary task, where samples are either classified as belonging to the known classes or marked as OOD, with little attention given to the semantic relationships between OOD samples and the in-distribution (ID) classes. We propose a framework for detecting and classifying OOD samples in a given class hierarchy. Specifically, we aim to predict OOD data to their correct internal nodes of the class hierarchy, whereas the known ID classes should be predicted as their corresponding leaf nodes. Our approach leverages the class hierarchy to create a probabilistic model and we implement this model by using networks trained for ID classification at multiple hierarchy depths. We conduct experiments on three datasets with predefined class hierarchies and show the effectiveness of our method. Our code is available at https://github.com/walline/prohoc.

A Framework for Reducing the Complexity of Geometric Vision Problems and its Application to Two-View Triangulation with Approximation Bounds

Mar 11, 2025

Abstract:In this paper, we present a new framework for reducing the computational complexity of geometric vision problems through targeted reweighting of the cost functions used to minimize reprojection errors. Triangulation, the task of estimating a 3D point from noisy 2D projections across multiple images, is a fundamental problem in multiview geometry and Structure-from-Motion (SfM) pipelines. We apply our framework to the two-view case and demonstrate that optimal triangulation, which requires solving a univariate polynomial of degree six, can be simplified through cost function reweighting, reducing the polynomial degree to two. This reweighting yields a closed-form solution while preserving strong geometric accuracy. We derive optimal weighting strategies, establish theoretical bounds on the approximation error, and provide experimental results on real data demonstrating the effectiveness of the proposed approach compared to standard methods. Although this work focuses on two-view triangulation, the framework generalizes to other geometric vision problems.

Optimizing Gene-Based Testing for Antibiotic Resistance Prediction

Feb 19, 2025

Abstract:Antibiotic Resistance (AR) is a critical global health challenge that necessitates the development of cost-effective, efficient, and accurate diagnostic tools. Given the genetic basis of AR, techniques such as Polymerase Chain Reaction (PCR) that target specific resistance genes offer a promising approach for predictive diagnostics using a limited set of key genes. This study introduces GenoARM, a novel framework that integrates reinforcement learning (RL) with transformer-based models to optimize the selection of PCR gene tests and improve AR predictions, leveraging observed metadata for improved accuracy. In our evaluation, we developed several high-performing baselines and compared them using publicly available datasets derived from real-world bacterial samples representing multiple clinically relevant pathogens. The results show that all evaluated methods achieve strong and reliable performance when metadata is not utilized. When metadata is introduced and the number of selected genes increases, GenoARM demonstrates superior performance due to its capacity to approximate rewards for unseen and sparse combinations. Overall, our framework represents a major advancement in optimizing diagnostic tools for AR in clinical settings.

Flopping for FLOPs: Leveraging equivariance for computational efficiency

Feb 07, 2025

Abstract:Incorporating geometric invariance into neural networks enhances parameter efficiency but typically increases computational costs. This paper introduces new equivariant neural networks that preserve symmetry while maintaining a comparable number of floating-point operations (FLOPs) per parameter to standard non-equivariant networks. We focus on horizontal mirroring (flopping) invariance, common in many computer vision tasks. The main idea is to parametrize the feature spaces in terms of mirror-symmetric and mirror-antisymmetric features, i.e., irreps of the flopping group. This decomposes the linear layers to be block-diagonal, requiring half the number of FLOPs. Our approach reduces both FLOPs and wall-clock time, providing a practical solution for efficient, scalable symmetry-aware architectures.
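The block-diagonal claim can be checked numerically on a toy case. The sketch below is not the authors' implementation: it assumes a 1D feature vector of even length on which flopping acts by reversing the order, builds a random linear map that commutes with that reversal, and expresses it in a basis of mirror-symmetric and mirror-antisymmetric vectors. All function names are hypothetical.

```python
import numpy as np

def flop_matrix(n):
    """Permutation matrix that reverses a length-n vector."""
    return np.eye(n)[::-1]

def symmetric_antisymmetric_basis(n):
    """Orthonormal basis (as columns): n/2 symmetric, then n/2 antisymmetric."""
    assert n % 2 == 0, "this sketch assumes an even feature dimension"
    h = n // 2
    eye, rev = np.eye(h), np.eye(h)[::-1]
    sym = np.vstack([eye, rev]) / np.sqrt(2)    # v[i] == v[n-1-i]
    anti = np.vstack([eye, -rev]) / np.sqrt(2)  # v[i] == -v[n-1-i]
    return np.hstack([sym, anti])

def random_equivariant_map(n, rng):
    """Random n x n matrix W with P @ W == W @ P, where P is the flop."""
    a = rng.standard_normal((n, n))
    p = flop_matrix(n)
    return 0.5 * (a + p @ a @ p)  # group averaging over {identity, flop}

def block_diagonalize(w):
    """Express w in the symmetric/antisymmetric basis."""
    q = symmetric_antisymmetric_basis(w.shape[0])
    return q.T @ w @ q

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = random_equivariant_map(8, rng)
    b = block_diagonalize(w)
    print(np.round(b, 3))  # off-diagonal 4x4 blocks are zero up to rounding
```

In this basis a dense n x n multiply (n^2 multiply-adds) splits into two independent (n/2) x (n/2) multiplies (n^2/2 in total), which matches the halving of FLOPs described in the abstract.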
