Torsten Sattler

Can we make NeRF-based visual localization privacy-preserving?

Aug 26, 2025

Abstract:Visual localization (VL) is the task of estimating the camera pose in a known scene. Among other criteria, VL methods can be distinguished based on how they represent the scene, e.g., explicitly through a (sparse) point cloud or a collection of images, or implicitly through the weights of a neural network. Recently, NeRF-based methods have become popular for VL. While NeRFs offer high-quality novel view synthesis, they inadvertently encode fine scene details, raising privacy concerns when deployed in cloud-based localization services, as sensitive information could be recovered. In this paper, we tackle this challenge on two ends. First, we propose a new protocol to assess privacy-preservation of NeRF-based representations. We show that NeRFs trained with photometric losses store fine-grained details in their geometry representations, making them vulnerable to privacy attacks, even if the head that predicts colors is removed. Second, we propose ppNeSF (Privacy-Preserving Neural Segmentation Field), a NeRF variant trained with segmentation supervision instead of RGB images. These segmentation labels are learned in a self-supervised manner, ensuring they are coarse enough to obscure identifiable scene details while remaining discriminative in 3D. The segmentation space of ppNeSF can be used for accurate visual localization, yielding state-of-the-art results.

LODGE: Level-of-Detail Large-Scale Gaussian Splatting with Efficient Rendering

May 29, 2025

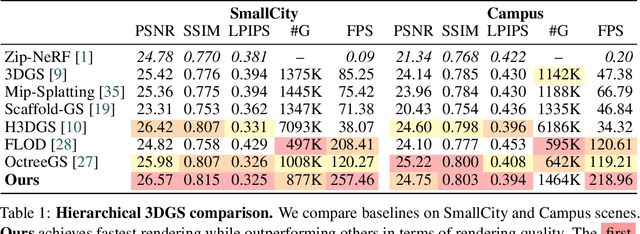

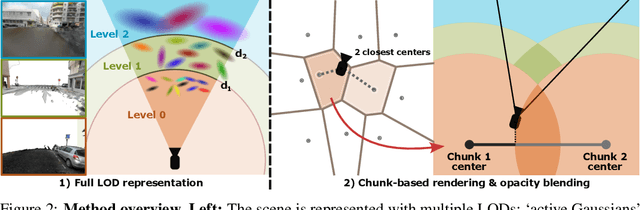

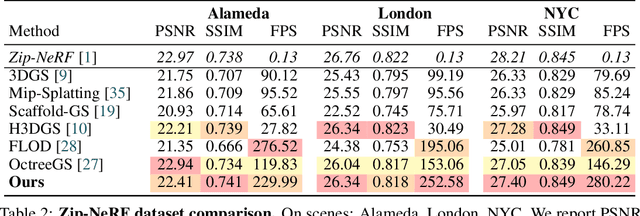

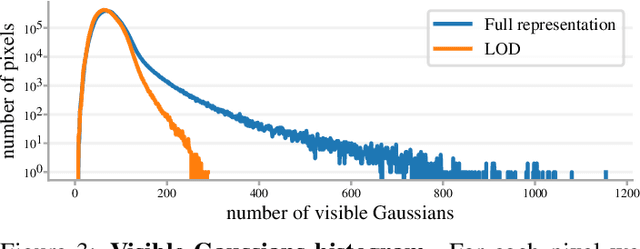

Abstract:In this work, we present a novel level-of-detail (LOD) method for 3D Gaussian Splatting that enables real-time rendering of large-scale scenes on memory-constrained devices. Our approach introduces a hierarchical LOD representation that iteratively selects optimal subsets of Gaussians based on camera distance, thus largely reducing both rendering time and GPU memory usage. We construct each LOD level by applying a depth-aware 3D smoothing filter, followed by importance-based pruning and fine-tuning to maintain visual fidelity. To further reduce memory overhead, we partition the scene into spatial chunks and dynamically load only relevant Gaussians during rendering, employing an opacity-blending mechanism to avoid visual artifacts at chunk boundaries. Our method achieves state-of-the-art performance on both outdoor (Hierarchical 3DGS) and indoor (Zip-NeRF) datasets, delivering high-quality renderings with reduced latency and memory requirements.
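
The idea of choosing a level of detail from the camera distance can be illustrated with a small sketch. This is not the selection procedure from the paper: it only assigns one LOD index per spatial chunk using fixed distance thresholds. The function name `select_lod_levels`, the chunk centers, and the threshold values are all hypothetical.

```python
import numpy as np

def select_lod_levels(chunk_centers, camera_position, distance_thresholds):
    """Assign an LOD index to each chunk from its distance to the camera.

    Level 0 is the finest level. `distance_thresholds` must be sorted in
    ascending order; a chunk beyond the i-th threshold gets level i + 1.
    All names and values here are illustrative assumptions.
    """
    centers = np.asarray(chunk_centers, dtype=float)
    camera = np.asarray(camera_position, dtype=float)
    distances = np.linalg.norm(centers - camera, axis=1)
    # searchsorted counts how many thresholds each distance has reached.
    return np.searchsorted(np.asarray(distance_thresholds, dtype=float),
                           distances, side="right")

# Three chunks at increasing distance from a camera at the origin.
levels = select_lod_levels(
    chunk_centers=[[0, 0, 1], [0, 0, 10], [0, 0, 100]],
    camera_position=[0, 0, 0],
    distance_thresholds=[5.0, 50.0],
)
```

A renderer following this scheme would load, for each chunk, only the Gaussians stored for the assigned level, so distant chunks contribute fewer primitives.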

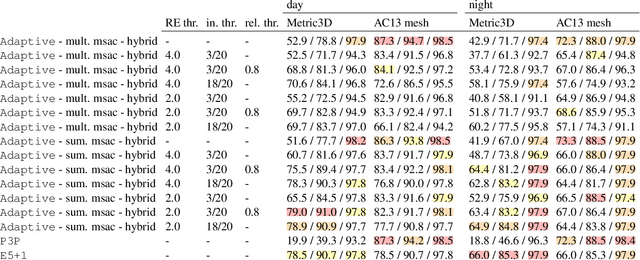

Are Minimal Radial Distortion Solvers Really Necessary for Relative Pose Estimation?

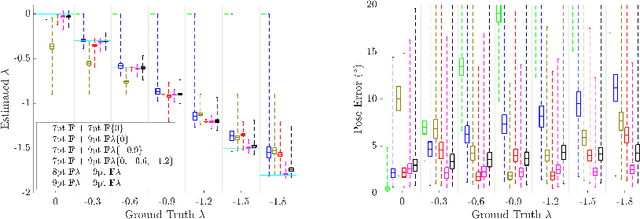

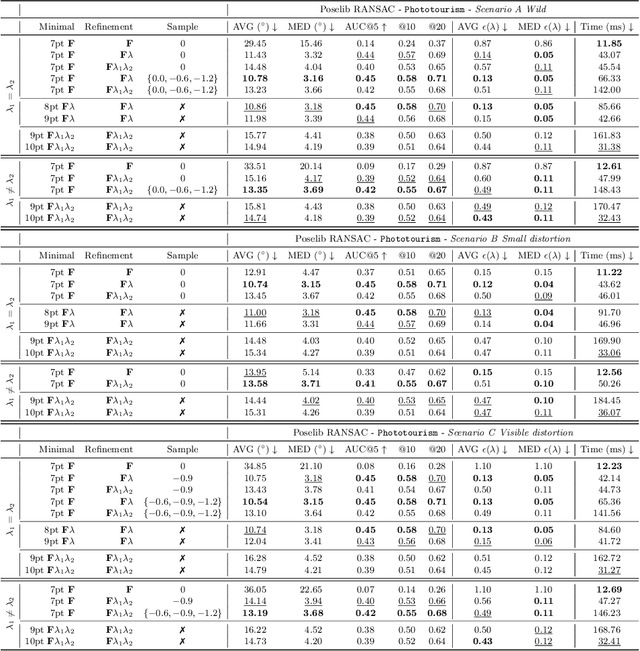

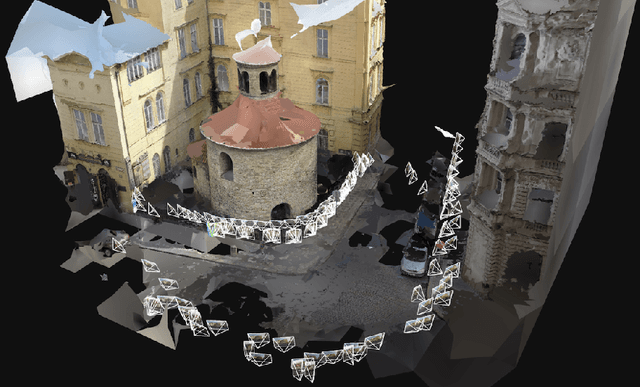

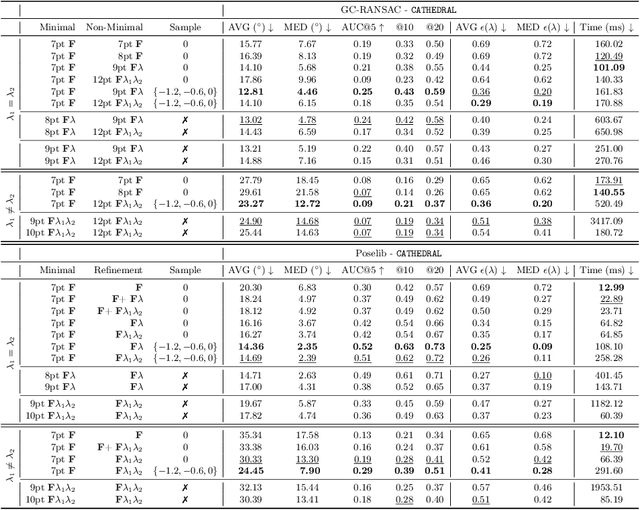

May 01, 2025

Abstract:Estimating the relative pose between two cameras is a fundamental step in many applications such as Structure-from-Motion. The common approach to relative pose estimation is to apply a minimal solver inside a RANSAC loop. Highly efficient solvers exist for pinhole cameras. Yet, (nearly) all cameras exhibit radial distortion. Not modeling radial distortion leads to (significantly) worse results. However, minimal radial distortion solvers are significantly more complex than pinhole solvers, both in terms of run-time and implementation effort. This paper compares radial distortion solvers with two simple-to-implement approaches that do not use minimal radial distortion solvers: The first approach combines an efficient pinhole solver with sampled radial undistortion parameters, where the sampled parameters are used for undistortion prior to applying the pinhole solver. The second approach uses a state-of-the-art neural network to estimate the distortion parameters rather than sampling them from a set of potential values. Extensive experiments on multiple datasets and different camera setups show that complex minimal radial distortion solvers are not necessary in practice. We discuss under which conditions a simple sampling of radial undistortion parameters is preferable over calibrating cameras using a learning-based prior approach. Code and a newly created benchmark for relative pose estimation under radial distortion are available at https://github.com/kocurvik/rdnet.
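
The first approach, undistorting with sampled parameters before running a pinhole solver, can be sketched as follows. The abstract does not name the distortion model, so this sketch assumes the common one-parameter division model on centered, normalized image coordinates. The candidate values in `SAMPLED_LAMBDAS` and both function names are hypothetical and not taken from the released code.

```python
import numpy as np

# Hypothetical candidate values for the division-model parameter.
SAMPLED_LAMBDAS = (0.0, -0.6, -1.2)

def undistort_division(points, lam):
    """Undistort Nx2 points with the one-parameter division model.

    Assumes the points are centered on the distortion center and
    normalized: x_u = x_d / (1 + lam * ||x_d||^2).
    """
    points = np.asarray(points, dtype=float)
    r2 = np.sum(points ** 2, axis=1, keepdims=True)
    return points / (1.0 + lam * r2)

def undistorted_candidates(points1, points2, lambdas=SAMPLED_LAMBDAS):
    """Yield (lam, undistorted points1, undistorted points2) per sample."""
    for lam in lambdas:
        yield (lam,
               undistort_division(points1, lam),
               undistort_division(points2, lam))
```

Each yielded pair of point sets could then be handed to any pinhole relative pose solver inside RANSAC. One plausible selection rule, stated here as an assumption, is to keep the sampled parameter whose model gathers the most inliers.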

Large-scale visual SLAM for in-the-wild videos

Apr 29, 2025

Abstract:Accurate and robust 3D scene reconstruction from casual, in-the-wild videos can significantly simplify robot deployment to new environments. However, reliable camera pose estimation and scene reconstruction from such unconstrained videos remain an open challenge. Existing visual-only SLAM methods perform well on benchmark datasets but struggle with real-world footage, which often exhibits uncontrolled motion including rapid rotations and pure forward movements, textureless regions, and dynamic objects. We analyze the limitations of current methods and introduce a robust pipeline designed to improve 3D reconstruction from casual videos. We build upon recent deep visual odometry methods but increase robustness in several ways. Camera intrinsics are automatically recovered from the first few frames using structure-from-motion. Dynamic objects and less-constrained areas are masked with a predictive model. Additionally, we leverage monocular depth estimates to regularize bundle adjustment, mitigating errors in low-parallax situations. Finally, we integrate place recognition and loop closure to reduce long-term drift and refine both intrinsics and pose estimates through global bundle adjustment. We demonstrate large-scale contiguous 3D models from several online videos in various environments. In contrast, baseline methods typically produce locally inconsistent results at several points, producing separate segments or distorted maps. In lieu of ground-truth pose data, we evaluate map consistency, execution time, and visual accuracy of re-rendered NeRF models. Our proposed system establishes a new baseline for visual reconstruction from casual uncontrolled videos found online, demonstrating more consistent reconstructions over longer sequences of in-the-wild videos than previously achieved.

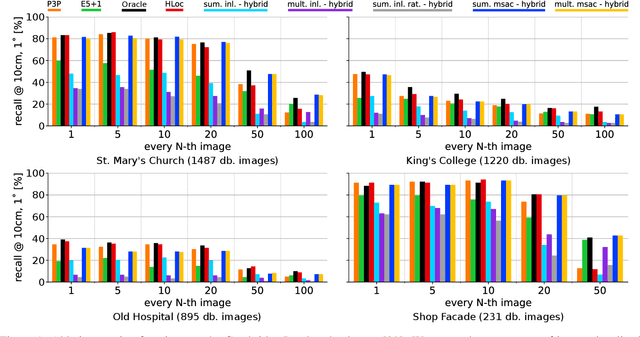

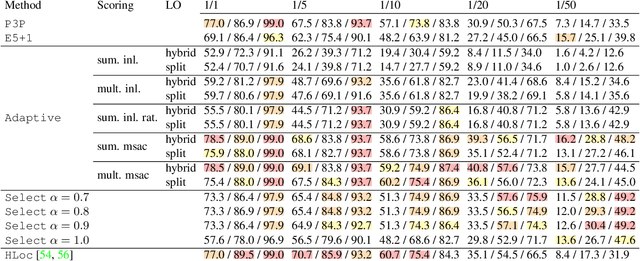

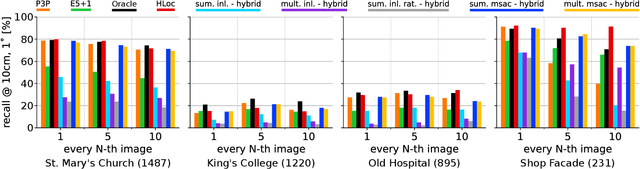

A Guide to Structureless Visual Localization

Apr 24, 2025

Abstract:Visual localization algorithms, i.e., methods that estimate the camera pose of a query image in a known scene, are core components of many applications, including self-driving cars and augmented / mixed reality systems. State-of-the-art visual localization algorithms are structure-based, i.e., they store a 3D model of the scene and use 2D-3D correspondences between the query image and 3D points in the model for camera pose estimation. While such approaches are highly accurate, they are also rather inflexible when it comes to adjusting the underlying 3D model after changes in the scene. Structureless localization approaches represent the scene as a database of images with known poses and thus offer a much more flexible representation that can be easily updated by adding or removing images. Although there is a large amount of literature on structure-based approaches, there is significantly less work on structureless methods. Hence, this paper is dedicated to providing the, to the best of our knowledge, first comprehensive discussion and comparison of structureless methods. Extensive experiments show that approaches that use a higher degree of classical geometric reasoning generally achieve higher pose accuracy. In particular, approaches based on classical absolute or semi-generalized relative pose estimation outperform very recent methods based on pose regression by a wide margin. Compared with state-of-the-art structure-based approaches, the flexibility of structureless methods comes at the cost of (slightly) lower pose accuracy, indicating an interesting direction for future work.

Fixing the Scale and Shift in Monocular Depth For Camera Pose Estimation

Jan 13, 2025

Abstract:Recent advances in monocular depth prediction have led to significantly improved depth prediction accuracy. In turn, this enables various applications to use such depth predictions. In this paper, we propose a novel framework for estimating the relative pose between two cameras from point correspondences with associated monocular depths. Since depth predictions are typically defined up to an unknown scale and shift parameter, our solvers jointly estimate both scale and shift parameters together with the camera pose. We derive efficient solvers for three cases: (1) two calibrated cameras, (2) two uncalibrated cameras with an unknown but shared focal length, and (3) two uncalibrated cameras with unknown and different focal lengths. Experiments on synthetic and real data, including experiments with depth maps estimated by 11 different depth predictors, show the practical viability of our solvers. Compared to prior work, our solvers achieve state-of-the-art results on two large-scale, real-world datasets. The source code is available at https://github.com/yaqding/pose_monodepth
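
To make the role of the unknown scale and shift concrete, the sketch below back-projects pixels using a predicted depth corrected as `scale * depth + shift`. It only illustrates the depth model described in the abstract, not the proposed solvers, which estimate these parameters jointly with the pose. The function name `backproject` and the example intrinsics are hypothetical.

```python
import numpy as np

def backproject(pixels, depths, K, scale=1.0, shift=0.0):
    """Lift Nx2 pixels to 3D camera coordinates using corrected depths.

    The corrected depth is scale * depth + shift, i.e. the predicted depth
    is assumed to be correct only up to an unknown scale and shift.
    """
    pixels = np.asarray(pixels, dtype=float)
    depths = np.asarray(depths, dtype=float)
    homogeneous = np.hstack([pixels, np.ones((len(pixels), 1))])
    # Viewing rays with unit z-component: K^{-1} [u, v, 1]^T per pixel.
    rays = homogeneous @ np.linalg.inv(K).T
    corrected = scale * depths + shift
    return rays * corrected[:, None]

# Hypothetical intrinsics: focal length 100 px, principal point (50, 50).
K = np.array([[100.0, 0.0, 50.0],
              [0.0, 100.0, 50.0],
              [0.0, 0.0, 1.0]])
points = backproject([[50, 50], [150, 50]], [2.0, 2.0], K, scale=2.0, shift=1.0)
```

With wrong values for `scale` and `shift`, the 3D points of the two views no longer agree under any rigid motion, which is why these parameters have to be solved for together with the relative pose.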

Are Minimal Radial Distortion Solvers Necessary for Relative Pose Estimation?

Oct 08, 2024

Abstract:Estimating the relative pose between two cameras is a fundamental step in many applications such as Structure-from-Motion. The common approach to relative pose estimation is to apply a minimal solver inside a RANSAC loop. Highly efficient solvers exist for pinhole cameras. Yet, (nearly) all cameras exhibit radial distortion. Not modeling radial distortion leads to (significantly) worse results. However, minimal radial distortion solvers are significantly more complex than pinhole solvers, both in terms of run-time and implementation effort. This paper compares radial distortion solvers with a simple-to-implement approach that combines an efficient pinhole solver with sampled radial distortion parameters. Extensive experiments on multiple datasets and RANSAC variants show that this simple approach performs similarly to or better than the most accurate minimal distortion solvers at faster run-times while being significantly more accurate than faster non-minimal solvers. We clearly show that complex radial distortion solvers are not necessary in practice. Code and benchmark are available at https://github.com/kocurvik/rd.

Combining Absolute and Semi-Generalized Relative Poses for Visual Localization

Sep 21, 2024

Abstract:Visual localization is the problem of estimating the camera pose of a given query image within a known scene. Most state-of-the-art localization approaches follow the structure-based paradigm and use 2D-3D matches between pixels in a query image and 3D points in the scene for pose estimation. These approaches assume an accurate 3D model of the scene, which might not always be available, especially if only a few images are available to compute the scene representation. In contrast, structure-less methods rely on 2D-2D matches and do not require any 3D scene model. However, they are also less accurate than structure-based methods. Although one prior work proposed to combine structure-based and structure-less pose estimation strategies, its practical relevance has not been shown. We analyze combining structure-based and structure-less strategies while exploring how to select between poses obtained from 2D-2D and 2D-3D matches, respectively. We show that combining both strategies improves localization performance in multiple practically relevant scenarios.

Obfuscation Based Privacy Preserving Representations are Recoverable Using Neighborhood Information

Sep 17, 2024

Abstract:Rapid growth in the popularity of AR/VR/MR applications and cloud-based visual localization systems has given rise to an increased focus on the privacy of user content in the localization process. This privacy concern has been further escalated by the ability of deep neural networks to recover detailed images of a scene from a sparse set of 3D or 2D points and their descriptors - the so-called inversion attacks. Research on privacy-preserving localization has therefore focused on preventing these inversion attacks on both the query image keypoints and the 3D points of the scene map. To this end, several geometry obfuscation techniques that lift points to higher-dimensional spaces, i.e., lines or planes, or that swap coordinates between points have been proposed. In this paper, we point to a common weakness of these obfuscations that allows recovering approximations of the original point positions under the assumption of known neighborhoods. We further show that these neighborhoods can be computed by learning to identify descriptors that co-occur in neighborhoods. Extensive experiments show that our approach for point recovery is practically applicable to all existing geometric obfuscation schemes. Our results show that these schemes should not be considered privacy-preserving, even though they are claimed to be privacy-preserving. Code will be available at https://github.com/kunalchelani/RecoverPointsNeighborhood.

Comparative Evaluation of 3D Reconstruction Methods for Object Pose Estimation

Aug 15, 2024

Abstract:Object pose estimation is essential to many industrial applications involving robotic manipulation, navigation, and augmented reality. Current generalizable object pose estimators, i.e., approaches that do not need to be trained per object, rely on accurate 3D models. Predominantly, CAD models are used, which can be hard to obtain in practice. At the same time, it is often possible to acquire images of an object. Naturally, this leads to the question of whether 3D models reconstructed from images are sufficient to facilitate accurate object pose estimation. We aim to answer this question by proposing a novel benchmark for measuring the impact of 3D reconstruction quality on pose estimation accuracy. Our benchmark provides calibrated images for object reconstruction registered with the test images of the YCB-V dataset for pose evaluation under the BOP benchmark format. Detailed experiments with multiple state-of-the-art 3D reconstruction and object pose estimation approaches show that the geometry produced by modern reconstruction methods is often sufficient for accurate pose estimation. Our experiments lead to interesting observations: (1) Standard metrics for measuring 3D reconstruction quality are not necessarily indicative of pose estimation accuracy, which shows the need for dedicated benchmarks such as ours. (2) Classical, non-learning-based approaches can perform on par with modern learning-based reconstruction techniques and can even offer a better reconstruction time-pose accuracy tradeoff. (3) There is still a sizable gap between performance with reconstructed and with CAD models. To foster research on closing this gap, our benchmark is publicly available at https://github.com/VarunBurde/reconstruction_pose_benchmark.
