Johan Karlsson

Sound Field Estimation Using Optimal Transport Barycenters in the Presence of Phase Errors

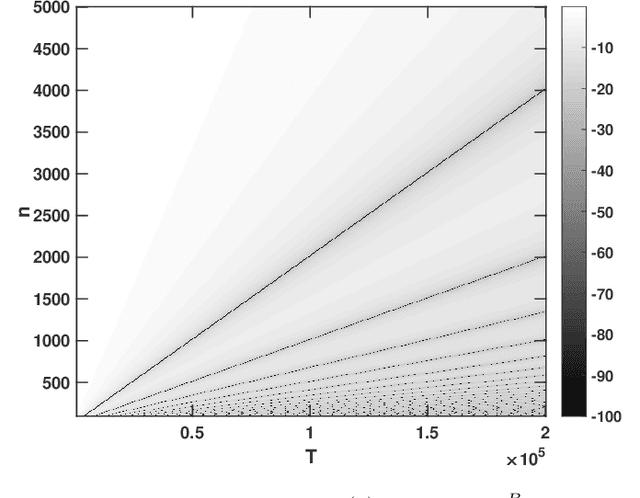

Feb 05, 2026

Abstract: This study introduces a novel approach for estimating plane-wave coefficients in sound field reconstruction, specifically addressing the challenges posed by errors-in-variables phase perturbations. Such systematic errors typically arise from sensor mis-calibration, including uncertainties in sensor positions and response characteristics, and lead to measurement-induced phase shifts in the plane-wave coefficients. Traditional methods often yield biased estimates or non-convex formulations. To overcome these issues, we propose an optimal transport (OT) framework. This framework operates on a set of lifted non-negative measures that correspond to observation-dependent shifted coefficients relative to the unperturbed ones. By applying OT, the supports of the measures are transported toward an optimal average in the phase space, effectively morphing them into an indistinguishable state. This optimal average, known as the barycenter, is linked to the estimated plane-wave coefficients through the same lifting rule. The framework addresses the ill-posed nature of the problem, which stems from the large number of plane waves, by adding a constant to the ground cost, which ensures sparsity of the transport matrix. Convexity of the problem is maintained. Simulation results confirm that the proposed method provides more accurate coefficient estimates than baseline approaches in scenarios with both additive noise and phase perturbations.
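
The barycenter idea can be illustrated in its simplest setting. The sketch below is not the method of the paper, which works with lifted measures in phase space and a modified ground cost; it assumes equally weighted empirical measures on the real line with the same number of atoms, where the squared-Wasserstein barycenter is obtained by averaging the sorted samples (that is, the quantile functions). The function name `barycenter_1d` is hypothetical.

```python
import numpy as np


def barycenter_1d(samples, weights=None):
    """W2 barycenter of k empirical measures on the real line.

    Assumes each row of `samples` holds the n equally weighted atoms of one
    measure. In 1-D the optimal transport plan between such measures pairs
    atoms in sorted order, so the barycenter's atoms are the weighted average
    of the sorted rows (the averaged quantile functions).
    """
    sorted_rows = np.sort(np.asarray(samples, dtype=float), axis=1)
    k = sorted_rows.shape[0]
    w = np.full(k, 1.0 / k) if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()  # normalize the barycentric weights
    return w @ sorted_rows


# Three shifted (and one unsorted) copies of the same atoms; the shifts average out.
measures = [[0.0, 1.0, 3.0], [1.5, 0.5, 3.5], [-0.5, 0.5, 2.5]]
print(barycenter_1d(measures))  # close to [0. 1. 3.]
```

On a circle, as in a phase space, the sorting shortcut no longer applies directly, which is one reason the general OT formulation is needed there.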

Probabilistic Trajectory GOSPA: A Metric for Uncertainty-Aware Multi-Object Tracking Performance Evaluation

Jun 18, 2025

Abstract: This paper presents a generalization of the trajectory generalized optimal sub-pattern assignment (GOSPA) metric for evaluating multi-object tracking algorithms that provide trajectory estimates with track-level uncertainties. This metric builds on the recently introduced probabilistic GOSPA metric to account for both the existence and state estimation uncertainties of individual object states. Similar to trajectory GOSPA (TGOSPA), it can be formulated as a multidimensional assignment problem, and its linear programming relaxation, which is also a valid metric, is computable in polynomial time. Additionally, this metric retains the interpretability of TGOSPA, and we show that its decomposition yields intuitive cost terms associated with expected localization error and existence probability mismatch error for properly detected objects, expected missed and false detection error, and track switch error. The effectiveness of the proposed metric is demonstrated through a simulation study.

Probabilistic GOSPA: A Metric for Performance Evaluation of Multi-Object Filters with Uncertainties

Dec 16, 2024

Abstract: This paper presents a probabilistic generalization of the generalized optimal subpattern assignment (GOSPA) metric, termed the P-GOSPA metric. GOSPA is a popular metric for evaluating the distance between finite sets, typically in multi-object estimation applications. P-GOSPA extends GOSPA to the space of multi-Bernoulli set densities, incorporating the inherent uncertainty in probabilistic multi-object representations. In addition, P-GOSPA retains the interpretability of GOSPA, such as decomposability into localization, missed and false detection errors, in a sound manner. Examples and simulations are presented to demonstrate the efficacy of P-GOSPA.
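
For reference, the standard (non-probabilistic) GOSPA metric that P-GOSPA generalizes can be computed with an optimal assignment. The sketch below assumes the common choice alpha = 2, a Euclidean base distance, and illustrative defaults c = 10 and p = 2; it uses SciPy's `linear_sum_assignment` and does not implement the multi-Bernoulli extension described in the abstract. The helper `gospa` is hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def gospa(X, Y, c=10.0, p=2.0):
    """GOSPA distance (alpha = 2) between two finite point sets.

    X and Y are arrays of shape (m, d) and (n, d). Distances are cut off at c,
    and every unassigned point costs c**p / 2 (a missed or false detection).
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    m, n = len(X), len(Y)
    unassigned_cost = c**p / 2 * abs(m - n)
    if m == 0 or n == 0:
        return unassigned_cost ** (1 / p)
    # Pairwise cut-off distances raised to the power p.
    dist = np.linalg.norm(X[:, None, :] - Y[None, :, :], axis=-1)
    cost = np.minimum(dist, c) ** p
    # A pair at distance >= c costs c**p, the same as leaving both points
    # unassigned, so assigning min(m, n) pairs loses nothing.
    rows, cols = linear_sum_assignment(cost)
    return (cost[rows, cols].sum() + unassigned_cost) ** (1 / p)


# One well-localized estimate plus one missed object (cost c**p / 2 = 50).
print(gospa([[0.0, 0.0], [5.0, 5.0]], [[0.0, 1.0]]))  # sqrt(51), about 7.141
```

The sum splits naturally into a localization part (the assigned pairs) and a missed/false part (the c**p / 2 terms), which is the decomposability the abstract refers to.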

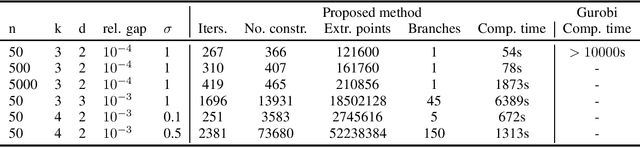

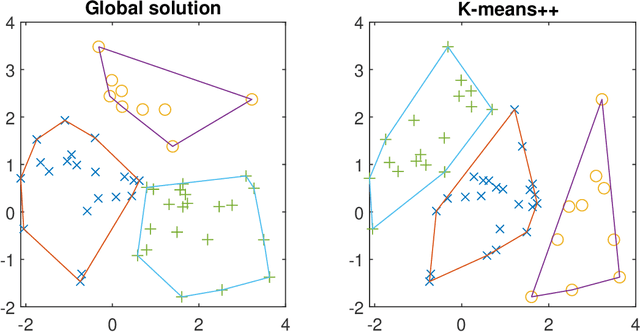

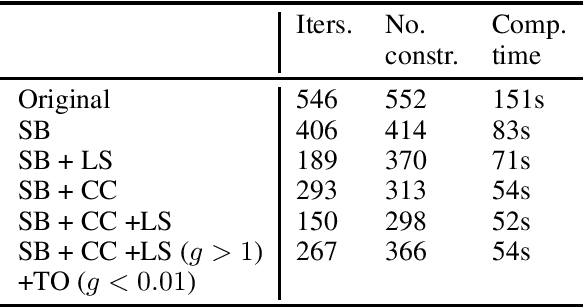

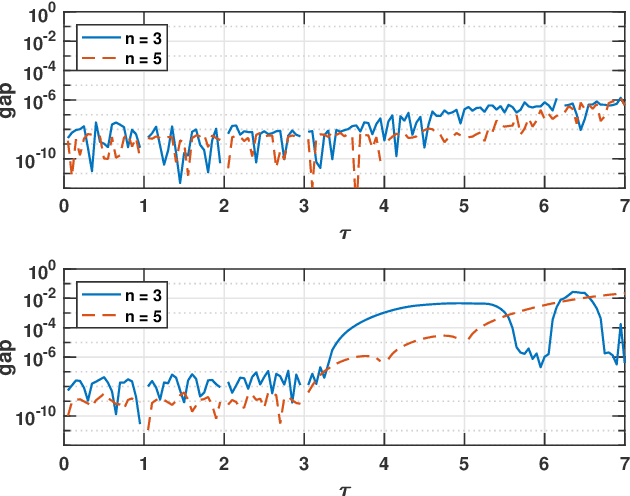

A cutting plane algorithm for globally solving low dimensional k-means clustering problems

Feb 21, 2024

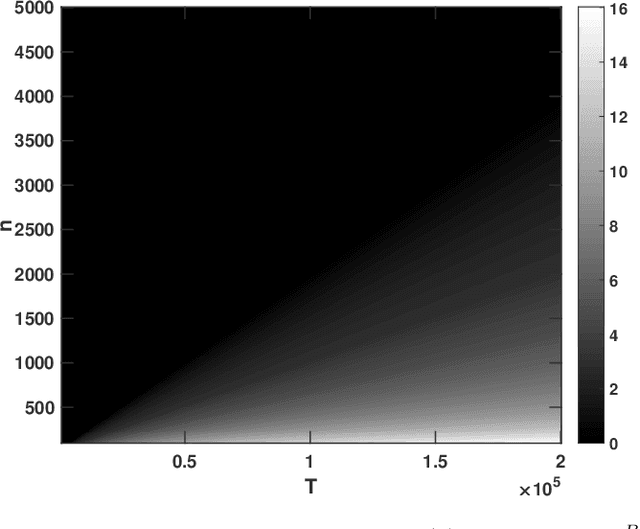

Abstract: Clustering is one of the most fundamental tools in data science and machine learning, and k-means clustering is one of the most common such methods. There is a variety of approximate algorithms for the k-means problem, but computing the globally optimal solution is in general NP-hard. In this paper we consider the k-means problem for instances with low dimensional data and formulate it as a structured concave assignment problem. This allows us to exploit the low dimensional structure and solve the problem to global optimality within reasonable time for large data sets with several clusters. The method builds on iteratively solving a small concave problem and a large linear programming problem. This gives a sequence of feasible solutions along with bounds, and we show that the resulting optimality gap converges to zero. The paper combines methods from global optimization theory to accelerate the procedure, and we provide numerical results on their performance.
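
The question of global optimality is easiest to see in one dimension, where optimal k-means clusters are known to be contiguous in sorted order, so an exact solution follows from dynamic programming. The sketch below covers only that classical special case; it is not the cutting-plane method of the paper, and it runs in O(k n^2) time. The function name `kmeans_1d_optimal` is hypothetical.

```python
import numpy as np


def kmeans_1d_optimal(x, k):
    """Exact k-means for 1-D data; returns (optimal SSE, sorted centers)."""
    x = np.sort(np.asarray(x, dtype=float))
    n = len(x)
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= len(x)")
    # Prefix sums give the within-cluster sum of squares of x[i:j] in O(1).
    s1 = np.concatenate(([0.0], np.cumsum(x)))
    s2 = np.concatenate(([0.0], np.cumsum(x * x)))

    def sse(i, j):
        total = s1[j] - s1[i]
        return (s2[j] - s2[i]) - total * total / (j - i)

    # cost[q, j] = best SSE when the first j sorted points form q clusters.
    cost = np.full((k + 1, n + 1), np.inf)
    split = np.zeros((k + 1, n + 1), dtype=int)
    cost[0, 0] = 0.0
    for q in range(1, k + 1):
        for j in range(q, n + 1):
            for i in range(q - 1, j):  # the last cluster is x[i:j]
                candidate = cost[q - 1, i] + sse(i, j)
                if candidate < cost[q, j]:
                    cost[q, j] = candidate
                    split[q, j] = i
    # Walk the split table backwards to recover the cluster boundaries.
    bounds, j = [], n
    for q in range(k, 0, -1):
        i = split[q, j]
        bounds.append((i, j))
        j = i
    centers = [float(x[i:j].mean()) for i, j in reversed(bounds)]
    return float(cost[k, n]), centers


print(kmeans_1d_optimal([10, 1, 12, 2, 11, 3], k=2))  # (4.0, [2.0, 11.0])
```

In higher dimensions the contiguity argument breaks down, which is where structured formulations such as the concave assignment problem above come in.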

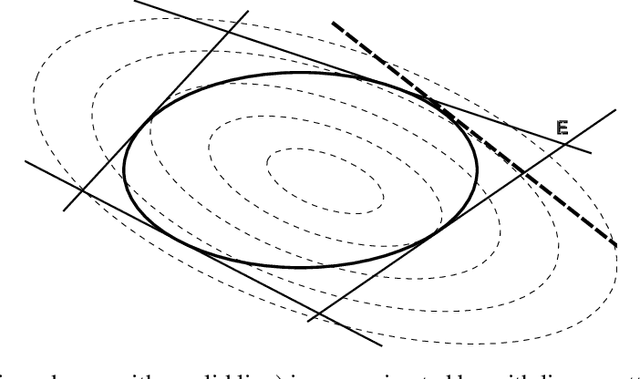

Globally solving the Gromov-Wasserstein problem for point clouds in low dimensional Euclidean spaces

Jul 18, 2023

Abstract: This paper presents a framework for computing the Gromov-Wasserstein problem between two sets of points in low dimensional spaces, where the discrepancy is the squared Euclidean norm. The Gromov-Wasserstein problem is a generalization of the optimal transport problem that finds the assignment between two sets preserving pairwise distances as much as possible. This can be used to quantify the similarity between two formations or shapes, a common problem in AI and machine learning. The problem can be formulated as a Quadratic Assignment Problem (QAP), which is in general computationally intractable even for small problems. Our framework addresses this challenge by reformulating the QAP as an optimization problem with a low-dimensional domain, leveraging the fact that the problem can be expressed as a concave quadratic optimization problem with low rank. The method scales well with the number of points, and it can be used to find the global solution for large-scale problems with thousands of points. We compare the computational complexity of our approach with state-of-the-art methods on synthetic problems and apply it to a near-symmetrical problem which is of particular interest in computational biology.
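
To make the objective concrete, the sketch below evaluates the Gromov-Wasserstein (QAP) objective for equal-size point clouds, assuming that pairwise squared Euclidean distances are compared with a squared loss, and minimizes it by enumerating all permutations. This brute force costs O(n!) and is only usable for a handful of points, which is the intractability the paper's low-rank concave reformulation is designed to avoid; it is not that method. The names `pairwise_sq_dists` and `gw_bruteforce` are hypothetical.

```python
import itertools

import numpy as np


def pairwise_sq_dists(P):
    diff = P[:, None, :] - P[None, :, :]
    return np.sum(diff**2, axis=-1)


def gw_bruteforce(X, Y):
    """Minimize sum_ij (|x_i - x_j|^2 - |y_s(i) - y_s(j)|^2)^2 over permutations s."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    if len(X) != len(Y):
        raise ValueError("point clouds must have the same size")
    DX, DY = pairwise_sq_dists(X), pairwise_sq_dists(Y)
    best_val, best_perm = np.inf, None
    for perm in itertools.permutations(range(len(X))):
        idx = np.array(perm)
        # DY[np.ix_(idx, idx)][i, j] is the squared distance between y_s(i) and y_s(j).
        val = float(np.sum((DX - DY[np.ix_(idx, idx)]) ** 2))
        if val < best_val:
            best_val, best_perm = val, perm
    return best_val, best_perm


# A rotated, translated and reordered copy of X has zero GW discrepancy.
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 1.0]])
Y = (X @ np.array([[0.0, 1.0], [-1.0, 0.0]]) + 5.0)[::-1]
print(gw_bruteforce(X, Y)[0])  # 0.0
```

Because only pairwise distances enter the objective, rigid motions of either cloud leave it unchanged, which is what makes the formulation suitable for comparing shapes.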

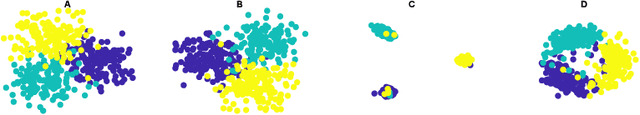

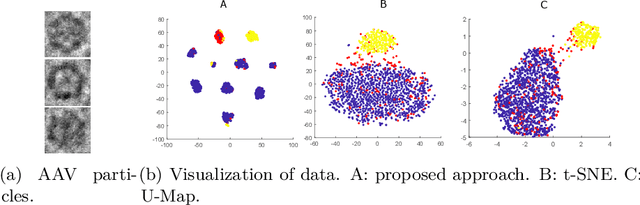

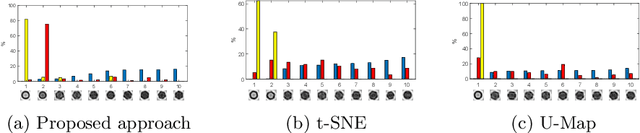

Orthogonalization of data via Gromov-Wasserstein type feedback for clustering and visualization

Jul 25, 2022

Abstract: In this paper we propose an adaptive approach for clustering and visualization of data by an orthogonalization process. Starting with the data points being represented by a Markov process using the diffusion map framework, the method adaptively increases the orthogonality of the clusters by applying a feedback mechanism inspired by the Gromov-Wasserstein distance. This mechanism iteratively increases the spectral gap and refines the orthogonality of the data to achieve a clustering with high specificity. By using the diffusion map framework and representing the relation between data points using transition probabilities, the method is robust with respect to the choice of underlying distance, noise in the data, and random initialization. We prove that the method converges globally to a unique fixed point for certain parameter values. We also propose a related approach where the transition probabilities in the Markov process are required to be doubly stochastic, in which case the method generates a minimizer of a nonconvex optimization problem. We apply the method to cryo-electron microscopy image data from biopharmaceutical manufacturing, where we can confirm biologically relevant insights related to therapeutic efficacy. We consider an example with morphological variations of gene packaging and confirm that the method produces biologically meaningful clustering results consistent with human expert classification.
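
The diffusion-map starting point described above can be sketched as follows: a Gaussian kernel on pairwise distances is row-normalized into a Markov transition matrix, whose leading eigenvalues reveal cluster structure through the spectral gap. The kernel width `eps` and the helper names are assumptions for illustration, and the Gromov-Wasserstein-type feedback step of the paper is not reproduced here.

```python
import numpy as np


def diffusion_transition_matrix(X, eps=1.0):
    """Row-stochastic Markov matrix P = D^{-1} K from a Gaussian kernel K."""
    X = np.asarray(X, dtype=float)
    d2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    K = np.exp(-d2 / eps)
    return K / K.sum(axis=1, keepdims=True)


def sorted_eigenvalues(P):
    # P is similar to the symmetric matrix D^{-1/2} K D^{-1/2}, so its
    # eigenvalues are real; tiny imaginary round-off is discarded.
    return np.sort(np.linalg.eigvals(P).real)[::-1]


# Two well-separated groups of three points each.
X = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [10.0, 0.0], [10.1, 0.0], [10.0, 0.1]]
ev = sorted_eigenvalues(diffusion_transition_matrix(X))
print(ev[:3])  # two eigenvalues near 1, then a large drop (the spectral gap)
```

With two clusters the transition matrix is nearly block diagonal, so the number of eigenvalues close to 1 matches the number of clusters; a feedback mechanism that widens this gap makes the clusters easier to separate.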

Quantifying and Computing Covariance Uncertainty

Oct 06, 2021

Abstract: In this work, we consider the problem of bounding the values of a covariance function corresponding to a continuous-time stationary stochastic process or signal. Specifically, for two signals whose covariance functions agree on a finite discrete set of time-lags, we consider the maximal possible discrepancy of the covariance functions for real-valued time-lags outside this discrete grid. Computing this uncertainty corresponds to solving an infinite dimensional non-convex problem. However, we herein prove that the maximal objective value may be bounded from above by a finite dimensional convex optimization problem, allowing for efficient computation by standard methods. Furthermore, we empirically observe that for the case of signals whose spectra are supported on an interval, this upper bound is sharp, i.e., provides an exact quantification of the covariance uncertainty.
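
A small example shows why covariance values between grid points can be genuinely uncertain when nothing restricts where the spectrum is supported: a sinusoid at frequency ω and one at 2π - ω have covariance functions cos(ωτ) and cos((2π - ω)τ), which coincide at every integer lag yet differ elsewhere. The frequency below is an arbitrary choice for illustration, and this is not the convex bound derived in the paper.

```python
import numpy as np

omega = 1.0  # arbitrary frequency in radians per unit lag


def r1(tau):
    return np.cos(omega * tau)


def r2(tau):
    # Aliased frequency: cos((2*pi - w) * k) == cos(w * k) for every integer k.
    return np.cos((2 * np.pi - omega) * tau)


integer_lags = np.arange(-5, 6)
print(np.allclose(r1(integer_lags), r2(integer_lags)))  # True
print(abs(r1(0.5) - r2(0.5)))  # 2*cos(0.5), about 1.755
```

At τ = 0.5 the second covariance equals cos(π - 0.5) = -cos(0.5), so the two functions agree on the grid but are as far apart as their signs allow halfway between grid points.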

On the complexity of the optimal transport problem with graph-structured cost

Oct 01, 2021

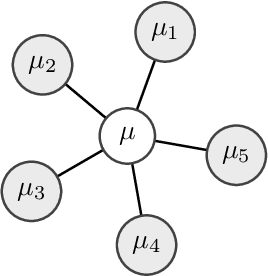

Abstract: Multi-marginal optimal transport (MOT) is a generalization of optimal transport to multiple marginals. Optimal transport has evolved into an important tool in many machine learning applications, and its multi-marginal extension opens up new ways to address challenges in the field. However, the use of MOT has been largely impeded by its computational complexity, which scales exponentially in the number of marginals. Fortunately, in many applications, such as barycenter or interpolation problems, the cost function has a graph structure, which has recently been exploited to develop efficient computational methods. In this work we derive computational bounds for these methods. With $m$ marginal distributions supported on $n$ points, we provide a $\tilde{\mathcal{O}}(d(G)\, m n^2 \epsilon^{-2})$ bound for $\epsilon$-accuracy when the problem is associated with a tree with diameter $d(G)$. For the special case of the Wasserstein barycenter problem, which corresponds to a star-shaped tree, our bound agrees with the existing complexity bound for that problem.
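
As a point of reference, the two-marginal building block of entropy-regularized transport is the Sinkhorn scaling iteration; multi-marginal and barycenter solvers for structured costs generalize these alternating scalings. The sketch below is only the classical two-marginal case, with an assumed regularization `eps` and iteration count; it does not reproduce the tree-structured algorithm or the complexity analysis of the paper.

```python
import numpy as np


def sinkhorn(mu, nu, C, eps=0.5, n_iter=500):
    """Entropy-regularized OT plan between histograms mu and nu with cost C."""
    K = np.exp(-C / eps)  # Gibbs kernel
    u = np.ones_like(mu)
    v = np.ones_like(nu)
    for _ in range(n_iter):
        u = mu / (K @ v)    # rescale rows to match mu
        v = nu / (K.T @ u)  # rescale columns to match nu
    return u[:, None] * K * v[None, :]


x = np.linspace(0.0, 1.0, 5)
C = (x[:, None] - x[None, :]) ** 2  # squared-distance ground cost
mu = np.full(5, 0.2)
nu = np.array([0.1, 0.1, 0.2, 0.3, 0.3])
P = sinkhorn(mu, nu, C)
print(P.round(3))
print("transport cost:", float(np.sum(P * C)))
```

Smaller values of `eps` bring the plan closer to the unregularized optimum but slow convergence, which is why accuracy-dependent factors such as the epsilon^{-2} term appear in complexity bounds of this kind.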

Mixed-Spectrum Signals -- Discrete Approximations and Variance Expressions for Covariance Estimates

Jun 28, 2021

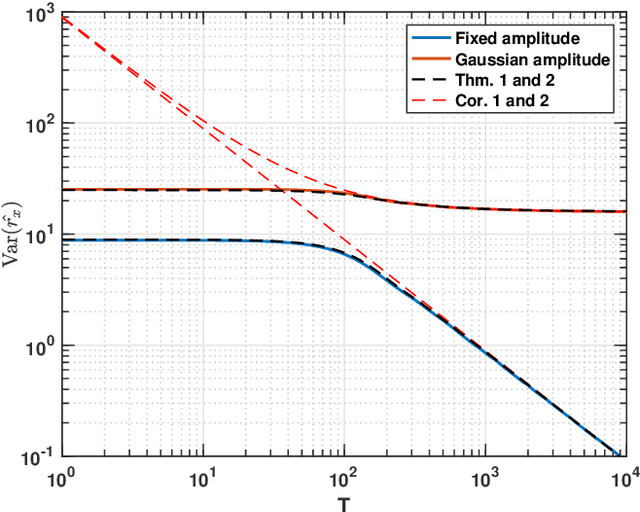

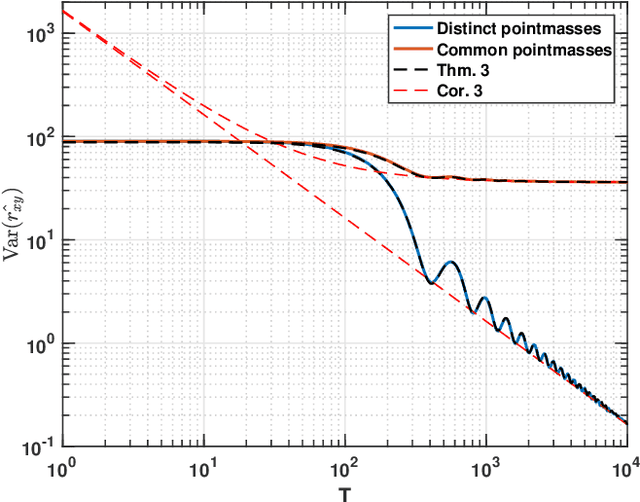

Abstract: The estimation of the covariance function of a stochastic process, or signal, is of integral importance for a multitude of signal processing applications. In this work, we derive closed-form expressions for the variance of covariance estimates for mixed-spectrum signals, i.e., signals whose spectra contain both absolutely continuous and singular parts. The results cover both finite-sample and asymptotic regimes, making it possible to assess the exact speed of convergence of estimates to their expectations, as well as their limiting behavior. As is shown, such covariance estimates may converge even for non-ergodic processes. Furthermore, we consider approximating signals with arbitrary spectral densities by sequences of singular-spectrum, i.e., sinusoidal, processes, and derive the limiting behavior of covariance estimates as both the sample size and the number of sinusoidal components tend to infinity. We show that the asymptotic variance can be described by a time-frequency resolution product, with dramatically different behavior depending on how the sinusoidal approximation is constructed. In a few numerical examples we illustrate the theory and the corresponding implications for direction-of-arrival estimation.
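
For concreteness, one common (biased) sample covariance estimator at lag τ is r̂(τ) = (1/N) Σ_{t=0}^{N-1-τ} x_{t+τ} conj(x_t). For a single complex sinusoid x_t = e^{i(ωt+φ)}, every product in the sum equals e^{iωτ}, so r̂(τ) = ((N - τ)/N) e^{iωτ} exactly, whatever the phase φ. The sketch checks this numerically; the estimator convention, the helper name `sample_covariance`, and the parameter values are assumptions for illustration and are not taken from the paper.

```python
import numpy as np


def sample_covariance(x, max_lag):
    """Biased estimate r_hat(tau) = (1/N) * sum_t x[t + tau] * conj(x[t])."""
    x = np.asarray(x, dtype=complex)
    N = len(x)
    return np.array([np.sum(x[tau:] * np.conj(x[:N - tau])) / N
                     for tau in range(max_lag + 1)])


rng = np.random.default_rng(0)
N, omega = 64, 0.7
phi = rng.uniform(0.0, 2.0 * np.pi)  # random phase
x = np.exp(1j * (omega * np.arange(N) + phi))

r_hat = sample_covariance(x, max_lag=10)
tau = np.arange(11)
expected = (N - tau) / N * np.exp(1j * omega * tau)
print(np.allclose(r_hat, expected))  # True
```

The factor (N - τ)/N is the finite-sample bias of this estimator; it vanishes as N grows for fixed τ, which is the kind of convergence behavior the closed-form variance expressions quantify in the general mixed-spectrum case.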

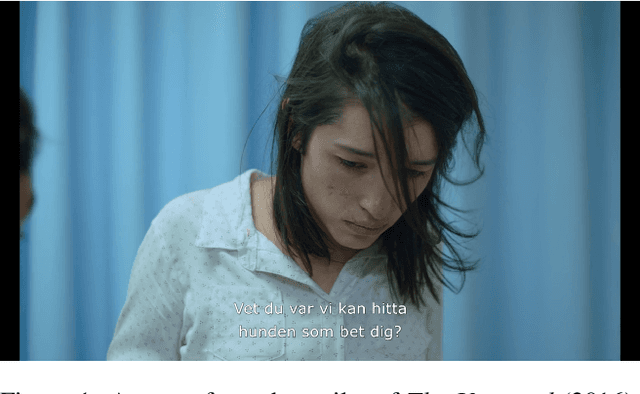

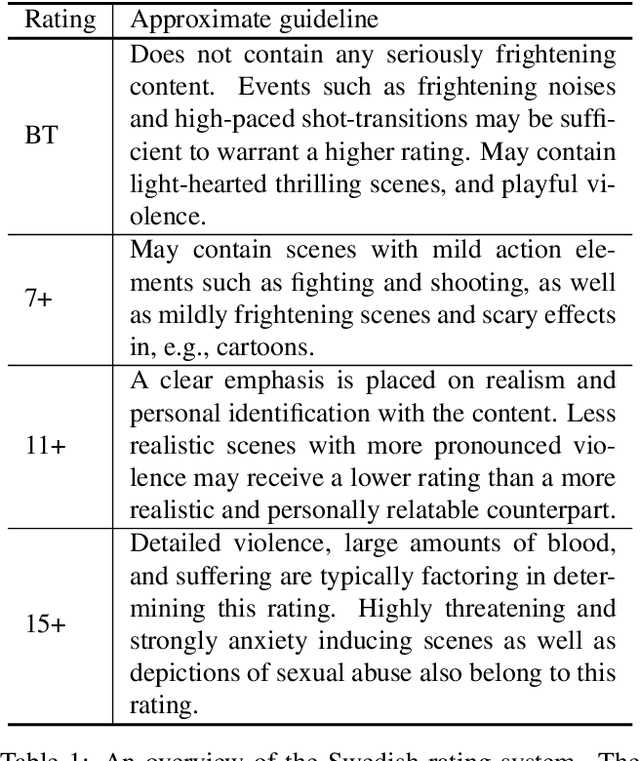

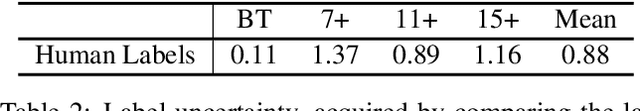

Is this Harmful? Learning to Predict Harmfulness Ratings from Video

Jun 15, 2021

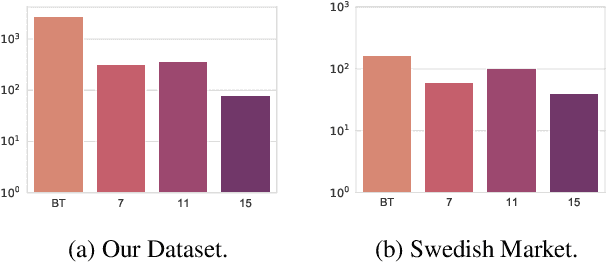

Abstract: Automatically identifying harmful content in video is an important task with a wide range of applications. However, due to the difficulty of collecting high-quality labels as well as demanding computational requirements, the task has not had a satisfactory general approach. Typically, only small subsets of the problem are considered, such as identifying violent content. In cases where the general problem is tackled, rough approximations and simplifications are made to deal with the lack of labels and the computational complexity. In this work, we identify and tackle the two main obstacles. First, we create a dataset of approximately 4000 video clips, annotated by professionals in the field. Second, we demonstrate that advances in video recognition enable training models on our dataset that consider the full context of the scene. We conduct an in-depth study of our modeling choices and find that we benefit greatly from combining the visual and audio modalities, and that pretraining on large-scale video recognition datasets and class-balanced sampling further improve performance. We additionally perform a qualitative study that reveals the heavily multi-modal nature of our dataset. Our dataset will be made available upon publication.
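
Class-balanced sampling, one of the ingredients the abstract credits with improving performance, is commonly implemented by drawing each example with probability inversely proportional to the size of its class. The sketch below shows that common scheme; the label names and helper functions are hypothetical, and the abstract does not specify the exact sampling procedure used in the paper.

```python
from collections import Counter

import numpy as np


def class_balanced_probs(labels):
    """Sampling probability per example so that every class has equal total mass."""
    counts = Counter(labels)
    n_classes = len(counts)
    return np.array([1.0 / (n_classes * counts[y]) for y in labels])


def balanced_batch(labels, size, rng):
    p = class_balanced_probs(labels)
    return rng.choice(len(labels), size=size, replace=True, p=p)


labels = ["benign"] * 8 + ["harmful"] * 2  # hypothetical, imbalanced labels
rng = np.random.default_rng(0)
idx = balanced_batch(labels, size=10_000, rng=rng)
frac_harmful = np.mean([labels[i] == "harmful" for i in idx])
print(frac_harmful)  # close to 0.5 even though only 20% of examples are harmful
```

Sampling with replacement means rare-class clips are revisited more often per epoch, which trades some risk of overfitting on the minority class for a less skewed training signal.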
