Amanda Berg

DisasterInsight: A Multimodal Benchmark for Function-Aware and Grounded Disaster Assessment

Jan 26, 2026

Abstract:Timely interpretation of satellite imagery is critical for disaster response, yet existing vision-language benchmarks for remote sensing largely focus on coarse labels and image-level recognition, overlooking the functional understanding and instruction robustness required in real humanitarian workflows. We introduce DisasterInsight, a multimodal benchmark designed to evaluate vision-language models (VLMs) on realistic disaster analysis tasks. DisasterInsight restructures the xBD dataset into approximately 112K building-centered instances and supports instruction-diverse evaluation across multiple tasks, including building-function classification, damage-level and disaster-type classification, counting, and structured report generation aligned with humanitarian assessment guidelines. To establish domain-adapted baselines, we propose DI-Chat, obtained by fine-tuning existing VLM backbones on disaster-specific instruction data using parameter-efficient Low-Rank Adaptation (LoRA). Extensive experiments on state-of-the-art generic and remote-sensing VLMs reveal substantial performance gaps across tasks, particularly in damage understanding and structured report generation. DI-Chat achieves significant improvements on damage-level and disaster-type classification as well as report generation quality, while building-function classification remains challenging for all evaluated models. DisasterInsight provides a unified benchmark for studying grounded multimodal reasoning in disaster imagery.
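
As a rough illustration of the Low-Rank Adaptation idea mentioned in the abstract, the sketch below freezes a weight matrix and adds a trainable low-rank update scaled by `alpha / rank`. It is a minimal NumPy sketch of the general LoRA formulation, not the DI-Chat training code; the class name `LoRALinear`, the layer sizes, and the values of `rank` and `alpha` are all hypothetical choices made for illustration.

```python
import numpy as np


class LoRALinear:
    """Frozen linear layer plus a trainable low-rank update (hypothetical helper)."""

    def __init__(self, weight, rank=4, alpha=8.0, seed=0):
        rng = np.random.default_rng(seed)
        out_dim, in_dim = weight.shape
        self.weight = weight  # frozen pretrained weight, shape (out_dim, in_dim)
        # Trainable factors: A gets a small random init, B starts at zero,
        # so the adapted layer initially matches the frozen layer exactly.
        self.A = rng.normal(0.0, 0.01, size=(rank, in_dim))
        self.B = np.zeros((out_dim, rank))
        self.scale = alpha / rank

    def __call__(self, x):
        base = x @ self.weight.T            # output of the frozen layer
        update = (x @ self.A.T) @ self.B.T  # low-rank correction, B @ A applied to x
        return base + self.scale * update

    def num_trainable(self):
        return self.A.size + self.B.size


# Toy sizes (assumed for illustration only).
rng = np.random.default_rng(1)
W = rng.normal(size=(64, 32))
layer = LoRALinear(W, rank=4)
x = rng.normal(size=(5, 32))
y = layer(x)
```

With these toy sizes the adapter trains `rank * (in_dim + out_dim)` values instead of the full `in_dim * out_dim` matrix, which is where the parameter efficiency comes from.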

Comparing Satellite Data for Next-Day Wildfire Predictability

Mar 11, 2025

Abstract:Multiple studies have performed next-day fire prediction using satellite imagery. Two main satellites are used to detect wildfires: MODIS and VIIRS. Both satellites provide fire mask products, called MOD14 and VNP14, respectively. Studies have used one or the other, but there has been no comparison between them to determine which might be more suitable for next-day fire prediction. In this paper, we first evaluate how well VIIRS and MODIS data can be used to forecast wildfire spread one day ahead. We find that the model using VIIRS as input and VNP14 as target achieves the best results. Interestingly, the model using MODIS as input and VNP14 as target performs significantly better than using VNP14 as input and MOD14 as target. Next, we discuss why MOD14 might be harder to use for predicting next-day fires. We find that the MOD14 fire mask is highly stochastic and does not correlate with reasonable fire spread patterns. This is detrimental for machine learning tasks, as the model learns irrational patterns. Therefore, we conclude that MOD14 is unsuitable for next-day fire prediction and that VNP14 is a much better option. However, using MODIS input and VNP14 as target, we achieve a significant improvement in predictability. This indicates that an improved fire detection model is possible for MODIS. The full code and dataset are available online: https://github.com/justuskarlsson/wildfire-mod14-vnp14

Exploring Seasonal Variability in the Context of Neural Radiance Fields for 3D Reconstruction on Satellite Imagery

Nov 05, 2024

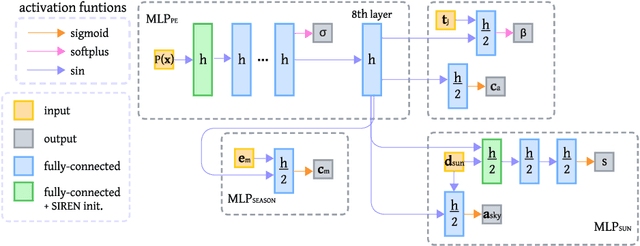

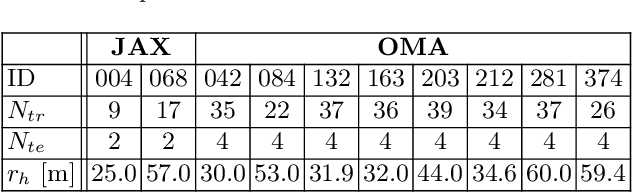

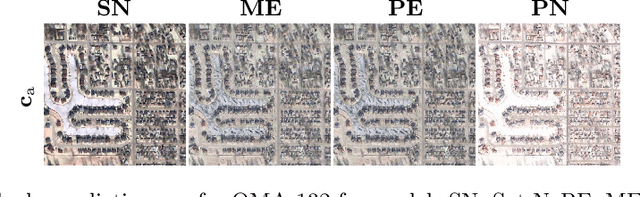

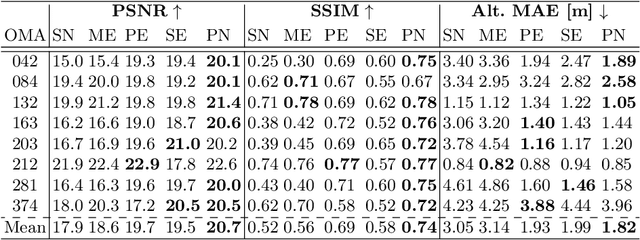

Abstract:In this work, the seasonal predictive capabilities of Neural Radiance Fields (NeRF) applied to satellite images are investigated. Focusing on the utilization of satellite data, the study explores how Sat-NeRF, a novel approach in computer vision, performs in predicting seasonal variations across different months. Through comprehensive analysis and visualization, the study examines the model's ability to capture and predict seasonal changes, highlighting specific challenges and strengths. Results showcase the impact of the sun direction on predictions, revealing nuanced details in seasonal transitions, such as snow cover, color accuracy, and texture representation in different landscapes. Given these results, we propose Planet-NeRF, an extension to Sat-NeRF capable of incorporating seasonal variability through a set of month embedding vectors. Comparative evaluations reveal that Planet-NeRF outperforms prior models in the case where seasonal changes are present. The extensive evaluation combined with the proposed method offers promising avenues for future research in this domain.

Sen2Fire: A Challenging Benchmark Dataset for Wildfire Detection using Sentinel Data

Mar 26, 2024

Abstract:Utilizing satellite imagery for wildfire detection presents substantial potential for practical applications. To advance the development of machine learning algorithms in this domain, our study introduces the Sen2Fire dataset, a challenging satellite remote sensing dataset tailored for wildfire detection. This dataset is curated from Sentinel-2 multi-spectral data and Sentinel-5P aerosol product, comprising a total of 2466 image patches. Each patch has a size of 512×512 pixels with 13 bands. Given the distinctive sensitivities of various wavebands to wildfire responses, our research focuses on optimizing wildfire detection by evaluating different wavebands and employing a combination of spectral indices, such as normalized burn ratio (NBR) and normalized difference vegetation index (NDVI). The results suggest that, in contrast to using all bands for wildfire detection, selecting specific band combinations yields superior performance. Additionally, our study underscores the positive impact of integrating Sentinel-5P aerosol data for wildfire detection. The code and dataset are available online (https://zenodo.org/records/10881058).
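
The two spectral indices named in the abstract are both normalized differences of band reflectances: NDVI uses near-infrared and red, NBR uses near-infrared and shortwave infrared. The sketch below computes them with NumPy using the standard definitions. For Sentinel-2 these bands are commonly taken as B8 (NIR), B4 (red), and B12 (SWIR), but how the bands are ordered inside a Sen2Fire patch is not stated here, so the function takes the band arrays directly. The helper names and the small `eps` guard against division by zero are assumptions for illustration.

```python
import numpy as np


def normalized_difference(a, b, eps=1e-6):
    """Return (a - b) / (a + b), with a small eps to avoid division by zero."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return (a - b) / (a + b + eps)


def ndvi(nir, red):
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    return normalized_difference(nir, red)


def nbr(nir, swir):
    """Normalized burn ratio: (NIR - SWIR) / (NIR + SWIR)."""
    return normalized_difference(nir, swir)


# Toy 2x2 reflectance patches (made-up values, not taken from the dataset).
nir = np.array([[0.5, 0.4], [0.3, 0.2]])
red = np.array([[0.1, 0.1], [0.2, 0.2]])
swir = np.array([[0.1, 0.2], [0.3, 0.4]])

ndvi_map = ndvi(nir, red)
nbr_map = nbr(nir, swir)
```

Both indices lie roughly in the range from -1 to 1. Healthy vegetation tends to give high NDVI, and burned areas tend to give low NBR, which is why they are natural candidate inputs alongside the raw bands.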

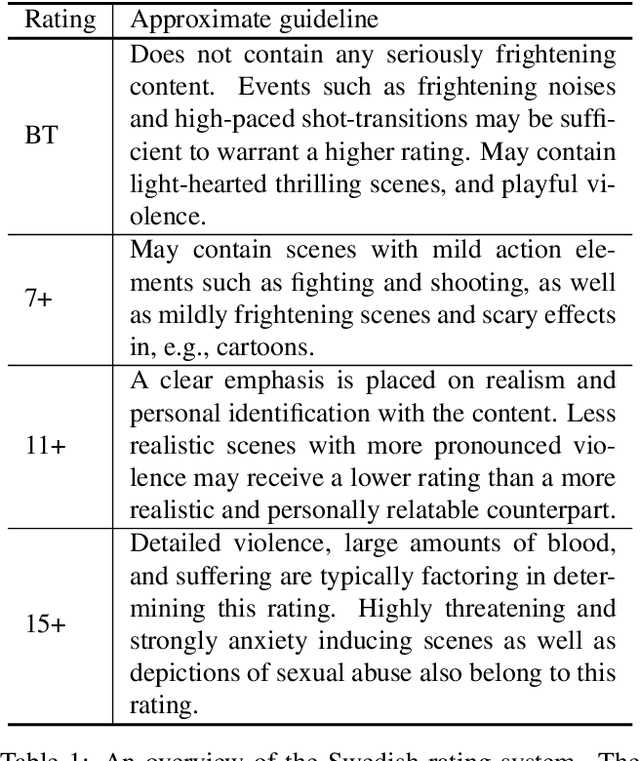

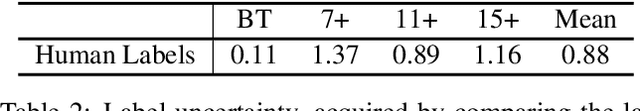

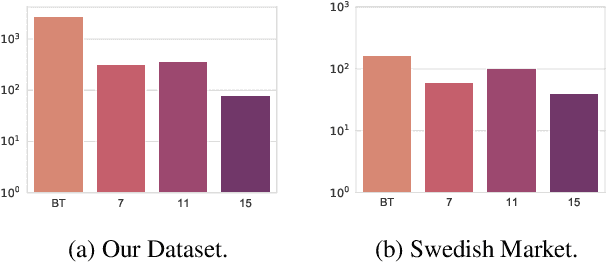

Is this Harmful? Learning to Predict Harmfulness Ratings from Video

Jun 15, 2021

Abstract:Automatically identifying harmful content in video is an important task with a wide range of applications. However, due to the difficulty of collecting high-quality labels as well as demanding computational requirements, the task has not had a satisfying general approach. Typically, only small subsets of the problem are considered, such as identifying violent content. In cases where the general problem is tackled, rough approximations and simplifications are made to deal with the lack of labels and computational complexity. In this work, we identify and tackle the two main obstacles. First, we create a dataset of approximately 4000 video clips, annotated by professionals in the field. Secondly, we demonstrate that advances in video recognition enable training models on our dataset that consider the full context of the scene. We conduct an in-depth study on our modeling choices and find that we greatly benefit from combining the visual and audio modality and that pretraining on large-scale video recognition datasets and class balanced sampling further improves performance. We additionally perform a qualitative study that reveals the heavily multi-modal nature of our dataset. Our dataset will be made available upon publication.

Unsupervised Learning of Anomaly Detection from Contaminated Image Data using Simultaneous Encoder Training

May 27, 2019

Abstract:Anomaly detection in high-dimensional data, such as images, is a challenging problem recently subject to intense research. Generative Adversarial Networks (GANs) have the ability to model the normal data distribution and, therefore, detect anomalies. Previously published GAN-based methods often assume that anomaly-free data is available for training. However, in real-life scenarios, this is not always the case. In this work, we examine the effects of contaminating training data with anomalies for state-of-the-art GAN-based anomaly detection methods. As expected, detection performance is reduced. To mitigate this problem, we propose to add an additional encoder network already at training time to adjust the structure of the latent space. As we show in our experiments, the distance in latent space from a query image to the origin is a highly significant cue to discriminate anomalies from normal data. The proposed method achieves state-of-the-art performance on CIFAR-10 as well as on a large new dataset with cell images.
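
The abstract describes the distance from a query image's latent code to the origin as a cue for separating anomalies from normal data. A minimal sketch of such a score is shown below. It assumes the latent codes have already been produced by some encoder (not shown), that a larger distance indicates a more anomalous sample, and that a fixed threshold is used for the decision. The function names, the direction of the score, and the threshold value are assumptions for illustration and are not taken from the paper.

```python
import numpy as np


def anomaly_scores(latents):
    """Euclidean distance of each latent code (one per row) to the origin."""
    latents = np.asarray(latents, dtype=np.float64)
    return np.linalg.norm(latents, axis=1)


def flag_anomalies(latents, threshold):
    """Flag samples whose score exceeds the threshold (assumed direction)."""
    return anomaly_scores(latents) > threshold


# Toy latent codes standing in for encoder outputs (hypothetical values).
codes = np.array([[0.0, 0.0], [3.0, 4.0], [0.3, -0.4]])
scores = anomaly_scores(codes)
flags = flag_anomalies(codes, threshold=1.0)
```

In practice the threshold would be chosen on held-out data, for example from a target false-positive rate.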
