Jörgen Ahlberg

BASE: Probably a Better Approach to Multi-Object Tracking

Sep 21, 2023

Abstract:The field of visual object tracking is dominated by methods that combine simple tracking algorithms and ad hoc schemes. Probabilistic tracking algorithms, which are leading in other fields, are surprisingly absent from the leaderboards. We found that accounting for distance in target kinematics, exploiting detector confidence and modelling non-uniform clutter characteristics is critical for a probabilistic tracker to work in visual tracking. Previous probabilistic methods fail to address most or all these aspects, which we believe is why they fall so far behind current state-of-the-art (SOTA) methods (there are no probabilistic trackers in the MOT17 top 100). To rekindle progress among probabilistic approaches, we propose a set of pragmatic models addressing these challenges, and demonstrate how they can be incorporated into a probabilistic framework. We present BASE (Bayesian Approximation Single-hypothesis Estimator), a simple, performant and easily extendible visual tracker, achieving state-of-the-art (SOTA) on MOT17 and MOT20, without using Re-Id. Code will be made available at https://github.com/ffi-no

Unsupervised Learning of Anomaly Detection from Contaminated Image Data using Simultaneous Encoder Training

May 27, 2019

Abstract:Anomaly detection in high-dimensional data, such as images, is a challenging problem recently subject to intense research. Generative Adversarial Networks (GANs) have the ability to model the normal data distribution and, therefore, detect anomalies. Previously published GAN-based methods often assume that anomaly-free data is available for training. However, in real-life scenarios, this is not always the case. In this work, we examine the effects of contaminating training data with anomalies for state-of-the-art GAN-based anomaly detection methods. As expected, detection performance is reduced. To mitigate this problem, we propose to add an additional encoder network already at training time to adjust the structure of the latent space. As we show in our experiments, the distance in latent space from a query image to the origin is a highly significant cue to discriminate anomalies from normal data. The proposed method achieves state-of-the-art performance on CIFAR-10 as well as on a large new dataset with cell images.
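The cue named in the abstract, the distance in latent space from a query image to the origin, can be illustrated with a minimal sketch. It assumes the latent codes have already been produced by some trained encoder. The function name `latent_norm_score` and the toy latent codes are hypothetical and are not taken from the paper.

```python
import math

def latent_norm_score(latent):
    """Euclidean distance from a latent code to the origin.

    Assumption for this sketch: a larger distance means "more anomalous".
    """
    return math.sqrt(sum(x * x for x in latent))

# Made-up latent codes: two close to the origin, one far away.
codes = [[0.1, -0.2], [0.0, 0.3], [3.0, 4.0]]
scores = [latent_norm_score(z) for z in codes]

# Rank the samples from most to least anomalous under this score.
ranking = sorted(range(len(codes)), key=lambda i: -scores[i])
```

In practice a threshold on the score would have to be chosen on held-out data. The sketch only shows how the score itself is computed.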

Memory-Efficient Global Refinement of Decision-Tree Ensembles and its Application to Face Alignment

Sep 03, 2018

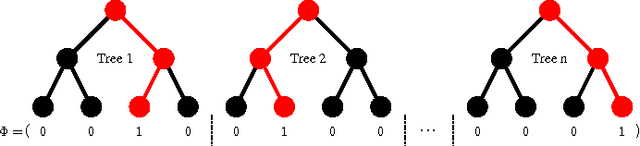

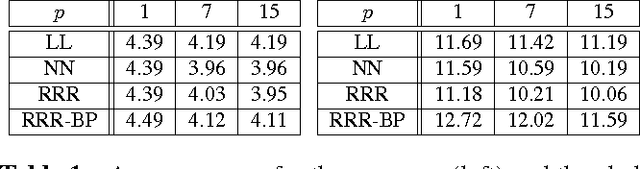

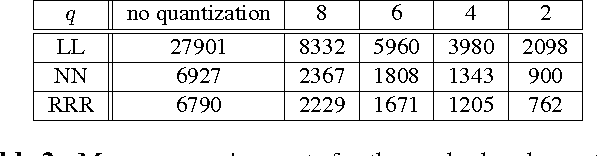

Abstract:Ren et al. recently introduced a method for aggregating multiple decision trees into a strong predictor by interpreting a path taken by a sample down each tree as a binary vector and performing linear regression on top of these vectors stacked together. They provided experimental evidence that the method offers advantages over the usual approaches for combining decision trees (random forests and boosting). The method truly shines when the regression target is a large vector with correlated dimensions, such as a 2D face shape represented with the positions of several facial landmarks. However, we argue that their basic method is not applicable in many practical scenarios due to large memory requirements. This paper shows how this issue can be solved through the use of quantization and architectural changes of the predictor that maps decision tree-derived encodings to the desired output.
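The encoding described in the abstract can be sketched as follows, assuming the simplest reading: each tree contributes a one-hot indicator of the leaf a sample reaches, the indicators are stacked, and a linear map is applied on top. The names `encode_leaves` and `predict` and the toy weights are hypothetical. The sketch does not include the quantization or architectural changes that the paper proposes.

```python
def encode_leaves(leaf_ids, leaves_per_tree):
    """Stack one one-hot leaf indicator per tree into a single binary vector."""
    vec = []
    for leaf, n_leaves in zip(leaf_ids, leaves_per_tree):
        one_hot = [0] * n_leaves
        one_hot[leaf] = 1
        vec.extend(one_hot)
    return vec

def predict(binary_vec, weights):
    """Linear map on a binary vector: sum the weight rows whose bit is set."""
    dim = len(weights[0])
    out = [0.0] * dim
    for bit, row in zip(binary_vec, weights):
        if bit:
            for j in range(dim):
                out[j] += row[j]
    return out

# Toy setup: two trees with 2 and 3 leaves, and a 2-dimensional target.
leaves_per_tree = [2, 3]
weights = [[1.0, 0.0], [0.0, 1.0],               # rows for tree 0
           [0.5, 0.5], [2.0, -1.0], [0.0, 0.0]]  # rows for tree 1

phi = encode_leaves([1, 0], leaves_per_tree)  # sample reaches leaf 1, then leaf 0
y = predict(phi, weights)

# One weight per (leaf, output dimension) pair.
n_weights = sum(leaves_per_tree) * len(weights[0])
```

The weight matrix has one row per leaf across all trees and one column per output dimension, so its size grows with the number of trees, their depth and the number of landmark coordinates. This is the memory cost the abstract refers to.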

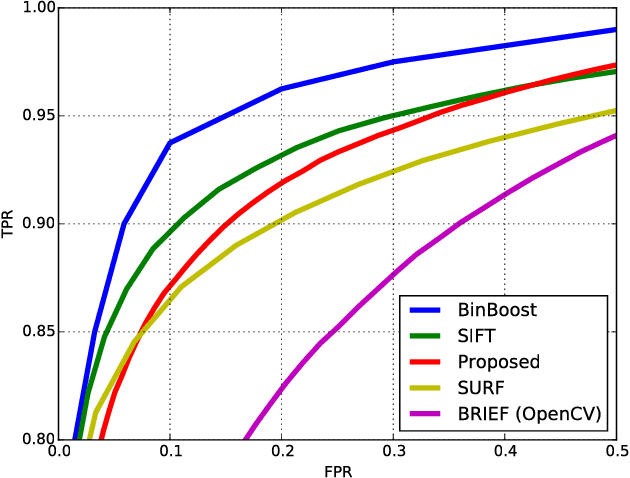

Learning Local Descriptors by Optimizing the Keypoint-Correspondence Criterion: Applications to Face Matching, Learning from Unlabeled Videos and 3D-Shape Retrieval

May 22, 2018

Abstract:Current best local descriptors are learned on a large dataset of matching and non-matching keypoint pairs. However, data of this kind is not always available since detailed keypoint correspondences can be hard to establish. On the other hand, we can often obtain labels for pairs of keypoint bags. For example, keypoint bags extracted from two images of the same object under different views form a matching pair, and keypoint bags extracted from images of different objects form a non-matching pair. On average, matching pairs should contain more corresponding keypoints than non-matching pairs. We describe an end-to-end differentiable architecture that enables the learning of local keypoint descriptors from such weakly-labeled data. Additionally, we discuss how to improve the method by incorporating the procedure of mining hard negatives. We also show how our approach can be used to learn convolutional features from unlabeled video signals and 3D models. Our implementation is available at https://github.com/nenadmarkus/wlrn

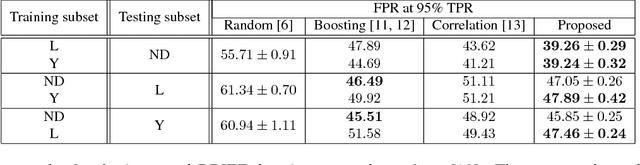

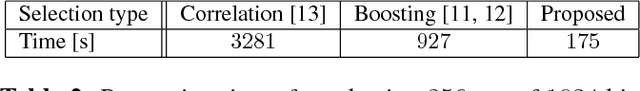

Constructing Binary Descriptors with a Stochastic Hill Climbing Search

Jul 16, 2015

Abstract:Binary descriptors of image patches provide processing speed advantages and require less storage than methods that encode the patch appearance with a vector of real numbers. We provide evidence that, despite its simplicity, a stochastic hill climbing bit selection procedure for descriptor construction defeats recently proposed alternatives on a standard discriminative power benchmark. The method is easy to implement and understand, has no free parameters that need fine tuning, and runs fast.
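A generic stochastic hill climbing search over bit selections might look like the sketch below. It is not the paper's exact procedure: the swap-based move, the acceptance rule, the `iterations` budget and the toy objective are all assumptions made for illustration, and `score` stands in for whatever discriminative-power measure is actually used.

```python
import random

def hill_climb_select(num_candidates, num_bits, score, iterations=5000, seed=0):
    """Pick `num_bits` of `num_candidates` binary tests by random swaps.

    A swap is kept only if the (hypothetical) `score` does not get worse.
    """
    rng = random.Random(seed)
    selected = rng.sample(range(num_candidates), num_bits)
    best = score(selected)
    for _ in range(iterations):
        pos = rng.randrange(num_bits)         # which selected bit to replace
        cand = rng.randrange(num_candidates)  # which candidate test to try
        if cand in selected:
            continue
        trial = list(selected)
        trial[pos] = cand
        trial_score = score(trial)
        if trial_score >= best:
            selected, best = trial, trial_score
    return selected, best

# Toy objective: candidate i has quality i, so the optimum is {6, 7, 8, 9}.
def toy_score(bits):
    return sum(bits)

bits, value = hill_climb_select(10, 4, toy_score)
```

With this toy objective every non-optimal selection has an improving swap, so the search cannot get stuck. A real objective would not offer that guarantee.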

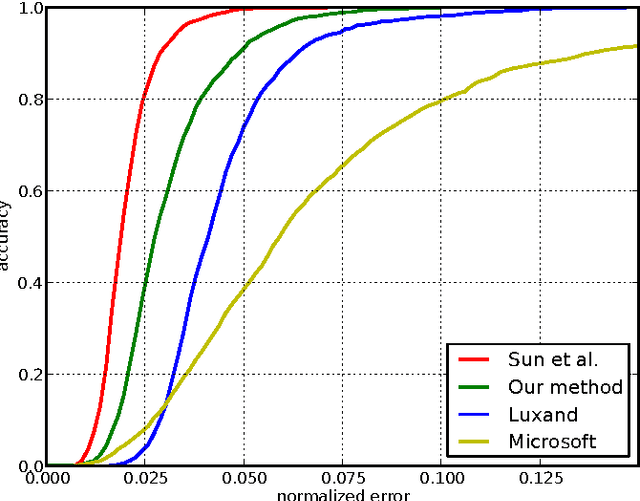

Fast Localization of Facial Landmark Points

Jan 20, 2015

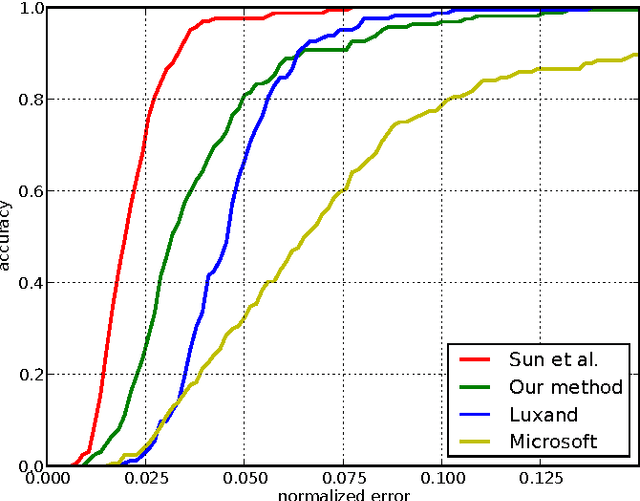

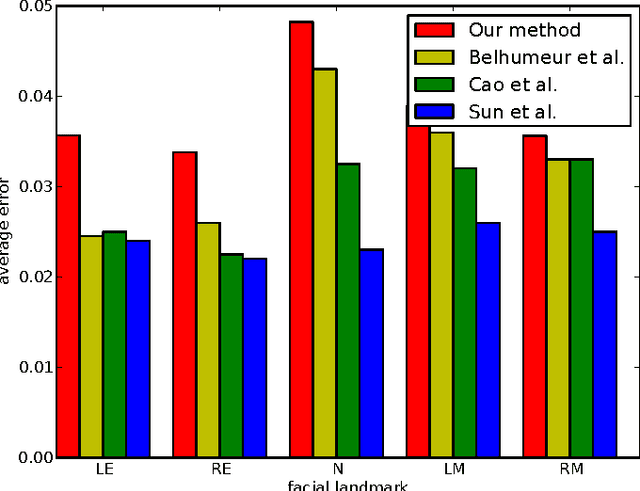

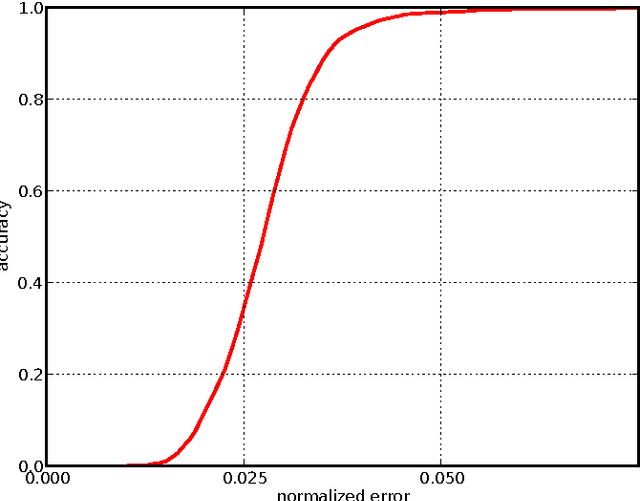

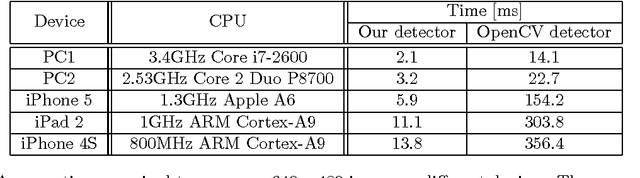

Abstract:Localization of salient facial landmark points, such as eye corners or the tip of the nose, is still considered a challenging computer vision problem despite recent efforts. This is especially evident in unconstrained environments, i.e., in the presence of background clutter and large head pose variations. Most methods that achieve state-of-the-art accuracy are slow, and, thus, have limited applications. We describe a method that can accurately estimate the positions of relevant facial landmarks in real-time even on hardware with limited processing power, such as mobile devices. This is achieved with a sequence of estimators based on ensembles of regression trees. The trees use simple pixel intensity comparisons in their internal nodes and this makes them able to process image regions very fast. We test the developed system on several publicly available datasets and analyse its processing speed on various devices. Experimental results show that our method has practical value.

Object Detection with Pixel Intensity Comparisons Organized in Decision Trees

Aug 19, 2014

Abstract:We describe a method for visual object detection based on an ensemble of optimized decision trees organized in a cascade of rejectors. The trees use pixel intensity comparisons in their internal nodes and this makes them able to process image regions very fast. Experimental analysis is provided through a face detection problem. The obtained results are encouraging and demonstrate that the method has practical value. Additionally, we analyse its sensitivity to noise and show how to perform fast rotation invariant object detection. Complete source code is provided at https://github.com/nenadmarkus/pico.
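The two ideas in the abstract, pixel intensity comparisons in the internal nodes and a cascade of rejectors, can be sketched as below. The data layout (complete trees stored in heap order, offsets relative to an anchor pixel) and all function names are assumptions made for illustration and do not reflect the layout used in the linked source code.

```python
def pixel_test(image, r, c, node):
    """Binary test: compare the intensities at two offsets from (r, c)."""
    (dr1, dc1), (dr2, dc2) = node
    return image[r + dr1][c + dc1] <= image[r + dr2][c + dc2]

def tree_output(image, r, c, nodes, leaves):
    """Walk a complete binary tree stored in heap order; return the leaf value."""
    idx = 0
    while idx < len(nodes):
        # Right child when the test passes, left child otherwise.
        idx = 2 * idx + (2 if pixel_test(image, r, c, nodes[idx]) else 1)
    return leaves[idx - len(nodes)]

def cascade_accepts(image, r, c, stages):
    """Add each stage's tree output; reject once the running score is too low."""
    score = 0.0
    for nodes, leaves, threshold in stages:
        score += tree_output(image, r, c, nodes, leaves)
        if score < threshold:
            return False  # early rejection: later stages are never evaluated
    return True

# Toy 3x3 image and two depth-1 stages (all values made up).
image = [[10, 200, 10],
         [10,  50, 10],
         [10,  10, 10]]
stage1 = ([((0, 0), (-1, 0))], [-1.0, 1.0], 0.0)
stage2 = ([((0, -1), (0, 1))], [0.5, -2.0], -0.5)

passes_first = cascade_accepts(image, 1, 1, [stage1])
passes_both = cascade_accepts(image, 1, 1, [stage1, stage2])
```

Each node costs only two memory reads and one comparison, and most candidate regions are rejected after a few stages.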
