Kristofer Bengtsson

Infrastructure-based Autonomous Mobile Robots for Internal Logistics -- Challenges and Future Perspectives

Dec 17, 2025

Abstract: The adoption of Autonomous Mobile Robots (AMRs) for internal logistics is accelerating, with most solutions emphasizing decentralized, onboard intelligence. While AMRs in indoor environments like factories can be supported by infrastructure, involving external sensors and computational resources, such systems remain underexplored in the literature. This paper presents a comprehensive overview of infrastructure-based AMR systems, outlining key opportunities and challenges. To support this, we introduce a reference architecture combining infrastructure-based sensing, on-premise cloud computing, and onboard autonomy. Based on the architecture, we review core technologies for localization, perception, and planning. We demonstrate the approach in a real-world deployment in a heavy-vehicle manufacturing environment and summarize findings from a user experience (UX) evaluation. Our aim is to provide a holistic foundation for future development of scalable, robust, and human-compatible AMR systems in complex industrial environments.

A Comparative Study of SMT and MILP for the Nurse Rostering Problem

May 15, 2025

Abstract: The effects of personnel scheduling on the quality of care and working conditions for healthcare personnel have been thoroughly documented. However, the ever-present demand and large variation of constraints make healthcare scheduling particularly challenging. This problem has been studied for decades, but little research has applied Satisfiability Modulo Theories (SMT) to it. SMT has gained momentum within the formal verification community in recent decades, leading to the advancement of SMT solvers that have been shown to outperform standard mathematical programming techniques. In this work, we propose generic constraint formulations that can model a wide range of real-world scheduling constraints. Then, the generic constraints are formulated as SMT and MILP problems and used to compare the respective state-of-the-art solvers, Z3 and Gurobi, on academic and real-world inspired rostering problems. Experimental results show how each solver excels for certain types of problems; the MILP solver generally performs better when the problem is highly constrained or infeasible, while the SMT solver performs better otherwise. On real-world inspired problems containing a more varied set of shifts and personnel, the SMT solver excels. Additionally, it was noted during experimentation that the SMT solver was more sensitive to the way the generic constraints were formulated, requiring careful consideration and experimentation to achieve better performance. We conclude that SMT-based methods present a promising avenue for future research within the domain of personnel scheduling.
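
To give a sense of what a rostering constraint looks like in each formalism, here is a sketch using two textbook constraints under assumed notation; it is not the paper's generic formulation. Let $x_{n,d,s} \in \{0,1\}$ indicate that nurse $n$ works shift $s$ on day $d$, and let $r_{d,s}$ be an assumed minimum staffing level. In MILP form, coverage and at most one shift per day can be written as:

$$\sum_{n} x_{n,d,s} \ge r_{d,s} \quad \forall d, s, \qquad \sum_{s} x_{n,d,s} \le 1 \quad \forall n, d.$$

In an SMT encoding, the same decisions could instead be declared as Boolean variables, with the two conditions stated as at-least-$r_{d,s}$ and at-most-one cardinality constraints over them. The abstract's remark about formulation sensitivity suggests that the choice among such equivalent encodings can matter for solver performance.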

MVUDA: Unsupervised Domain Adaptation for Multi-view Pedestrian Detection

Dec 05, 2024

Abstract: We address multi-view pedestrian detection in a setting where labeled data is collected using a multi-camera setup different from the one used for testing. While recent multi-view pedestrian detectors perform well on the camera rig used for training, their performance declines when applied to a different setup. To facilitate seamless deployment across varied camera rigs, we propose an unsupervised domain adaptation (UDA) method that adapts the model to new rigs without requiring additional labeled data. Specifically, we leverage the mean teacher self-training framework with a novel pseudo-labeling technique tailored to multi-view pedestrian detection. This method achieves state-of-the-art performance on multiple benchmarks, including MultiviewX$\rightarrow$Wildtrack. Unlike previous methods, our approach eliminates the need for external labeled monocular datasets, thereby reducing reliance on labeled data. Extensive evaluations demonstrate the effectiveness of our method and validate key design choices. By enabling robust adaptation across camera setups, our work enhances the practicality of multi-view pedestrian detectors and establishes a strong UDA baseline for future research.
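
The mean teacher framework mentioned in the abstract keeps a teacher model whose weights are an exponential moving average (EMA) of the student's weights, and trains the student on the teacher's pseudo-labels. The sketch below shows only that generic mechanism on plain Python dictionaries. The names `ema_update` and `confident_indices`, the decay `alpha`, and the score threshold are illustrative assumptions; the thresholding step is a generic placeholder and not the pseudo-labeling technique the paper proposes.

```python
def ema_update(teacher, student, alpha=0.99):
    """Return new teacher weights as an exponential moving average.

    teacher, student: dicts mapping parameter names to floats (assumed layout).
    alpha: assumed decay; values close to 1 make the teacher change slowly.
    """
    return {
        name: alpha * teacher[name] + (1.0 - alpha) * student[name]
        for name in teacher
    }


def confident_indices(scores, threshold=0.5):
    """Generic placeholder: keep indices of teacher detections whose score
    reaches the threshold, to be used as pseudo-labels for the student."""
    return [i for i, score in enumerate(scores) if score >= threshold]


if __name__ == "__main__":
    teacher = {"w": 1.0}
    student = {"w": 0.0}
    # One self-training step: the teacher drifts slightly toward the student.
    teacher = ema_update(teacher, student, alpha=0.9)
    print(teacher)
    print(confident_indices([0.2, 0.8, 0.5], threshold=0.5))
```

Because the teacher is an average over many student states, its predictions on unlabeled target data tend to be more stable than the student's own, which is the usual motivation for using it as the pseudo-label source.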

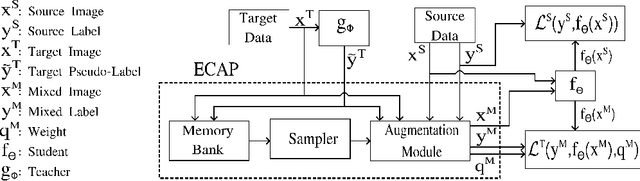

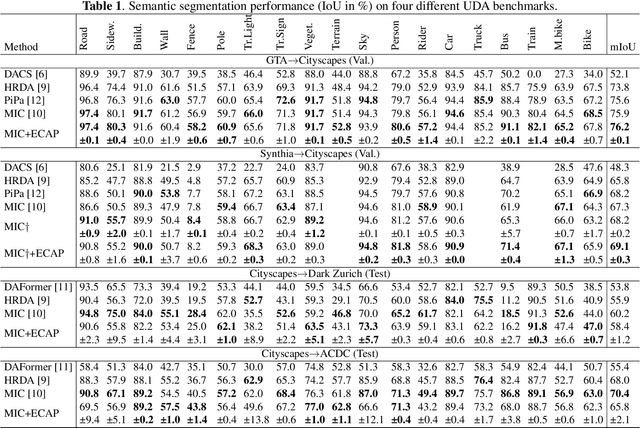

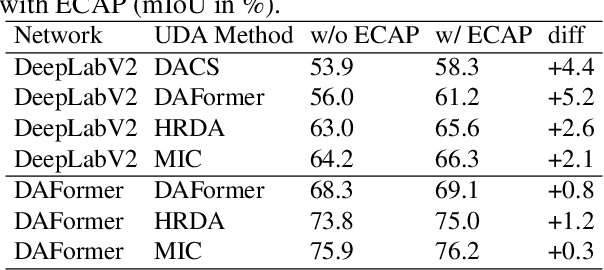

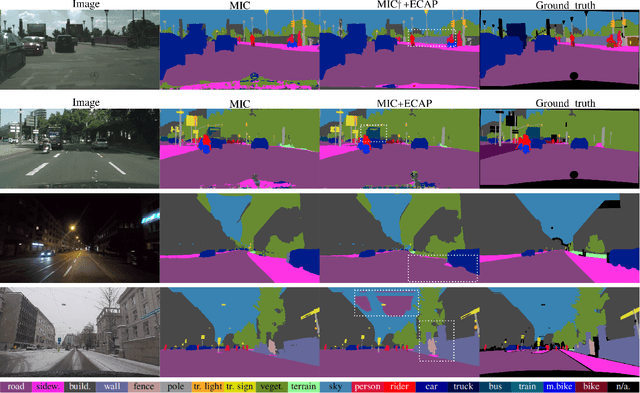

ECAP: Extensive Cut-and-Paste Augmentation for Unsupervised Domain Adaptive Semantic Segmentation

Mar 06, 2024

Abstract: We consider unsupervised domain adaptation (UDA) for semantic segmentation in which the model is trained on a labeled source dataset and adapted to an unlabeled target dataset. Unfortunately, current self-training methods are susceptible to misclassified pseudo-labels resulting from erroneous predictions. Since certain classes are typically associated with less reliable predictions in UDA, reducing the impact of such pseudo-labels without skewing the training towards some classes is notoriously difficult. To this end, we propose an extensive cut-and-paste strategy (ECAP) to leverage reliable pseudo-labels through data augmentation. Specifically, ECAP maintains a memory bank of pseudo-labeled target samples throughout training and cuts and pastes the most confident ones onto the current training batch. We implement ECAP on top of the recent method MIC and boost its performance on two synthetic-to-real domain adaptation benchmarks. Notably, MIC+ECAP reaches an unprecedented performance of 69.1 mIoU on the Synthia$\rightarrow$Cityscapes benchmark. Our code is available at https://github.com/ErikBrorsson/ECAP.
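
The memory-bank idea described above can be illustrated with a small, dependency-free sketch: keep the most confident pseudo-labeled samples seen so far, then paste the masked pixels and labels of a stored sample onto a training image. This is not the authors' implementation (see the linked repository for that). The class `MemoryBank`, the function `paste`, the fixed capacity, and the list-of-lists image layout are all hypothetical choices made for illustration.

```python
import heapq


class MemoryBank:
    """Keeps the `capacity` most confident pseudo-labeled samples (assumed policy)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._heap = []    # min-heap of (confidence, insertion order, sample)
        self._counter = 0  # tie-breaker so samples themselves are never compared

    def add(self, confidence, pixels, labels, mask):
        item = (confidence, self._counter, (pixels, labels, mask))
        self._counter += 1
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, item)
        else:
            # Insert the new item, then drop the least confident one.
            heapq.heappushpop(self._heap, item)

    def most_confident(self, k):
        return [sample for _, _, sample in heapq.nlargest(k, self._heap)]


def paste(image, target, sample):
    """Copy the masked pixels and pseudo-labels of `sample` onto copies of
    `image` and `target` (all 2D lists of equal shape)."""
    pixels, labels, mask = sample
    out_image = [row[:] for row in image]
    out_target = [row[:] for row in target]
    for r, mask_row in enumerate(mask):
        for c, keep in enumerate(mask_row):
            if keep:
                out_image[r][c] = pixels[r][c]
                out_target[r][c] = labels[r][c]
    return out_image, out_target


if __name__ == "__main__":
    bank = MemoryBank(capacity=2)
    bank.add(0.2, [[9, 9]], [[7, 7]], [[1, 0]])
    bank.add(0.9, [[5, 5]], [[3, 3]], [[0, 1]])
    bank.add(0.5, [[4, 4]], [[2, 2]], [[1, 1]])
    best = bank.most_confident(1)[0]
    print(paste([[0, 0]], [[0, 0]], best))
```

A min-heap keeps insertion cheap while always evicting the least confident stored sample; how confidence is actually measured and how samples are selected per class are details this sketch leaves open.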

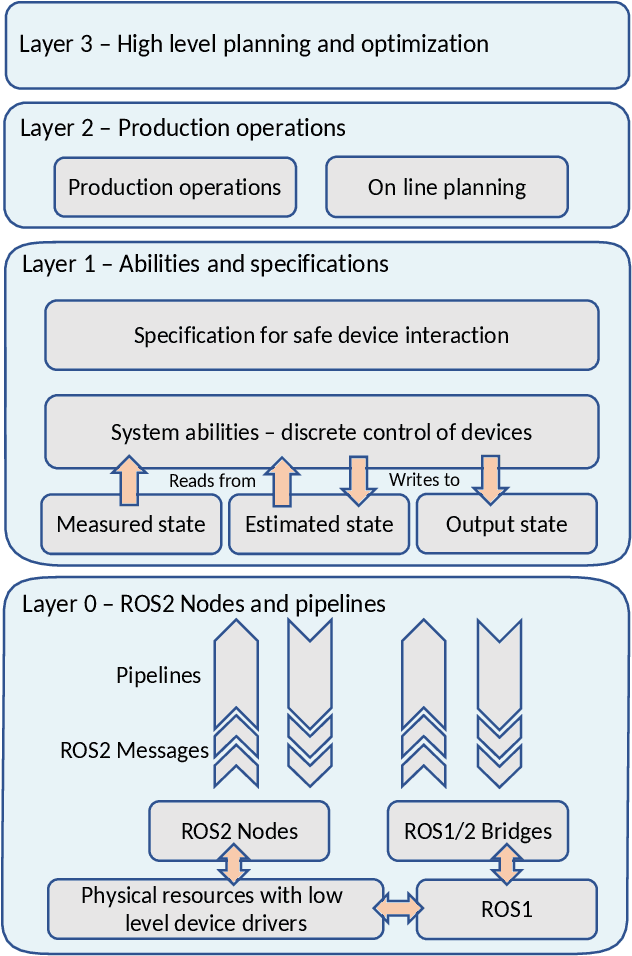

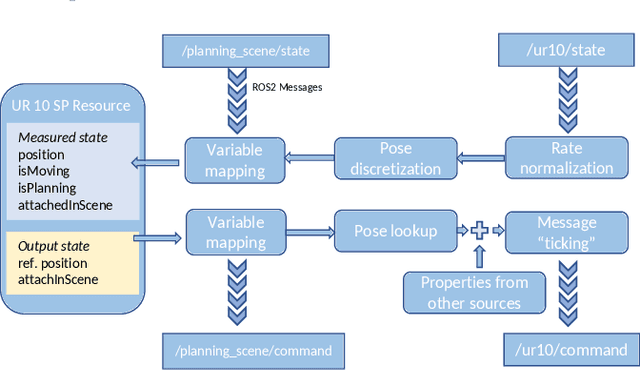

A ROS2 based communication architecture for control in collaborative and intelligent automation systems

May 23, 2019

Abstract: Collaborative robots are becoming part of intelligent automation systems in modern industry. Development and control of such systems differ from traditional automation methods and consequently lead to new challenges. Thankfully, the Robot Operating System (ROS) provides a communication platform and a vast variety of tools and utilities that can aid that development. However, it is hard to use ROS in large-scale automation systems due to communication issues in a distributed setup, hence the development of ROS2. In this paper, a ROS2 based communication architecture is presented together with an industrial use-case of a collaborative and intelligent automation system.

Sequence Planner - Automated Planning and Control for ROS2-based Collaborative and Intelligent Automation Systems

Mar 14, 2019

Abstract: Systems based on the Robot Operating System (ROS) are easy to extend with new on-line algorithms and devices. However, there is relatively little support for coordinating a large number of heterogeneous sub-systems. In this paper we propose an architecture to model and control collaborative and intelligent automation systems in a hierarchical fashion.
