Junsheng Fu

Driving with Context: Online Map Matching for Complex Roads Using Lane Markings and Scenario Recognition

May 08, 2025

Abstract: Accurate online map matching is fundamental to vehicle navigation and the activation of intelligent driving functions. Current online map matching methods are prone to errors in complex road networks, especially in multilevel road areas. To address this challenge, we propose an online Standard Definition (SD) map matching method by constructing a Hidden Markov Model (HMM) with multiple probability factors. Our proposed method can achieve accurate map matching even in complex road networks by carefully leveraging lane markings and scenario recognition in the design of the probability factors. First, the lane markings are generated by a multi-lane tracking method and associated with the SD map using the HMM to build an enriched SD map. In areas covered by the enriched SD map, the vehicle can re-localize itself by performing Iterative Closest Point (ICP) registration for the lane markings. Then, the probability factor accounting for the lane marking detection can be obtained using the association probability between adjacent lanes and roads. Second, the driving scenario recognition model is applied to generate the emission probability factor of scenario recognition, which improves the performance of map matching on elevated roads and ordinary urban roads underneath them. We validate our method through extensive road tests in Europe and China, and the experimental results show that our proposed method effectively improves the online map matching accuracy compared to other existing methods, especially in multilevel road areas. Specifically, the experiments show that our proposed method achieves $F_1$ scores of 98.04% and 94.60% on the Zenseact Open Dataset and test data of multilevel road areas in Shanghai, respectively, significantly outperforming benchmark methods. The implementation is available at https://github.com/TRV-Lab/LMSR-OMM.

NeuRadar: Neural Radiance Fields for Automotive Radar Point Clouds

Apr 01, 2025

Abstract: Radar is an important sensor for autonomous driving (AD) systems due to its robustness to adverse weather and different lighting conditions. Novel view synthesis using neural radiance fields (NeRFs) has recently received considerable attention in AD due to its potential to enable efficient testing and validation but remains unexplored for radar point clouds. In this paper, we present NeuRadar, a NeRF-based model that jointly generates radar point clouds, camera images, and lidar point clouds. We explore set-based object detection methods such as DETR, and propose an encoder-based solution grounded in the NeRF geometry for improved generalizability. We propose both a deterministic and a probabilistic point cloud representation to accurately model the radar behavior, with the latter being able to capture radar's stochastic behavior. We achieve realistic reconstruction results for two automotive datasets, establishing a baseline for NeRF-based radar point cloud simulation models. In addition, we release radar data for ZOD's Sequences and Drives to enable further research in this field. To encourage further development of radar NeRFs, we release the source code for NeuRadar.

GASP: Unifying Geometric and Semantic Self-Supervised Pre-training for Autonomous Driving

Mar 19, 2025

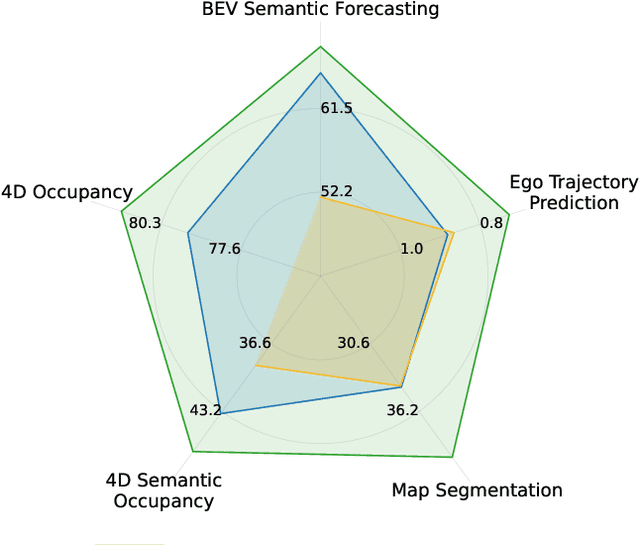

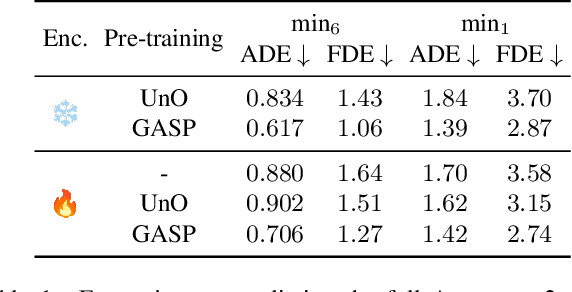

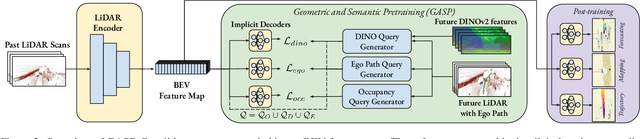

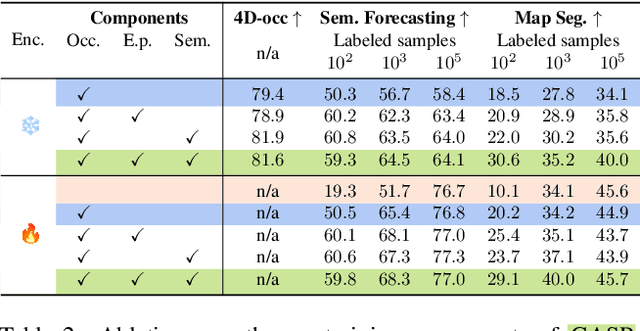

Abstract: Self-supervised pre-training based on next-token prediction has enabled large language models to capture the underlying structure of text, and has led to unprecedented performance on a large array of tasks when applied at scale. Similarly, autonomous driving generates vast amounts of spatiotemporal data, alluding to the possibility of harnessing scale to learn the underlying geometric and semantic structure of the environment and its evolution over time. In this direction, we propose a geometric and semantic self-supervised pre-training method, GASP, that learns a unified representation by predicting, at any queried future point in spacetime, (1) general occupancy, capturing the evolving structure of the 3D scene; (2) ego occupancy, modeling the ego vehicle path through the environment; and (3) distilled high-level features from a vision foundation model. By modeling geometric and semantic 4D occupancy fields instead of raw sensor measurements, the model learns a structured, generalizable representation of the environment and its evolution through time. We validate GASP on multiple autonomous driving benchmarks, demonstrating significant improvements in semantic occupancy forecasting, online mapping, and ego trajectory prediction. Our results demonstrate that continuous 4D geometric and semantic occupancy prediction provides a scalable and effective pre-training paradigm for autonomous driving. For code and additional visualizations, see https://research.zenseact.com/publications/gasp/.

Exploring Semi-Supervised Learning for Online Mapping

Oct 14, 2024

Abstract: Online mapping is important for scaling autonomous driving beyond well-defined areas. Training a model to produce a local map, including lane markers, road edges, and pedestrian crossings, using only onboard sensory information traditionally requires extensive labelled data, which is difficult and costly to obtain. This paper draws inspiration from semi-supervised learning techniques in other domains, demonstrating their applicability to online mapping. Additionally, we propose a simple yet effective method to exploit inherent attributes of online mapping to further enhance performance by fusing the teacher's pseudo-labels from multiple samples. The performance gap to using all labels is reduced from 29.6 to 3.4 mIoU on Argoverse, and from 12 to 3.4 mIoU on nuScenes, utilising only 10% of the labelled data. We also demonstrate strong performance in extrapolating to new cities outside those in the training data. Specifically, on the challenging nuScenes dataset, when adapting from Boston to Singapore, performance increases by 6.6 mIoU when unlabelled data from Singapore is included in training.

Towards Accurate Ego-lane Identification with Early Time Series Classification

May 27, 2024

Abstract: Accurate and timely determination of a vehicle's current lane within a map is a critical task in autonomous driving systems. This paper utilizes an Early Time Series Classification (ETSC) method to achieve precise and rapid ego-lane identification in real-world driving data. The method begins by assessing the similarities between map and lane markings perceived by the vehicle's camera using measurement model quality metrics. These metrics are then fed into a selected ETSC method, comprising a probabilistic classifier and a tailored trigger function, optimized via multi-objective optimization to strike a balance between early prediction and accuracy. Our solution has been evaluated on a comprehensive dataset consisting of 114 hours of real-world traffic data, collected across 5 different countries by our test vehicles. Results show that by leveraging road lane-marking geometry and lane-marking type derived solely from a camera, our solution achieves an impressive accuracy of 99.6%, with an average prediction time of only 0.84 seconds.

Bayesian Simultaneous Localization and Multi-Lane Tracking Using Onboard Sensors and a SD Map

May 07, 2024

Abstract: High-definition maps with accurate lane-level information are crucial for autonomous driving, but the creation of these maps is a resource-intensive process. To this end, we present a cost-effective solution to create lane-level roadmaps using only the global navigation satellite system (GNSS) and a camera on customer vehicles. Our proposed solution utilizes a prior standard-definition (SD) map, GNSS measurements, visual odometry, and lane marking edge detection points to simultaneously estimate the vehicle's 6D pose, its position within an SD map, and the 3D geometry of traffic lines. This is achieved using a Bayesian simultaneous localization and multi-object tracking filter, where the estimation of traffic lines is formulated as a multiple extended object tracking problem, solved using a trajectory Poisson multi-Bernoulli mixture (TPMBM) filter. In TPMBM filtering, traffic lines are modeled using B-spline trajectories, and each trajectory is parameterized by a sequence of control points. The proposed solution has been evaluated using experimental data collected by a test vehicle driving on a highway. Preliminary results show that the traffic line estimates, overlaid on the satellite image, generally align with the lane markings up to some lateral offsets.

Localization Is All You Evaluate: Data Leakage in Online Mapping Datasets and How to Fix It

Dec 11, 2023

Abstract: Data leakage is a critical issue when training and evaluating any method based on supervised learning. The state-of-the-art methods for online mapping are based on supervised learning and are trained predominantly using two datasets: nuScenes and Argoverse 2. These datasets revisit the same geographic locations across training, validation, and test sets. Specifically, over 80% of nuScenes and 40% of Argoverse 2 validation and test samples are located less than 5 m from a training sample. This allows methods to localize within a memorized implicit map during testing and leads to inflated performance numbers being reported. To reveal the true performance in unseen environments, we introduce geographical splits of the data. Experimental results show significantly lower performance numbers, for some methods dropping by more than 45 mAP, when retraining and reevaluating existing online mapping models with the proposed split. Additionally, a reassessment of prior design choices reveals diverging conclusions from those based on the original split. Notably, the impact of the lifting method and the support from auxiliary tasks (e.g., depth supervision) on performance appears less substantial or follows a different trajectory than previously perceived. Geographical splits can be found at https://github.com/LiljaAdam/geographical-splits
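
The proximity statistic quoted in this abstract (the share of validation and test samples lying less than 5 m from a training sample) can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `fraction_near_training`, the planar (x, y) coordinates in metres, and the toy positions are all assumptions made for illustration, and a brute-force search stands in for whatever spatial index a real dataset would need.

```python
import math


def fraction_near_training(train_xy, eval_xy, threshold_m=5.0):
    """Fraction of evaluation positions closer than threshold_m to any training position.

    Hypothetical helper: positions are assumed to be planar (x, y) tuples in metres.
    """
    if not eval_xy:
        return 0.0
    near = 0
    for ex, ey in eval_xy:
        # Brute-force nearest-neighbour check; fine for a toy example only.
        if any(math.hypot(ex - tx, ey - ty) < threshold_m for tx, ty in train_xy):
            near += 1
    return near / len(eval_xy)


# Toy positions (assumed, not taken from nuScenes or Argoverse 2).
train = [(0.0, 0.0), (100.0, 0.0)]
val = [(3.0, 0.0), (50.0, 0.0), (100.0, 4.0), (200.0, 200.0)]
leak = fraction_near_training(train, val)
print(f"{leak:.0%} of validation samples lie within 5 m of a training sample")
```

A high value from a check of this kind suggests that the evaluation set overlaps geographically with the training set, which is the condition the geographical splits are meant to remove.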

Zenseact Open Dataset: A large-scale and diverse multimodal dataset for autonomous driving

May 03, 2023

Abstract: Existing datasets for autonomous driving (AD) often lack diversity and long-range capabilities, focusing instead on 360° perception and temporal reasoning. To address this gap, we introduce Zenseact Open Dataset (ZOD), a large-scale and diverse multimodal dataset collected over two years in various European countries, covering an area 9x that of existing datasets. ZOD boasts the highest range and resolution sensors among comparable datasets, coupled with detailed keyframe annotations for 2D and 3D objects (up to 245m), road instance/semantic segmentation, traffic sign recognition, and road classification. We believe that this unique combination will facilitate breakthroughs in long-range perception and multi-task learning. The dataset is composed of Frames, Sequences, and Drives, designed to encompass both data diversity and support for spatio-temporal learning, sensor fusion, localization, and mapping. Frames consist of 100k curated camera images with two seconds of other supporting sensor data, while the 1473 Sequences and 29 Drives include the entire sensor suite for 20 seconds and a few minutes, respectively. ZOD is the only large-scale AD dataset released under a permissive license, allowing for both research and commercial use. The dataset is accompanied by an extensive development kit. Data and more information are available online (https://zod.zenseact.com).

Performance Analysis and Robustification of Single-query 6-DoF Camera Pose Estimation

Aug 17, 2018

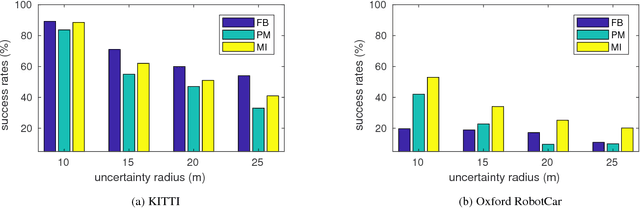

Abstract: We consider single-query 6-DoF camera pose estimation with reference images and a point cloud, i.e. the problem of estimating the position and orientation of a camera by using reference images and a point cloud. In this work, we perform a systematic comparison of three state-of-the-art strategies for 6-DoF camera pose estimation, i.e. feature-based, photometric-based and mutual-information-based approaches. The performance of the studied methods is evaluated on two standard datasets in terms of success rate, translation error and max orientation error. Building on the results analysis, we propose a hybrid approach that combines feature-based and mutual-information-based pose estimation methods, since they provide complementary properties for pose estimation. Experiments show that (1) in cases with large environmental variance, the hybrid approach outperforms feature-based and mutual-information-based approaches by an average of 25.1% and 5.8% in terms of success rate, respectively; (2) in cases where query and reference images are captured under similar imaging conditions, the hybrid approach performs similarly to the feature-based approach, but outperforms both photometric-based and mutual-information-based approaches by a clear margin; (3) the feature-based approach is consistently more accurate than mutual-information-based and photometric-based approaches when at least 4 consistent matching points are found between the query and reference images.
