Konstantinos Tserpes

FedGreed: A Byzantine-Robust Loss-Based Aggregation Method for Federated Learning

Aug 25, 2025

Abstract:Federated Learning (FL) enables collaborative model training across multiple clients while preserving data privacy by keeping local datasets on-device. In this work, we address FL settings where clients may behave adversarially, exhibiting Byzantine attacks, while the central server is trusted and equipped with a reference dataset. We propose FedGreed, a resilient aggregation strategy for federated learning that does not require any assumptions about the fraction of adversarial participants. FedGreed orders clients' local model updates based on their loss metrics evaluated against a trusted dataset on the server and greedily selects a subset of clients whose models exhibit the minimal evaluation loss. Unlike many existing approaches, our method is designed to operate reliably under heterogeneous (non-IID) data distributions, which are prevalent in real-world deployments. FedGreed exhibits convergence guarantees and bounded optimality gaps under strong adversarial behavior. Experimental evaluations on MNIST, FMNIST, and CIFAR-10 demonstrate that our method significantly outperforms standard and robust federated learning baselines, such as Mean, Trimmed Mean, Median, Krum, and Multi-Krum, in the majority of adversarial scenarios considered, including label flipping and Gaussian noise injection attacks. All experiments were conducted using the Flower federated learning framework.
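The loss-ordered, greedy selection described in the abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the function name `greedy_loss_aggregate`, the flat NumPy weight vectors, the plain averaging, and in particular the stopping rule (stop as soon as adding the next client raises the server-side loss) are all assumptions made here for illustration, since the abstract does not specify how the subset size is chosen.

```python
import numpy as np


def greedy_loss_aggregate(client_weights, server_loss):
    """Average a greedily chosen, low-loss subset of client models.

    client_weights: list of 1-D NumPy arrays (flattened model parameters).
    server_loss: callable mapping a weight vector to its loss on the
        server's trusted dataset (hypothetical interface).
    Returns the aggregated weights and the indices of the selected clients.
    """
    # Rank clients by the loss of their model on the trusted server data.
    losses = [server_loss(w) for w in client_weights]
    order = np.argsort(losses, kind="stable")

    # Start from the single best client.
    selected = [int(order[0])]
    best_avg = client_weights[selected[0]].copy()
    best_loss = losses[selected[0]]

    # Grow the selection in order of increasing loss.
    for idx in order[1:]:
        candidate = selected + [int(idx)]
        avg = np.mean([client_weights[i] for i in candidate], axis=0)
        loss = server_loss(avg)
        # Assumed stopping rule: stop once the averaged model gets worse.
        if loss > best_loss:
            break
        selected, best_avg, best_loss = candidate, avg, loss

    return best_avg, selected


if __name__ == "__main__":
    # Toy setup: the "trusted dataset" is summarized by a target vector.
    target = np.array([1.0, 1.0])
    toy_loss = lambda w: float(np.sum((w - target) ** 2))
    updates = [
        np.array([1.1, 0.9]),     # honest
        np.array([0.9, 1.1]),     # honest
        np.array([10.0, -10.0]),  # Byzantine
    ]
    aggregated, chosen = greedy_loss_aggregate(updates, toy_loss)
    print("selected clients:", chosen)
    print("aggregated weights:", aggregated)
```

Because the ranking uses only the server-side loss, a sketch like this needs no estimate of how many clients are adversarial, which is the property the abstract emphasizes.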

Robust Federated Learning under Adversarial Attacks via Loss-Based Client Clustering

Aug 18, 2025

Abstract:Federated Learning (FL) enables collaborative model training across multiple clients without sharing private data. We consider FL scenarios wherein FL clients are subject to adversarial (Byzantine) attacks, while the FL server is trusted (honest) and has a trustworthy side dataset. This may correspond to, e.g., cases where the server possesses trusted data prior to federation, or to the presence of a trusted client that temporarily assumes the server role. Our approach requires only two honest participants, i.e., the server and one client, to function effectively, without prior knowledge of the number of malicious clients. Theoretical analysis demonstrates bounded optimality gaps even under strong Byzantine attacks. Experimental results show that our algorithm significantly outperforms standard and robust FL baselines such as Mean, Trimmed Mean, Median, Krum, and Multi-Krum under various attack strategies including label flipping, sign flipping, and Gaussian noise addition across MNIST, FMNIST, and CIFAR-10 benchmarks using the Flower framework.

WEST GCN-LSTM: Weighted Stacked Spatio-Temporal Graph Neural Networks for Regional Traffic Forecasting

May 01, 2024

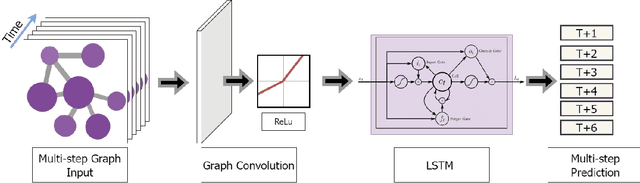

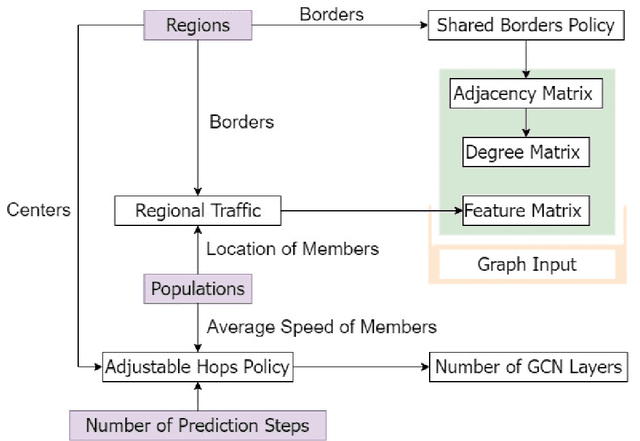

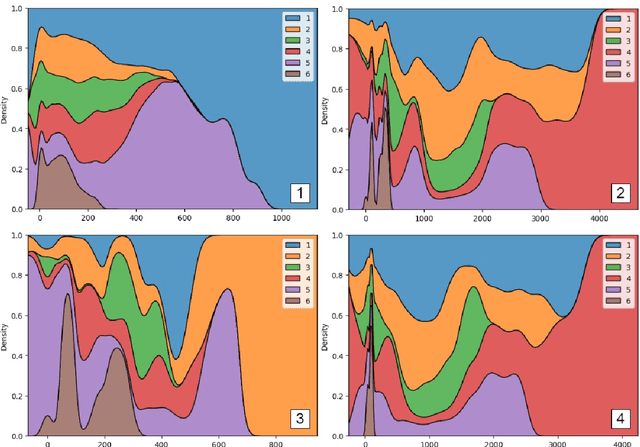

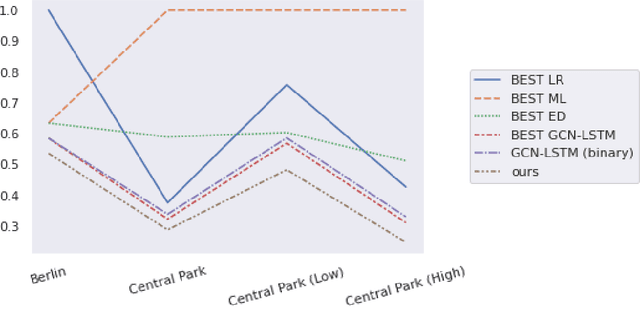

Abstract:Regional traffic forecasting is a critical challenge in urban mobility, with applications to various fields such as the Internet of Everything. In recent years, spatio-temporal graph neural networks have achieved state-of-the-art results in the context of numerous traffic forecasting challenges. This work aims at expanding upon the conventional spatio-temporal graph neural network architectures in a manner that may facilitate the inclusion of information regarding the examined regions, as well as the populations that traverse them, in order to establish a more efficient prediction model. The end-product of this scientific endeavour is a novel spatio-temporal graph neural network architecture that is referred to as WEST (WEighted STacked) GCN-LSTM. Furthermore, the inclusion of the aforementioned information is conducted via the use of two novel dedicated algorithms that are referred to as the Shared Borders Policy and the Adjustable Hops Policy. Through information fusion and distillation, the proposed solution manages to significantly outperform its competitors in the frame of an experimental evaluation that consists of 19 forecasting models, across several datasets. Finally, an additional ablation study determined that each of the components of the proposed solution contributes towards enhancing its overall performance.

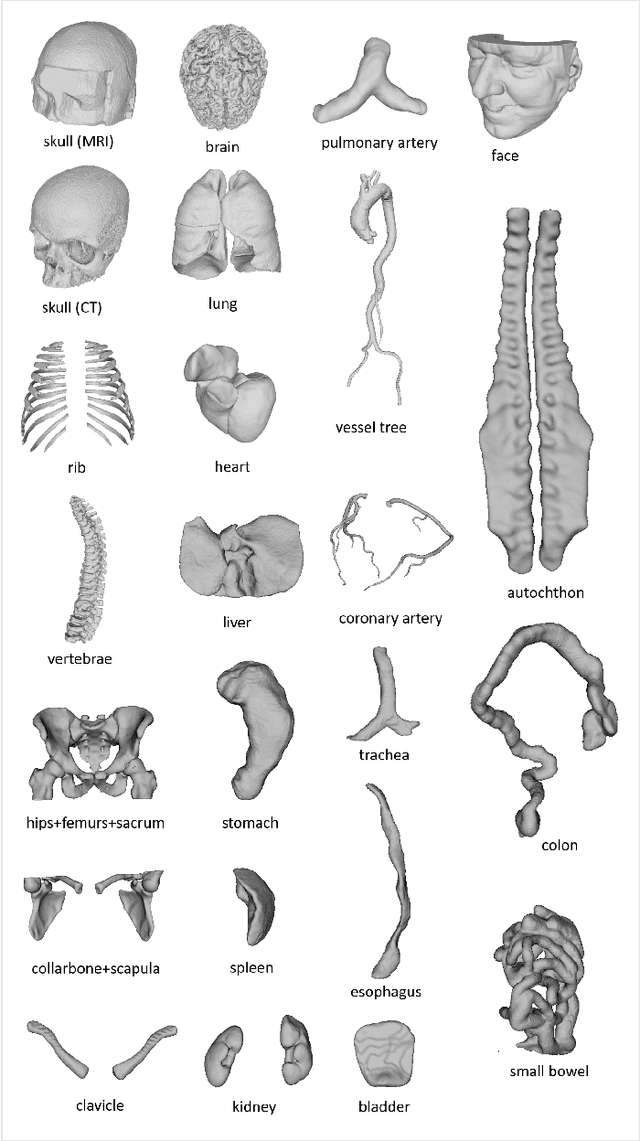

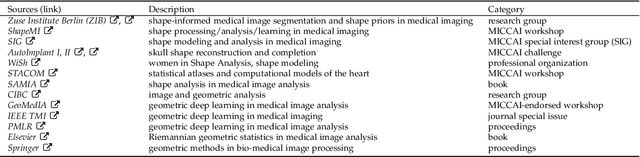

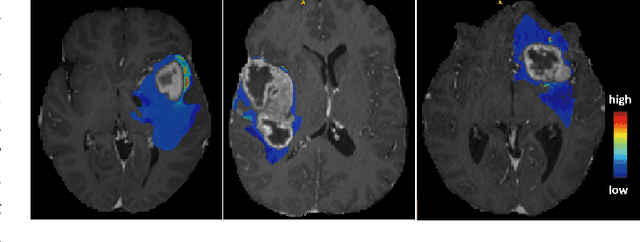

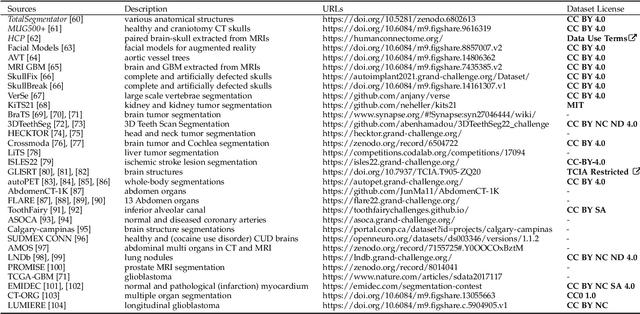

MedShapeNet -- A Large-Scale Dataset of 3D Medical Shapes for Computer Vision

Sep 12, 2023

Abstract:We present MedShapeNet, a large collection of anatomical shapes (e.g., bones, organs, vessels) and 3D surgical instrument models. Prior to the deep learning era, the broad application of statistical shape models (SSMs) in medical image analysis is evidence that shapes have been commonly used to describe medical data. Nowadays, however, state-of-the-art (SOTA) deep learning algorithms in medical imaging are predominantly voxel-based. In computer vision, by contrast, shapes (including voxel occupancy grids, meshes, point clouds and implicit surface models) are preferred data representations in 3D, as seen from the numerous shape-related publications in premier vision conferences, such as the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), as well as the increasing popularity of ShapeNet (about 51,300 models) and Princeton ModelNet (127,915 models) in computer vision research. MedShapeNet is created as an alternative to these commonly used shape benchmarks to facilitate the translation of data-driven vision algorithms to medical applications, and it extends the opportunities to adapt SOTA vision algorithms to solve critical medical problems. Moreover, the majority of the medical shapes in MedShapeNet are modeled directly on the imaging data of real patients, and therefore it complements existing shape benchmarks comprising computer-aided design (CAD) models. MedShapeNet currently includes more than 100,000 medical shapes, and provides annotations in the form of paired data. It is therefore also a freely available repository of 3D models for extended reality (virtual reality - VR, augmented reality - AR, mixed reality - MR) and medical 3D printing. This white paper describes in detail the motivations behind MedShapeNet, the shape acquisition procedures, the use cases, as well as the usage of the online shape search portal: https://medshapenet.ikim.nrw/

An evaluation of time series forecasting models on water consumption data: A case study of Greece

Mar 30, 2023

Abstract:In recent years, increased urbanization and industrialization have led to rising demand for water and water resources, thus increasing the gap between demand and supply. Proper water distribution and forecasting of water consumption are key factors in mitigating the imbalance of supply and demand by improving operations, planning and management of water resources. To this end, in this paper, several well-known forecasting algorithms are evaluated over time-series water consumption data from Greece, a country with diverse socio-economic and urbanization issues. The forecasting algorithms are evaluated on a real-world dataset provided by the Water Supply and Sewerage Company of Greece, revealing key insights about each algorithm and its use.

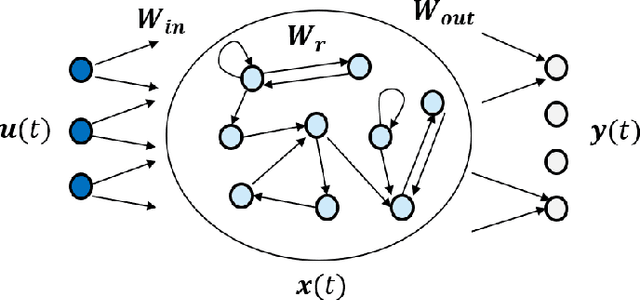

Intelligent Proactive Fault Tolerance at the Edge through Resource Usage Prediction

Feb 09, 2023

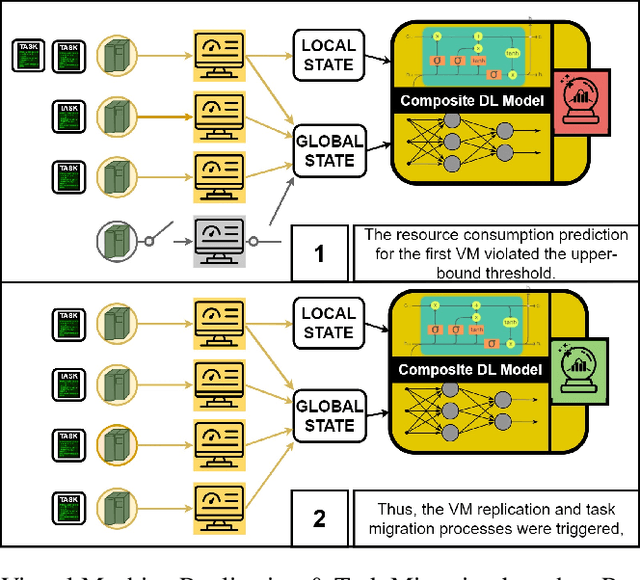

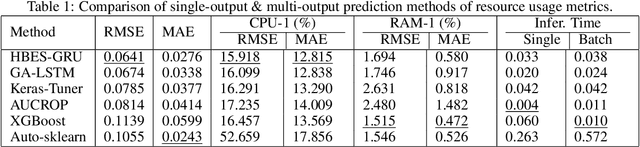

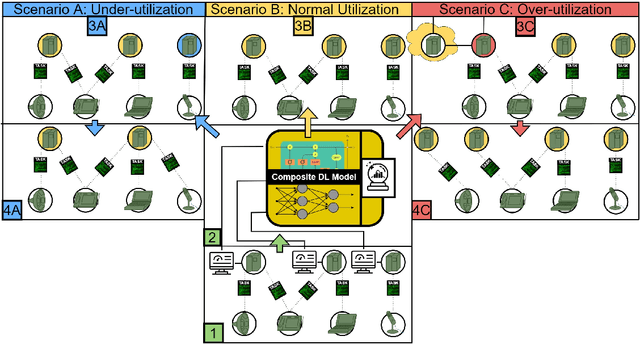

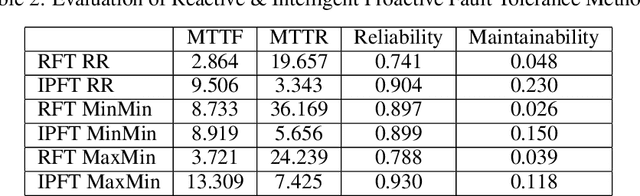

Abstract:The proliferation of demanding applications and edge computing establishes the need for efficient management of the underlying computing infrastructures, urging the providers to rethink their operational methods. In this paper, we propose an Intelligent Proactive Fault Tolerance (IPFT) method that leverages edge resource usage predictions through Recurrent Neural Networks (RNN). More specifically, we focus on process-faults, which are related to the inability of the infrastructure to provide Quality of Service (QoS) in acceptable ranges due to the lack of processing power. In order to tackle this challenge, we propose a composite deep learning architecture that predicts the resource usage metrics of the edge nodes and triggers proactive node replications and task migration. Considering that the edge computing infrastructure is also highly dynamic and heterogeneous, we propose an innovative Hybrid Bayesian Evolution Strategy (HBES) algorithm for automated adaptation of the resource usage models. The proposed resource usage prediction mechanism has been experimentally evaluated and compared with other state-of-the-art methods, with significant improvements in terms of Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE). Additionally, the IPFT mechanism that leverages the resource usage predictions has been evaluated in an extensive simulation in CloudSim Plus, and the results show significant improvement compared to the reactive fault tolerance method in terms of reliability and maintainability.

* Also available in ITU's Journal on Future and Evolving Technologies

Partially Oblivious Neural Network Inference

Oct 27, 2022

Abstract:Oblivious inference is the task of outsourcing an ML model, such as a neural network, without disclosing critical and sensitive information, such as the model's parameters. One of the most prominent solutions for secure oblivious inference is based on powerful cryptographic tools, such as Homomorphic Encryption (HE) and/or multi-party computation (MPC). Even though the implementation of oblivious inference schemes has improved impressively over the last decade, there are still significant limitations on the ML models that they can practically implement, especially when the confidentiality of both the ML model and the input data must be protected. In this paper, we introduce the notion of partially oblivious inference. We empirically show that for neural network models, such as CNNs, some information leakage can be acceptable. We therefore propose a novel trade-off between security and efficiency. In our research, we investigate the impact of partially leaking the CNN model's weights on security and inference runtime performance. We experimentally demonstrate that in a CIFAR-10 network we can leak up to $80\%$ of the model's weights with practically no security impact, while the necessary HE-multiplications are performed four times faster.

TraClets: Harnessing the power of computer vision for trajectory classification

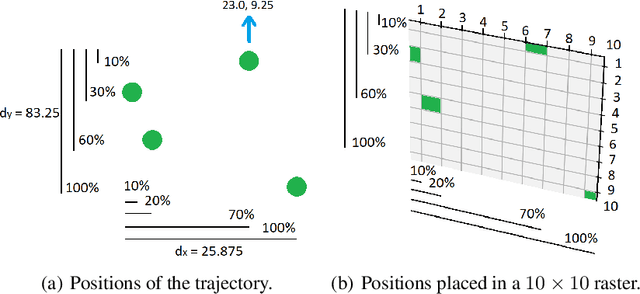

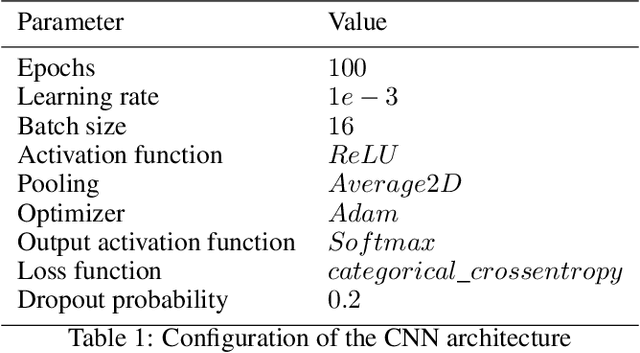

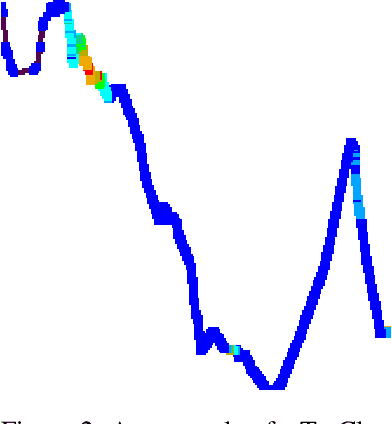

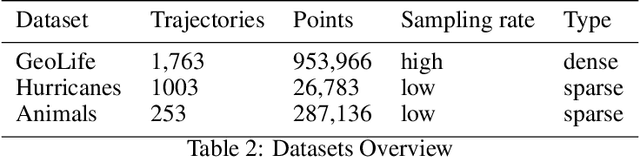

May 30, 2022

Abstract:Due to the advent of new mobile devices and tracking sensors in recent years, huge amounts of data are being produced every day. Therefore, novel methodologies need to emerge that dive through this vast sea of information and generate insights and meaningful information. To this end, researchers have developed several trajectory classification algorithms over the years that are able to annotate tracking data. Similarly, in this research, a novel methodology is presented that exploits image representations of trajectories, called TraClets, in order to classify trajectories in an intuitive, human-like way, through computer vision techniques. Several real-world datasets are used to evaluate the proposed approach and compare its classification performance to other state-of-the-art trajectory classification algorithms. Experimental results demonstrate that TraClets achieves a classification performance that is comparable to, or in most cases better than, the state-of-the-art, acting as a universal, high-accuracy approach for trajectory classification.

AI-as-a-Service Toolkit for Human-Centered Intelligence in Autonomous Driving

Feb 09, 2022

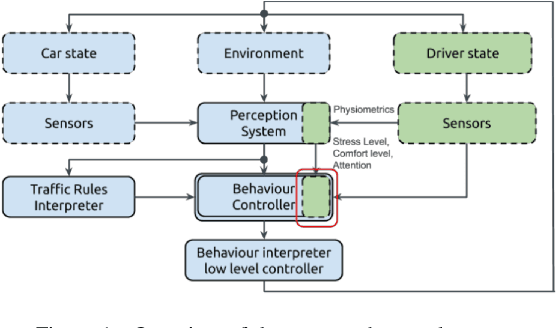

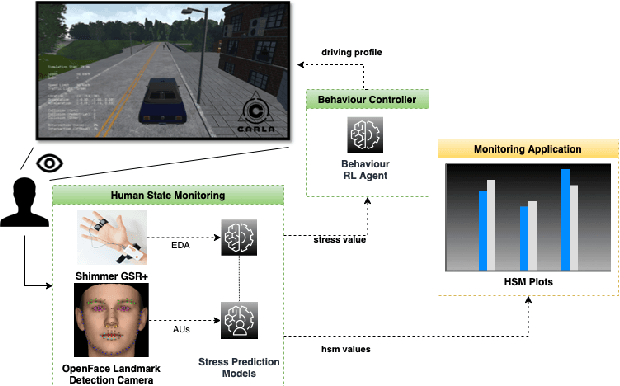

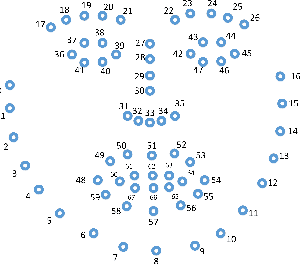

Abstract:This paper presents a proof-of-concept implementation of the AI-as-a-Service toolkit developed within the H2020 TEACHING project and designed to implement an autonomous driving personalization system according to the output of an automatic driver's stress recognition algorithm, both of them realizing a Cyber-Physical System of Systems. In addition, we implemented a data-gathering subsystem to collect data from different sensors, i.e., wearables and cameras, to automate stress recognition. The system was attached for testing to the driving simulation software CARLA, which allows testing the approach's feasibility with minimum cost and without putting drivers and passengers at risk. At the core of the respective subsystems, different learning algorithms were implemented using Deep Neural Networks, Recurrent Neural Networks, and Reinforcement Learning.

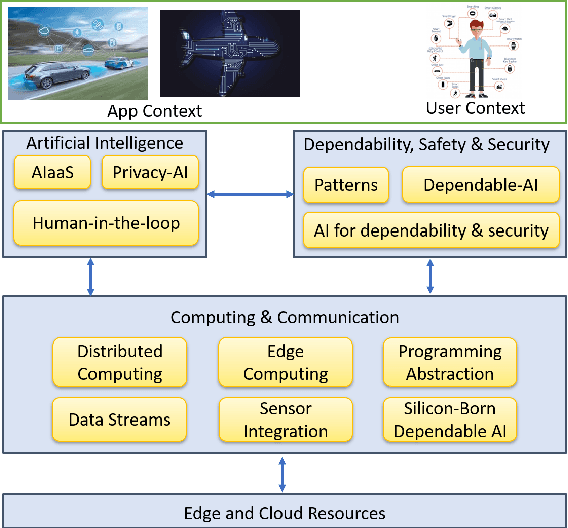

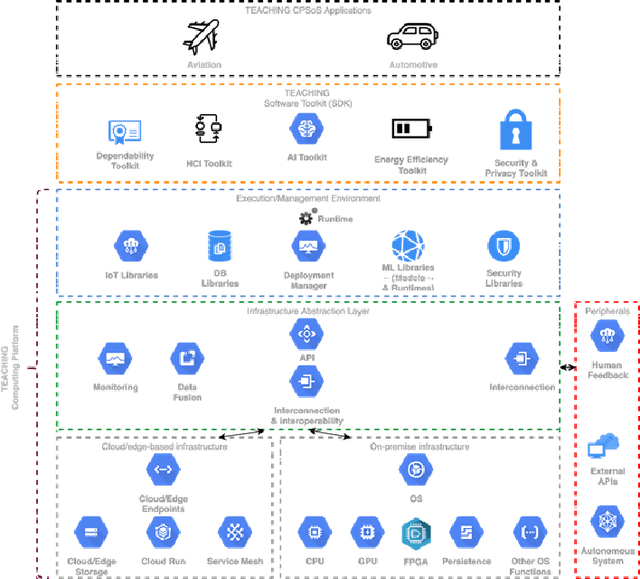

TEACHING -- Trustworthy autonomous cyber-physical applications through human-centred intelligence

Jul 14, 2021

Abstract:This paper discusses the perspective of the H2020 TEACHING project on the next generation of autonomous applications running in a distributed and highly heterogeneous environment comprising both virtual and physical resources spanning the edge-cloud continuum. TEACHING puts forward a human-centred vision leveraging the physiological, emotional, and cognitive state of the users as a driver for the adaptation and optimization of the autonomous applications. It does so by building a distributed, embedded and federated learning system complemented by methods and tools to enforce its dependability, security and privacy preservation. The paper discusses the main concepts of the TEACHING approach and singles out the main AI-related research challenges associated with it. Further, we provide a discussion of the design choices for the TEACHING system to tackle the aforementioned challenges.
