Enrico Nasca

Institute for Artificial Intelligence in Medicine, University Hospital Essen, Essen, Germany, Cancer Research Center Cologne Essen

Sliding Window FastEdit: A Framework for Lesion Annotation in Whole-body PET Images

Nov 24, 2023

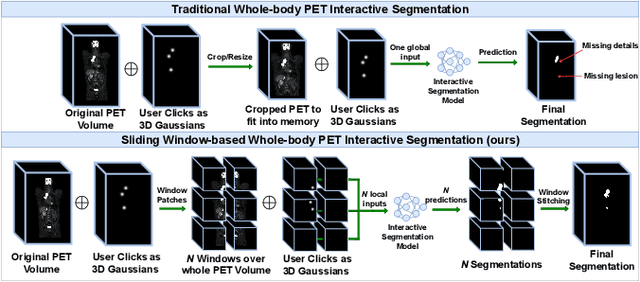

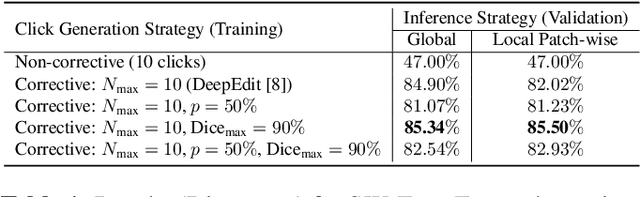

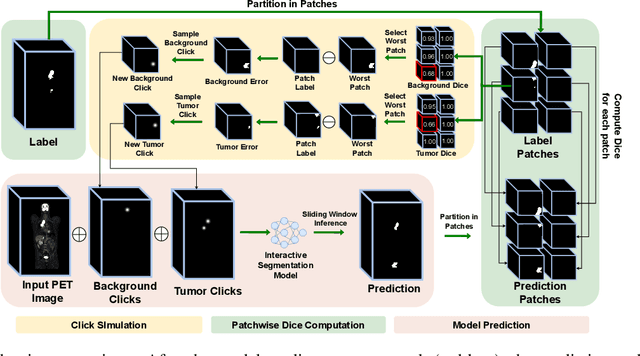

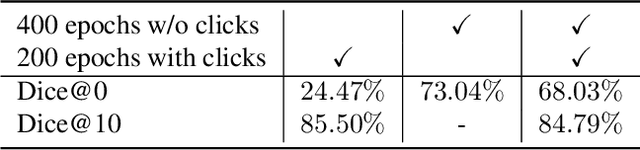

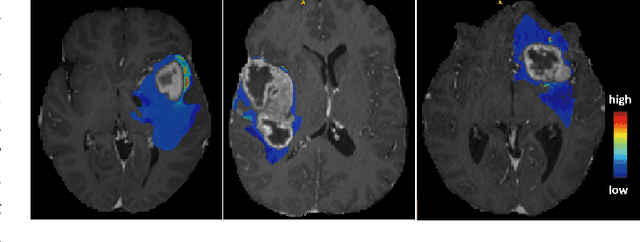

Abstract: Deep learning has revolutionized the accurate segmentation of diseases in medical imaging. However, achieving such results requires training with numerous manual voxel annotations. This requirement presents a challenge for whole-body Positron Emission Tomography (PET) imaging, where lesions are scattered throughout the body. To tackle this problem, we introduce SW-FastEdit, an interactive segmentation framework that accelerates labeling by requiring only a few user clicks instead of voxelwise annotations. While prior interactive models crop or resize PET volumes due to memory constraints, we use the complete volume with our sliding window-based interactive scheme. Our model outperforms existing non-sliding window interactive models on the AutoPET dataset and generalizes to the previously unseen HECKTOR dataset. A user study revealed that annotators achieve high-quality predictions with only 10 click iterations and a low perceived NASA-TLX workload. Our framework is implemented using MONAI Label and is available at https://github.com/matt3o/AutoPET2-Submission/

MedShapeNet -- A Large-Scale Dataset of 3D Medical Shapes for Computer Vision

Sep 12, 2023

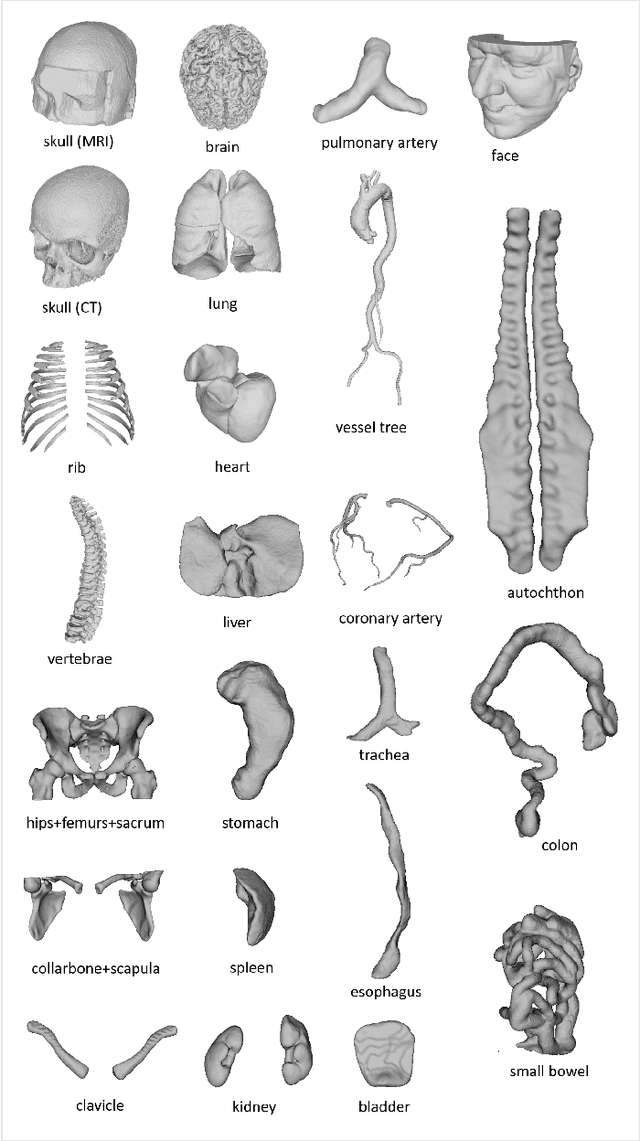

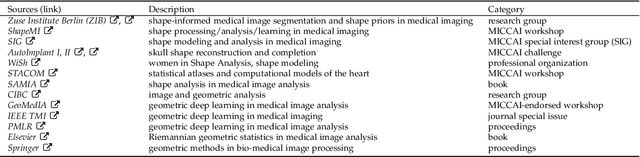

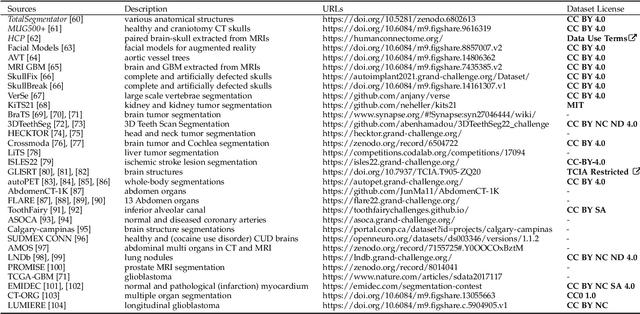

Abstract: We present MedShapeNet, a large collection of anatomical shapes (e.g., bones, organs, vessels) and 3D surgical instrument models. Prior to the deep learning era, the broad application of statistical shape models (SSMs) in medical image analysis was evidence that shapes were commonly used to describe medical data. Nowadays, however, state-of-the-art (SOTA) deep learning algorithms in medical imaging are predominantly voxel-based. In computer vision, by contrast, shapes (including voxel occupancy grids, meshes, point clouds and implicit surface models) are the preferred data representations in 3D, as seen from the numerous shape-related publications in premier vision conferences, such as the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), as well as the increasing popularity of ShapeNet (about 51,300 models) and Princeton ModelNet (127,915 models) in computer vision research. MedShapeNet is created as an alternative to these commonly used shape benchmarks to facilitate the translation of data-driven vision algorithms to medical applications, and it extends the opportunities to adapt SOTA vision algorithms to solve critical medical problems. In addition, the majority of the medical shapes in MedShapeNet are modeled directly on the imaging data of real patients, so it complements existing shape benchmarks consisting of computer-aided design (CAD) models. MedShapeNet currently includes more than 100,000 medical shapes and provides annotations in the form of paired data. It is therefore also a freely available repository of 3D models for extended reality (virtual reality - VR, augmented reality - AR, mixed reality - MR) and medical 3D printing. This white paper describes in detail the motivations behind MedShapeNet, the shape acquisition procedures, the use cases, and the usage of the online shape search portal: https://medshapenet.ikim.nrw/

Why does my medical AI look at pictures of birds? Exploring the efficacy of transfer learning across domain boundaries

Jun 30, 2023

Abstract: It is an open secret that ImageNet is treated as the panacea of pretraining. Particularly in medical machine learning, models not trained from scratch are often finetuned based on ImageNet-pretrained models. We posit that pretraining on data from the domain of the downstream task should almost always be preferred instead. We leverage RadNet-12M, a dataset containing more than 12 million computed tomography (CT) image slices, to explore the efficacy of self-supervised pretraining on medical and natural images. Our experiments cover intra- and cross-domain transfer scenarios, varying data scales, finetuning vs. linear evaluation, and feature space analysis. We observe that intra-domain transfer compares favorably to cross-domain transfer, achieving comparable or improved performance (0.44% - 2.07% performance increase using RadNet pretraining, depending on the experiment) and demonstrate the existence of a domain boundary-related generalization gap and domain-specific learned features.

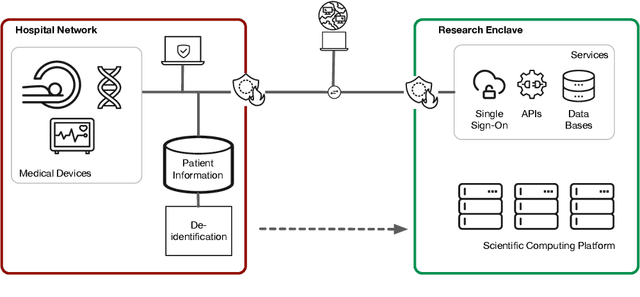

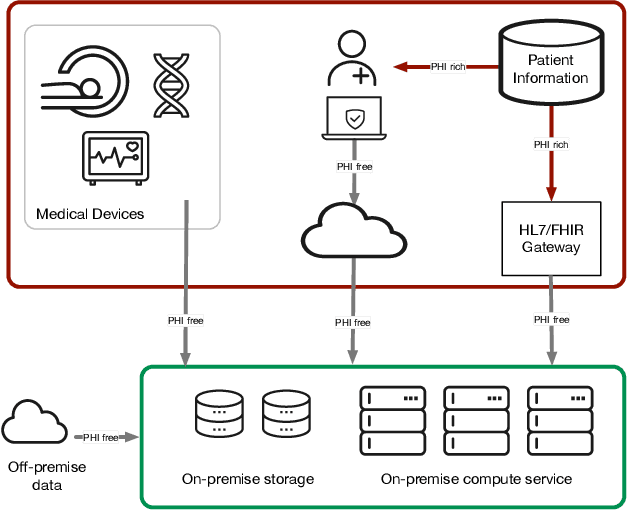

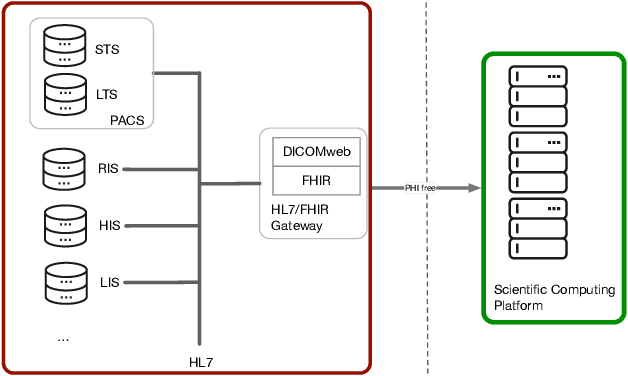

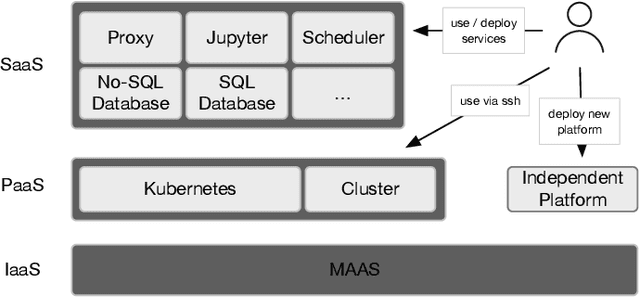

Towards a trustworthy, secure and reliable enclave for machine learning in a hospital setting: The Essen Medical Computing Platform (EMCP)

Jan 13, 2022

Abstract: AI/Computing at scale is a difficult problem, especially in a health care setting. We outline the requirements, planning and implementation choices as well as the guiding principles that led to the implementation of our secure research computing enclave, the Essen Medical Computing Platform (EMCP), affiliated with a major German hospital. Compliance, data privacy and usability were the immutable requirements of the system. We will discuss the features of our computing enclave and we will provide our recipe for groups wishing to adopt a similar setup.
