Kay Hansel

Global Tensor Motion Planning

Nov 28, 2024

Abstract: Batch planning is increasingly crucial for the scalability of robotics tasks and the diversity of generated datasets. This paper presents Global Tensor Motion Planning (GTMP) -- a sampling-based motion planning algorithm comprising only tensor operations. We introduce a novel discretization structure represented as a random multipartite graph, enabling efficient vectorized sampling, collision checking, and search. We provide an early theoretical investigation showing that GTMP exhibits probabilistic completeness while supporting modern GPUs/TPUs. Additionally, by incorporating smooth structures into the multipartite graph, GTMP directly plans smooth splines without requiring gradient-based optimization. Experiments on lidar-scanned occupancy maps and the MotionBenchMarker dataset demonstrate GTMP's computational efficiency in batch planning compared to baselines, underscoring GTMP's potential as a robust, scalable planner for diverse applications and large-scale robot learning tasks.
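To make the idea of a random multipartite graph searched with tensor operations more concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the function name `plan_layered`, the 2D unit-square workspace, the circular obstacles, the interpolation-based edge check, and the backward dynamic-programming search are all assumptions chosen for illustration. Each layer holds uniformly sampled points, consecutive layers are fully connected, and collision checking and search are done on whole arrays at once.

```python
import numpy as np

def plan_layered(start, goal, obstacles, n_layers=8, n_points=64, n_interp=16, seed=0):
    """Illustrative layered-graph planner in the unit square (hypothetical helper).

    obstacles: array of shape (K, 3) holding circles as (cx, cy, radius).
    Returns (path, cost), or (None, inf) if no collision-free path is found.
    """
    rng = np.random.default_rng(seed)
    dim = start.shape[0]
    # One batch of random samples per layer: shape (n_layers, n_points, dim).
    layers = rng.uniform(0.0, 1.0, size=(n_layers, n_points, dim))
    nodes = [start[None, :]] + [layers[m] for m in range(n_layers)] + [goal[None, :]]

    def edge_costs(a, b):
        # Interpolate every edge between layer a (Na, dim) and layer b (Nb, dim).
        t = np.linspace(0.0, 1.0, n_interp)[None, None, :, None]
        pts = a[:, None, None, :] * (1.0 - t) + b[None, :, None, :] * t  # (Na, Nb, T, dim)
        dist = np.linalg.norm(pts[..., None, :] - obstacles[:, :2], axis=-1)  # (Na, Nb, T, K)
        hit = (dist <= obstacles[:, 2]).any(axis=(-1, -2))  # (Na, Nb)
        length = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
        # Colliding edges get infinite cost so the search never selects them.
        return np.where(hit, np.inf, length)

    # Backward pass: cost-to-go of every node, one layer at a time.
    value = np.zeros(1)
    best_next = []
    for m in range(len(nodes) - 2, -1, -1):
        cost = edge_costs(nodes[m], nodes[m + 1]) + value[None, :]
        best_next.append(cost.argmin(axis=1))
        value = cost.min(axis=1)
    best_next.reverse()

    if not np.isfinite(value[0]):
        return None, np.inf

    # Forward pass: follow the stored best successors from the start node.
    path, idx = [start], 0
    for m, nxt in enumerate(best_next):
        idx = nxt[idx]
        path.append(nodes[m + 1][idx])
    return np.stack(path), float(value[0])

start = np.array([0.05, 0.05])
goal = np.array([0.95, 0.95])
obstacles = np.array([[0.5, 0.5, 0.2]])  # a single circular obstacle (assumed scene)
path, cost = plan_layered(start, goal, obstacles)
```

Because every step is an array operation, the same structure extends to a batch of start/goal pairs by adding a leading batch axis. The edge check is only as fine as `n_interp`, so thin obstacles would need denser interpolation.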

Machine Learning with Physics Knowledge for Prediction: A Survey

Aug 19, 2024

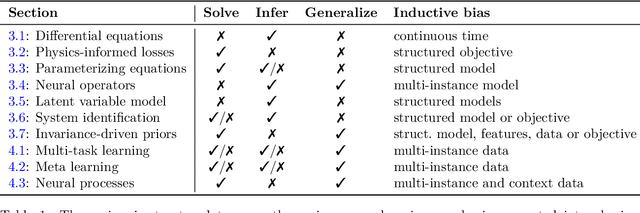

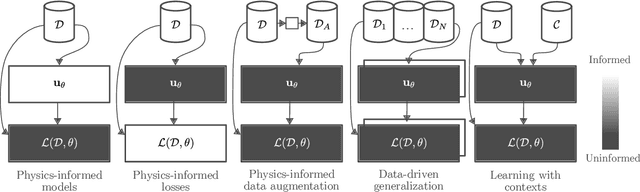

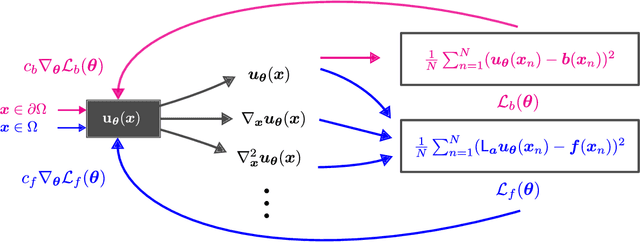

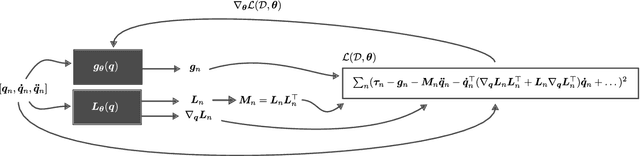

Abstract: This survey examines the broad suite of methods and models for combining machine learning with physics knowledge for prediction and forecasting, with a focus on partial differential equations. These methods have attracted significant interest due to their potential impact on advancing scientific research and industrial practices by improving predictive models with small- or large-scale datasets and expressive predictive models with useful inductive biases. The survey has two parts. The first considers incorporating physics knowledge on an architectural level through objective functions, structured predictive models, and data augmentation. The second considers data as physics knowledge, which motivates looking at multi-task, meta, and contextual learning as an alternative approach to incorporating physics knowledge in a data-driven fashion. Finally, we also provide an industrial perspective on the application of these methods and a survey of the open-source ecosystem for physics-informed machine learning.

Integrating Visuo-tactile Sensing with Haptic Feedback for Teleoperated Robot Manipulation

Apr 30, 2024

Abstract: Telerobotics enables humans to overcome spatial constraints and allows them to physically interact with the environment in remote locations. However, the sensory feedback provided by the system to the operator is often purely visual, limiting the operator's dexterity in manipulation tasks. In this work, we address this issue by equipping the robot's end-effector with high-resolution visuotactile GelSight sensors. Using low-cost MANUS-Gloves, we provide the operator with haptic feedback about forces acting at the points of contact in the form of vibration signals. We propose two different methods for estimating these forces: one based on estimating the movement of markers on the sensor surface and one based on deep learning. Additionally, we integrate our system into a virtual-reality teleoperation pipeline in which a human operator controls both arms of a Tiago robot while receiving visual and haptic feedback. We believe that integrating haptic feedback is a crucial step for dexterous manipulation in teleoperated robotic systems.

Human Preferences and Robot Constraints Aware Shared Control for Smooth Follower Motion Execution

Jul 31, 2023

Abstract: With the continuous advancement of robot teleoperation technology, shared control is used to reduce the physical and mental load of the operator in teleoperation systems. This paper proposes an alternating shared control framework for object grasping that considers both the operator's preferences, expressed through their manual manipulation, and the constraints of the follower robot. Switching between manual mode and automatic mode enables the operator to intervene in the task according to their wishes. The generation of the grasping pose takes into account the current state of the operator's hand pose, as well as the manipulability of the robot. The object grasping experiment indicates that the proposed grasping pose selection strategy leads to smoother follower movements when switching from manual mode to automatic mode.

Visual Tactile Sensor Based Force Estimation for Position-Force Teleoperation

Dec 26, 2022

Abstract: Vision-based tactile sensors have gained extensive attention in the robotics community. These sensors are expected to extract contact information, i.e., haptic information, during in-hand manipulation. This property makes them a good match for haptic feedback applications. In this paper, we propose a contact force estimation method using the vision-based tactile sensor DIGIT, and apply it to a position-force teleoperation architecture for force feedback. The force estimation is done by building a depth map to measure the deformation of the DIGIT gel surface and applying a regression algorithm to the estimated depth data and ground truth force data to obtain the depth-force relationship. The experiment is performed by constructing a grasping force feedback system with a haptic device as a leader robot and a parallel robot gripper as a follower robot, where the DIGIT sensor is attached to the tip of the robot gripper to estimate the contact force. The preliminary results show that the low-cost vision-based sensor can be used for force feedback applications.
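The depth-to-force regression step can be illustrated with a small sketch. The abstract does not say which regression algorithm or depth features are used, so everything here is an assumption: a single scalar depth feature per sample (for instance a mean indentation depth), a quadratic polynomial fit, and purely synthetic data generated for the example. The helper name `fit_depth_to_force` is hypothetical.

```python
import numpy as np

def fit_depth_to_force(depth_feature, force, degree=2):
    """Least-squares polynomial fit from a scalar depth feature to force.

    The polynomial model is an assumed stand-in for the unspecified
    regression algorithm; it returns a callable np.poly1d.
    """
    coeffs = np.polyfit(depth_feature, force, deg=degree)
    return np.poly1d(coeffs)

# Synthetic calibration data (NOT measurements from the paper):
# a made-up quadratic depth-force law plus small Gaussian noise.
rng = np.random.default_rng(0)
depth_mm = rng.uniform(0.0, 1.5, size=200)
force_n = 2.0 * depth_mm + 0.5 * depth_mm**2 + rng.normal(0.0, 0.05, size=200)

model = fit_depth_to_force(depth_mm, force_n)
predicted_force = model(1.0)  # force estimate for a 1.0 mm depth feature
```

In a real setup the depth feature would come from the sensor's reconstructed depth map and the force labels from a reference force sensor; the fitted model would then be evaluated online to drive the haptic device.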

Hierarchical Policy Blending As Optimal Transport

Dec 04, 2022

Abstract: We present hierarchical policy blending as optimal transport (HiPBOT). This hierarchical framework adapts the weights of low-level reactive expert policies, adding a look-ahead planning layer on the parameter space of a product of expert policies and agents. Our high-level planner realizes a policy blending via unbalanced optimal transport, consolidating the scaling of underlying Riemannian motion policies, effectively adjusting their Riemannian matrix, and deciding over the priorities between experts and agents, guaranteeing safety and task success. Our experimental results in a range of application scenarios from low-dimensional navigation to high-dimensional whole-body control showcase the efficacy and efficiency of HiPBOT, which outperforms state-of-the-art baselines that either perform probabilistic inference or define a tree structure of experts, paving the way for new applications of optimal transport to robot control. More material at https://sites.google.com/view/hipobot

Hierarchical Policy Blending as Inference for Reactive Robot Control

Oct 14, 2022

Abstract: Motion generation in cluttered, dense, and dynamic environments is a central topic in robotics, rendered as a multi-objective decision-making problem. Current approaches trade off safety against performance. On the one hand, reactive policies guarantee fast response to environmental changes at the risk of suboptimal behavior. On the other hand, planning-based motion generation provides feasible trajectories, but the high computational cost may limit the control frequency and thus safety. To combine the benefits of reactive policies and planning, we propose a hierarchical motion generation method. Moreover, we adopt probabilistic inference methods to formalize the hierarchical model and stochastic optimization. We realize this approach as a weighted product of stochastic, reactive expert policies, where planning is used to adaptively compute the optimal weights over the task horizon. This stochastic optimization avoids local optima and proposes feasible reactive plans that find paths in cluttered and dense environments. Our extensive experimental study in planar navigation and 6DoF manipulation shows that our proposed hierarchical motion generation method outperforms both myopic reactive controllers and online re-planning methods.
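A weighted product of expert policies has a simple closed form when each expert is Gaussian, which the sketch below illustrates. The Gaussian form is an assumption made for this example (the abstract only says the experts are stochastic and reactive), the function name `blend_gaussian_experts` is hypothetical, and the weights are set by hand here rather than computed by a planner. For experts N(mu_i, Sigma_i) raised to weights w_i, the product is Gaussian with precision sum_i w_i Sigma_i^{-1} and mean equal to the precision-weighted average of the expert means.

```python
import numpy as np

def blend_gaussian_experts(means, covs, weights):
    """Weighted product of Gaussian experts (illustrative assumption).

    means: (E, d), covs: (E, d, d), weights: (E,) non-negative, not all zero.
    Returns the mean and covariance of the blended Gaussian policy.
    """
    means = np.asarray(means, dtype=float)
    covs = np.asarray(covs, dtype=float)
    weights = np.asarray(weights, dtype=float)
    precisions = np.linalg.inv(covs)  # batched inverse, shape (E, d, d)
    # Blended precision: sum_e w_e * Sigma_e^{-1}
    blended_precision = np.einsum("e,eij->ij", weights, precisions)
    # Information vector: sum_e w_e * Sigma_e^{-1} mu_e
    info = np.einsum("e,eij,ej->i", weights, precisions, means)
    blended_cov = np.linalg.inv(blended_precision)
    return blended_cov @ info, blended_cov

# Two hypothetical experts proposing different 2D actions,
# e.g. goal reaching and obstacle avoidance.
means = [[1.0, 0.0], [0.0, 1.0]]
covs = [np.eye(2), np.eye(2)]
mean_equal, cov_equal = blend_gaussian_experts(means, covs, weights=[1.0, 1.0])
mean_skewed, cov_skewed = blend_gaussian_experts(means, covs, weights=[3.0, 1.0])
```

Raising the weight of one expert pulls the blended action toward that expert's mean and tightens the blended covariance, which is why adapting the weights over a planning horizon changes the resulting reactive behavior.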
