Kalyanmoy Deb

BEACON Center, Michigan State University

A Novel Pareto-optimal Ranking Method for Comparing Multi-objective Optimization Algorithms

Nov 27, 2024

Abstract: As interest in multi- and many-objective optimization algorithms grows, comparing the performance of these algorithms becomes increasingly important. A large number of performance indicators for multi-objective optimization algorithms have been introduced, each of which evaluates these algorithms on a particular aspect. Therefore, assessing the quality of multi-objective results using multiple indicators is essential to ensure that the evaluation considers all quality perspectives. This paper proposes a novel multi-metric comparison method to rank the performance of multi-/many-objective optimization algorithms based on a set of performance indicators. We utilize the Pareto optimality concept (i.e., the non-dominated sorting algorithm) to create the rank levels of algorithms by simultaneously considering multiple performance indicators as criteria/objectives. Four different techniques are then proposed to rank algorithms based on their contribution at each Pareto level. This method allows researchers to use a set of existing or newly developed performance metrics to adequately assess and rank multi-/many-objective algorithms. The proposed methods are scalable and can accommodate any newly introduced metric. The method was applied to rank 10 competing algorithms in the 2018 CEC competition, which involved solving 15 many-objective test problems. The Pareto-optimal ranking was conducted based on 10 well-known multi-objective performance indicators, and the results were compared to the final ranks reported by the competition, which were based on the inverted generational distance (IGD) and hypervolume indicator (HV) measures. The techniques suggested in this paper have broad applications in science and engineering, particularly in areas where multiple metrics are used for comparisons, such as machine learning and data mining.
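The rank-level step described in this abstract can be illustrated with a minimal sketch of non-dominated sorting applied to algorithms rather than solutions. It assumes every indicator has been converted so that lower is better (for example, HV is negated). The algorithm names and scores are made up for illustration, and the four ranking techniques proposed in the paper are not reproduced here.

```python
from typing import Dict, List


def dominates(a: List[float], b: List[float]) -> bool:
    """Return True if a is no worse than b on every indicator and
    strictly better on at least one (lower values are better)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_levels(scores: Dict[str, List[float]]) -> List[List[str]]:
    """Peel off successive non-dominated fronts of algorithms.

    scores maps an algorithm name to its indicator vector.
    Level 1 holds algorithms that no other algorithm dominates,
    level 2 holds those dominated only by level 1, and so on.
    """
    remaining = dict(scores)
    levels: List[List[str]] = []
    while remaining:
        front = sorted(
            name
            for name, s in remaining.items()
            if not any(
                dominates(t, s) for other, t in remaining.items() if other != name
            )
        )
        levels.append(front)
        for name in front:
            del remaining[name]
    return levels


# Hypothetical indicator vectors: [IGD, -HV], both to be minimized.
scores = {
    "A": [0.10, -0.90],
    "B": [0.20, -0.95],
    "C": [0.15, -0.85],
    "D": [0.30, -0.70],
}
levels = pareto_levels(scores)
print(levels)
```

In this toy data, A and B trade off against each other and share the first level, while C and D fall to later levels. Adding a new indicator only lengthens each vector, which is one way to read the abstract's claim that the scheme accommodates newly introduced metrics.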

GNBG-Generated Test Suite for Box-Constrained Numerical Global Optimization

Dec 12, 2023

Abstract: This document introduces a set of 24 box-constrained numerical global optimization problem instances, systematically constructed using the Generalized Numerical Benchmark Generator (GNBG). These instances cover a broad spectrum of problem features, including varying degrees of modality, ruggedness, symmetry, conditioning, variable interaction structures, basin linearity, and deceptiveness. Purposefully designed, this test suite offers varying difficulty levels and problem characteristics, facilitating rigorous evaluation and comparative analysis of optimization algorithms. By presenting these problems, we aim to provide researchers with a structured platform to assess the strengths and weaknesses of their algorithms against challenges with known, controlled characteristics. For reproducibility, the MATLAB source code for this test suite is publicly available.

GNBG: A Generalized and Configurable Benchmark Generator for Continuous Numerical Optimization

Dec 12, 2023

Abstract: As optimization challenges continue to evolve, so too must our tools and understanding. To effectively assess, validate, and compare optimization algorithms, it is crucial to use a benchmark test suite that encompasses a diverse range of problem instances with various characteristics. Traditional benchmark suites often consist of numerous fixed test functions, making it challenging to align these with specific research objectives, such as the systematic evaluation of algorithms under controllable conditions. This paper introduces the Generalized Numerical Benchmark Generator (GNBG) for single-objective, box-constrained, continuous numerical optimization. Unlike existing approaches that rely on multiple baseline functions and transformations, GNBG utilizes a single, parametric, and configurable baseline function. This design allows for control over various problem characteristics. Researchers using GNBG can generate instances that cover a broad array of morphological features, from unimodal to highly multimodal functions, various local optima patterns, and symmetric to highly asymmetric structures. The generated problems can also vary in separability, variable interaction structures, dimensionality, conditioning, and basin shapes. These customizable features enable the systematic evaluation and comparison of optimization algorithms, allowing researchers to probe their strengths and weaknesses under diverse and controllable conditions.

Discovering Adaptable Symbolic Algorithms from Scratch

Jul 31, 2023

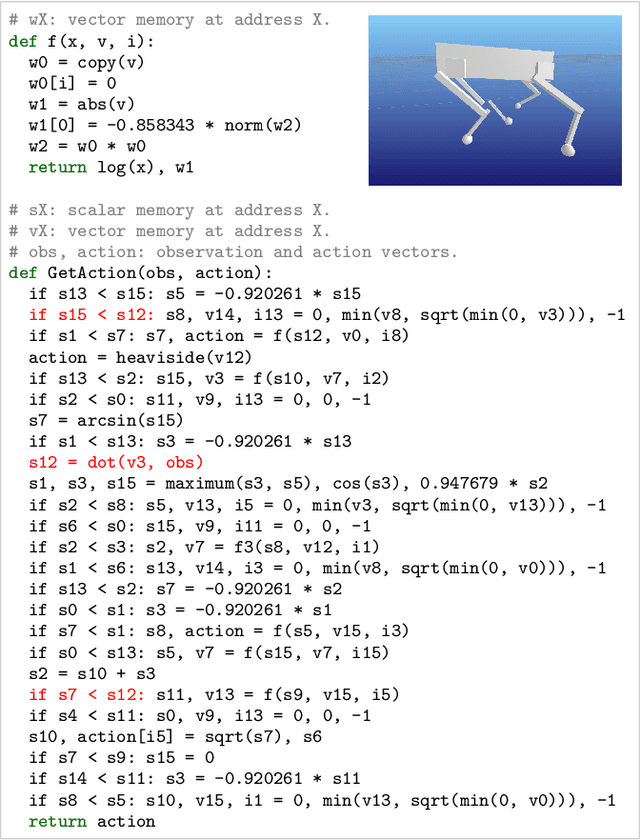

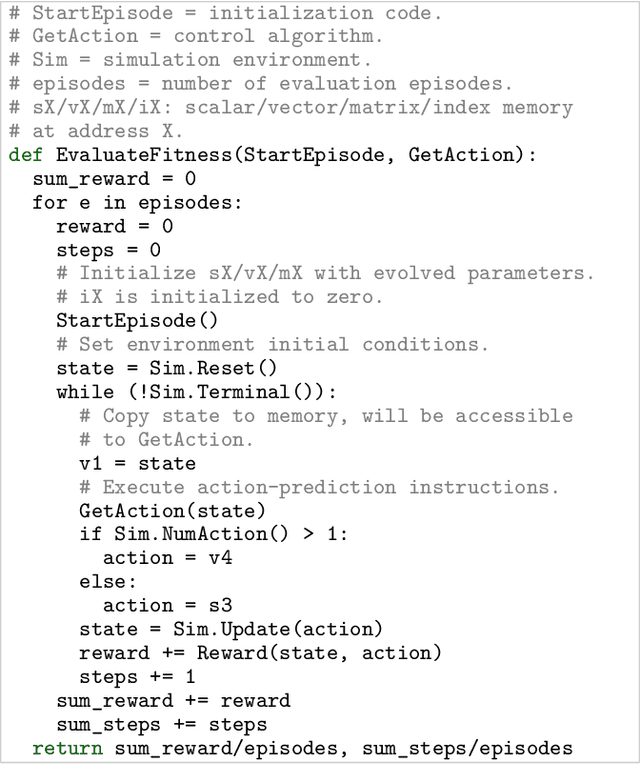

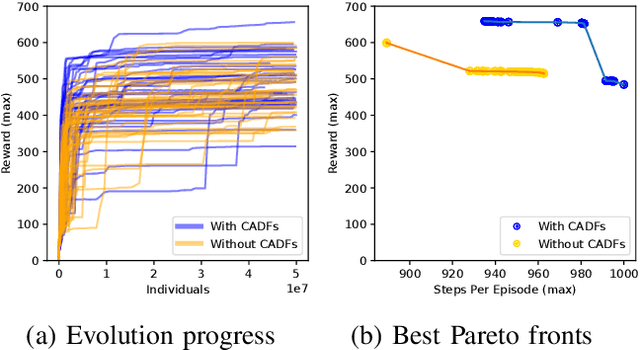

Abstract: Autonomous robots deployed in the real world will need control policies that rapidly adapt to environmental changes. To this end, we propose AutoRobotics-Zero (ARZ), a method based on AutoML-Zero that discovers zero-shot adaptable policies from scratch. In contrast to neural network adaptation policies, where only model parameters are optimized, ARZ can build control algorithms with the full expressive power of a linear register machine. We evolve modular policies that tune their model parameters and alter their inference algorithm on-the-fly to adapt to sudden environmental changes. We demonstrate our method on a realistic simulated quadruped robot, for which we evolve safe control policies that avoid falling when individual limbs suddenly break. This is a challenging task in which two popular neural network baselines fail. Finally, we conduct a detailed analysis of our method on a novel and challenging non-stationary control task dubbed Cataclysmic Cartpole. Results confirm our findings that ARZ is significantly more robust to sudden environmental changes and can build simple, interpretable control policies.

Revisiting Residual Networks for Adversarial Robustness: An Architectural Perspective

Dec 21, 2022

Abstract: Efforts to improve the adversarial robustness of convolutional neural networks have primarily focused on developing more effective adversarial training methods. In contrast, little attention has been devoted to analyzing the role of architectural elements (such as topology, depth, and width) in adversarial robustness. This paper seeks to bridge this gap and presents a holistic study on the impact of architectural design on adversarial robustness. We focus on residual networks and consider architecture design at the block level, i.e., topology, kernel size, activation, and normalization, as well as at the network scaling level, i.e., depth and width of each block in the network. In both cases, we first derive insights through systematic ablative experiments. Then we design a robust residual block, dubbed RobustResBlock, and a compound scaling rule, dubbed RobustScaling, to distribute depth and width at the desired FLOP count. Finally, we combine RobustResBlock and RobustScaling and present a portfolio of adversarially robust residual networks, RobustResNets, spanning a broad spectrum of model capacities. Experimental validation across multiple datasets and adversarial attacks demonstrates that RobustResNets consistently outperform both the standard WRNs and other existing robust architectures, achieving state-of-the-art AutoAttack robust accuracy of 61.1% without additional data and 63.7% with 500K external data while being 2× more compact in terms of parameters. Code is available at https://github.com/zhichao-lu/robust-residual-network

A Survey on Evaluation Metrics for Synthetic Material Micro-Structure Images from Generative Models

Nov 03, 2022

Abstract: The evaluation of synthetic micro-structure images is an emerging problem as machine learning and materials science research have evolved together. Typical state-of-the-art methods for evaluating synthetic images from generative models have relied on the Fréchet Inception Distance. However, this and other similar methods are limited in the materials domain due to both the unique features that characterize physically accurate micro-structures and limited dataset sizes. In this study, we evaluate a variety of methods on scanning electron microscope (SEM) images of graphene-reinforced polyurethane foams. The primary objective of this paper is to report our findings on the shortcomings of existing methods, so as to encourage the machine learning community to consider enhancements in metrics for assessing the quality of synthetic images in the materials science domain.

An Interactive Knowledge-based Multi-objective Evolutionary Algorithm Framework for Practical Optimization Problems

Sep 18, 2022

Abstract: Experienced users often have useful knowledge and intuition in solving real-world optimization problems. User knowledge can be formulated as inter-variable relationships to assist an optimization algorithm in finding good solutions faster. Such inter-variable interactions can also be automatically learned from high-performing solutions discovered at intermediate iterations in an optimization run - a process called innovization. These relations, if vetted by the users, can be enforced among newly generated solutions to steer the optimization algorithm towards practically promising regions in the search space. Challenges arise for large-scale problems where the number of such variable relationships may be high. This paper proposes an interactive knowledge-based evolutionary multi-objective optimization (IK-EMO) framework that extracts hidden variable-wise relationships as knowledge from evolving high-performing solutions, shares them with users to receive feedback, and applies them back to the optimization process to improve its effectiveness. The knowledge extraction process uses a systematic and elegant graph analysis method that scales well with the number of variables. The working of the proposed IK-EMO is demonstrated on three large-scale real-world engineering design problems. The simplicity and elegance of the proposed knowledge extraction process and the quick achievement of high-performing solutions indicate the power of the proposed framework. The results presented should motivate further interaction-based optimization studies for routine use in practice.

Neural Architecture Search as Multiobjective Optimization Benchmarks: Problem Formulation and Performance Assessment

Aug 08, 2022

Abstract: The ongoing advancements in network architecture design have led to remarkable achievements in deep learning across various challenging computer vision tasks. Meanwhile, the development of neural architecture search (NAS) has provided promising approaches to automating the design of network architectures for lower prediction error. Recently, the emerging application scenarios of deep learning have raised higher demands for network architectures considering multiple design criteria: number of parameters/floating-point operations, and inference latency, among others. From an optimization point of view, the NAS tasks involving multiple design criteria are intrinsically multiobjective optimization problems; hence, it is reasonable to adopt evolutionary multiobjective optimization (EMO) algorithms for tackling them. Nonetheless, there is still a clear gap confining the related research along this pathway: on the one hand, there is a lack of a general problem formulation of NAS tasks from an optimization point of view; on the other hand, there are challenges in conducting benchmark assessments of EMO algorithms on NAS tasks. To bridge the gap: (i) we formulate NAS tasks into general multi-objective optimization problems and analyze the complex characteristics from an optimization point of view; (ii) we present an end-to-end pipeline, dubbed EvoXBench, to generate benchmark test problems for EMO algorithms to run efficiently, without requiring GPUs or PyTorch/TensorFlow; (iii) we instantiate two test suites comprehensively covering two datasets, seven search spaces, and three hardware devices, involving up to eight objectives. Based on the above, we validate the proposed test suites using six representative EMO algorithms and provide some empirical analyses. The code of EvoXBench is available at https://github.com/EMI-Group/EvoXBench.

Optimal Design of Electric Machine with Efficient Handling of Constraints and Surrogate Assistance

Jun 03, 2022

Abstract: Electric machine design optimization is a computationally expensive multi-objective optimization problem. While the objectives require time-consuming finite element analysis, optimization constraints can often be based on mathematical expressions, such as geometric constraints. This article investigates this optimization problem, in which evaluation costs are mixed, by proposing an optimization method incorporated into a widely used evolutionary multi-objective optimization algorithm, NSGA-II. The proposed method exploits the inexpensiveness of geometric constraints to generate feasible designs by using a custom repair operator. The proposed method also addresses the time-consuming objective functions by incorporating surrogate models for predicting machine performance. The article establishes the superiority of the proposed method over the conventional optimization approach. This study demonstrates how a complex engineering design can be optimized for multiple objectives and constraints requiring heterogeneous evaluation times, and how the optimal solutions can be analyzed to select a single preferred solution and, importantly, harnessed to reveal vital design features common to optimal solutions as design principles.

Variable Functioning and Its Application to Large Scale Steel Frame Design Optimization

May 15, 2022

Abstract: To solve complex real-world problems, heuristics and concept-based approaches can be used to incorporate information into the problem. In this study, a concept-based approach called variable functioning (Fx) is introduced to reduce the number of optimization variables and narrow down the search space. In this method, the relationships among one or more subsets of variables are defined with functions using information available prior to optimization; thus, instead of modifying the variables in the search process, the function variables are optimized. Using a problem structure analysis technique and engineering expert knowledge, the Fx method is used to enhance the steel frame design optimization process as a complex real-world problem. The proposed approach is coupled with particle swarm optimization and differential evolution algorithms and used for three case studies. The algorithms are applied to optimize the case studies by considering the relationships among column cross-section areas. The results show that Fx can significantly improve both the convergence rate and the final design of a frame structure, even if it is only used for seeding.
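The core idea of optimizing function parameters instead of the original variables can be sketched as follows. The linear taper, the parameter names, and the lower bound are hypothetical choices made for illustration; the abstract does not state which functions the authors use for column cross-section areas.

```python
from typing import List


def column_areas(params: List[float], n_stories: int) -> List[float]:
    """Map two function parameters to one column area per story.

    Hypothetical relationship: the area shrinks linearly with story
    index, area(i) = base - slope * i, and never drops below min_area.
    """
    base, slope = params
    min_area = 1.0  # assumed lower bound, not taken from the paper
    return [max(min_area, base - slope * i) for i in range(n_stories)]


# An optimizer would search over [base, slope] (2 values) rather than
# over 5 independent column areas.
areas = column_areas([100.0, 10.0], 5)
print(areas)
```

Any optimizer, such as the particle swarm or differential evolution algorithms named in the abstract, can then evaluate a candidate by expanding its parameters with `column_areas` before running the structural analysis.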
