Amir H. Gandomi

Faculty of Engineering & Information Technology, University of Technology Sydney, University Research and Innovation Center

Smart Buildings Energy Consumption Forecasting using Adaptive Evolutionary Ensemble Learning Models

Jun 13, 2025

Abstract: Smart buildings are gaining popularity because they can enhance energy efficiency, lower costs, improve security, and provide a more comfortable and convenient environment for building occupants. A considerable portion of the global energy supply is consumed in the building sector, which plays a pivotal role in future decarbonization pathways. To manage energy consumption and improve energy efficiency in smart buildings, developing reliable and accurate energy demand forecasting is crucial. However, developing an effective predictive model for the total energy use of appliances at the building level is challenging because of temporal oscillations and complex linear and non-linear patterns. This paper proposes three hybrid ensemble predictive models, incorporating Bagging, Stacking, and Voting mechanisms combined with a fast and effective evolutionary hyperparameter tuner. The performance of the proposed energy forecasting models was evaluated using a hybrid dataset comprising meteorological parameters, appliance energy use, temperature, humidity, and lighting energy consumption from various sections of a building, collected by 18 sensors located in Stambroek, Mons, Belgium. To provide a comparative framework and investigate the efficiency of the proposed predictive models, 15 popular machine learning (ML) models, including two classic ML models, three neural networks (NNs), a Decision Tree (DT), a Random Forest (RF), two Deep Learning (DL) models, and six ensemble models, were compared. The prediction results indicate that the adaptive evolutionary bagging model surpassed the other predictive models in both accuracy and learning error. Notably, it achieved accuracy gains of 12.6%, 13.7%, 12.9%, 27.04%, and 17.4% compared to Extreme Gradient Boosting (XGB), Categorical Boosting (CatBoost), GBM, LGBM, and Random Forest (RF), respectively.
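The bagging mechanism named in this abstract can be illustrated with a minimal sketch. It is not the paper's model: the base learner here is a one-dimensional least-squares line, the evolutionary hyperparameter tuner is omitted, and the names `fit_line` and `bagging_predict` are hypothetical. The sketch only shows the general idea of training each base model on a bootstrap resample and averaging the predictions.

```python
import random


def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        # Degenerate resample (all x equal): fall back to a constant model.
        return 0.0, mean_y
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def bagging_predict(xs, ys, x_new, n_models=25, seed=0):
    """Average the predictions of base models fit on bootstrap resamples."""
    rng = random.Random(seed)
    n = len(xs)
    predictions = []
    for _ in range(n_models):
        # Bootstrap: draw n indices with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        slope, intercept = fit_line([xs[i] for i in idx], [ys[i] for i in idx])
        predictions.append(slope * x_new + intercept)
    return sum(predictions) / len(predictions)


# Toy data (illustrative only): a noiseless linear trend y = 2x + 1.
xs = [float(i) for i in range(10)]
ys = [2.0 * x + 1.0 for x in xs]
prediction = bagging_predict(xs, ys, x_new=12.0)
```

On real, noisy consumption data the averaging step is what reduces variance; stacking and voting differ mainly in how the base predictions are combined.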

Hybrid Wave-wind System Power Optimisation Using Effective Ensemble Covariance Matrix Adaptation Evolutionary Algorithm

May 28, 2025

Abstract: Floating hybrid wind-wave systems combine offshore wind platforms with wave energy converters (WECs) to create cost-effective and reliable energy solutions. Adequately designed and tuned WECs are essential to avoid unwanted loads disrupting turbine motion while efficiently harvesting wave energy. These systems diversify energy sources, enhancing energy security and reducing supply risks while providing a more consistent power output by smoothing energy production variability. However, optimising such systems is complex due to the physical and hydrodynamic interactions between components, resulting in a challenging optimisation space. This study uses a 5-MW OC4-DeepCwind semi-submersible platform with three spherical WECs to explore these synergies. To address these challenges, we propose an effective ensemble optimisation (EEA) technique that combines covariance matrix adaptation, novelty search, and discretisation techniques. To evaluate the EEA performance, we used four sea sites located along Australia's southern coast. In this framework, geometry and power take-off parameters are simultaneously optimised to maximise the average power output of the hybrid wind-wave system. Ensemble optimisation methods enhance performance, flexibility, and robustness by identifying the best algorithm or combination of algorithms for a given problem, addressing issues like premature convergence, stagnation, and poor search space exploration. The EEA was benchmarked against 14 advanced optimisation methods, demonstrating superior solution quality and convergence rates. EEA improved total power output by 111%, 95%, and 52% compared to WOA, EO, and AHA, respectively. Additionally, in comparisons with the advanced methods LSHADE, SaNSDE, and SLPSO, EEA achieved absorbed power enhancements of 498%, 638%, and 349%, respectively, at the Sydney sea site, showcasing its effectiveness in optimising hybrid energy systems.

Kolmogorov-Arnold networks for metal surface defect classification

Jan 10, 2025

Abstract: This paper presents the application of Kolmogorov-Arnold Networks (KANs) to the classification of metal surface defects. Specifically, steel surfaces are analyzed to detect defects such as cracks, inclusions, patches, pitted surfaces, and scratches. Drawing on the Kolmogorov-Arnold theorem, KANs provide a novel approach compared to conventional multilayer perceptrons (MLPs), facilitating more efficient function approximation by utilizing spline functions. The results show that KANs can achieve better accuracy than convolutional neural networks (CNNs) with fewer parameters, resulting in faster convergence and improved performance in image classification.

A Review of 315 Benchmark and Test Functions for Machine Learning Optimization Algorithms and Metaheuristics with Mathematical and Visual Descriptions

Jun 13, 2024

Abstract: In the rapidly evolving optimization and metaheuristics domains, the efficacy of algorithms is crucially determined by the benchmark (test) functions. While several functions have been developed and derived over the past decades, little information is available on the mathematical and visual description, range of suitability, and applications of many such functions. To bridge this knowledge gap, this review provides an exhaustive survey of more than 300 benchmark functions used in the evaluation of optimization and metaheuristic algorithms. This review first catalogs benchmark and test functions based on their characteristics, complexity, properties, visuals, and domain implications to offer a wide view that aids in selecting appropriate benchmarks for various algorithmic challenges. This review also lists the 25 most commonly used functions in the open literature and proposes two new, high-dimensional, dynamic, and challenging functions that could be used for testing new algorithms. Finally, this review identifies gaps in current benchmarking practices and suggests directions for future research.

Clustering in Dynamic Environments: A Framework for Benchmark Dataset Generation With Heterogeneous Changes

Feb 24, 2024

Abstract: Clustering in dynamic environments is of increasing importance, with broad applications ranging from real-time data analysis and online unsupervised learning to dynamic facility location problems. While meta-heuristics have shown promising effectiveness in static clustering tasks, their application for tracking optimal clustering solutions or robust clustering over time in dynamic environments remains largely underexplored. This is partly due to a lack of dynamic datasets with diverse, controllable, and realistic dynamic characteristics, hindering systematic performance evaluations of clustering algorithms in various dynamic scenarios. This deficiency leads to a gap in our understanding and capability to effectively design algorithms for clustering in dynamic environments. To bridge this gap, this paper introduces the Dynamic Dataset Generator (DDG). DDG features multiple dynamic Gaussian components integrated with a range of heterogeneous, local, and global changes. These changes vary in spatial and temporal severity, patterns, and domain of influence, providing a comprehensive tool for simulating a wide range of dynamic scenarios.

Semantic-Preserving Feature Partitioning for Multi-View Ensemble Learning

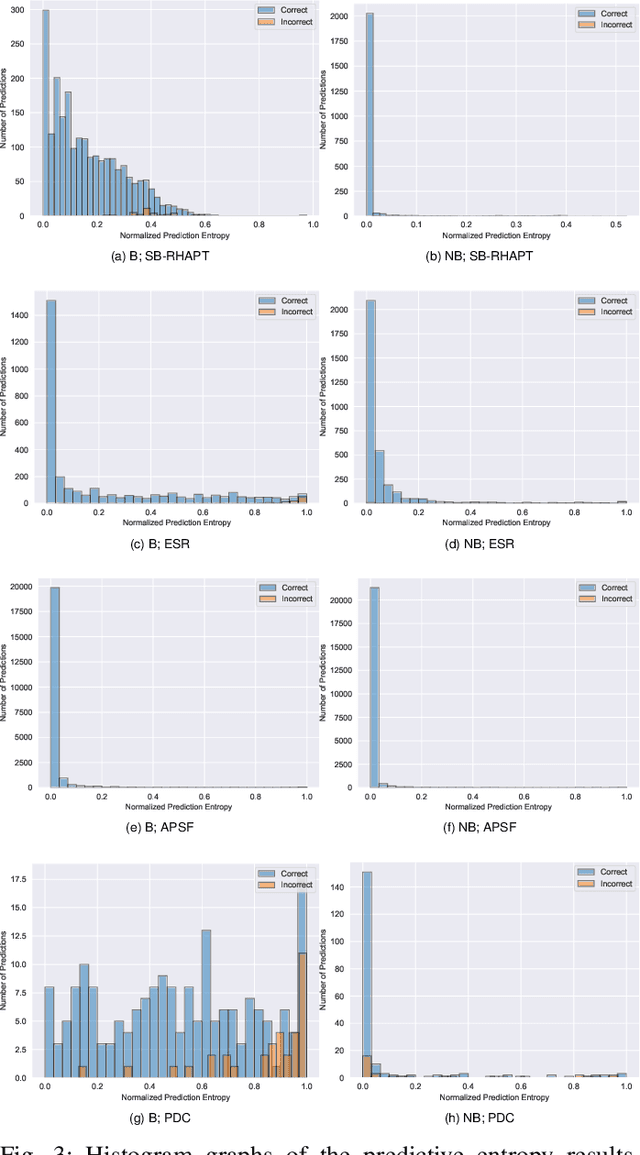

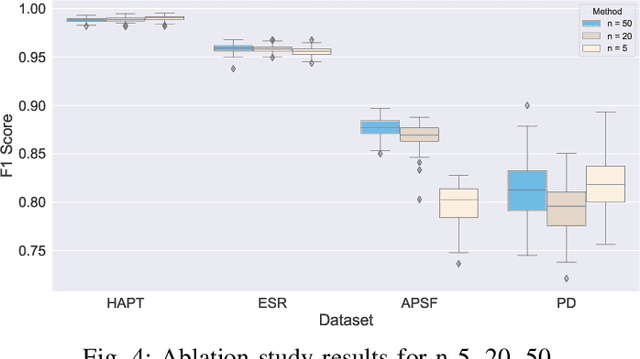

Jan 11, 2024

Abstract: In machine learning, the exponential growth of data and the associated "curse of dimensionality" pose significant challenges, particularly with expansive yet sparse datasets. Addressing these challenges, multi-view ensemble learning (MEL) has emerged as a transformative approach, with feature partitioning (FP) playing a pivotal role in constructing artificial views for MEL. Our study introduces the Semantic-Preserving Feature Partitioning (SPFP) algorithm, a novel method grounded in information theory. The SPFP algorithm effectively partitions datasets into multiple semantically consistent views, enhancing the MEL process. Through extensive experiments on eight real-world datasets, ranging from high-dimensional with limited instances to low-dimensional with many instances, our method demonstrates notable efficacy. It maintains model accuracy while significantly improving uncertainty measures in scenarios where high generalization performance is achievable. Conversely, it retains uncertainty metrics while enhancing accuracy where high generalization accuracy is less attainable. An effect size analysis further reveals that the SPFP algorithm outperforms benchmark models by a large effect size and reduces computational demands through effective dimensionality reduction. The substantial effect sizes observed in most experiments underscore the algorithm's significant improvements in model performance.

GNBG-Generated Test Suite for Box-Constrained Numerical Global Optimization

Dec 12, 2023

Abstract: This document introduces a set of 24 box-constrained numerical global optimization problem instances, systematically constructed using the Generalized Numerical Benchmark Generator (GNBG). These instances cover a broad spectrum of problem features, including varying degrees of modality, ruggedness, symmetry, conditioning, variable interaction structures, basin linearity, and deceptiveness. Purposefully designed, this test suite offers varying difficulty levels and problem characteristics, facilitating rigorous evaluation and comparative analysis of optimization algorithms. By presenting these problems, we aim to provide researchers with a structured platform to assess the strengths and weaknesses of their algorithms against challenges with known, controlled characteristics. For reproducibility, the MATLAB source code for this test suite is publicly available.

GNBG: A Generalized and Configurable Benchmark Generator for Continuous Numerical Optimization

Dec 12, 2023

Abstract: As optimization challenges continue to evolve, so too must our tools and understanding. To effectively assess, validate, and compare optimization algorithms, it is crucial to use a benchmark test suite that encompasses a diverse range of problem instances with various characteristics. Traditional benchmark suites often consist of numerous fixed test functions, making it challenging to align these with specific research objectives, such as the systematic evaluation of algorithms under controllable conditions. This paper introduces the Generalized Numerical Benchmark Generator (GNBG) for single-objective, box-constrained, continuous numerical optimization. Unlike existing approaches that rely on multiple baseline functions and transformations, GNBG utilizes a single, parametric, and configurable baseline function. This design allows for control over various problem characteristics. Researchers using GNBG can generate instances that cover a broad array of morphological features, from unimodal to highly multimodal functions, various local optima patterns, and symmetric to highly asymmetric structures. The generated problems can also vary in separability, variable interaction structures, dimensionality, conditioning, and basin shapes. These customizable features enable the systematic evaluation and comparison of optimization algorithms, allowing researchers to probe their strengths and weaknesses under diverse and controllable conditions.

Noise-Augmented Boruta: The Neural Network Perturbation Infusion with Boruta Feature Selection

Sep 18, 2023

Abstract: With the surge in data generation, both vertically (i.e., volume of data) and horizontally (i.e., dimensionality), the burden of the curse of dimensionality has become increasingly palpable. Feature selection, a key facet of dimensionality reduction techniques, has advanced considerably to address this challenge. One such advancement is the Boruta feature selection algorithm, which successfully discerns meaningful features by contrasting them with their permuted counterparts, known as shadow features. However, the significance of a feature is shaped more by the data's overall traits than by its intrinsic value, a sentiment echoed in the conventional Boruta algorithm, where shadow features closely mimic the characteristics of the original ones. Building on this premise, this paper introduces an innovative approach to the Boruta feature selection algorithm by incorporating noise into the shadow variables. Drawing parallels from the perturbation analysis framework of artificial neural networks, this evolved version of the Boruta method is presented. Rigorous testing on four publicly available benchmark datasets revealed that the proposed technique outperforms the classic Boruta algorithm, underscoring its potential for enhanced, accurate feature selection.
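The shadow-feature idea described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's procedure: the abstract does not specify how the noise is generated, so zero-mean Gaussian noise scaled by each column's standard deviation is assumed here, and the function name `make_shadow_features` and the parameter `noise_scale` are hypothetical.

```python
import random
import statistics


def make_shadow_features(columns, noise_scale=0.0, seed=0):
    """Return one shadow column per input feature column.

    Each shadow column is a random permutation of the original values,
    as in classic Boruta. When noise_scale > 0, zero-mean Gaussian noise
    with standard deviation noise_scale * std(column) is added on top
    (an assumed noise model, chosen only for illustration).
    """
    rng = random.Random(seed)
    shadows = []
    for col in columns:
        shadow = list(col)
        rng.shuffle(shadow)  # break any link between feature and target
        if noise_scale > 0:
            sigma = noise_scale * statistics.pstdev(col)
            shadow = [value + rng.gauss(0.0, sigma) for value in shadow]
        shadows.append(shadow)
    return shadows


# Toy feature columns (illustrative only).
features = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0]]
plain_shadows = make_shadow_features(features)
noisy_shadows = make_shadow_features(features, noise_scale=0.1)
```

In a full Boruta loop, a model would be trained on the original and shadow columns together, and a feature would be kept only if its importance repeatedly exceeds that of the best shadow feature.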

Unveiling the frontiers of deep learning: innovations shaping diverse domains

Sep 06, 2023

Abstract: Deep learning (DL) enables the development of computer models that are capable of learning, visualizing, optimizing, refining, and predicting data. In recent years, DL has been applied in a range of fields, including audio-visual data processing, agriculture, transportation prediction, natural language, biomedicine, disaster management, bioinformatics, drug design, genomics, face recognition, and ecology. To explore the current state of deep learning, it is necessary to investigate the latest developments and applications of deep learning in these disciplines. However, the literature is lacking in exploring the applications of deep learning in all potential sectors. This paper thus extensively investigates the potential applications of deep learning across all major fields of study as well as the associated benefits and challenges. As evidenced in the literature, DL exhibits accuracy in prediction and analysis, which makes it a powerful computational tool, and it has the ability to articulate itself and optimize, making it effective in processing data with no prior training. At the same time, deep learning necessitates massive amounts of data for effective analysis and processing. To handle the challenge of compiling huge amounts of medical, scientific, healthcare, and environmental data for use in deep learning, gated architectures like LSTMs and GRUs can be utilized. For multimodal learning, shared neurons in the neural network for all activities and specialized neurons for particular tasks are necessary.
