Wenjian Luo

Fairness-Aware Performance Evaluation for Multi-Party Multi-Objective Optimization

Jan 30, 2026

Abstract: In multiparty multiobjective optimization problems (MPMOPs), solution sets are usually evaluated using classical performance metrics aggregated across decision makers (DMs). However, such mean-based evaluations may be unfair by favoring certain parties, as they assume that identical geometric approximation quality with respect to each party's Pareto front (PF) carries comparable evaluative significance. Moreover, prevailing notions of MPMOP optimal solutions are restricted to strictly common Pareto optimal solutions, representing a narrow form of cooperation in multiparty decision-making scenarios. These limitations obscure whether a solution set reflects balanced relative gains or meaningful consensus among heterogeneous DMs. To address these issues, this paper develops a fairness-aware performance evaluation framework grounded in a generalized notion of consensus solutions. From a cooperative game-theoretic perspective, we formalize four axioms that a fairness-aware evaluation function for MPMOPs should satisfy. By introducing a concession rate vector to quantify acceptable compromises by individual DMs, we generalize the classical definition of MPMOP optimal solutions and embed classical performance metrics into a Nash-product-based evaluation framework, which is theoretically shown to satisfy all axioms. To support empirical validation, we further construct benchmark problems that extend existing MPMOP suites by incorporating consensus-deficient negotiation structures. Experimental results demonstrate that the proposed evaluation framework is able to distinguish algorithmic performance in a manner consistent with consensus-aware fairness considerations. Specifically, algorithms converging toward strictly common solutions are assigned higher evaluation scores when such solutions exist, whereas in the absence of strictly common solutions, algorithms that effectively cover the commonly acceptable region are more favorably evaluated.
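The contrast between mean-based and Nash-product-based aggregation can be illustrated with a minimal sketch. It assumes each party's metric has already been normalized to a score in (0, 1] where higher is better; the function names are hypothetical, and the paper's actual evaluation function (including the concession rate vector) is not reproduced here.

```python
import math

def mean_score(party_scores):
    """Arithmetic mean of per-party quality scores (higher is better)."""
    return sum(party_scores) / len(party_scores)

def nash_product_score(party_scores):
    """Product of per-party scores: a low score for any one party pulls the total down."""
    return math.prod(party_scores)

# Two hypothetical solution sets evaluated by two parties (assumed normalized scores).
balanced = [0.6, 0.6]   # both parties are served equally well
lopsided = [1.0, 0.2]   # one party is favored at the other's expense

print(mean_score(balanced), mean_score(lopsided))
print(nash_product_score(balanced), nash_product_score(lopsided))
```

Under these assumed scores the mean cannot separate the two sets, while the product ranks the balanced set higher, which is the kind of distinction a fairness-aware evaluation is meant to capture.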

BAMBO: Construct Ability and Efficiency LLM Pareto Set via Bayesian Adaptive Multi-objective Block-wise Optimization

Dec 12, 2025

Abstract: Constructing a Pareto set is pivotal for navigating the capability-efficiency trade-offs in Large Language Models (LLMs); however, existing merging techniques remain inadequate for this task. Coarse-grained, model-level methods yield only a sparse set of suboptimal solutions, while fine-grained, layer-wise approaches suffer from the "curse of dimensionality," rendering the search space computationally intractable. To resolve this dichotomy, we propose BAMBO (Bayesian Adaptive Multi-objective Block-wise Optimization), a novel framework that automatically constructs the LLM Pareto set. BAMBO renders the search tractable by introducing a Hybrid Optimal Block Partitioning strategy. Formulated as a 1D clustering problem, this strategy leverages a dynamic programming approach to optimally balance intra-block homogeneity and inter-block information distribution, thereby dramatically reducing dimensionality without sacrificing critical granularity. The entire process is automated within an evolutionary loop driven by the q-Expected Hypervolume Improvement (qEHVI) acquisition function. Experiments demonstrate that BAMBO discovers a superior and more comprehensive Pareto frontier than baselines, enabling agile model selection tailored to diverse operational constraints. Code is available at: https://github.com/xin8coder/BAMBO.
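As a rough illustration of 1D clustering by dynamic programming, the sketch below splits an ordered sequence of per-layer values into `k` contiguous blocks. It is not BAMBO's partitioning strategy: the cost used here (within-block squared deviation only) and the function name `partition_1d` are assumptions, and the inter-block information term mentioned in the abstract is not modeled.

```python
def partition_1d(values, k):
    """Split `values` into k contiguous blocks minimizing total within-block squared deviation."""
    n = len(values)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and len(values)")
    # Prefix sums of values and squares give O(1) cost for any contiguous block.
    ps = [0.0] * (n + 1)
    ps2 = [0.0] * (n + 1)
    for i, v in enumerate(values):
        ps[i + 1] = ps[i] + v
        ps2[i + 1] = ps2[i] + v * v

    def cost(i, j):
        # Sum of squared deviations from the mean for values[i:j], with j > i.
        s = ps[j] - ps[i]
        return (ps2[j] - ps2[i]) - s * s / (j - i)

    inf = float("inf")
    # best[b][j]: minimal cost of splitting values[:j] into b blocks.
    best = [[inf] * (n + 1) for _ in range(k + 1)]
    cut = [[0] * (n + 1) for _ in range(k + 1)]
    best[0][0] = 0.0
    for b in range(1, k + 1):
        for j in range(b, n + 1):
            for i in range(b - 1, j):
                c = best[b - 1][i] + cost(i, j)
                if c < best[b][j]:
                    best[b][j] = c
                    cut[b][j] = i
    # Walk the stored cut points backwards to recover (start, end) block boundaries.
    bounds, j = [], n
    for b in range(k, 0, -1):
        i = cut[b][j]
        bounds.append((i, j))
        j = i
    return bounds[::-1]

# Hypothetical per-layer statistics; three clearly separated groups.
print(partition_1d([1.0, 1.1, 0.9, 5.0, 5.2, 9.0], 3))
```

This cubic-time recurrence is the textbook form; grouping layers into a handful of blocks is what reduces the number of variables a downstream optimizer has to search over.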

Nearest-Better Network for Visualizing and Analyzing Combinatorial Optimization Problems: A Unified Tool

Jul 30, 2025

Abstract: The Nearest-Better Network (NBN) is a powerful method to visualize sampled data for continuous optimization problems while preserving multiple landscape features. However, the calculation of NBN is very time-consuming, and the extension of the method to combinatorial optimization problems is challenging but very important for analyzing an algorithm's behavior. This paper provides a straightforward theoretical derivation showing that the NBN essentially functions as the maximum probability transition network for algorithms. This paper also presents an efficient NBN computation method with log-linear time complexity to address the time-consuming issue. By applying this efficient NBN algorithm to the OneMax problem and the Traveling Salesman Problem (TSP), we have made several remarkable discoveries for the first time: the fitness landscape of OneMax exhibits neutrality, ruggedness, and modality features. The primary challenges of TSP problems are ruggedness, modality, and deception. Two state-of-the-art TSP algorithms (i.e., EAX and LKH) have limitations when addressing challenges related to modality and deception, respectively. LKH, based on local search operators, fails when there are deceptive solutions near global optima. EAX, which is based on a single population, can efficiently maintain diversity. However, when multiple attraction basins exist, EAX retains individuals within multiple basins simultaneously, reducing inter-basin interaction efficiency and leading to stagnation of the algorithm.
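The basic idea of a nearest-better network can be sketched as follows: each sampled solution is linked to the closest sample that has strictly better fitness. This is a brute-force quadratic-time illustration, not the efficient method the paper describes; the function names, the use of Hamming distance, and the strict-improvement rule are assumptions made for this example.

```python
def hamming(a, b):
    """Number of positions at which two equal-length sequences differ."""
    return sum(x != y for x, y in zip(a, b))

def nearest_better_network(solutions, fitness, distance=hamming):
    """Map each sample index to the index of its nearest strictly better sample.

    The best sample has no better neighbor and maps to None.
    """
    edges = {}
    for i, s in enumerate(solutions):
        better = [j for j in range(len(solutions)) if fitness[j] > fitness[i]]
        if better:
            edges[i] = min(better, key=lambda j: distance(s, solutions[j]))
        else:
            edges[i] = None
    return edges

# Four hypothetical bit-string samples scored with OneMax (count of ones).
samples = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (1, 1, 1)]
onemax = [sum(s) for s in samples]
network = nearest_better_network(samples, onemax)
print(network)
```

Because every non-best sample has exactly one outgoing edge, the result is a forest whose roots are the best samples, which is what makes the structure convenient to draw.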

Decomposability-Guaranteed Cooperative Coevolution for Large-Scale Itinerary Planning

Jun 06, 2025

Abstract: Large-scale itinerary planning is a variant of the traveling salesman problem, aiming to determine an optimal path that maximizes the total score of the collected points of interest (POIs) while minimizing travel time and cost, subject to travel duration constraints. This paper analyzes the decomposability of large-scale itinerary planning, proving that strict decomposability is difficult to satisfy, and introduces a weak decomposability definition based on a necessary condition, deriving the corresponding graph structures that fulfill this property. With decomposability guaranteed, we propose a novel multi-objective cooperative coevolutionary algorithm for large-scale itinerary planning, addressing the challenges of component imbalance and interactions. Specifically, we design a dynamic decomposition strategy based on the normalized fitness within each component, define optimization potential considering component scale and contribution, and develop a computational resource allocation strategy. Finally, we evaluate the proposed algorithm on a set of real-world datasets. Comparative experiments with state-of-the-art multi-objective itinerary planning algorithms demonstrate the superiority of our approach, with performance advantages increasing as the problem scale grows.

Community Search in Time-dependent Road-social Attributed Networks

May 18, 2025

Abstract: Real-world networks often involve both keywords and locations, along with travel time variations between locations due to traffic conditions. However, most existing cohesive subgraph-based community search studies utilize a single attribute, either keywords or locations, to identify communities. They do not simultaneously consider both keywords and locations, which results in low semantic or spatial cohesiveness of the detected communities, and they fail to account for variations in travel time. Additionally, these studies traverse the entire network to build efficient indexes, but the detected community only involves nodes around the query node, leading to the traversal of nodes that are not relevant to the community. Therefore, we propose the problem of discovering semantic-spatial aware k-core, which refers to a k-core with high semantic and time-dependent spatial cohesiveness containing the query node. To address this problem, we propose an exact and a greedy algorithm, both of which gradually expand outward from the query node. They are local methods that only access the local part of the attributed network near the query node rather than the entire network. Moreover, we design a method to calculate the semantic similarity between two keywords using large language models. This method alleviates the disadvantages of keyword-matching methods used in existing community search studies, such as mismatches caused by differently expressed synonyms and the presence of irrelevant words. Experimental results show that the greedy algorithm outperforms baselines in terms of structural, semantic, and time-dependent spatial cohesiveness.
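For readers unfamiliar with the underlying structure, the sketch below finds the plain k-core component that contains a query node: the maximal connected subgraph around the query in which every node has at least `k` neighbors. It covers only the structural part, peels the whole graph rather than expanding locally as the paper's algorithms do, and ignores keywords and travel times; the function name and the adjacency-dictionary input are assumptions.

```python
from collections import deque

def k_core_containing(adj, query, k):
    """Return the connected k-core component containing `query`, or an empty set."""
    degree = {v: len(ns) for v, ns in adj.items()}
    removed = set()
    # Repeatedly peel nodes whose remaining degree is below k.
    queue = deque(v for v, d in degree.items() if d < k)
    while queue:
        v = queue.popleft()
        if v in removed:
            continue
        removed.add(v)
        for u in adj[v]:
            if u not in removed:
                degree[u] -= 1
                if degree[u] < k:
                    queue.append(u)
    if query not in adj or query in removed:
        return set()
    # Breadth-first search over surviving nodes, starting from the query.
    component, frontier = {query}, deque([query])
    while frontier:
        v = frontier.popleft()
        for u in adj[v]:
            if u not in removed and u not in component:
                component.add(u)
                frontier.append(u)
    return component

# Hypothetical undirected graph: a triangle a-b-c with a pendant node d.
graph = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"}, "d": {"c"}}
print(k_core_containing(graph, "a", 2))
```

A semantic-spatial variant would add further filters on top of this structural constraint, which is where the keyword similarity and time-dependent distances described in the abstract come in.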

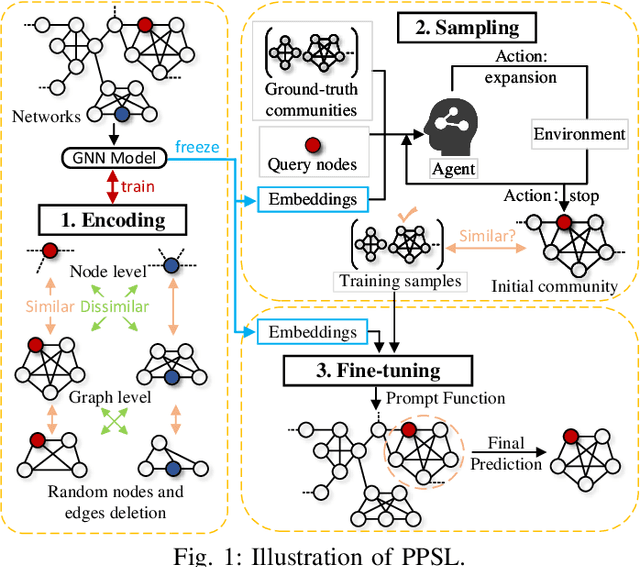

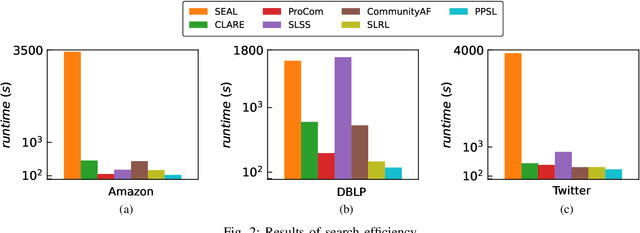

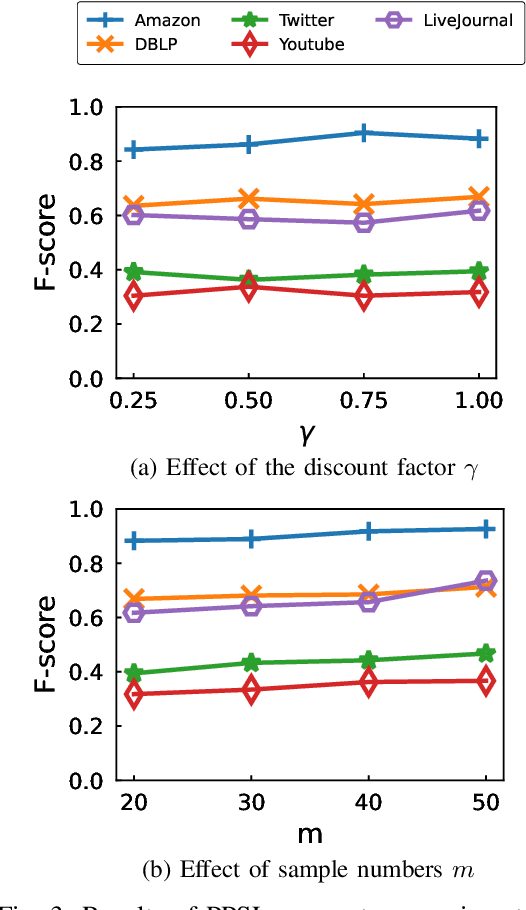

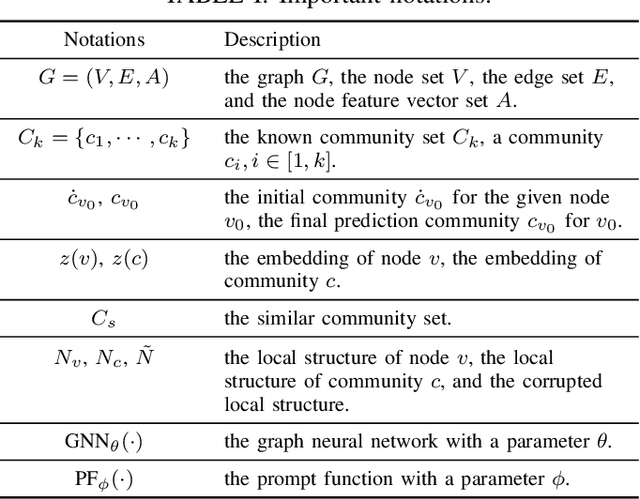

Pre-trained Prompt-driven Community Search

May 18, 2025

Abstract: The "pre-train, prompt" paradigm is widely adopted in various graph-based tasks and has shown promising performance in community detection. Most existing semi-supervised community detection algorithms detect communities based on known ones, and the detected communities typically do not contain the given query node. Therefore, they are not suitable for searching the community of a given node. Motivated by this, we apply this paradigm to semi-supervised community search for the first time and propose Pre-trained Prompt-driven Community Search (PPCS), a novel model designed to enhance search accuracy and efficiency. PPCS consists of three main components: node encoding, sample generation, and prompt-driven fine-tuning. Specifically, the node encoding component employs graph neural networks to learn local structural patterns of nodes in a graph, thereby obtaining representations for nodes and communities. Next, the sample generation component identifies an initial community for a given node and selects known communities that are structurally similar to the initial one as training samples. Finally, the prompt-driven fine-tuning component leverages these samples as prompts to guide the final community prediction. Experimental results on five real-world datasets demonstrate that PPCS performs better than baseline algorithms. It also achieves higher community search efficiency than semi-supervised community search baseline methods, with ablation studies verifying the effectiveness of each component of PPCS.

Community and hyperedge inference in multiple hypergraphs

May 08, 2025

Abstract: Hypergraphs, capable of representing high-order interactions via hyperedges, have become a powerful tool for modeling real-world biological and social systems. Inherent relationships within these real-world systems, such as the encoding relationship between genes and their protein products, drive the establishment of interconnections between multiple hypergraphs. Here, we demonstrate how to utilize those interconnections between multiple hypergraphs to synthesize integrated information from multiple higher-order systems, thereby enhancing understanding of underlying structures. We propose a model based on the stochastic block model, which integrates information from multiple hypergraphs to reveal latent high-order structures. Real-world hyperedges exhibit preferential attachment, where certain nodes dominate hyperedge formation. To characterize this phenomenon, our model introduces hyperedge internal degree to quantify nodes' contributions to hyperedge formation. This model is capable of mining communities, predicting missing hyperedges of arbitrary sizes within hypergraphs, and inferring inter-hypergraph edges between hypergraphs. We apply our model to high-order datasets to evaluate its performance. Experimental results demonstrate strong performance of our model in community detection, hyperedge prediction, and inter-hypergraph edge prediction tasks. Moreover, we show that our model enables analysis of multiple hypergraphs of different types and supports the analysis of a single hypergraph in the absence of inter-hypergraph edges. Our work provides a practical and flexible tool for analyzing multiple hypergraphs, greatly advancing the understanding of the organization in real-world high-order systems.

Showing Many Labels in Multi-label Classification Models: An Empirical Study of Adversarial Examples

Sep 26, 2024

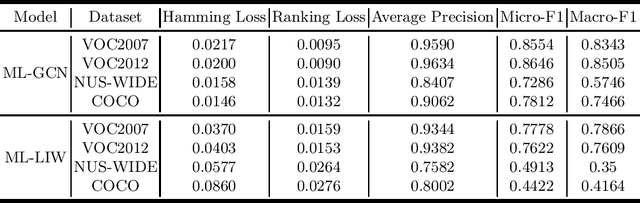

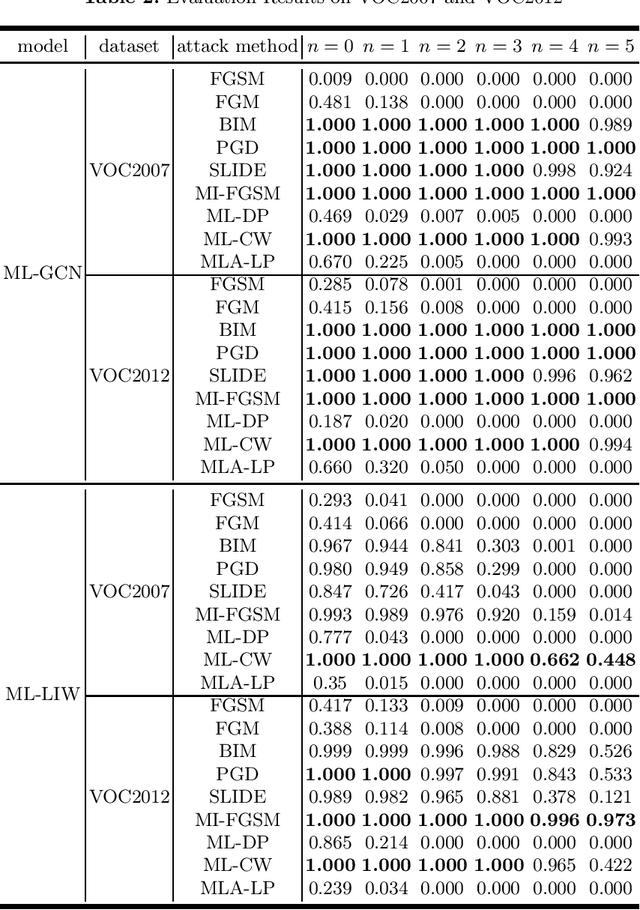

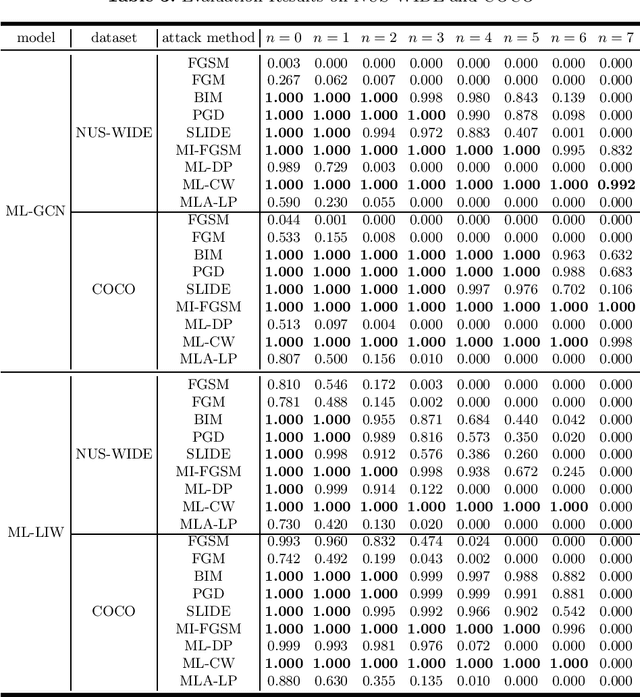

Abstract: Deep Neural Networks (DNNs) have developed rapidly and have been applied in numerous fields. However, research indicates that DNNs are susceptible to adversarial examples, and this is equally true in the multi-label domain. To further investigate multi-label adversarial examples, we introduce a novel type of attack, termed "Showing Many Labels". The objective of this attack is to maximize the number of labels included in the classifier's prediction results. In our experiments, we select nine attack algorithms and evaluate their performance under "Showing Many Labels". Eight of the attack algorithms were adapted from the multi-class environment to the multi-label environment, while the remaining one was specifically designed for the multi-label environment. We choose ML-LIW and ML-GCN as target models and train them on four popular multi-label datasets: VOC2007, VOC2012, NUS-WIDE, and COCO. We record the success rate of each algorithm when it shows the expected number of labels in eight different scenarios. Experimental results indicate that under "Showing Many Labels", iterative attacks perform significantly better than one-step attacks. Moreover, it is possible to show all labels in the dataset.

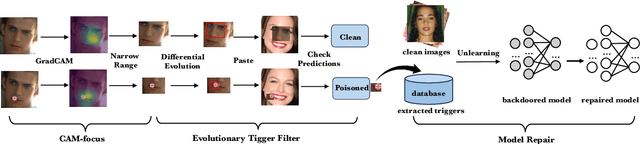

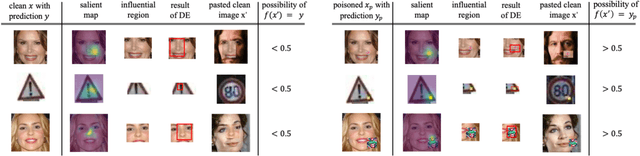

Evolutionary Trigger Detection and Lightweight Model Repair Based Backdoor Defense

Jul 07, 2024

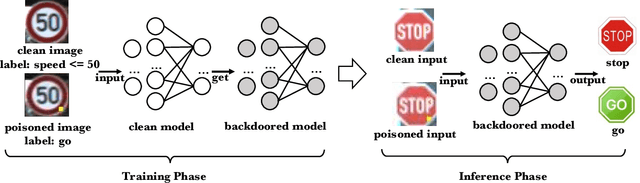

Abstract: Deep Neural Networks (DNNs) have been widely used in many areas such as autonomous driving and face recognition. However, DNN models are vulnerable to backdoor attacks. A backdoor in a DNN model can be activated by a poisoned input containing a trigger, leading to wrong predictions and causing serious security issues in applications. It is challenging for current defenses to eliminate the backdoor effectively with limited computing resources, especially when the sizes and numbers of the triggers are variable, as in the physical world. We propose an efficient backdoor defense based on evolutionary trigger detection and lightweight model repair. In the first phase of our method, the CAM-focus Evolutionary Trigger Filter (CETF) is proposed for trigger detection. CETF is an effective sample-preprocessing method based on an evolutionary algorithm, and our experimental results show that CETF not only accurately distinguishes images with triggers from clean images, but can also be widely used in practice owing to its simplicity and stability across different backdoor attack situations. In the second phase of our method, we leverage several lightweight unlearning methods with the trigger detected by CETF for model repair, which also demonstrates the underlying correlation of the backdoor with Batch Normalization layers. Source code will be published after acceptance.

Benchmark for CEC 2024 Competition on Multiparty Multiobjective Optimization

Feb 03, 2024

Abstract: The competition focuses on Multiparty Multiobjective Optimization Problems (MPMOPs), where multiple decision makers have conflicting objectives, as seen in applications like UAV path planning. Despite their importance, MPMOPs remain understudied in comparison to conventional multiobjective optimization. The competition aims to address this gap by encouraging researchers to explore tailored modeling approaches. The test suite comprises two parts: problems with common Pareto optimal solutions and Biparty Multiobjective UAV Path Planning (BPMO-UAVPP) problems with unknown solutions. Optimization algorithms for the first part are evaluated using Multiparty Inverted Generational Distance (MPIGD), and the second part is evaluated using Multiparty Hypervolume (MPHV) metrics. The average algorithm ranking across all problems serves as a performance benchmark.
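As background for the metrics named above, the following is a minimal sketch of the standard single-party Inverted Generational Distance (IGD), on which the multiparty variant builds. How MPIGD combines distances across parties is not stated in this abstract, so only the classical form is shown; the function name and the sample points are assumptions.

```python
import math

def igd(reference_front, obtained_set):
    """Mean distance from each reference point to its nearest obtained point (lower is better)."""
    total = sum(
        min(math.dist(r, p) for p in obtained_set)
        for r in reference_front
    )
    return total / len(reference_front)

# Hypothetical two-objective reference front and an obtained set covering only one end.
reference = [(0.0, 1.0), (1.0, 0.0)]
obtained = [(0.0, 1.0)]
print(igd(reference, obtained))
```

Because the average is taken over reference points, an obtained set that misses part of the front is penalized even when the points it does contain lie exactly on the front.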
