Pranav Nashikkar

Discovering Adaptable Symbolic Algorithms from Scratch

Jul 31, 2023

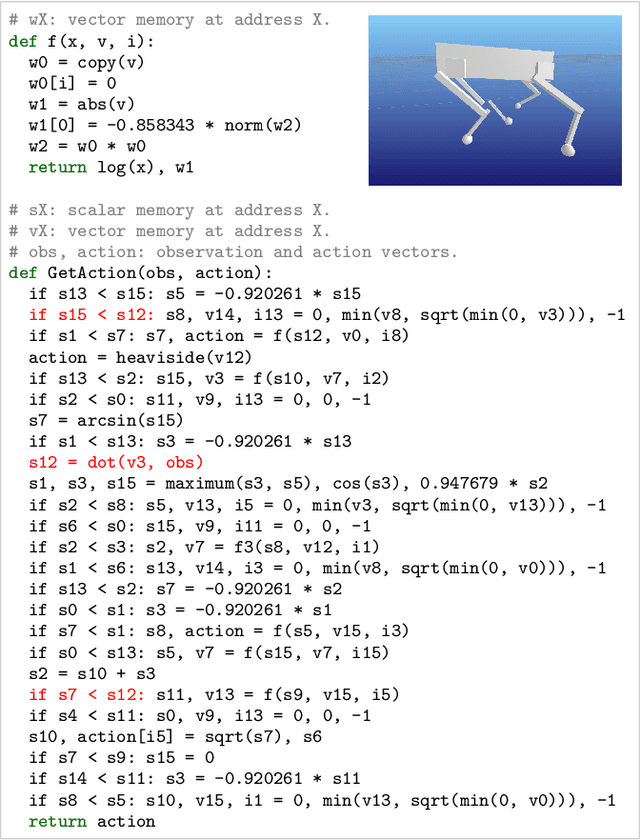

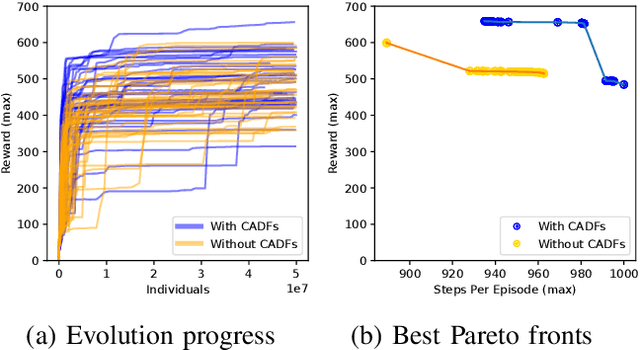

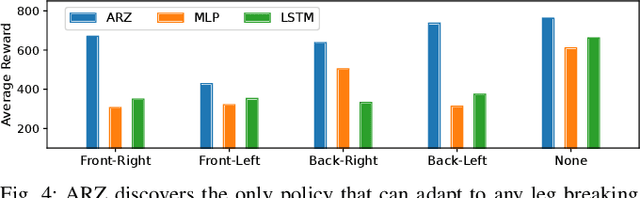

Abstract: Autonomous robots deployed in the real world will need control policies that rapidly adapt to environmental changes. To this end, we propose AutoRobotics-Zero (ARZ), a method based on AutoML-Zero that discovers zero-shot adaptable policies from scratch. In contrast to neural network adaptation policies, where only model parameters are optimized, ARZ can build control algorithms with the full expressive power of a linear register machine. We evolve modular policies that tune their model parameters and alter their inference algorithm on-the-fly to adapt to sudden environmental changes. We demonstrate our method on a realistic simulated quadruped robot, for which we evolve safe control policies that avoid falling when individual limbs suddenly break. This is a challenging task in which two popular neural network baselines fail. Finally, we conduct a detailed analysis of our method on a novel and challenging non-stationary control task dubbed Cataclysmic Cartpole. Results confirm our findings that ARZ is significantly more robust to sudden environmental changes and can build simple, interpretable control policies.

Optimizing Object-based Perception and Control by Free-Energy Principle

Mar 04, 2019

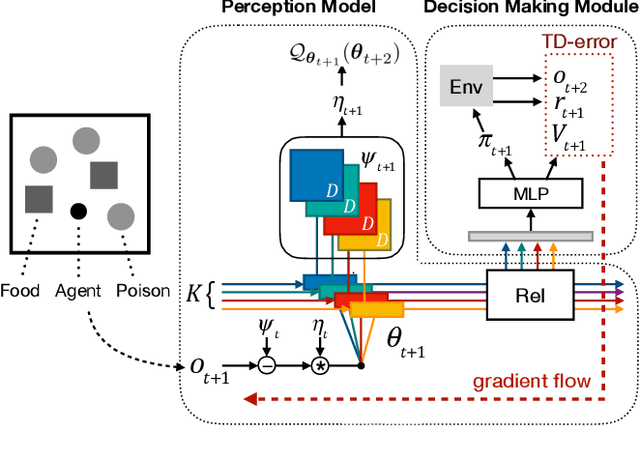

Abstract: One of the well-known formulations of human perception is a hierarchical inference model based on the interaction between conceptual knowledge and sensory stimuli from the partially observable environment. This model helps humans learn inductive biases and guides their behavior by minimizing the surprise of their observations. However, most model-based reinforcement learning still lacks support for object-based physical reasoning. In this paper, we propose Object-based Perception Control (OPC). It uses the free-energy principle to combine learning to perceive objects in a scene with learning to control those objects in the perceived environment. Extensive experiments on high-dimensional pixel environments show that OPC outperforms several strong baselines in accumulated rewards and the quality of perceptual grouping.
