Junyi An

Equivariant Spherical Transformer for Efficient Molecular Modeling

May 29, 2025

Abstract: SE(3)-equivariant Graph Neural Networks (GNNs) have significantly advanced molecular system modeling by employing group representations. However, their message passing processes, which rely on tensor product-based convolutions, are limited by insufficient non-linearity and incomplete group representations, thereby restricting expressiveness. To overcome these limitations, we introduce the Equivariant Spherical Transformer (EST), a novel framework that leverages a Transformer structure within the spatial domain of group representations after Fourier transform. We theoretically and empirically demonstrate that EST can encompass the function space of tensor products while achieving superior expressiveness. Furthermore, EST's equivariant inductive bias is guaranteed through a uniform sampling strategy for the Fourier transform. Our experiments demonstrate state-of-the-art performance by EST on various molecular benchmarks, including OC20 and QM9.
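To make the term "SE(3)-equivariant" concrete, here is a minimal sketch of how the property can be checked numerically. It is not EST or any model from the paper: `toy_vector_messages` is a hypothetical stand-in layer, and `random_rotation` is an illustrative helper. The check is that rotating and translating the input positions rotates the vector outputs in the same way.

```python
import numpy as np

def random_rotation(rng):
    """Return some 3x3 rotation matrix (orthogonal, determinant +1)."""
    q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]  # flip one axis to turn a reflection into a rotation
    return q

def toy_vector_messages(pos):
    """Hypothetical layer: each atom sums distance-weighted relative vectors."""
    diff = pos[None, :, :] - pos[:, None, :]             # diff[i, j] = pos[j] - pos[i]
    dist = np.linalg.norm(diff, axis=-1, keepdims=True)  # unchanged by rotation/translation
    return (np.exp(-dist) * diff).sum(axis=1)            # one 3-vector per atom

rng = np.random.default_rng(0)
pos = rng.normal(size=(5, 3))   # 5 atoms with made-up coordinates
rot = random_rotation(rng)
shift = rng.normal(size=3)

lhs = toy_vector_messages(pos @ rot.T + shift)  # transform first, then apply the layer
rhs = toy_vector_messages(pos) @ rot.T          # apply the layer, then rotate the output
is_equivariant = np.allclose(lhs, rhs)
print(is_equivariant)
```

The toy layer passes because it uses only relative positions, which cancel the translation, and rotation-invariant weights.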

Physics-inspired Energy Transition Neural Network for Sequence Learning

May 06, 2025

Abstract: Recently, the superior performance of Transformers has made them a more robust and scalable solution for sequence modeling than traditional recurrent neural networks (RNNs). However, the effectiveness of Transformers in capturing long-term dependencies is primarily attributed to their comprehensive pair-modeling process rather than inherent inductive biases toward sequence semantics. In this study, we explore the capabilities of pure RNNs and reassess their long-term learning mechanisms. Inspired by energy transition models in physics, which track energy changes over time, we propose an effective recurrent structure called the "Physics-inspired Energy Transition Neural Network" (PETNN). We demonstrate that PETNN's memory mechanism effectively stores information over long-term dependencies. Experimental results indicate that PETNN outperforms Transformer-based methods across various sequence tasks. Furthermore, owing to its recurrent nature, PETNN exhibits significantly lower complexity. Our study presents an optimal foundational recurrent architecture and highlights the potential for developing effective recurrent neural networks in fields currently dominated by Transformers.

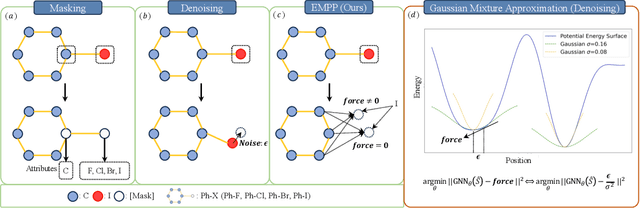

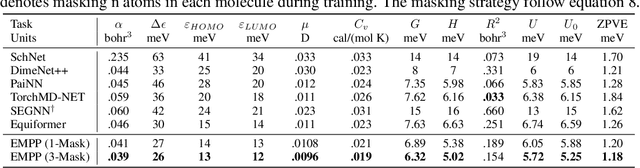

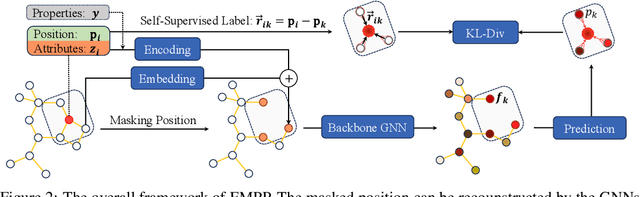

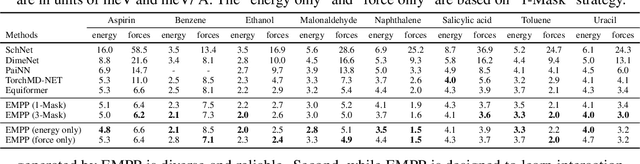

Equivariant Masked Position Prediction for Efficient Molecular Representation

Feb 12, 2025

Abstract: Graph neural networks (GNNs) have shown considerable promise in computational chemistry. However, the limited availability of molecular data raises concerns regarding GNNs' ability to effectively capture the fundamental principles of physics and chemistry, which constrains their generalization capabilities. To address this challenge, we introduce a novel self-supervised approach termed Equivariant Masked Position Prediction (EMPP), grounded in intramolecular potential and force theory. Unlike conventional attribute masking techniques, EMPP formulates a nuanced position prediction task that is better defined and enhances the learning of quantum mechanical features. EMPP also bypasses the approximation of the Gaussian mixture distribution commonly used in denoising methods, allowing for more accurate acquisition of physical properties. Experimental results indicate that EMPP significantly enhances the performance of advanced molecular architectures, surpassing state-of-the-art self-supervised approaches. Our code is released at https://github.com/ajy112/EMPP.

* 24 pages, 6 figures

Multi-View Partial Point Cloud Challenge 2021 on Completion and Registration: Methods and Results

Dec 22, 2021

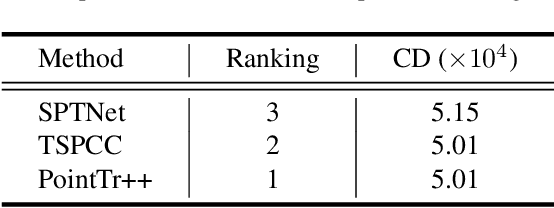

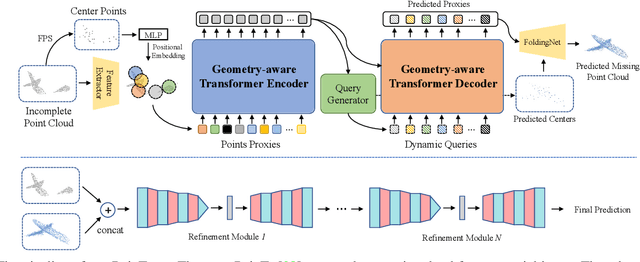

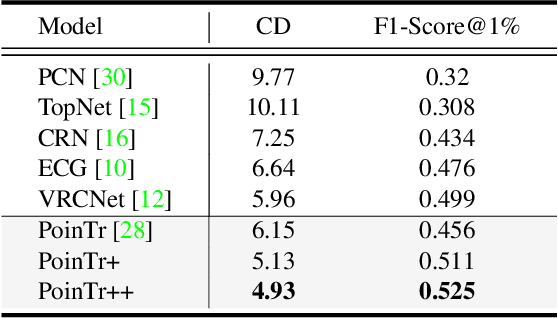

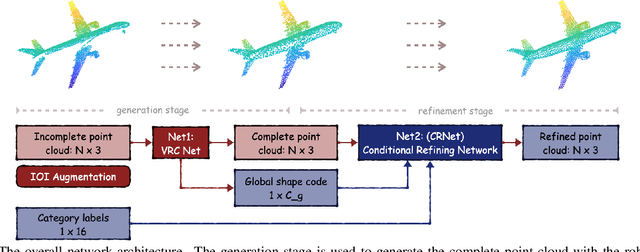

Abstract: As real-scanned point clouds are mostly partial due to occlusions and viewpoints, reconstructing complete 3D shapes based on incomplete observations becomes a fundamental problem for computer vision. With a single incomplete point cloud, it becomes the partial point cloud completion problem. Given multiple different observations, 3D reconstruction can be addressed by performing partial-to-partial point cloud registration. Recently, a large-scale Multi-View Partial (MVP) point cloud dataset has been released, which consists of over 100,000 high-quality virtual-scanned partial point clouds. Based on the MVP dataset, this paper reports methods and results in the Multi-View Partial Point Cloud Challenge 2021 on Completion and Registration. In total, 128 participants registered for the competition, and 31 teams made valid submissions. The top-ranked solutions will be analyzed, and then we will discuss future research directions.

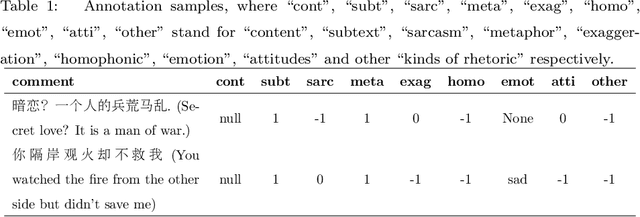

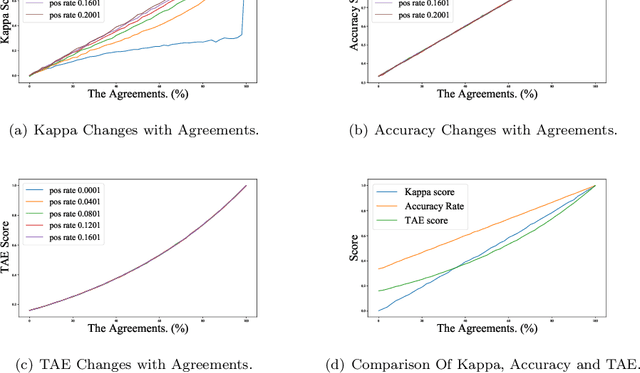

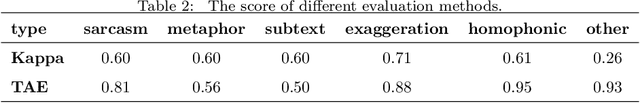

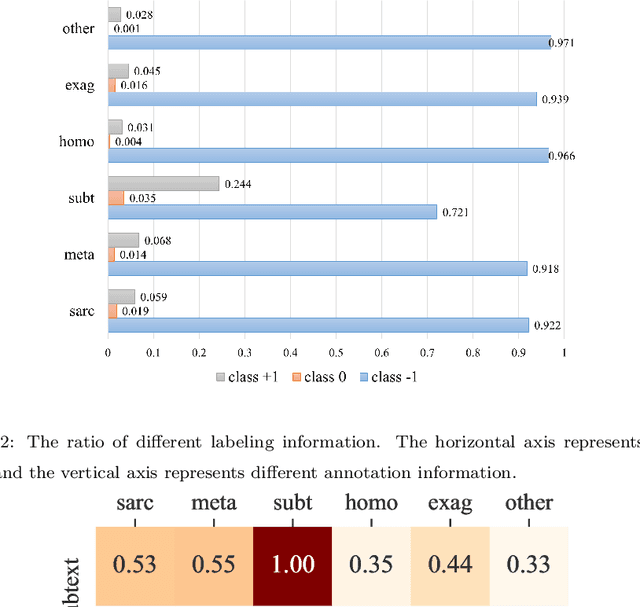

SASICM A Multi-Task Benchmark For Subtext Recognition

Jul 04, 2021

Abstract: Subtext is a kind of deep semantics which can be acquired after one or more rounds of expression transformation. As a popular way of expressing one's intentions, it is well worth studying. In this paper, we try to make computers understand whether there is a subtext by means of machine learning. We build a Chinese dataset whose source data comes from popular social media (e.g. Weibo, Netease Music, Zhihu, and Bilibili). In addition, we build a baseline model called SASICM to deal with subtext recognition. The F1 score of SASICMg, whose pretrained model is GloVe, is as high as 64.37%. This is 3.97% higher than that of the BERT-based model; 12.7% higher on average than that of traditional methods, including support vector machine, logistic regression classifier, maximum entropy classifier, naive Bayes classifier, and decision tree; and 2.39% higher than that of the state of the art, including MARIN and BTM. The F1 score of SASICMBERT, whose pretrained model is BERT, is 65.12%, which is 0.75% higher than that of SASICMg. The accuracy rates of SASICMg and SASICMBERT are 71.16% and 70.76%, respectively, which are competitive with those of the other methods mentioned above.
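The reported margins can be turned into the baseline F1 scores they imply. The short sketch below does that arithmetic under one stated assumption that the abstract does not confirm: each "higher than" figure is an absolute difference in percentage points rather than a relative improvement. The dictionary keys are illustrative labels, not names from the paper.

```python
# F1 score of the GloVe-based SASICM variant, in percent, as reported in the abstract.
f1_sasicm_g = 64.37

# Reported margins over each comparison group (assumed to be percentage points).
margins = {"bert_based": 3.97, "traditional_avg": 12.7, "prior_sota": 2.39}

# Implied baseline F1 = reported F1 minus the reported margin.
implied = {name: round(f1_sasicm_g - m, 2) for name, m in margins.items()}

# The BERT-based SASICM variant is reported as 0.75 higher than the GloVe one.
f1_sasicm_bert = round(f1_sasicm_g + 0.75, 2)

for name, value in implied.items():
    print(f"{name}: {value:.2f}")
print(f"sasicm_bert: {f1_sasicm_bert:.2f}")
```

Under that assumption the implied baselines are 60.40 (BERT-based), 51.67 (traditional average), and 61.98 (prior state of the art), and the 0.75 margin reproduces the reported 65.12 for the BERT-based SASICM variant.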

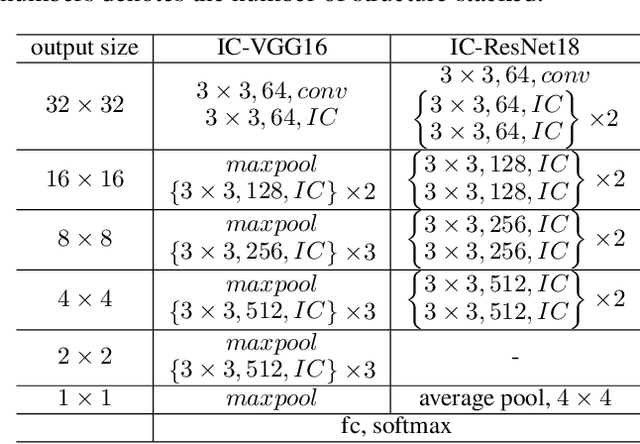

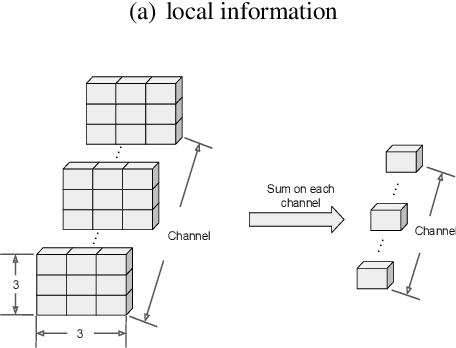

IC Networks: Remodeling the Basic Unit for Convolutional Neural Networks

Feb 06, 2021

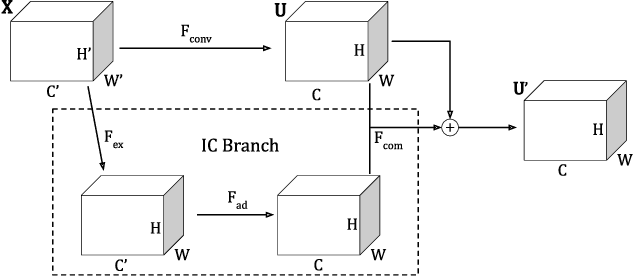

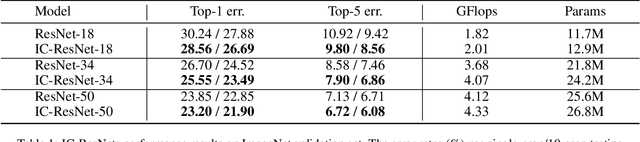

Abstract: Convolutional neural networks (CNNs) are a class of artificial neural networks widely used in computer vision tasks. Most CNNs achieve excellent performance by stacking certain types of basic units. In addition to increasing the depth and width of the network, designing more effective basic units has become an important research topic. Inspired by the elastic collision model in physics, we present a general structure which can be integrated into existing CNNs to improve their performance. We term it the "Inter-layer Collision" (IC) structure. Compared to the traditional convolution structure, the IC structure introduces nonlinearity and feature recalibration in the linear convolution operation, which can capture more fine-grained features. In addition, a new training method, namely weak logit distillation (WLD), is proposed to speed up the training of IC networks by extracting knowledge from pre-trained basic models. In the ImageNet experiment, we integrate the IC structure into ResNet-50 and reduce the top-1 error from 22.38% to 21.75%, which also matches the top-1 error of ResNet-100 (21.75%) with nearly half the FLOPs.

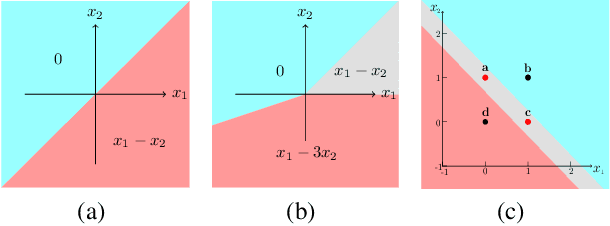

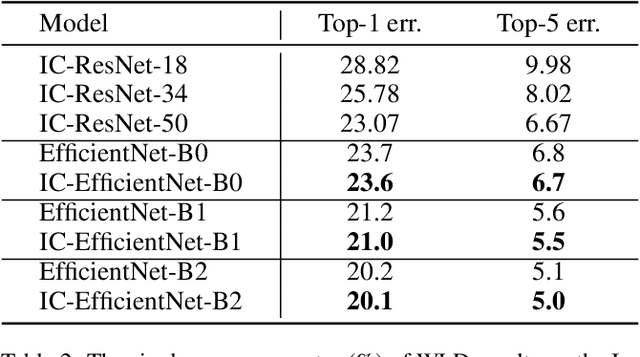

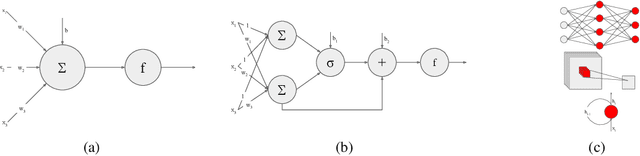

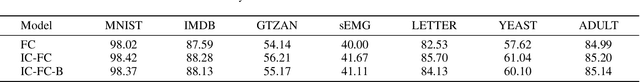

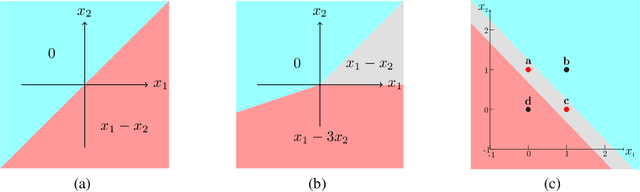

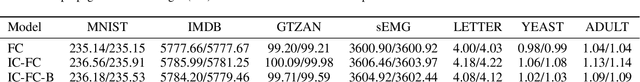

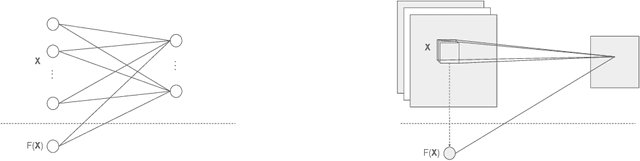

IC Neuron: An Efficient Unit to Construct Neural Networks

Nov 23, 2020

Abstract: As a popular machine learning method, neural networks can be used to solve many complex tasks. Their strong generalization ability comes from the representation ability of the basic neuron model. The most popular neuron is the MP neuron, which uses a linear transformation and a non-linear activation function to process the input successively. Inspired by the elastic collision model in physics, we propose a new neuron model that can represent more complex distributions. We term it the Inter-layer Collision (IC) neuron. The IC neuron divides the input space into multiple subspaces used to represent different linear transformations. This operation enhances non-linear representation ability and emphasizes some useful input features for the given task. We build the IC networks by integrating the IC neurons into the fully-connected (FC), convolutional, and recurrent structures. The IC networks outperform the traditional networks in a wide range of experiments. We believe that the IC neuron can be a basic unit to build network structures.
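For reference, the baseline MP neuron described above (a linear transformation followed by a non-linear activation) can be sketched in a few lines. This shows only the conventional neuron, not the IC neuron, whose exact form the abstract does not give. The ReLU activation and the example weights are assumptions chosen for illustration.

```python
import numpy as np

def mp_neuron(x, w, b):
    """Conventional neuron: linear transformation, then a non-linear activation."""
    z = np.dot(w, x) + b       # linear transformation of the input
    return np.maximum(z, 0.0)  # ReLU, picked here as an example activation

x = np.array([1.0, -2.0])   # made-up input
w = np.array([0.5, 0.25])   # made-up weights

active = mp_neuron(x, w, b=0.1)    # pre-activation 0.1, passed through unchanged
clipped = mp_neuron(x, w, b=-1.0)  # pre-activation -1.0, clipped to zero
print(active, clipped)
```

With ReLU, this neuron already splits the input space into two half-spaces (active and clipped). The abstract describes the IC neuron as dividing the input space into multiple subspaces, each representing a different linear transformation.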

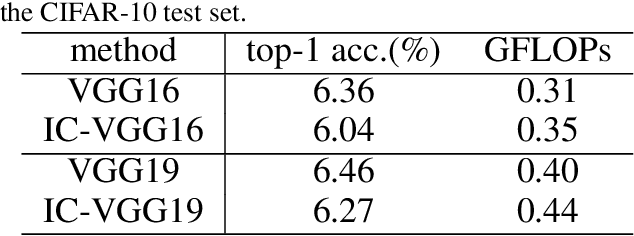

Inter-layer Collision Networks

Nov 19, 2019

Abstract: Deeper neural networks are hard to train. Inspired by the elastic collision model in physics, we present a universal structure that could be integrated into existing network structures to speed up the training process and eventually increase their generalization ability. We apply our structure to Convolutional Neural Networks (CNNs) to form a new structure, which we term the "Inter-layer Collision" (IC) structure. The IC structure provides the deeper layer a better representation of the input features. We evaluate the IC structure on CIFAR10 and ImageNet by integrating it into existing state-of-the-art CNNs. Our experiment shows that the proposed IC structure can effectively increase the accuracy and convergence speed.
