Fengshan Liu

IC Networks: Remodeling the Basic Unit for Convolutional Neural Networks

Feb 06, 2021

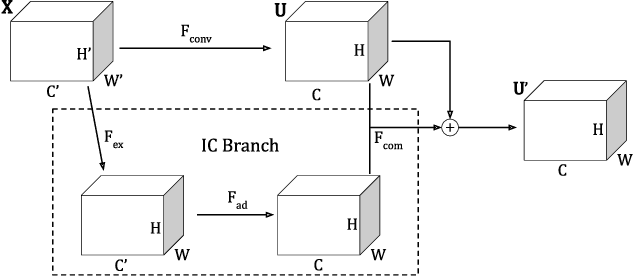

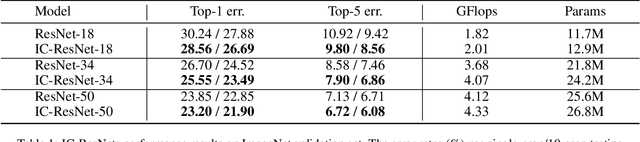

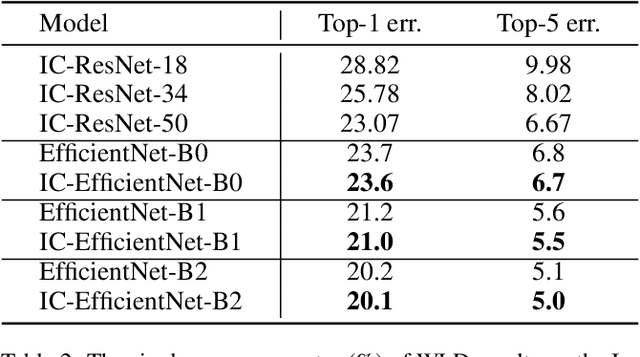

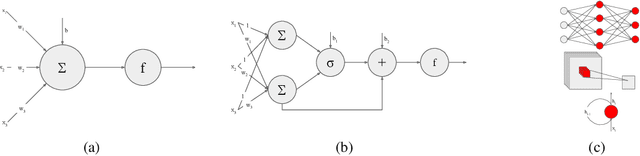

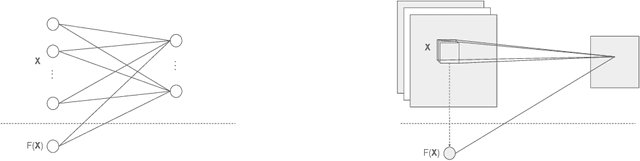

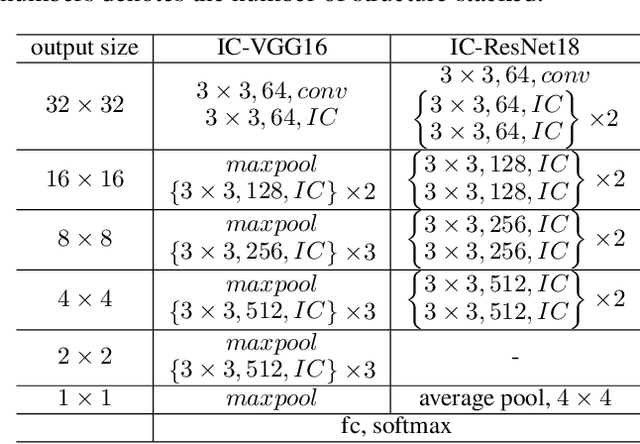

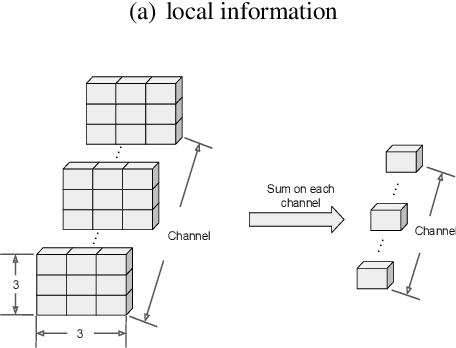

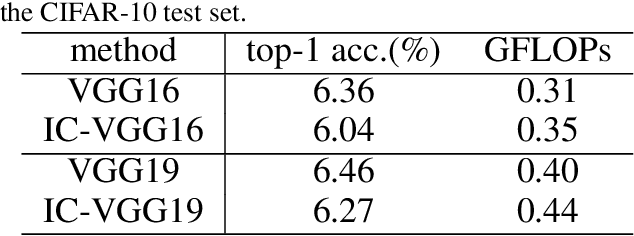

Abstract: Convolutional neural networks (CNNs) are a class of artificial neural networks widely used in computer vision tasks. Most CNNs achieve excellent performance by stacking certain types of basic units. In addition to increasing the depth and width of the network, designing more effective basic units has become an important research topic. Inspired by the elastic collision model in physics, we present a general structure that can be integrated into existing CNNs to improve their performance. We term it the "Inter-layer Collision" (IC) structure. Compared to the traditional convolution structure, the IC structure introduces nonlinearity and feature recalibration into the linear convolution operation, which allows it to capture more fine-grained features. In addition, a new training method, namely weak logit distillation (WLD), is proposed to speed up the training of IC networks by extracting knowledge from pre-trained basic models. In the ImageNet experiment, we integrate the IC structure into ResNet-50 and reduce the top-1 error from 22.38% to 21.75%, which matches the top-1 error of ResNet-100 (21.75%) with nearly half the FLOPs.

IC Neuron: An Efficient Unit to Construct Neural Networks

Nov 23, 2020

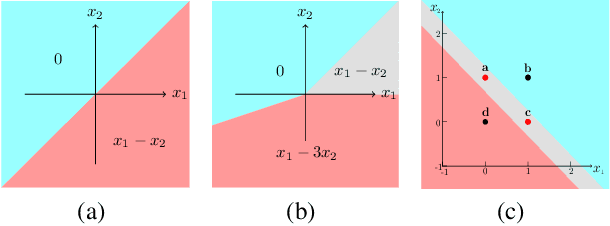

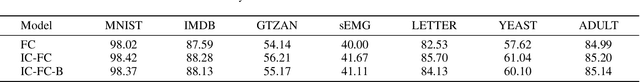

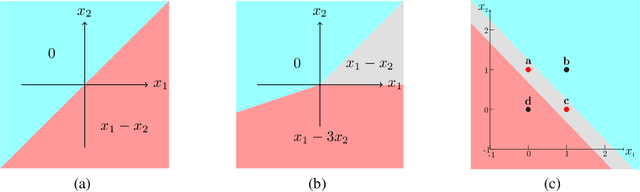

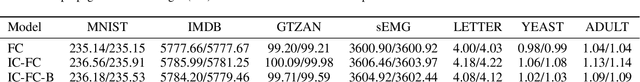

Abstract: As a popular machine learning method, neural networks can be used to solve many complex tasks. Their strong generalization ability comes from the representation ability of the basic neuron model. The most popular neuron is the MP neuron, which processes the input with a linear transformation followed by a non-linear activation function. Inspired by the elastic collision model in physics, we propose a new neuron model that can represent more complex distributions. We term it the Inter-layer Collision (IC) neuron. The IC neuron divides the input space into multiple subspaces used to represent different linear transformations. This operation enhances the non-linear representation ability and emphasizes input features that are useful for the given task. We build the IC networks by integrating the IC neurons into the fully-connected (FC), convolutional, and recurrent structures. The IC networks outperform the traditional networks in a wide range of experiments. We believe that the IC neuron can be a basic unit to build network structures.
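For readers unfamiliar with the baseline the abstract compares against, the MP neuron it describes (a linear transformation followed by a non-linear activation) can be sketched as below. This is only an illustration of that baseline, not the IC neuron, whose formulation the abstract does not give. The names `mp_neuron` and `relu`, the bias term `b`, and the default choice of activation are assumptions made for this example.

```python
import math


def relu(z):
    """Rectified linear unit, one common choice of non-linear activation."""
    return max(0.0, z)


def mp_neuron(x, w, b=0.0, activation=math.tanh):
    """Hypothetical helper: an MP-style neuron.

    Applies a linear transformation (dot product plus bias) and then a
    non-linear activation, in that order.
    """
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    # Step 1: linear transformation of the input.
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    # Step 2: non-linear activation applied to the linear output.
    return activation(z)


if __name__ == "__main__":
    # Example input, weights, and bias chosen arbitrarily for illustration.
    print(mp_neuron([1.0, 2.0], [1.0, 1.0], b=-1.0, activation=relu))
```

With a fixed activation, such a neuron applies a single linear transformation to the whole input space; the abstract contrasts this with the IC neuron, which it says divides the input space into multiple subspaces that represent different linear transformations.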

Inter-layer Collision Networks

Nov 19, 2019

Abstract: Deeper neural networks are hard to train. Inspired by the elastic collision model in physics, we present a universal structure that can be integrated into existing network structures to speed up the training process and eventually increase their generalization ability. We apply our structure to Convolutional Neural Networks (CNNs) to form a new structure, which we term the "Inter-layer Collision" (IC) structure. The IC structure provides the deeper layers with a better representation of the input features. We evaluate the IC structure on CIFAR10 and ImageNet by integrating it into existing state-of-the-art CNNs. Our experiments show that the proposed IC structure can effectively increase accuracy and convergence speed.
