Jun Baba

From Metrics to Meaning: Insights from a Mixed-Methods Field Experiment on Retail Robot Deployment

Jan 05, 2026

Abstract:We report a mixed-methods field experiment of a conversational service robot deployed under everyday staffing discretion in a live bedding store. Over 12 days we alternated three conditions, Baseline (no robot), Robot-only, and Robot+Fixture, and video-annotated the service funnel from passersby to purchase. An explanatory sequential design then used six post-experiment staff interviews to interpret the quantitative patterns. Quantitatively, the robot increased stopping per passerby (highest with the fixture), yet clerk-led downstream steps per stopper (clerk approach, store entry, assisted experience, and purchase) decreased. Interviews explained this divergence: clerks avoided interrupting ongoing robot-customer talk, struggled with ambiguous timing amid conversational latency, and noted child-centered attraction that often satisfied curiosity at the doorway. The fixture amplified visibility but also anchored encounters at the threshold, creating a well-defined micro-space where needs could "close" without moving inside. We synthesize these strands into an integrative account from the initial show of interest on the part of a customer to their entering the store and derive actionable guidance. The results advance the understanding of interactions between customers, staff members, and the robot and offer practical recommendations for deploying service robots in high-touch retail.

Hypothesis on the Functional Advantages of the Selection-Broadcast Cycle Structure: Global Workspace Theory and Dealing with a Real-Time World

May 20, 2025

Abstract:This paper discusses the functional advantages of the Selection-Broadcast Cycle structure proposed by Global Workspace Theory (GWT), inspired by human consciousness, particularly focusing on its applicability to artificial intelligence and robotics in dynamic, real-time scenarios. While previous studies often examined the Selection and Broadcast processes independently, this research emphasizes their combined cyclic structure and the resulting benefits for real-time cognitive systems. Specifically, the paper identifies three primary benefits: Dynamic Thinking Adaptation, Experience-Based Adaptation, and Immediate Real-Time Adaptation. This work highlights GWT's potential as a cognitive architecture suitable for sophisticated decision-making and adaptive performance in unsupervised, dynamic environments. It suggests new directions for the development and implementation of robust, general-purpose AI and robotics systems capable of managing complex, real-world tasks.

Proactive User Information Acquisition via Chats on User-Favored Topics

Apr 10, 2025

Abstract:Chat-oriented dialogue systems designed to provide tangible benefits, such as sharing the latest news or preventing frailty in senior citizens, often require Proactive acquisition of specific user Information via chats on user-faVOred Topics (PIVOT). This study proposes the PIVOT task, designed to advance the technical foundation for these systems. In this task, a system needs to acquire the answers of a user to predefined questions without making the user feel abrupt while engaging in a chat on a predefined topic. We found that even recent large language models (LLMs) show a low success rate in the PIVOT task. We constructed a dataset suitable for the analysis to develop more effective systems. Finally, we developed a simple but effective system for this task by incorporating insights obtained through the analysis of this dataset.

A Noise-Robust Turn-Taking System for Real-World Dialogue Robots: A Field Experiment

Mar 08, 2025

Abstract:Turn-taking is a crucial aspect of human-robot interaction, directly influencing conversational fluidity and user engagement. While previous research has explored turn-taking models in controlled environments, their robustness in real-world settings remains underexplored. In this study, we propose a noise-robust voice activity projection (VAP) model, based on a Transformer architecture, to enhance real-time turn-taking in dialogue robots. To evaluate the effectiveness of the proposed system, we conducted a field experiment in a shopping mall, comparing the VAP system with a conventional cloud-based speech recognition system. Our analysis covered both subjective user evaluations and objective behavioral analysis. The results showed that the proposed system significantly reduced response latency, leading to a more natural conversation where both the robot and users responded faster. The subjective evaluations suggested that faster responses contribute to a better interaction experience.

What Drives You to Interact?: The Role of User Motivation for a Robot in the Wild

Jan 09, 2025

Abstract:In this paper, we aim to understand how user motivation shapes human-robot interaction (HRI) in the wild. To explore this, we conducted a field study by deploying a fully autonomous conversational robot in a shopping mall over two days. Through sequential video analysis, we identified five patterns of interaction fluency (Smooth, Awkward, Active, Messy, and Quiet), four types of user motivation for interacting with the robot (Function, Experiment, Curiosity, and Education), and user positioning towards the robot. We further analyzed how these motivations and positioning influence interaction fluency. Our findings suggest that incorporating users' motivation types into the design of robot behavior can enhance interaction fluency, engagement, and user satisfaction in real-world HRI scenarios.

User Willingness-aware Sales Talk Dataset

Dec 27, 2024

Abstract:User willingness is a crucial element in the sales talk process that affects the achievement of the salesperson's or sales system's objectives. Despite the importance of user willingness, to the best of our knowledge, no previous study has addressed the development of automated sales talk dialogue systems that explicitly consider user willingness. A major barrier is the lack of sales talk datasets with reliable user willingness data. Thus, in this study, we developed a user willingness-aware sales talk collection by leveraging the ecological validity concept, which is discussed in the field of human-computer interaction. Our approach focused on three types of user willingness essential in real sales interactions. We created a dialogue environment that closely resembles real-world scenarios to elicit natural user willingness, with participants evaluating their willingness at the utterance level from multiple perspectives. We analyzed the collected data to gain insights into practical user willingness-aware sales talk strategies. In addition, as a practical application of the constructed dataset, we developed and evaluated a sales dialogue system aimed at enhancing the user's intent to purchase.

RetailOpt: An Opt-In, Easy-to-Deploy Trajectory Estimation System Leveraging Smartphone Motion Data and Retail Facility Information

Apr 19, 2024

Abstract:We present RetailOpt, a novel opt-in, easy-to-deploy system for tracking customer movements in indoor retail environments. The system utilizes information presently accessible to customers through smartphones and retail apps: motion data, store map, and purchase records. The approach eliminates the need for additional hardware installations/maintenance and ensures customers maintain full control of their data. Specifically, RetailOpt first employs inertial navigation to recover relative trajectories from smartphone motion data. The store map and purchase records are then cross-referenced to identify a list of visited shelves, providing anchors to localize the relative trajectories in a store through continuous and discrete optimization. We demonstrate the effectiveness of our system through systematic experiments in five diverse environments. The proposed system, if successful, would produce accurate customer movement data, essential for a broad range of retail applications, including customer behavior analysis and in-store navigation. The potential application could also extend to other domains such as entertainment and assistive technologies.

Influence of collaborative customer service by service robots and clerks in bakery stores

Dec 20, 2022

Abstract:In recent years, various service robots have been introduced in stores as recommendation systems. Previous studies attempted to increase the influence of these robots by improving their social acceptance and trust. However, when such service robots recommend a product to customers in real environments, the effect on the customers is influenced not only by the robot itself, but also by the social influence of the surrounding people such as store clerks. Therefore, leveraging the social influence of the clerks may increase the influence of the robots on the customers. Hence, we compared the influence of robots with and without collaborative customer service between the robots and clerks in two bakery stores. The experimental results showed that collaborative customer service increased the purchase rate of the recommended bread and improved the impression regarding the robot and store experience of the customers. Because the results also showed that the workload required for the clerks to collaborate with the robot was not high, this study suggests that all stores with service robots may show high effectiveness in introducing collaborative customer service.

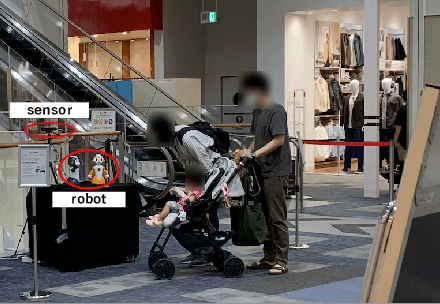

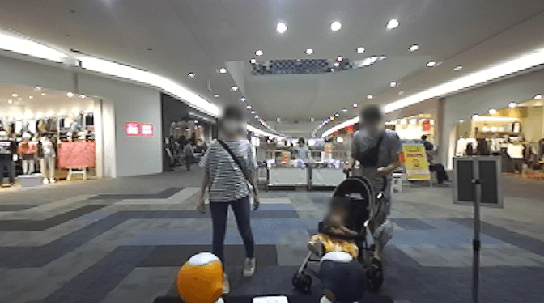

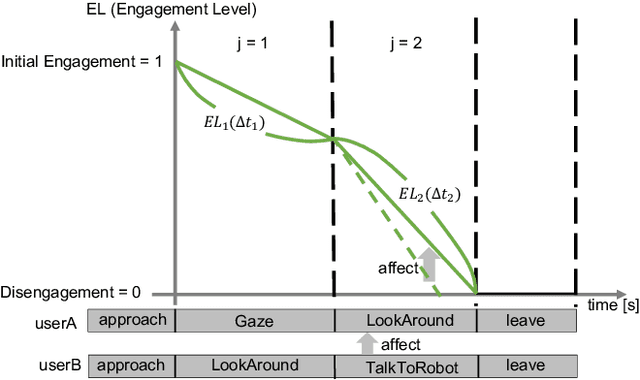

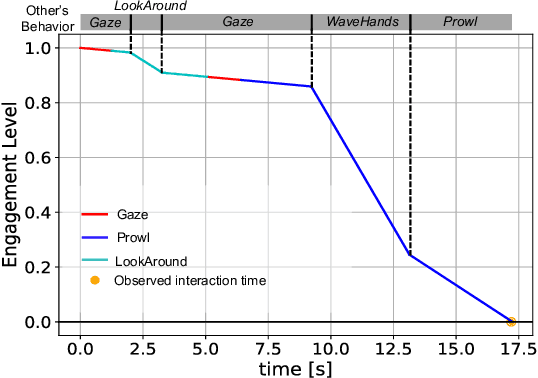

An Estimation Framework for Passerby Engagement Interacting with Social Robots

Jun 06, 2022

Abstract:Social robots are expected to be a human labor support technology, and one application of them is an advertising medium in public spaces. When social robots provide information, such as recommended shops, adaptive communication according to the user's state is desired. User engagement, which is also defined as the level of interest in the robot, is likely to play an important role in adaptive communication. Therefore, in this paper, we propose a new framework to estimate user engagement. The proposed method focuses on four unsolved open problems: multi-party interactions, process of state change in engagement, difficulty in annotating engagement, and interaction dataset in the real world. The accuracy of the proposed method for estimating engagement was evaluated using interaction duration. The results show that the interaction duration can be accurately estimated by considering the influence of the behaviors of other people; this also implies that the proposed model accurately estimates the level of engagement during interaction with the robot.

Decoupling Speaker-Independent Emotions for Voice Conversion Via Source-Filter Networks

Oct 04, 2021

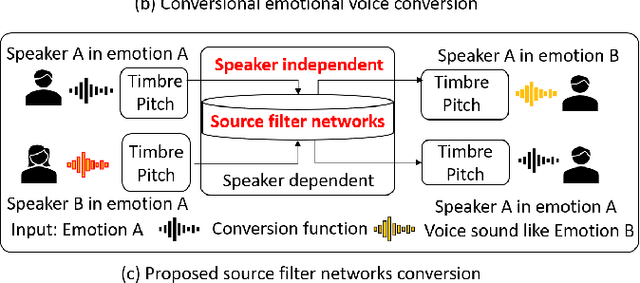

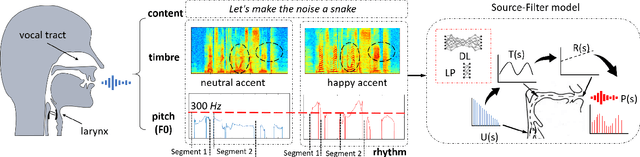

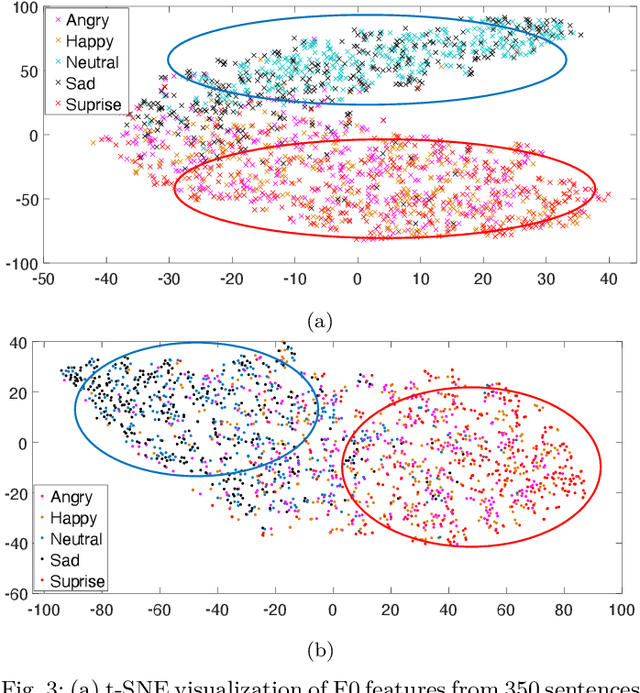

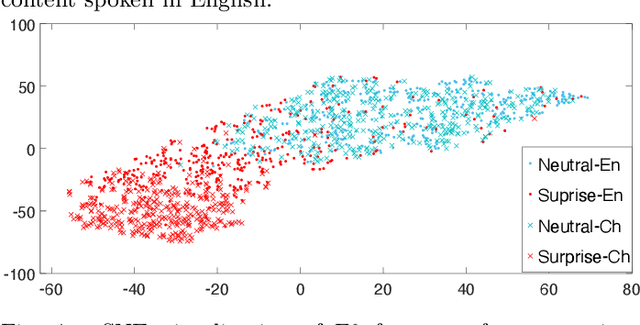

Abstract:Emotional voice conversion (VC) aims to convert a neutral voice to an emotional (e.g. happy) one while retaining the linguistic information and speaker identity. We note that the decoupling of emotional features from other speech information (such as speaker, content, etc.) is the key to achieving remarkable performance. Some recent attempts at speech representation decoupling on neutral speech do not work well on emotional speech, due to the more complex acoustic properties involved in the latter. To address this problem, here we propose a novel Source-Filter-based Emotional VC model (SFEVC) to achieve proper filtering of speaker-independent emotion features from both the timbre and pitch features. Our SFEVC model consists of multi-channel encoders, emotion separate encoders, and one decoder. Note that all encoder modules adopt a designed information bottleneck auto-encoder. Additionally, to further improve the conversion quality for various emotions, a novel two-stage training strategy based on the 2D Valence-Arousal (VA) space is proposed. Experimental results show that the proposed SFEVC along with a two-stage training strategy outperforms all baselines and achieves state-of-the-art performance in speaker-independent emotional VC with nonparallel data.
