Koichiro Yoshino

WarrantScore: Modeling Warrants between Claims and Evidence for Substantiation Evaluation in Peer Reviews

Jan 24, 2026

Abstract:The scientific peer-review process is facing a shortage of human resources due to the rapid growth in the number of submitted papers. The use of language models to reduce the human cost of peer review has been actively explored as a potential solution to this challenge. A method has been proposed to evaluate the level of substantiation in scientific reviews in a manner that is interpretable by humans. This method extracts the core components of an argument, claims and evidence, and assesses the level of substantiation based on the proportion of claims supported by evidence. The level of substantiation refers to the extent to which claims are based on objective facts. However, when assessing the level of substantiation, simply detecting the presence or absence of supporting evidence for a claim is insufficient; it is also necessary to accurately assess the logical inference between a claim and its evidence. We propose a new evaluation metric for scientific review comments that assesses the logical inference between claims and evidence. Experimental results show that the proposed method achieves a higher correlation with human scores than conventional methods, indicating its potential to better support the efficiency of the peer-review process.
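As a rough illustration of the earlier approach this abstract describes (scoring substantiation as the proportion of claims supported by evidence), a minimal Python sketch might look like the following. The `Claim` class and `substantiation_score` function are hypothetical names introduced here, not taken from the paper, and the sketch does not reproduce the proposed metric that also assesses the logical inference between a claim and its evidence.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    """A claim extracted from a review, with any evidence linked to it (hypothetical structure)."""
    text: str
    evidence: list[str] = field(default_factory=list)


def substantiation_score(claims: list[Claim]) -> float:
    """Return the fraction of claims that have at least one piece of evidence.

    Assumption: a review with no claims scores 0.0; the abstract does not say how this case is handled.
    """
    if not claims:
        return 0.0
    supported = sum(1 for claim in claims if claim.evidence)
    return supported / len(claims)


review = [
    Claim("The evaluation is limited.", ["Only one dataset is used."]),
    Claim("The writing is unclear."),
    Claim("The baseline is weak.", ["No comparison with recent models."]),
    Claim("The idea is not novel."),
]
score = substantiation_score(review)
```

This presence-or-absence count is exactly what the abstract argues is insufficient on its own, since it ignores whether the evidence logically supports the claim.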

Pragmatic Theories Enhance Understanding of Implied Meanings in LLMs

Oct 30, 2025

Abstract:The ability to accurately interpret implied meanings plays a crucial role in human communication and language use, and language models are also expected to possess this capability. This study demonstrates that providing language models with pragmatic theories as prompts is an effective in-context learning approach for tasks that require understanding implied meanings. Specifically, we propose an approach in which an overview of pragmatic theories, such as Gricean pragmatics and Relevance Theory, is presented as a prompt to the language model, guiding it through a step-by-step reasoning process to derive a final interpretation. Experimental results showed that, compared to the baseline, which prompts intermediate reasoning without presenting pragmatic theories (0-shot Chain-of-Thought), our methods enabled language models to achieve up to 9.6% higher scores on pragmatic reasoning tasks. Furthermore, we show that even without explaining the details of pragmatic theories, merely mentioning their names in the prompt leads to a modest performance improvement (around 1-3%) in larger models compared to the baseline.

Disambiguating Reference in Visually Grounded Dialogues through Joint Modeling of Textual and Multimodal Semantic Structures

May 16, 2025

Abstract:Multimodal reference resolution, including phrase grounding, aims to understand the semantic relations between mentions and real-world objects. Phrase grounding between images and their captions is a well-established task. In contrast, for real-world applications, it is essential to integrate textual and multimodal reference resolution to unravel the reference relations within dialogue, especially in handling ambiguities caused by pronouns and ellipses. This paper presents a framework that unifies textual and multimodal reference resolution by mapping mention embeddings to object embeddings and selecting mentions or objects based on their similarity. Our experiments show that learning textual reference resolution, such as coreference resolution and predicate-argument structure analysis, positively affects performance in multimodal reference resolution. In particular, our model with coreference resolution performs better in pronoun phrase grounding than representative models for this task, MDETR and GLIP. Our qualitative analysis demonstrates that incorporating textual reference relations strengthens the confidence scores between mentions, including pronouns and predicates, and objects, which can reduce the ambiguities that arise in visually grounded dialogues.
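The similarity-based selection mentioned in this abstract can be pictured with a small Python sketch. It assumes cosine similarity and precomputed embeddings stored as plain lists of floats; the abstract does not state which similarity function or embedding model the framework uses, and `cosine` and `select_referent` are hypothetical names introduced here for illustration.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_referent(mention: list[float], candidates: dict[str, list[float]]) -> tuple[str, float]:
    """Pick the candidate (another mention or a visual object) most similar to the mention.

    Assumption: textual mentions and visual objects already live in one shared embedding space.
    """
    scored = ((name, cosine(mention, emb)) for name, emb in candidates.items())
    return max(scored, key=lambda pair: pair[1])


# Toy embeddings: the pronoun "it" is compared against one object and one earlier mention.
pronoun_it = [1.0, 0.0]
candidates = {
    "object:cup": [0.9, 0.1],
    "mention:she": [0.0, 1.0],
}
best_name, best_score = select_referent(pronoun_it, candidates)
```

Placing textual and visual candidates in the same dictionary mirrors the idea of treating coreference and phrase grounding as one selection problem, though the actual model architecture may differ substantially.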

Proactive User Information Acquisition via Chats on User-Favored Topics

Apr 10, 2025

Abstract:Chat-oriented dialogue systems designed to provide tangible benefits, such as sharing the latest news or preventing frailty in senior citizens, often require Proactive acquisition of specific user Information via chats on user-faVOred Topics (PIVOT). This study proposes the PIVOT task, designed to advance the technical foundation for these systems. In this task, a system needs to acquire a user's answers to predefined questions while engaging in a chat on a predefined topic, without the questions feeling abrupt to the user. We found that even recent large language models (LLMs) show a low success rate in the PIVOT task. We constructed a dataset suitable for analysis aimed at developing more effective systems. Finally, we developed a simple but effective system for this task by incorporating insights obtained through the analysis of this dataset.

ClaimBrush: A Novel Framework for Automated Patent Claim Refinement Based on Large Language Models

Oct 10, 2024

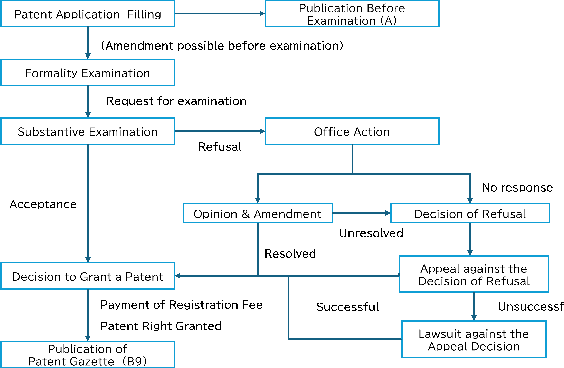

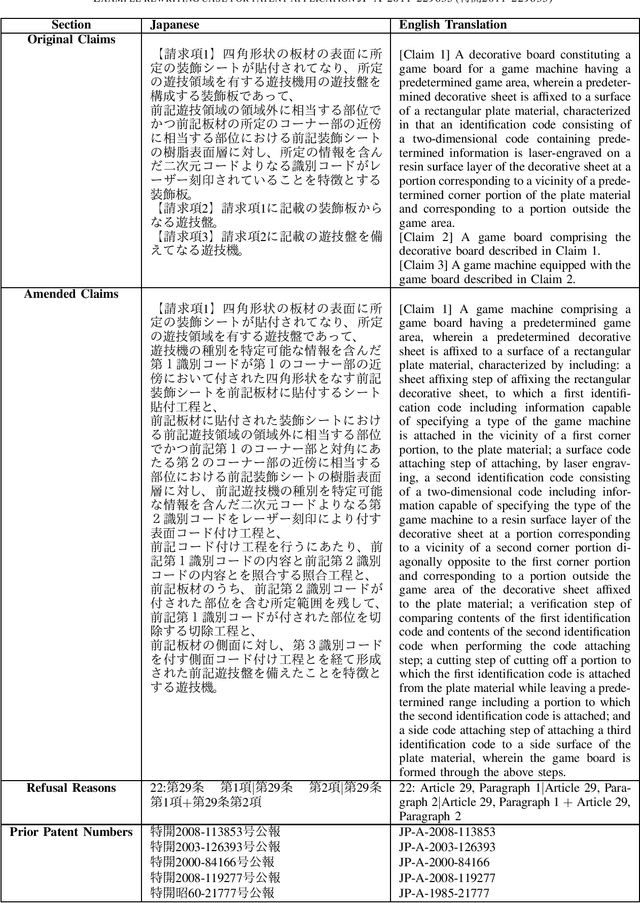

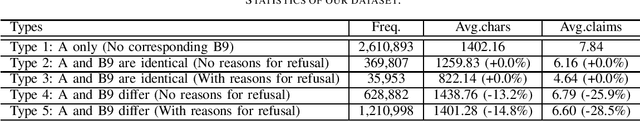

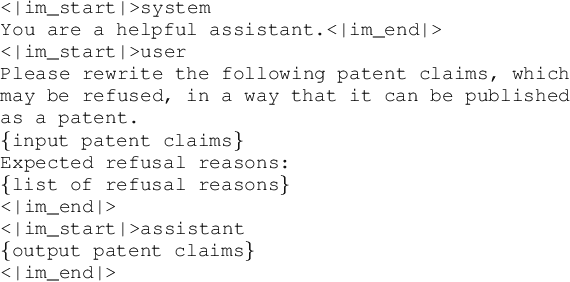

Abstract:Automatic refinement of patent claims in patent applications is crucial from the perspective of intellectual property strategy. In this paper, we propose ClaimBrush, a novel framework for automated patent claim refinement that includes a dataset and a rewriting model. We constructed a dataset for training and evaluating patent claim rewriting models by collecting a large number of actual patent claim rewriting cases from the patent examination process. Using the constructed dataset, we built an automatic patent claim rewriting model by fine-tuning a large language model. Furthermore, we enhanced the performance of the automatic patent claim rewriting model by applying preference optimization based on a prediction model of patent examiners' Office Actions. The experimental results showed that our proposed rewriting model outperformed heuristic baselines and zero-shot learning in state-of-the-art large language models. Moreover, preference optimization based on patent examiners' preferences boosted the performance of patent claim refinement.

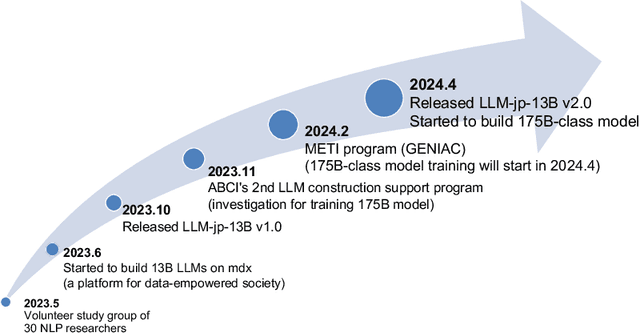

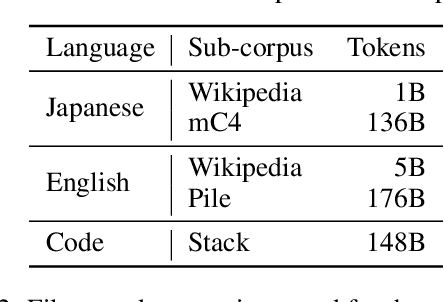

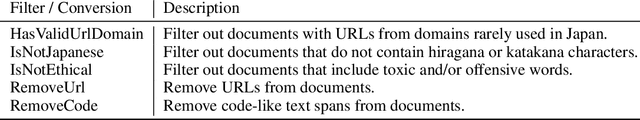

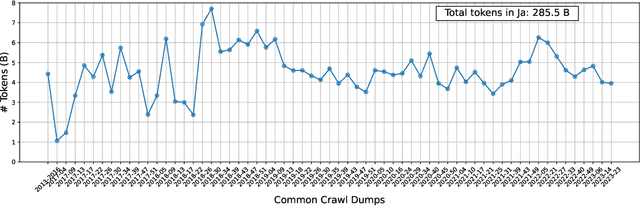

LLM-jp: A Cross-organizational Project for the Research and Development of Fully Open Japanese LLMs

Jul 04, 2024

Abstract:This paper introduces LLM-jp, a cross-organizational project for the research and development of Japanese large language models (LLMs). LLM-jp aims to develop open-source and strong Japanese LLMs, and as of this writing, more than 1,500 participants from academia and industry are working together for this purpose. This paper presents the background of the establishment of LLM-jp, summaries of its activities, and technical reports on the LLMs developed by LLM-jp. For the latest activities, visit https://llm-jp.nii.ac.jp/en/.

Rapport-Driven Virtual Agent: Rapport Building Dialogue Strategy for Improving User Experience at First Meeting

Jun 14, 2024

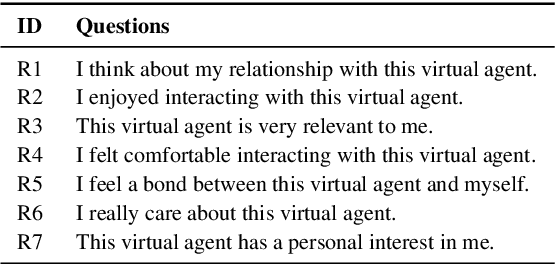

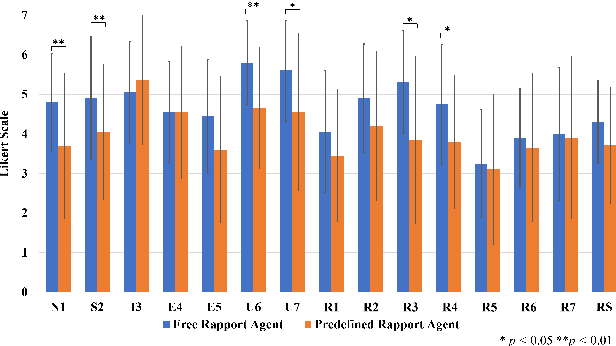

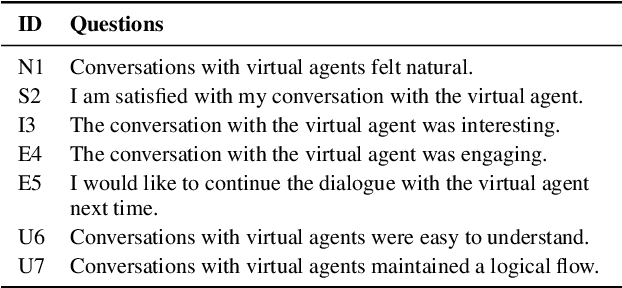

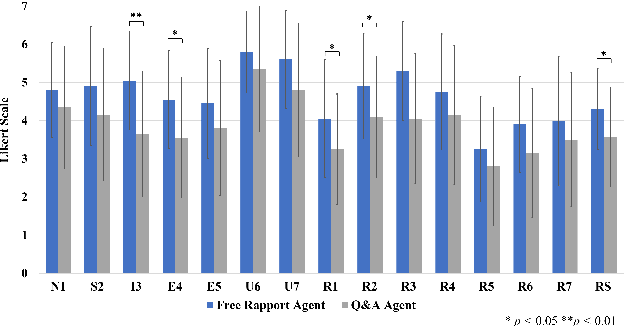

Abstract:Rapport is known as a conversational aspect focusing on relationship building, which influences outcomes in collaborative tasks. This study aims to establish human-agent rapport through small talk by using a rapport-building strategy. We implemented this strategy for the virtual agents based on dialogue strategies by prompting a large language model (LLM). In particular, we utilized two dialogue strategies, predefined sequence and free-form, to guide the dialogue generation framework. We conducted analyses based on human evaluations, examining correlations between total turns, utterance characters, rapport score, and user experience variables: naturalness, satisfaction, interest, engagement, and usability. We investigated correlations between rapport score and naturalness, satisfaction, engagement, and conversation flow. Our experimental results also indicated that using the free-form strategy to prompt rapport building achieved the best subjective scores.

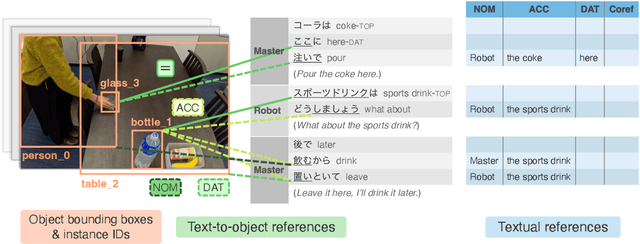

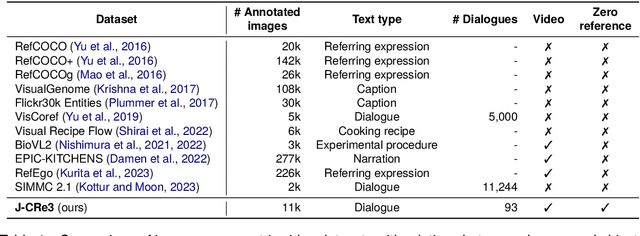

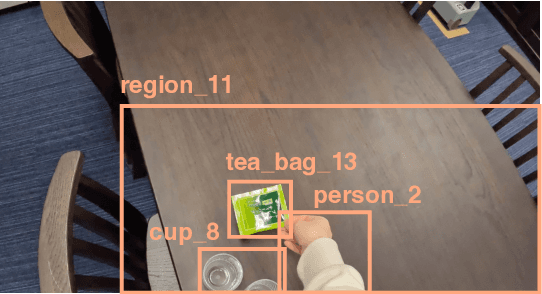

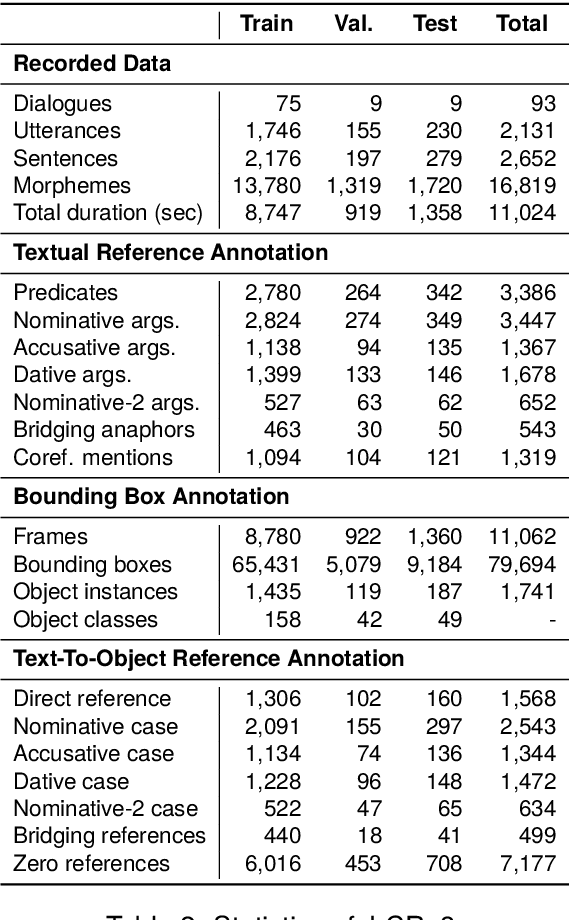

J-CRe3: A Japanese Conversation Dataset for Real-world Reference Resolution

Mar 28, 2024

Abstract:Understanding expressions that refer to the physical world is crucial for human-assisting systems in the real world, such as robots that must perform the actions users expect. In real-world reference resolution, a system must ground the verbal information that appears in user interactions to the visual information observed in egocentric views. To this end, we propose a multimodal reference resolution task and construct a Japanese Conversation dataset for Real-world Reference Resolution (J-CRe3). Our dataset contains egocentric video and dialogue audio of real-world conversations between two people acting as a master and an assistant robot at home. The dataset is annotated with crossmodal tags between phrases in the utterances and the object bounding boxes in the video frames. These tags include indirect reference relations, such as predicate-argument structures and bridging references, as well as direct reference relations. We also constructed an experimental model and clarified the challenges in multimodal reference resolution tasks.

A Gaze-grounded Visual Question Answering Dataset for Clarifying Ambiguous Japanese Questions

Mar 26, 2024

Abstract:Situated conversations that refer to visual information, as in visual question answering (VQA), often contain ambiguities caused by reliance on directive information. This problem is exacerbated because some languages, such as Japanese, often omit subjective or objective terms. Such ambiguities in questions are often clarified by the contexts in conversational situations, such as joint attention with a user or user gaze information. In this study, we propose the Gaze-grounded VQA dataset (GazeVQA), which clarifies ambiguous questions by focusing on a clarification process complemented by gaze information. We also propose a method that utilizes gaze target estimation results to improve the accuracy of GazeVQA tasks. Our experimental results showed that the proposed method improved the performance of a VQA system on GazeVQA in some cases, and they identified some typical problems of GazeVQA tasks that remain to be solved.

What's New? Identifying the Unfolding of New Events in Narratives

Feb 20, 2023

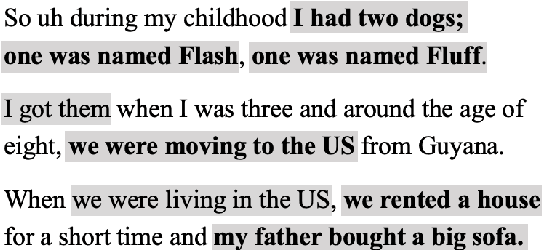

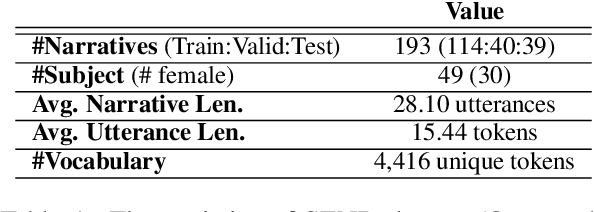

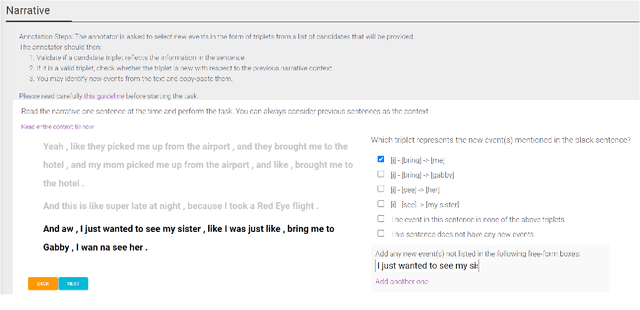

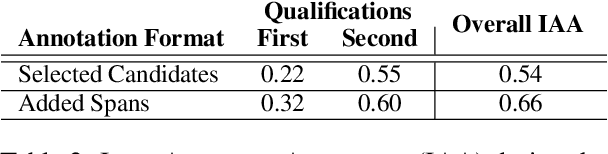

Abstract:Narratives include a rich source of events unfolding over time and context. Automatic understanding of these events may provide a summarised comprehension of the narrative for further computation (such as reasoning). In this paper, we study the Information Status (IS) of the events and propose a novel challenging task: the automatic identification of new events in a narrative. We define an event as a triplet of subject, predicate, and object. The event is categorized as new with respect to the discourse context and whether it can be inferred through commonsense reasoning. We annotated a publicly available corpus of narratives with the new events at sentence level using human annotators. We present the annotation protocol and a study aiming at validating the quality of the annotation and the difficulty of the task. We publish the annotated dataset, annotation materials, and machine learning baseline models for the task of new event extraction for narrative understanding.
