Yasutomo Kawanishi

View-aware Cross-modal Distillation for Multi-view Action Recognition

Nov 17, 2025

Abstract:The widespread use of multi-sensor systems has increased research in multi-view action recognition. While existing approaches in multi-view setups with fully overlapping sensors benefit from consistent view coverage, partially overlapping settings where actions are visible in only a subset of views remain underexplored. This challenge becomes more severe in real-world scenarios, as many systems provide only limited input modalities and rely on sequence-level annotations instead of dense frame-level labels. In this study, we propose View-aware Cross-modal Knowledge Distillation (ViCoKD), a framework that distills knowledge from a fully supervised multi-modal teacher to a modality- and annotation-limited student. ViCoKD employs a cross-modal adapter with cross-modal attention, allowing the student to exploit multi-modal correlations while operating with incomplete modalities. Moreover, we propose a View-aware Consistency module to address view misalignment, where the same action may appear differently or only partially across viewpoints. It enforces prediction alignment when the action is co-visible across views, guided by human-detection masks and confidence-weighted Jensen-Shannon divergence between their predicted class distributions. Experiments on the real-world MultiSensor-Home dataset show that ViCoKD consistently outperforms competitive distillation methods across multiple backbones and environments, delivering significant gains and surpassing the teacher model under limited conditions.
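The view-consistency idea in this abstract can be illustrated with a minimal sketch. The Jensen-Shannon divergence itself is standard; everything else here is an assumption made for illustration: the function name `view_consistency_loss`, the boolean co-visibility flags standing in for human-detection masks, and the choice of weighting by the product of each view's maximum class probability. The abstract does not specify how the confidence weight is computed.

```python
import math

def kl(p, q):
    """KL(p || q) in nats; terms with p_i == 0 contribute zero."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence between two discrete distributions."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def view_consistency_loss(p_a, p_b, visible_a, visible_b):
    """Hypothetical consistency term for one pair of views.

    visible_a / visible_b stand in for human-detection masks: the term is
    applied only when a person is detected in both views (co-visible).
    The confidence weight (product of max probabilities) is an assumption.
    """
    if not (visible_a and visible_b):
        return 0.0
    weight = max(p_a) * max(p_b)
    return weight * js_divergence(p_a, p_b)

# Two views that disagree while the person is visible in both.
loss = view_consistency_loss([0.8, 0.1, 0.1], [0.2, 0.7, 0.1], True, True)
```

With this weighting, a pair of confident but conflicting predictions is penalized more than a pair of uncertain ones, and pairs where either view has no detected person contribute nothing.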

MultiSensor-Home: A Wide-area Multi-modal Multi-view Dataset for Action Recognition and Transformer-based Sensor Fusion

Apr 03, 2025

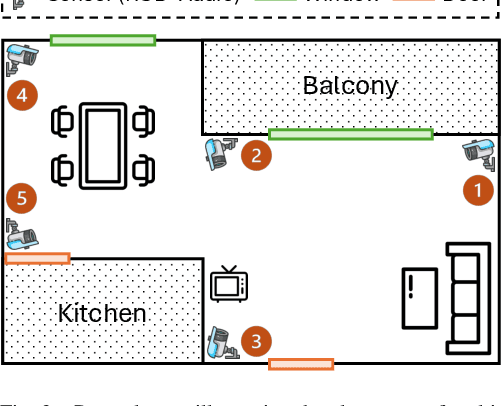

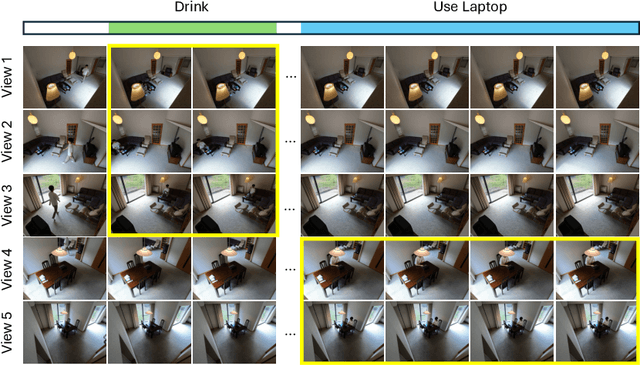

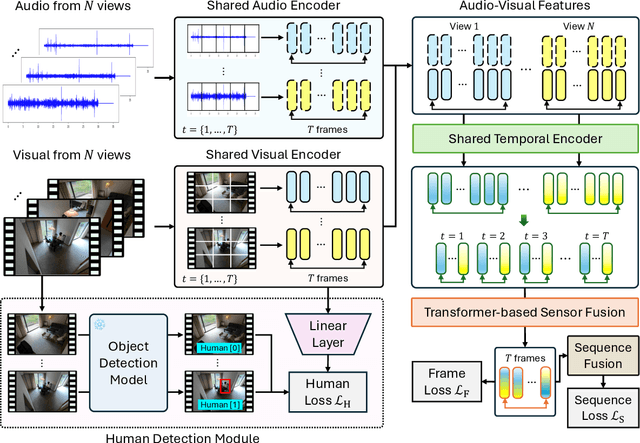

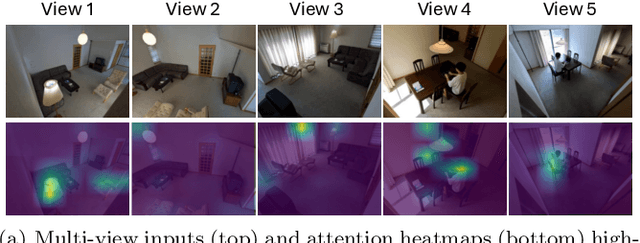

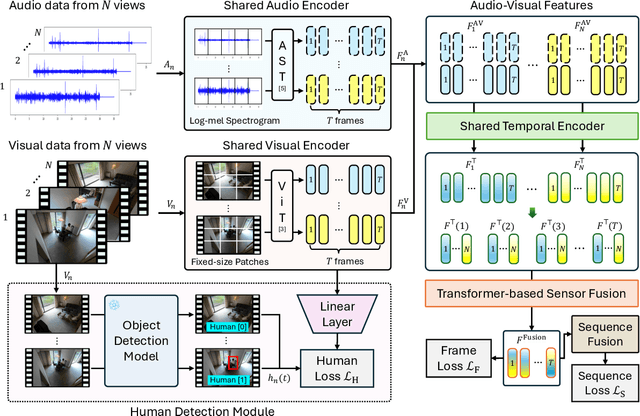

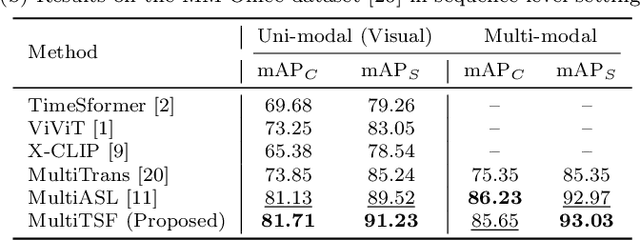

Abstract:Multi-modal multi-view action recognition is a rapidly growing field in computer vision, offering significant potential for applications in surveillance. However, current datasets often fail to address real-world challenges such as wide-area environmental conditions, asynchronous data streams, and the lack of frame-level annotations. Furthermore, existing methods face difficulties in effectively modeling inter-view relationships and enhancing spatial feature learning. In this study, we propose the Multi-modal Multi-view Transformer-based Sensor Fusion (MultiTSF) method and introduce the MultiSensor-Home dataset, a novel benchmark designed for comprehensive action recognition in home environments. The MultiSensor-Home dataset features untrimmed videos captured by distributed sensors, providing high-resolution RGB and audio data along with detailed multi-view frame-level action labels. The proposed MultiTSF method leverages a Transformer-based fusion mechanism to dynamically model inter-view relationships. The method also integrates an external human detection module to enhance spatial feature learning. Experiments on the MultiSensor-Home and MM-Office datasets demonstrate the superiority of MultiTSF over state-of-the-art methods. The quantitative and qualitative results highlight the effectiveness of the proposed method in advancing real-world multi-modal multi-view action recognition.

MultiTSF: Transformer-based Sensor Fusion for Human-Centric Multi-view and Multi-modal Action Recognition

Apr 03, 2025

Abstract:Action recognition from multi-modal and multi-view observations holds significant potential for applications in surveillance, robotics, and smart environments. However, existing methods often fall short of addressing real-world challenges such as diverse environmental conditions, strict sensor synchronization, and the need for fine-grained annotations. In this study, we propose the Multi-modal Multi-view Transformer-based Sensor Fusion (MultiTSF). The proposed method leverages a Transformer-based fusion mechanism to dynamically model inter-view relationships and capture temporal dependencies across multiple views. Additionally, we introduce a Human Detection Module to generate pseudo-ground-truth labels, enabling the model to prioritize frames containing human activity and enhance spatial feature learning. Comprehensive experiments conducted on our in-house MultiSensor-Home dataset and the existing MM-Office dataset demonstrate that MultiTSF outperforms state-of-the-art methods in both video sequence-level and frame-level action recognition settings.

Action Selection Learning for Multi-label Multi-view Action Recognition

Oct 04, 2024

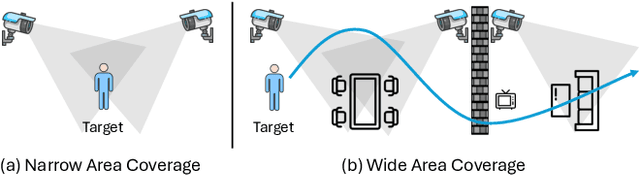

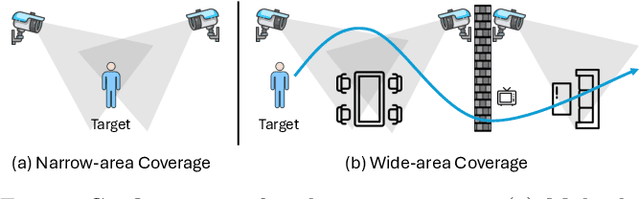

Abstract:Multi-label multi-view action recognition aims to recognize multiple concurrent or sequential actions from untrimmed videos captured by multiple cameras. Existing work has focused on multi-view action recognition in a narrow area with strong labels available, where the onset and offset of each action are labeled at the frame-level. This study focuses on real-world scenarios where cameras are distributed to capture a wide-range area with only weak labels available at the video-level. We propose the method named MultiASL (Multi-view Action Selection Learning), which leverages action selection learning to enhance view fusion by selecting the most useful information from different viewpoints. The proposed method includes a Multi-view Spatial-Temporal Transformer video encoder to extract spatial and temporal features from multi-viewpoint videos. Action Selection Learning is employed at the frame-level, using pseudo ground-truth obtained from weak labels at the video-level, to identify the most relevant frames for action recognition. Experiments in a real-world office environment using the MM-Office dataset demonstrate the superior performance of the proposed method compared to existing methods.

Tracking Small Birds by Detection Candidate Region Filtering and Detection History-aware Association

May 27, 2024

Abstract:This paper focuses on tracking birds that appear small in a panoramic video. When the tracked object is small in the image (small object tracking) and moves quickly, object detection and association suffer. To address these problems, we propose Adaptive Slicing Aided Hyper Inference (Adaptive SAHI), which reduces the candidate regions to which detection is applied, and Detection History-aware Similarity Criterion (DHSC), which accurately associates objects in consecutive frames based on the detection history. Experiments on the NUBird2022 dataset verify the effectiveness of the proposed method by showing improvements in both accuracy and speed.
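As a rough illustration of slicing-based inference with candidate-region filtering, the sketch below tiles a frame into overlapping slices and keeps only the slices that intersect a set of candidate regions. It is a minimal sketch under stated assumptions, not the paper's Adaptive SAHI: the function names, the tile and overlap parameters, and the idea of taking candidate regions as given boxes (for example, around earlier detections) are all hypothetical, since the abstract does not describe how candidate regions are chosen.

```python
def slice_boxes(width, height, tile, overlap):
    """Return overlapping (x0, y0, x1, y1) tiles covering the whole image."""
    step = tile - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than tile")

    def starts(length):
        # Regular grid of start offsets, plus a final tile flush with the edge.
        if length <= tile:
            return [0]
        offsets = list(range(0, length - tile, step))
        offsets.append(length - tile)
        return offsets

    return [(x, y, min(x + tile, width), min(y + tile, height))
            for y in starts(height) for x in starts(width)]

def intersects(a, b):
    """True if two (x0, y0, x1, y1) boxes overlap with positive area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]

def filter_tiles(tiles, candidate_regions):
    """Keep only tiles touching a candidate region (assumed to be given)."""
    return [t for t in tiles
            if any(intersects(t, r) for r in candidate_regions)]

tiles = slice_boxes(1000, 400, tile=400, overlap=100)
kept = filter_tiles(tiles, [(650, 50, 700, 100)])
```

Running the detector only on `kept` rather than on every tile is what would save time in such a scheme; here two of the three tiles survive because the candidate box lies in their overlap.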

One-Stage Open-Vocabulary Temporal Action Detection Leveraging Temporal Multi-scale and Action Label Features

Apr 30, 2024

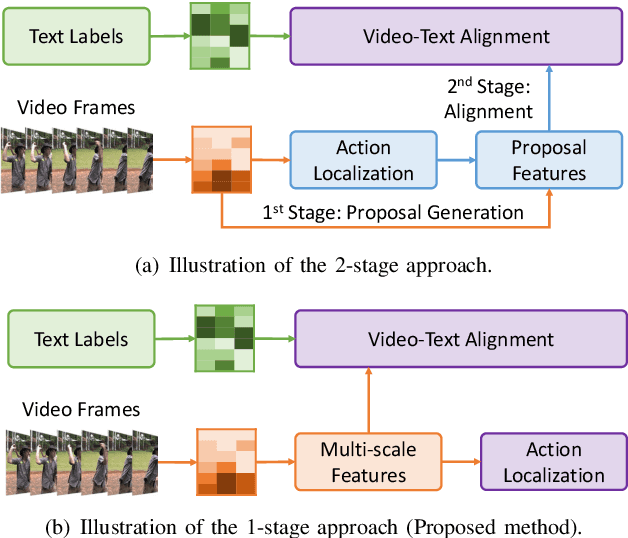

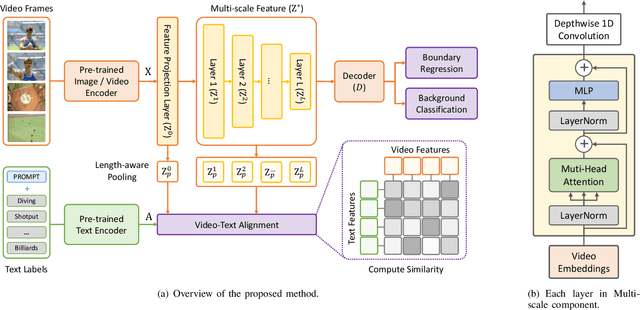

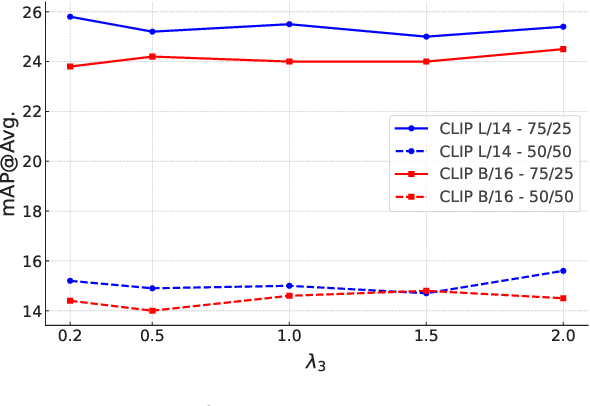

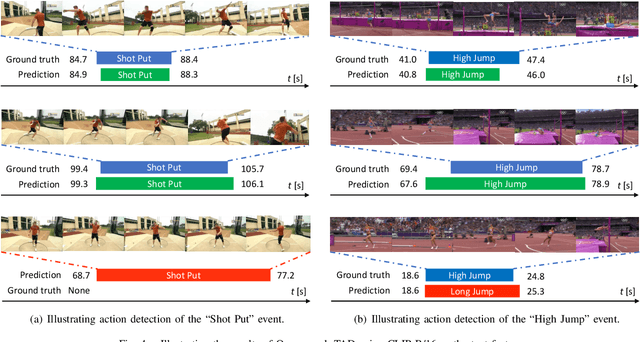

Abstract:Open-vocabulary Temporal Action Detection (Open-vocab TAD) is an advanced video analysis approach that expands Closed-vocabulary Temporal Action Detection (Closed-vocab TAD) capabilities. Closed-vocab TAD is typically confined to localizing and classifying actions based on a predefined set of categories. In contrast, Open-vocab TAD goes further and is not limited to these predefined categories. This is particularly useful in real-world scenarios where the variety of actions in videos can be vast and not always predictable. The prevalent methods in Open-vocab TAD typically employ a 2-stage approach, which involves generating action proposals and then identifying those actions. However, errors made during the first stage can adversely affect the subsequent action identification accuracy. Additionally, existing studies face challenges in handling actions of different durations owing to the use of fixed temporal processing methods. Therefore, we propose a 1-stage approach consisting of two primary modules: Multi-scale Video Analysis (MVA) and Video-Text Alignment (VTA). The MVA module captures actions at varying temporal resolutions, overcoming the challenge of detecting actions with diverse durations. The VTA module leverages the synergy between visual and textual modalities to precisely align video segments with corresponding action labels, a critical step for accurate action identification in Open-vocab scenarios. Evaluations on the widely recognized THUMOS14 and ActivityNet-1.3 datasets showed that the proposed method achieved superior results compared to other methods in both Open-vocab and Closed-vocab settings. This serves as a strong demonstration of the effectiveness of the proposed method in the TAD task.
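Aligning video segments with action labels is commonly done by comparing embeddings in a shared space. The sketch below shows that general idea with cosine similarity over toy vectors. It is an illustrative assumption rather than the VTA module itself: the function names are hypothetical, and in practice the segment and label features would come from learned video and text encoders, which are not shown.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def align_segments(segment_feats, label_feats):
    """For each segment feature, return (best label, similarity score)."""
    results = []
    for seg in segment_feats:
        scores = {name: cosine(seg, vec) for name, vec in label_feats.items()}
        best = max(scores, key=scores.get)
        results.append((best, scores[best]))
    return results

# Toy 2-D embeddings; real features would be high-dimensional.
labels = {"jump": [1.0, 0.0], "run": [0.0, 1.0]}
segments = [[0.9, 0.1], [0.2, 0.8]]
matches = align_segments(segments, labels)
```

Because labels enter only as vectors in `label_feats`, a label unseen during training can be scored at inference time by adding its text embedding to the dictionary, which is the property an open-vocabulary setting relies on.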

J-CRe3: A Japanese Conversation Dataset for Real-world Reference Resolution

Mar 28, 2024

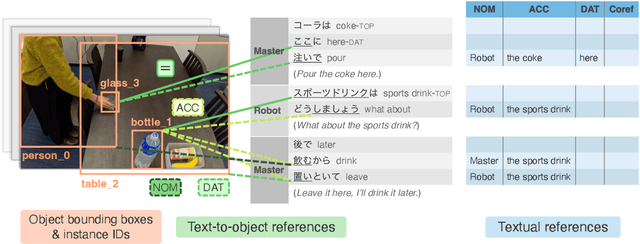

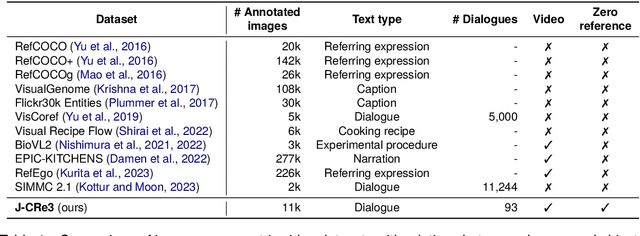

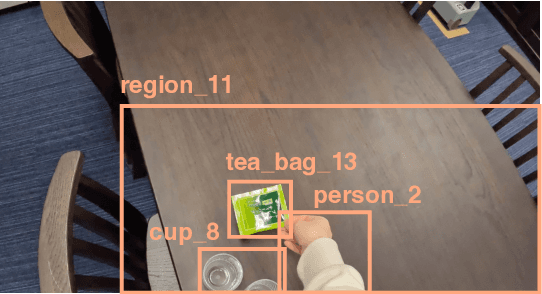

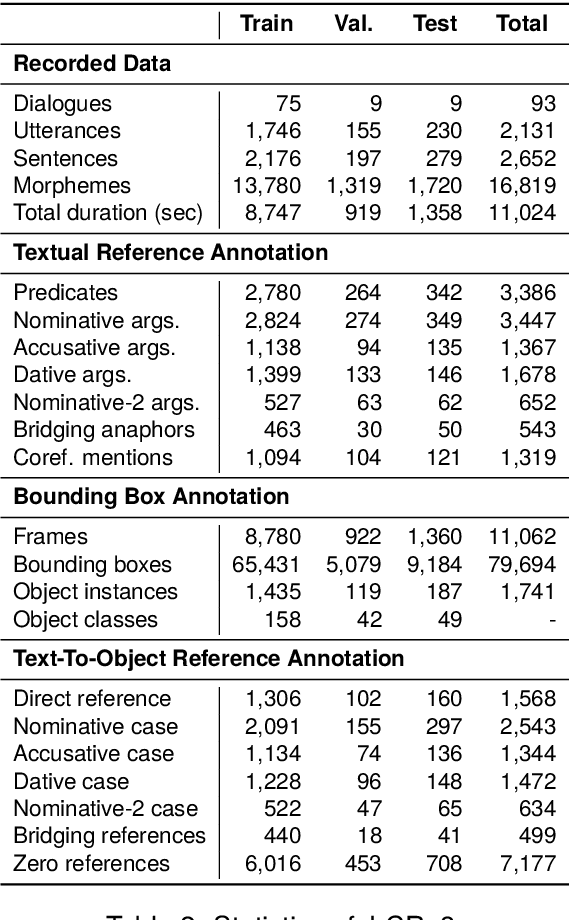

Abstract:Understanding expressions that refer to the physical world is crucial for human-assisting systems in the real world, such as robots that must perform the actions users expect. In real-world reference resolution, a system must ground the verbal information that appears in user interactions to the visual information observed in egocentric views. To this end, we propose a multimodal reference resolution task and construct a Japanese Conversation dataset for Real-world Reference Resolution (J-CRe3). Our dataset contains egocentric video and dialogue audio of real-world conversations between two people acting as a master and an assistant robot at home. The dataset is annotated with crossmodal tags between phrases in the utterances and the object bounding boxes in the video frames. These tags include indirect reference relations, such as predicate-argument structures and bridging references, as well as direct reference relations. We also constructed an experimental model and clarified the challenges in multimodal reference resolution tasks.

A Gaze-grounded Visual Question Answering Dataset for Clarifying Ambiguous Japanese Questions

Mar 26, 2024

Abstract:Situated conversations, which refer to visual information as in visual question answering (VQA), often contain ambiguities caused by reliance on directive information. This problem is exacerbated because some languages, such as Japanese, often omit subjective or objective terms. Such ambiguities in questions are often clarified by the contexts in conversational situations, such as joint attention with a user or user gaze information. In this study, we propose the Gaze-grounded VQA dataset (GazeVQA), which clarifies ambiguous questions by focusing on a clarification process complemented by gaze information. We also propose a method that utilizes gaze target estimation results to improve the accuracy of GazeVQA tasks. Our experimental results showed that the proposed method improved the performance of a VQA system on GazeVQA in some cases and identified some typical problems of GazeVQA tasks that need to be improved.

Multi-View Video-Based Learning: Leveraging Weak Labels for Frame-Level Perception

Mar 19, 2024

Abstract:When training a video-based action recognition model that accepts multi-view video, annotating frame-level labels is tedious and difficult, whereas annotating sequence-level labels is relatively easy. Such coarse annotations are called weak labels. However, training a multi-view video-based action recognition model with weak labels for frame-level perception is challenging. In this paper, we propose a novel learning framework, where the weak labels are first used to train a multi-view video-based base model, which is subsequently used for downstream frame-level perception tasks. The base model is trained to obtain individual latent embeddings for each view in the multi-view input. For training the model using the weak labels, we propose a novel latent loss function. We also propose a model that uses the view-specific latent embeddings for downstream frame-level action recognition and detection tasks. The proposed framework is evaluated on the MM-Office dataset by comparing it with several baseline algorithms. The results show that the proposed base model is effectively trained using weak labels and that the latent embeddings help the downstream models improve accuracy.

ManifoldNeRF: View-dependent Image Feature Supervision for Few-shot Neural Radiance Fields

Oct 20, 2023

Abstract:Novel view synthesis has recently made significant progress with the advent of Neural Radiance Fields (NeRF). DietNeRF is an extension of NeRF that aims to achieve this task from only a few images by introducing a new loss function for unknown viewpoints with no input images. The loss function assumes that a pre-trained feature extractor should output the same feature even if input images are captured at different viewpoints, since the images contain the same object. However, while that assumption is ideal, in reality feature vectors are known to change continuously as the viewpoint changes. Thus, the assumption can harm training. To avoid this, we propose ManifoldNeRF, a method for supervising feature vectors at unknown viewpoints using interpolated features from neighboring known viewpoints. Since the method provides appropriate supervision for each unknown viewpoint through the interpolated features, the volume representation is learned better than with DietNeRF. Experimental results show that the proposed method performs better than others in a complex scene. We also experimented with several subsets of viewpoints from a set of viewpoints and identified an effective set of viewpoints for real environments. This provided a basic policy of viewpoint patterns for real-world application. The code is available at https://github.com/haganelego/ManifoldNeRF_BMVC2023
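The contrast with a "same feature at every viewpoint" target can be shown with a small sketch. It assumes linear interpolation between the features of two neighboring known viewpoints and a mean squared error loss; both choices, and the function names, are illustrative assumptions, since the abstract does not state the interpolation scheme or the loss form used in ManifoldNeRF.

```python
def interpolate_feature(f_a, f_b, t):
    """Linearly interpolate two feature vectors; t=0 gives f_a, t=1 gives f_b."""
    return [(1 - t) * a + t * b for a, b in zip(f_a, f_b)]

def feature_loss(rendered, target):
    """Mean squared error between a rendered-view feature and its target."""
    return sum((r - g) ** 2 for r, g in zip(rendered, target)) / len(target)

# Features extracted at two known viewpoints (toy values).
f_left, f_right = [0.0, 2.0], [2.0, 0.0]

# Target for an unknown viewpoint halfway between them.
target = interpolate_feature(f_left, f_right, 0.5)

# Supervising with the interpolated target vs. reusing a known view's feature.
loss_interp = feature_loss([1.0, 1.0], target)
loss_fixed = feature_loss([1.0, 1.0], f_left)
```

In this toy case a rendering whose feature lies between the two known views incurs no penalty under the interpolated target, while a fixed target taken from one known view would pull it toward that view's feature.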
