Hakaru Tamukoh

Reservoir Computing inspired Matrix Multiplication-free Language Model

Dec 29, 2025

Abstract:Large language models (LLMs) have achieved state-of-the-art performance in natural language processing; however, their high computational cost remains a major bottleneck. In this study, we target computational efficiency by focusing on a matrix multiplication free language model (MatMul-free LM) and further reducing the training cost through an architecture inspired by reservoir computing. Specifically, we partially fix and share the weights of selected layers in the MatMul-free LM and insert reservoir layers to obtain rich dynamic representations without additional training overhead. Additionally, several operations are combined to reduce memory accesses. Experimental results show that the proposed architecture reduces the number of parameters by up to 19%, training time by 9.9%, and inference time by 8.0%, while maintaining comparable performance to the baseline model.

Sign Language Recognition using Parallel Bidirectional Reservoir Computing

Dec 22, 2025

Abstract:Sign language recognition (SLR) facilitates communication between deaf and hearing communities. Deep learning based SLR models are commonly used but require extensive computational resources, making them unsuitable for deployment on edge devices. To address these limitations, we propose a lightweight SLR system that combines parallel bidirectional reservoir computing (PBRC) with MediaPipe. MediaPipe enables real-time hand tracking and precise extraction of hand joint coordinates, which serve as input features for the PBRC architecture. The proposed PBRC architecture consists of two echo state network (ESN) based bidirectional reservoir computing (BRC) modules arranged in parallel to capture temporal dependencies, thereby creating a rich feature representation for classification. We trained our PBRC-based SLR system on the Word-Level American Sign Language (WLASL) video dataset, achieving top-1, top-5, and top-10 accuracies of 60.85%, 85.86%, and 91.74%, respectively. Training time was significantly reduced to 18.67 seconds due to the intrinsic properties of reservoir computing, compared to over 55 minutes for deep learning based methods such as Bi-GRU. This approach offers a lightweight, cost-effective solution for real-time SLR on edge devices.
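
The parallel bidirectional idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the reservoir sizes, the weight initialization, the use of the final state as the feature, and the reuse of one reservoir for both directions are all assumptions made for illustration, and the helper names (`make_reservoir`, `brc_features`, `pbrc_features`) are hypothetical.

```python
import math
import random


def make_reservoir(n_in, n_res, seed, scale=0.5):
    """Create fixed random input and recurrent weights (never trained)."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_res)]
    w = [[rng.uniform(-scale, scale) / math.sqrt(n_res) for _ in range(n_res)]
         for _ in range(n_res)]
    return w_in, w


def run_reservoir(seq, w_in, w):
    """Drive the reservoir with a sequence and return its final state."""
    x = [0.0] * len(w)
    for u in seq:
        x = [math.tanh(sum(a * b for a, b in zip(w_in[i], u)) +
                       sum(a * b for a, b in zip(w[i], x)))
             for i in range(len(w))]
    return x


def brc_features(seq, w_in, w):
    """Bidirectional pass: forward state concatenated with backward state."""
    return run_reservoir(seq, w_in, w) + run_reservoir(seq[::-1], w_in, w)


def pbrc_features(seq, modules):
    """Run several bidirectional modules in parallel and concatenate them."""
    feats = []
    for w_in, w in modules:
        feats += brc_features(seq, w_in, w)
    return feats


# Toy sequence of 2-D inputs standing in for hand joint coordinates.
seq = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
modules = [make_reservoir(2, 4, seed=0), make_reservoir(2, 4, seed=1)]
features = pbrc_features(seq, modules)
```

Only a linear classifier on top of `features` would need training in such a setup, which is consistent with the short training time the abstract reports for reservoir computing.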

Techniques for Enhancing Memory Capacity of Reservoir Computing

Feb 25, 2025

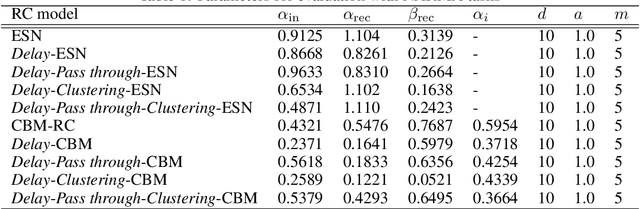

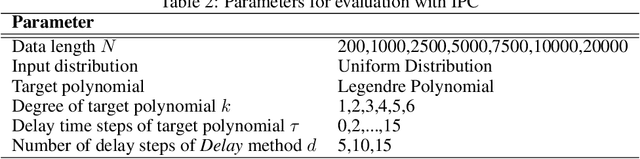

Abstract:Reservoir Computing (RC) is a bio-inspired machine learning framework, and various models have been proposed. RC is well suited to time-series data processing, but there is a trade-off between memory capacity and nonlinearity. In this study, we propose methods to improve the memory capacity of reservoir models by modifying their network configuration while leaving the reservoir internals unchanged. The Delay method retains past inputs by adding delay node chains to the input layer with the specified number of delay steps. To suppress the increase in input values caused by the Delay method, we divide the input weights by the number of added delay steps. The Pass through method feeds input values directly to the output layer. The Clustering method divides the input and reservoir nodes into multiple parts and integrates them at the output layer. We applied these methods to an echo state network (ESN), a typical RC model, and the chaotic Boltzmann machine (CBM)-RC, which can be efficiently implemented in integrated circuits. We evaluated their performance on the NARMA task, and measured information processing capacity (IPC) to evaluate the trade-off between memory capacity and nonlinearity.
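
A minimal sketch of the Delay method as described in this abstract: a delay chain keeps the most recent inputs available at the input layer, and the input weights are divided by the number of added delay steps. The function names and the zero-padding of the chain before the first input are assumptions for illustration, not details taken from the paper.

```python
from collections import deque


def delay_augment(inputs, n_delay):
    """For each step t, return [u(t), u(t-1), ..., u(t-n_delay)].

    Positions before the start of the series are zero-padded (an assumption).
    """
    chain = deque([0.0] * (n_delay + 1), maxlen=n_delay + 1)
    augmented = []
    for u in inputs:
        chain.appendleft(u)  # the oldest value falls off the right end
        augmented.append(list(chain))
    return augmented


def scale_input_weights(w_in, n_delay):
    """Divide input weights by the number of added delay steps."""
    return [w / n_delay for w in w_in]


augmented = delay_augment([1.0, 2.0, 3.0], n_delay=2)
scaled = scale_input_weights([0.5, -1.0], n_delay=2)
```

With `n_delay=2`, the third row of `augmented` holds the current input followed by the two previous ones, so the reservoir sees a short explicit history at every step.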

Unified Understanding of Environment, Task, and Human for Human-Robot Interaction in Real-World Environments

Dec 18, 2024

Abstract:To facilitate human-robot interaction (HRI) tasks in real-world scenarios, service robots must adapt to dynamic environments and understand the required tasks while effectively communicating with humans. To accomplish HRI in practice, we propose a novel indoor dynamic map, task understanding system, and response generation system. The indoor dynamic map optimizes robot behavior by managing an occupancy grid map and dynamic information, such as furniture and humans, in separate layers. The task understanding system targets tasks that require multiple actions, such as serving ordered items. Task representations that predefine the flow of necessary actions are applied to achieve highly accurate understanding. The response generation system is executed in parallel with task understanding to facilitate smooth HRI by informing humans of the subsequent actions of the robot. In this study, we focused on waiter duties in a restaurant setting as a representative application of HRI in a dynamic environment. We developed an HRI system that could perform tasks such as serving food and cleaning up while communicating with customers. In experiments conducted in a simulated restaurant environment, the proposed HRI system successfully communicated with customers and served ordered food with 90% accuracy. In a questionnaire administered after the experiment, the HRI system of the robot received 4.2 points out of 5. These outcomes indicated the effectiveness of the proposed method and HRI system in executing waiter tasks in real-world environments.

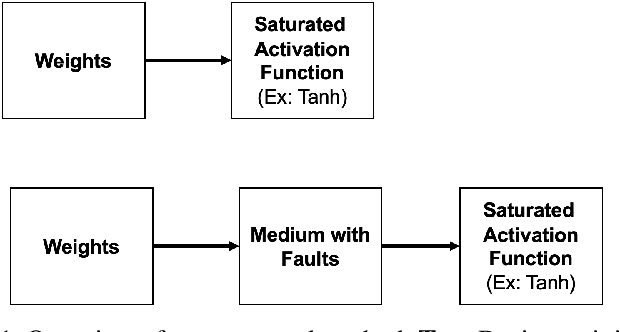

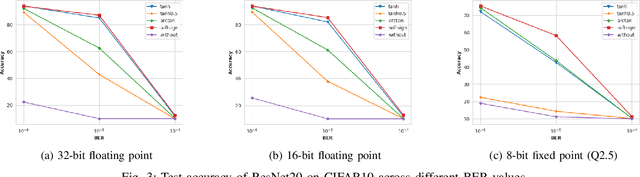

Harden Deep Neural Networks Against Fault Injections Through Weight Scaling

Nov 28, 2024

Abstract:Deep neural networks (DNNs) have enabled smart applications on hardware devices. However, these hardware devices are vulnerable to unintended faults caused by aging, temperature variance, and write errors. These faults can cause bit-flips in DNN weights and significantly degrade the performance of DNNs. Thus, protection against these faults is crucial for the deployment of DNNs in critical applications. Previous works have proposed methods based on error correction codes; however, these methods often require high overheads in both memory and computation. In this paper, we propose a simple yet effective method to harden DNN weights by multiplying weights by constants before storing them to a fault-prone medium. When used, these weights are divided back by the same constants to restore the original scale. Our method is based on the observation that errors from bit-flips have properties similar to additive noise; therefore, dividing by constants can reduce the absolute error from bit-flips. To demonstrate our method, we conduct experiments across four ImageNet 2012 pre-trained models along with three different data types: 32-bit floating point, 16-bit floating point, and 8-bit fixed point. This method demonstrates that by only multiplying weights with constants, Top-1 Accuracy of 8-bit fixed point ResNet50 is improved by 54.418 at bit-error rate of 0.0001.
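
The additive-noise argument in this abstract can be checked on a single weight with a small sketch. The 8-bit format below (signed, 4 fractional bits), the scale constant 4, and the function names are assumptions chosen for illustration; the paper's actual quantization scheme and constants are not given in the abstract.

```python
def to_fixed8(x, frac_bits=4):
    """Quantize x to a signed 8-bit fixed-point integer, saturating at the range limits."""
    q = round(x * (1 << frac_bits))
    return max(-128, min(127, q))


def from_fixed8(q, frac_bits=4):
    """Convert a signed 8-bit fixed-point integer back to a float."""
    return q / (1 << frac_bits)


def flip_bit(q, bit):
    """Flip one bit of the 8-bit two's-complement representation of q."""
    raw = (q & 0xFF) ^ (1 << bit)
    return raw - 256 if raw >= 128 else raw


def read_with_fault(w, scale, bit, frac_bits=4):
    """Store w * scale, inject a single bit-flip, then divide by scale on read."""
    stored = to_fixed8(w * scale, frac_bits)
    faulty = flip_bit(stored, bit)
    return from_fixed8(faulty, frac_bits) / scale


w = 0.25
error_unscaled = abs(read_with_fault(w, scale=1, bit=3) - w)
error_scaled = abs(read_with_fault(w, scale=4, bit=3) - w)
```

In this toy case the same bit-flip adds the same amount to the stored value either way, so dividing by the constant on read shrinks the resulting error by that constant. The scaled weight must still fit in the storage range, which limits how large the constant can be.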

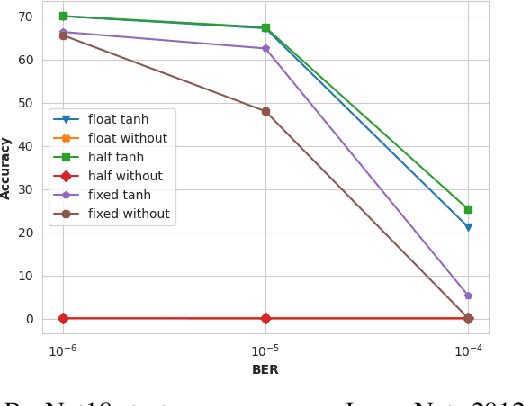

Enhancing Neural Network Robustness Against Fault Injection Through Non-linear Weight Transformations

Nov 28, 2024

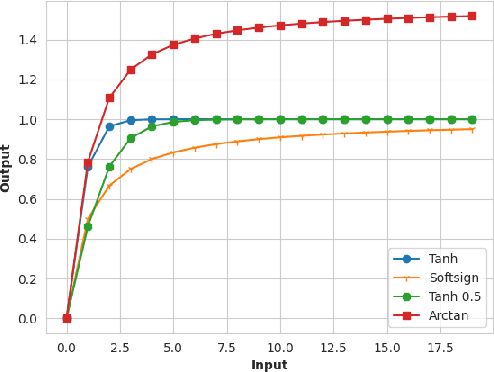

Abstract:Deploying deep neural networks (DNNs) in real-world environments poses challenges due to faults that can manifest in physical hardware from radiation, aging, and temperature fluctuations. To address this, previous works have focused on protecting DNNs via activation range restriction using clipped ReLU and finding the optimal clipping threshold. In contrast, this work focuses on constraining DNN weights by applying saturated activation functions (SAFs): Tanh, Arctan, and others. SAFs prevent faults from causing DNN weights to become excessively large, which can lead to model failure. These methods not only enhance the robustness of DNNs against fault injections but also improve DNN performance by a small margin. Before deployment, DNNs are trained with weights constrained by SAFs. During deployment, the weights without the SAF applied are written to fault-prone media. When read, SAFs are applied to the faulty weights, which are then used for inference. We demonstrate our proposed method across three datasets (CIFAR10, CIFAR100, ImageNet 2012) and across three datatypes (32-bit floating point (FP32), 16-bit floating point, and 8-bit fixed point). We show that our method enables FP32 ResNet18 with ImageNet 2012 to operate at a bit-error rate of 0.00001 with minor accuracy loss, while without the proposed method, the FP32 DNN only produces random guesses. Furthermore, to accelerate the training process, we demonstrate that an ImageNet 2012 pre-trained ResNet18 can be adapted to SAF by training for a few epochs with a slight improvement in Top-1 accuracy while still ensuring robustness against fault injection.
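
A minimal sketch of why a saturating function on the weights bounds the damage from a bit-flip, using Tanh as the SAF and a single FP32 weight. The helper names and the choice of which bit to flip are assumptions for illustration; the sketch covers only the read path described in the abstract, not the training procedure.

```python
import math
import struct


def flip_float32_bit(x, bit):
    """Flip one bit in the IEEE 754 single-precision encoding of x."""
    (raw,) = struct.unpack("<I", struct.pack("<f", x))
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


def effective_weight(stored, saf=math.tanh):
    """Apply the saturating function to the stored value on read."""
    return saf(stored)


stored = 0.5
faulty = flip_float32_bit(stored, bit=30)  # flips the top exponent bit
clean_weight = effective_weight(stored)
faulty_weight = effective_weight(faulty)
```

Here the flip turns the stored 0.5 into 2**127, which would dominate any layer it appears in, while the weight actually used for inference stays at 1.0 because Tanh saturates.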

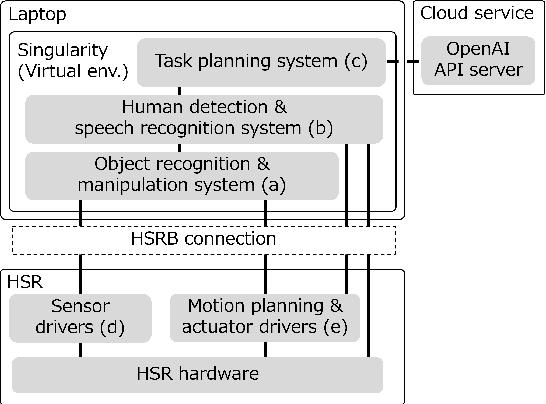

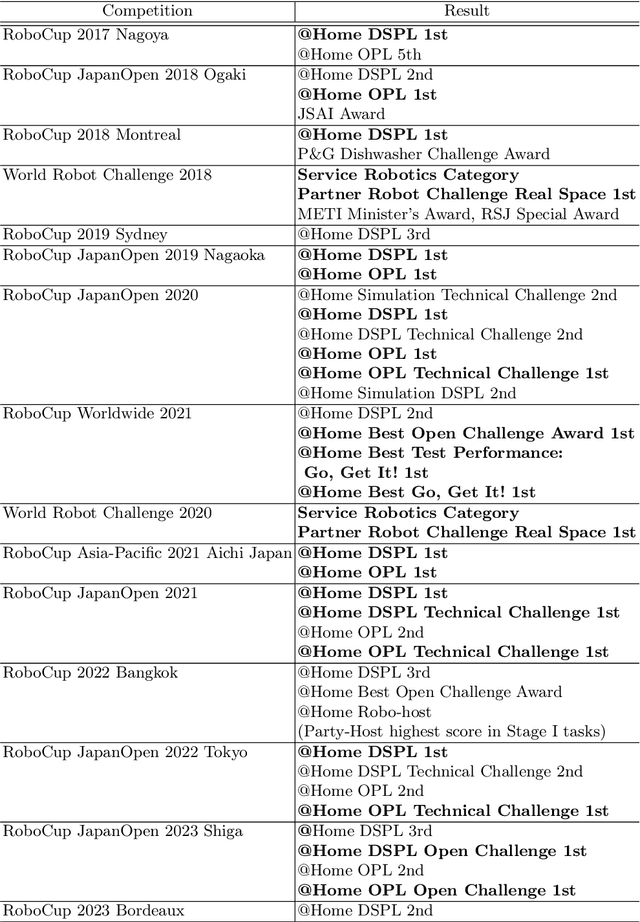

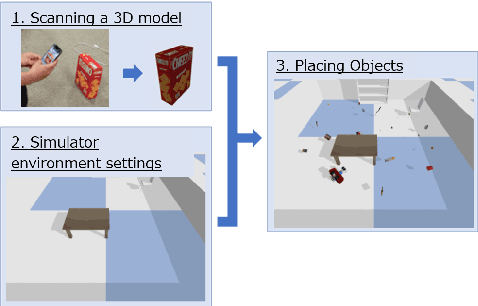

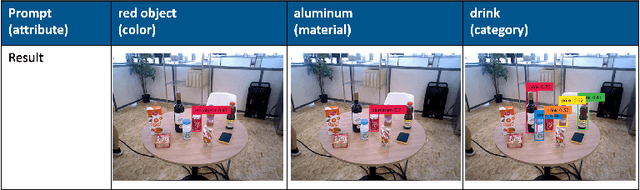

Hibikino-Musashi@Home 2024 Team Description Paper

Oct 08, 2024

Abstract:This paper provides an overview of the techniques employed by Hibikino-Musashi@Home, which intends to participate in the domestic standard platform league. The team has developed a dataset generator for training a robot vision system and an open-source development environment running on a Human Support Robot simulator. The large language model powered task planner selects appropriate primitive skills to perform the task requested by users. The team aims to design a home service robot that can assist humans in their homes and continuously attends competitions to evaluate and improve the developed system.

Haptic in-sensor computing device made of carbon nanotube-polydimethylsiloxane nanocomposites

Jun 06, 2024

Abstract:The importance of haptic in-sensor computing devices has been increasing. In this study, we successfully fabricated a haptic sensor with a hierarchical structure via the sacrificial template method, using carbon nanotubes-polydimethylsiloxane (CNTs-PDMS) nanocomposites for in-sensor computing applications. The CNTs-PDMS nanocomposite sensors, with different sensitivities, were obtained by varying the amount of CNTs. We transformed the input stimuli into higher-dimensional information, enabling a new path for the CNTs-PDMS nanocomposite application, which was implemented on a robotic hand as an in-sensor computing device by applying a reservoir computing paradigm. A linear regression readout was trained on the nonlinear output data obtained from the sensors and used to classify nine different everyday objects, with an object recognition accuracy of >80 % for each object. This approach could enable tactile sensation in robots while reducing the computational cost.

ManifoldNeRF: View-dependent Image Feature Supervision for Few-shot Neural Radiance Fields

Oct 20, 2023

Abstract:Novel view synthesis has recently made significant progress with the advent of Neural Radiance Fields (NeRF). DietNeRF is an extension of NeRF that aims to achieve this task from only a few images by introducing a new loss function for unknown viewpoints with no input images. The loss function assumes that a pre-trained feature extractor should output the same feature even if input images are captured at different viewpoints since the images contain the same object. However, while that assumption is ideal, in reality, it is known that feature vectors also change continuously as viewpoints change. Thus, the assumption can harm training. To avoid this harmful training, we propose ManifoldNeRF, a method for supervising feature vectors at unknown viewpoints using interpolated features from neighboring known viewpoints. Since the method provides appropriate supervision for each unknown viewpoint through the interpolated features, the volume representation is learned better than with DietNeRF. Experimental results show that the proposed method performs better than others in a complex scene. We also experimented with several subsets of viewpoints from a set of viewpoints and identified an effective set of viewpoints for real environments. This provided a basic policy of viewpoint patterns for real-world application. The code is available at https://github.com/haganelego/ManifoldNeRF_BMVC2023
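
The supervision idea in this abstract can be sketched with plain linear interpolation between the features of two neighboring known viewpoints. The abstract does not state the interpolation scheme or the loss used, so the linear blend, the mean squared error, and the function names below are assumptions for illustration only.

```python
def interpolate_features(f_a, f_b, t):
    """Blend two feature vectors; t=0 gives f_a and t=1 gives f_b."""
    return [(1 - t) * a + t * b for a, b in zip(f_a, f_b)]


def feature_loss(rendered, target):
    """Mean squared error between a rendered-view feature and its target."""
    return sum((r - g) ** 2 for r, g in zip(rendered, target)) / len(target)


# Features of two known neighboring viewpoints (toy values).
f_a = [0.0, 2.0]
f_b = [4.0, 0.0]

# Target for an unknown viewpoint a quarter of the way from a to b.
target = interpolate_features(f_a, f_b, t=0.25)
loss = feature_loss([1.0, 1.0], target)
```

The difference from a same-feature assumption is that the target moves with the viewpoint, so a rendered view is compared against a feature that reflects where it sits between its neighbors.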

Hibikino-Musashi@Home 2023 Team Description Paper

Oct 19, 2023

Abstract:This paper provides an overview of the techniques of Hibikino-Musashi@Home, which intends to participate in the domestic standard platform league. The team has developed a dataset generator for the training of a robot vision system and an open-source development environment running on a human support robot simulator. The robot system comprises self-developed libraries, including those for motion synthesis, and open-source software that works on the Robot Operating System. The team aims to realize a home service robot that assists humans in a home, and continuously attends the competition to evaluate the developed system. A brain-inspired artificial intelligence system is also proposed for service robots, which are expected to work in a real home environment.
