Atsuki Yokota

Techniques for Enhancing Memory Capacity of Reservoir Computing

Feb 25, 2025

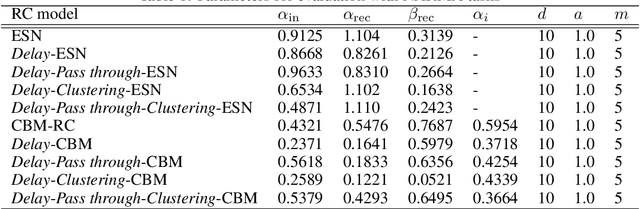

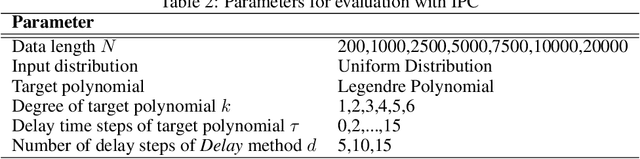

Abstract: Reservoir computing (RC) is a bio-inspired machine learning framework for which various models have been proposed. RC is well suited to time-series processing, but it faces a trade-off between memory capacity and nonlinearity. In this study, we propose methods that improve the memory capacity of reservoir models by modifying the network configuration outside the reservoir itself. The Delay method retains past inputs by adding chains of delay nodes, with a specified number of delay steps, to the input layer. To suppress the increase in input magnitude that the Delay method causes, we divide the input weights by the number of added delay steps. The Pass through method feeds input values directly to the output layer. The Clustering method divides the input and reservoir nodes into multiple parts and integrates them at the output layer. We applied these methods to an echo state network (ESN), a typical RC model, and to the chaotic Boltzmann machine (CBM)-RC, which can be implemented efficiently in integrated circuits. We evaluated their performance on the NARMA task and measured the information processing capacity (IPC) to assess the trade-off between memory capacity and nonlinearity.
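To make the Delay and Pass through methods concrete, the following is a minimal NumPy sketch for an ESN with a scalar input. It is not the authors' implementation. The function names (`delay_embed`, `run_esn`, `build_features`, `fit_readout`), the tanh update rule, the uniform input weights, the ridge-regression readout, and all default parameter values are assumptions chosen for illustration. In particular, the abstract only says that input weights are divided by the number of added delay steps; the sketch reads that as dividing the weight scale by `n_delay`. The Clustering method and the CBM-RC model are not covered.

```python
import numpy as np

def delay_embed(u, n_delay):
    """Delay chain: columns are u(t), u(t-1), ..., u(t-n_delay), zero-padded at the start."""
    u = np.asarray(u, dtype=float)
    if not 0 <= n_delay < len(u):
        raise ValueError("n_delay must satisfy 0 <= n_delay < len(u)")
    cols = [np.concatenate([np.zeros(k), u[:len(u) - k]]) for k in range(n_delay + 1)]
    return np.stack(cols, axis=1)  # shape (T, n_delay + 1)

def run_esn(inputs, n_res=50, spectral_radius=0.9, input_scale=1.0, seed=0):
    """Drive a random tanh reservoir and return its states, shape (T, n_res)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-input_scale, input_scale, size=(n_res, inputs.shape[1]))
    w = rng.normal(size=(n_res, n_res))
    # Rescale the recurrent weights to the requested spectral radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    x = np.zeros(n_res)
    states = np.empty((len(inputs), n_res))
    for t, u_t in enumerate(inputs):
        x = np.tanh(w_in @ u_t + w @ x)
        states[t] = x
    return states

def build_features(u, n_delay=3, pass_through=True, n_res=50, input_scale=1.0, seed=0):
    """Readout features: reservoir states, a bias column, and optionally the raw input."""
    delayed = delay_embed(u, n_delay)
    # Assumed reading of the Delay-method scaling: divide the input weight
    # scale by the number of added delay steps (guarded for n_delay == 0).
    scale = input_scale / max(n_delay, 1)
    states = run_esn(delayed, n_res=n_res, input_scale=scale, seed=seed)
    feats = [states, np.ones((len(states), 1))]
    if pass_through:
        # Pass through method: the current input goes straight to the output layer.
        feats.append(np.asarray(u, dtype=float)[:, None])
    return np.hstack(feats)

def fit_readout(features, targets, ridge=1e-6):
    """Ridge-regression output weights (an assumed, commonly used readout)."""
    a = features.T @ features + ridge * np.eye(features.shape[1])
    return np.linalg.solve(a, features.T @ targets)
```

A possible use is a simple recall task: build `features = build_features(u, n_delay=3)` from a random input sequence `u`, fit `w_out = fit_readout(features, target)` with `target` set to a delayed copy of `u`, and compare the prediction `features @ w_out` against runs with `n_delay=0` or `pass_through=False`.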

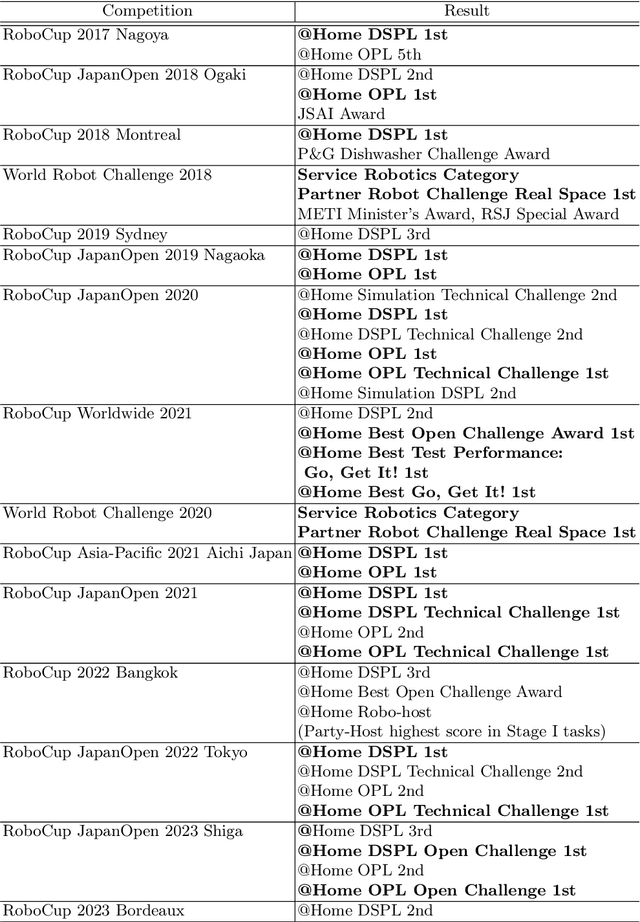

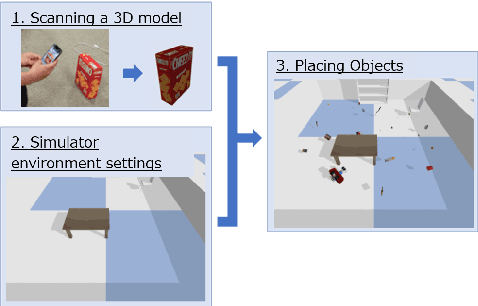

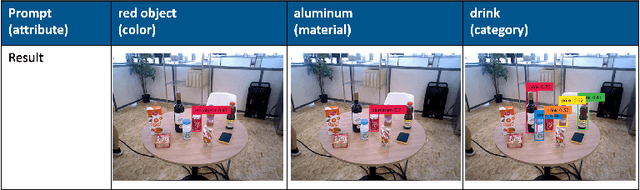

Hibikino-Musashi@Home 2024 Team Description Paper

Oct 08, 2024

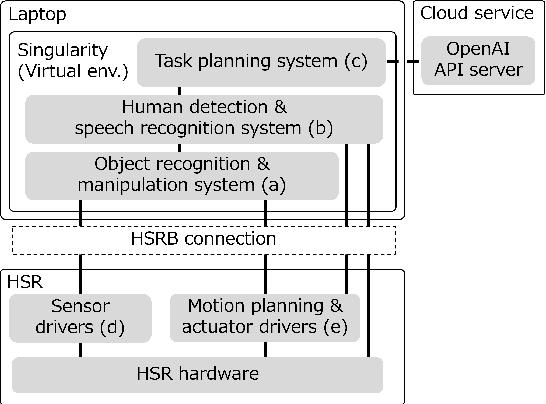

Abstract: This paper provides an overview of the techniques employed by Hibikino-Musashi@Home, which intends to participate in the domestic standard platform league. The team has developed a dataset generator for training a robot vision system and an open-source development environment that runs on a Human Support Robot simulator. A task planner powered by a large language model selects the primitive skills needed to perform the task requested by the user. The team aims to design a home service robot that can assist people in their homes, and it regularly takes part in competitions to evaluate and improve the developed system.
