Jinho Lee

FairDICE: Fairness-Driven Offline Multi-Objective Reinforcement Learning

Jun 09, 2025Abstract:Multi-objective reinforcement learning (MORL) aims to optimize policies in the presence of conflicting objectives, where linear scalarization is commonly used to reduce vector-valued returns into scalar signals. While effective for certain preferences, this approach cannot capture fairness-oriented goals such as Nash social welfare or max-min fairness, which require nonlinear and non-additive trade-offs. Although several online algorithms have been proposed for specific fairness objectives, a unified approach for optimizing nonlinear welfare criteria in the offline setting-where learning must proceed from a fixed dataset-remains unexplored. In this work, we present FairDICE, the first offline MORL framework that directly optimizes nonlinear welfare objective. FairDICE leverages distribution correction estimation to jointly account for welfare maximization and distributional regularization, enabling stable and sample-efficient learning without requiring explicit preference weights or exhaustive weight search. Across multiple offline benchmarks, FairDICE demonstrates strong fairness-aware performance compared to existing baselines.

MimiQ: Low-Bit Data-Free Quantization of Vision Transformers

Jul 29, 2024Abstract:Data-free quantization (DFQ) is a technique that creates a lightweight network from its full-precision counterpart without the original training data, often through a synthetic dataset. Although several DFQ methods have been proposed for vision transformer (ViT) architectures, they fail to achieve efficacy in low-bit settings. Examining the existing methods, we identify that their synthetic data produce misaligned attention maps, while those of the real samples are highly aligned. From the observation of aligned attention, we find that aligning attention maps of synthetic data helps to improve the overall performance of quantized ViTs. Motivated by this finding, we devise \aname, a novel DFQ method designed for ViTs that focuses on inter-head attention similarity. First, we generate synthetic data by aligning head-wise attention responses in relation to spatial query patches. Then, we apply head-wise structural attention distillation to align the attention maps of the quantized network to those of the full-precision teacher. The experimental results show that the proposed method significantly outperforms baselines, setting a new state-of-the-art performance for data-free ViT quantization.

DeepClair: Utilizing Market Forecasts for Effective Portfolio Selection

Jul 18, 2024

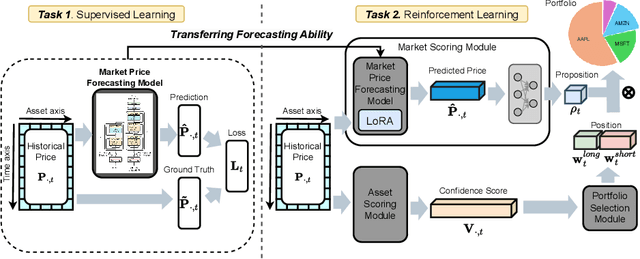

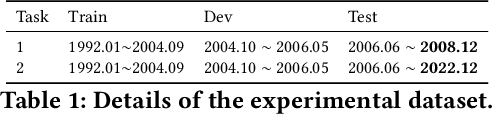

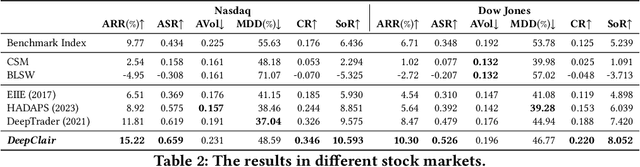

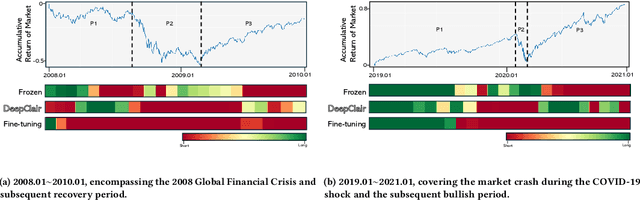

Abstract:Utilizing market forecasts is pivotal in optimizing portfolio selection strategies. We introduce DeepClair, a novel framework for portfolio selection. DeepClair leverages a transformer-based time-series forecasting model to predict market trends, facilitating more informed and adaptable portfolio decisions. To integrate the forecasting model into a deep reinforcement learning-driven portfolio selection framework, we introduced a two-step strategy: first, pre-training the time-series model on market data, followed by fine-tuning the portfolio selection architecture using this model. Additionally, we investigated the optimization technique, Low-Rank Adaptation (LoRA), to enhance the pre-trained forecasting model for fine-tuning in investment scenarios. This work bridges market forecasting and portfolio selection, facilitating the advancement of investment strategies.

DataFreeShield: Defending Adversarial Attacks without Training Data

Jun 21, 2024Abstract:Recent advances in adversarial robustness rely on an abundant set of training data, where using external or additional datasets has become a common setting. However, in real life, the training data is often kept private for security and privacy issues, while only the pretrained weight is available to the public. In such scenarios, existing methods that assume accessibility to the original data become inapplicable. Thus we investigate the pivotal problem of data-free adversarial robustness, where we try to achieve adversarial robustness without accessing any real data. Through a preliminary study, we highlight the severity of the problem by showing that robustness without the original dataset is difficult to achieve, even with similar domain datasets. To address this issue, we propose DataFreeShield, which tackles the problem from two perspectives: surrogate dataset generation and adversarial training using the generated data. Through extensive validation, we show that DataFreeShield outperforms baselines, demonstrating that the proposed method sets the first entirely data-free solution for the adversarial robustness problem.

Pipette: Automatic Fine-grained Large Language Model Training Configurator for Real-World Clusters

May 28, 2024

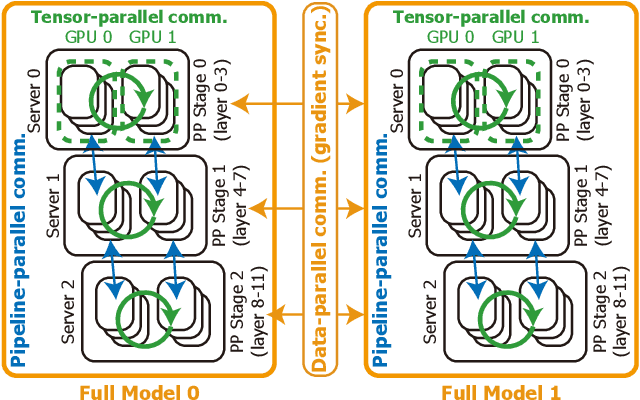

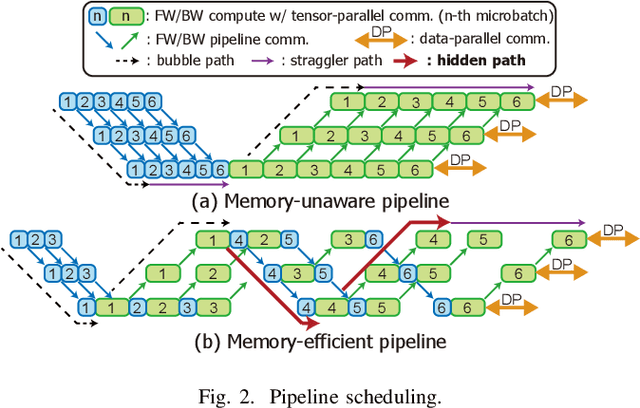

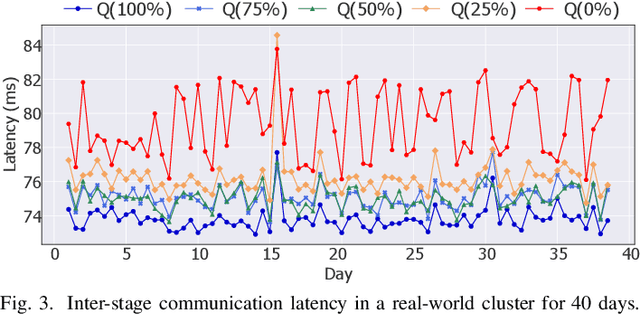

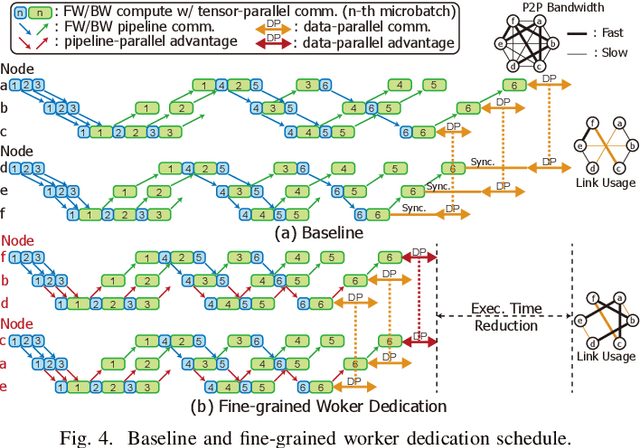

Abstract:Training large language models (LLMs) is known to be challenging because of the huge computational and memory capacity requirements. To address these issues, it is common to use a cluster of GPUs with 3D parallelism, which splits a model along the data batch, pipeline stage, and intra-layer tensor dimensions. However, the use of 3D parallelism produces the additional challenge of finding the optimal number of ways on each dimension and mapping the split models onto the GPUs. Several previous studies have attempted to automatically find the optimal configuration, but many of these lacked several important aspects. For instance, the heterogeneous nature of the interconnect speeds is often ignored. While the peak bandwidths for the interconnects are usually made equal, the actual attained bandwidth varies per link in real-world clusters. Combined with the critical path modeling that does not properly consider the communication, they easily fall into sub-optimal configurations. In addition, they often fail to consider the memory requirement per GPU, often recommending solutions that could not be executed. To address these challenges, we propose Pipette, which is an automatic fine-grained LLM training configurator for real-world clusters. By devising better performance models along with the memory estimator and fine-grained individual GPU assignment, Pipette achieves faster configurations that satisfy the memory constraints. We evaluated Pipette on large clusters to show that it provides a significant speedup over the prior art. The implementation of Pipette is available at https://github.com/yimjinkyu1/date2024_pipette.

Smart-Infinity: Fast Large Language Model Training using Near-Storage Processing on a Real System

Mar 11, 2024

Abstract:The recent huge advance of Large Language Models (LLMs) is mainly driven by the increase in the number of parameters. This has led to substantial memory capacity requirements, necessitating the use of dozens of GPUs just to meet the capacity. One popular solution to this is storage-offloaded training, which uses host memory and storage as an extended memory hierarchy. However, this obviously comes at the cost of storage bandwidth bottleneck because storage devices have orders of magnitude lower bandwidth compared to that of GPU device memories. Our work, Smart-Infinity, addresses the storage bandwidth bottleneck of storage-offloaded LLM training using near-storage processing devices on a real system. The main component of Smart-Infinity is SmartUpdate, which performs parameter updates on custom near-storage accelerators. We identify that moving parameter updates to the storage side removes most of the storage traffic. In addition, we propose an efficient data transfer handler structure to address the system integration issues for Smart-Infinity. The handler allows overlapping data transfers with fixed memory consumption by reusing the device buffer. Lastly, we propose accelerator-assisted gradient compression/decompression to enhance the scalability of Smart-Infinity. When scaling to multiple near-storage processing devices, the write traffic on the shared channel becomes the bottleneck. To alleviate this, we compress the gradients on the GPU and decompress them on the accelerators. It provides further acceleration from reduced traffic. As a result, Smart-Infinity achieves a significant speedup compared to the baseline. Notably, Smart-Infinity is a ready-to-use approach that is fully integrated into PyTorch on a real system. We will open-source Smart-Infinity to facilitate its use.

PeerAiD: Improving Adversarial Distillation from a Specialized Peer Tutor

Mar 11, 2024Abstract:Adversarial robustness of the neural network is a significant concern when it is applied to security-critical domains. In this situation, adversarial distillation is a promising option which aims to distill the robustness of the teacher network to improve the robustness of a small student network. Previous works pretrain the teacher network to make it robust to the adversarial examples aimed at itself. However, the adversarial examples are dependent on the parameters of the target network. The fixed teacher network inevitably degrades its robustness against the unseen transferred adversarial examples which targets the parameters of the student network in the adversarial distillation process. We propose PeerAiD to make a peer network learn the adversarial examples of the student network instead of adversarial examples aimed at itself. PeerAiD is an adversarial distillation that trains the peer network and the student network simultaneously in order to make the peer network specialized for defending the student network. We observe that such peer networks surpass the robustness of pretrained robust teacher network against student-attacked adversarial samples. With this peer network and adversarial distillation, PeerAiD achieves significantly higher robustness of the student network with AutoAttack (AA) accuracy up to 1.66%p and improves the natural accuracy of the student network up to 4.72%p with ResNet-18 and TinyImageNet dataset.

GraNNDis: Efficient Unified Distributed Training Framework for Deep GNNs on Large Clusters

Nov 12, 2023

Abstract:Graph neural networks (GNNs) are one of the most rapidly growing fields within deep learning. According to the growth in the dataset and the model size used for GNNs, an important problem is that it becomes nearly impossible to keep the whole network on GPU memory. Among numerous attempts, distributed training is one popular approach to address the problem. However, due to the nature of GNNs, existing distributed approaches suffer from poor scalability, mainly due to the slow external server communications. In this paper, we propose GraNNDis, an efficient distributed GNN training framework for training GNNs on large graphs and deep layers. GraNNDis introduces three new techniques. First, shared preloading provides a training structure for a cluster of multi-GPU servers. We suggest server-wise preloading of essential vertex dependencies to reduce the low-bandwidth external server communications. Second, we present expansion-aware sampling. Because shared preloading alone has limitations because of the neighbor explosion, expansion-aware sampling reduces vertex dependencies that span across server boundaries. Third, we propose cooperative batching to create a unified framework for full-graph and minibatch training. It significantly reduces redundant memory usage in mini-batch training. From this, GraNNDis enables a reasonable trade-off between full-graph and mini-batch training through unification especially when the entire graph does not fit into the GPU memory. With experiments conducted on a multi-server/multi-GPU cluster, we show that GraNNDis provides superior speedup over the state-of-the-art distributed GNN training frameworks.

Pipe-BD: Pipelined Parallel Blockwise Distillation

Jan 29, 2023

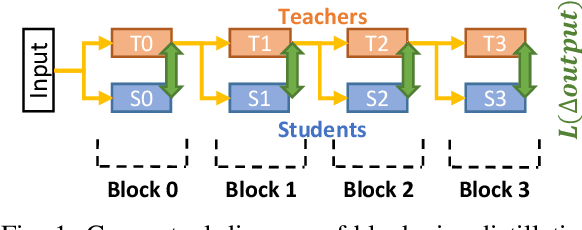

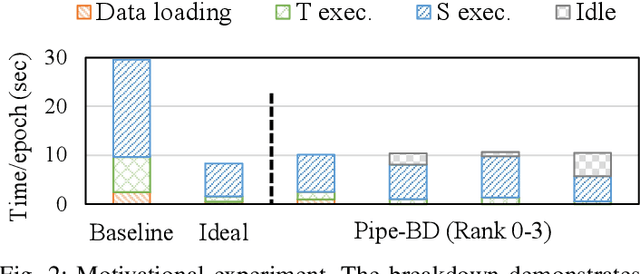

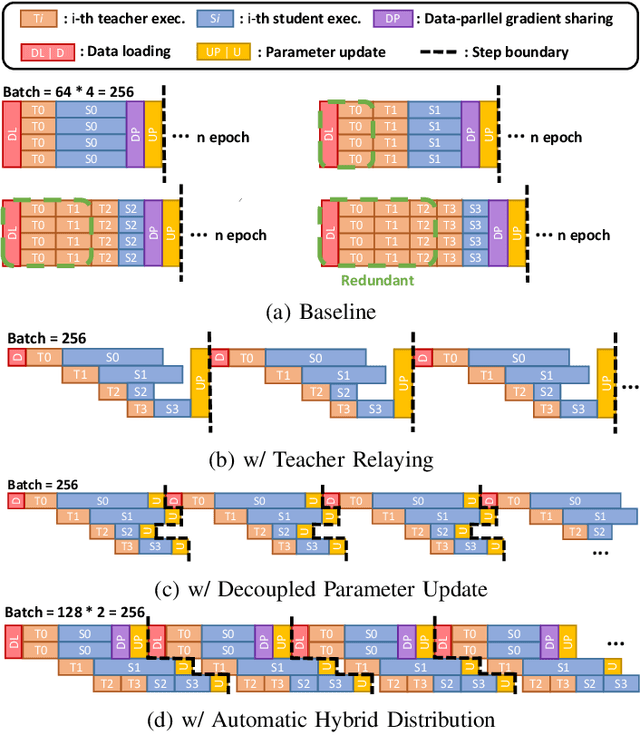

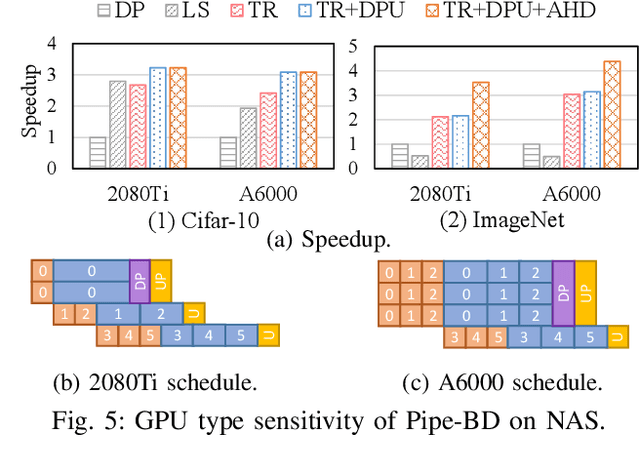

Abstract:Training large deep neural network models is highly challenging due to their tremendous computational and memory requirements. Blockwise distillation provides one promising method towards faster convergence by splitting a large model into multiple smaller models. In state-of-the-art blockwise distillation methods, training is performed block-by-block in a data-parallel manner using multiple GPUs. To produce inputs for the student blocks, the teacher model is executed from the beginning until the current block under training. However, this results in a high overhead of redundant teacher execution, low GPU utilization, and extra data loading. To address these problems, we propose Pipe-BD, a novel parallelization method for blockwise distillation. Pipe-BD aggressively utilizes pipeline parallelism for blockwise distillation, eliminating redundant teacher block execution and increasing per-device batch size for better resource utilization. We also extend to hybrid parallelism for efficient workload balancing. As a result, Pipe-BD achieves significant acceleration without modifying the mathematical formulation of blockwise distillation. We implement Pipe-BD on PyTorch, and experiments reveal that Pipe-BD is effective on multiple scenarios, models, and datasets.

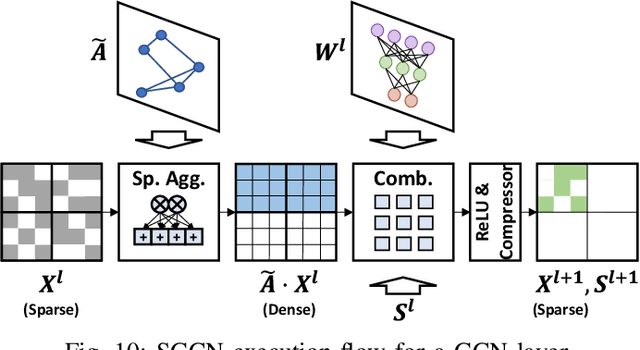

SGCN: Exploiting Compressed-Sparse Features in Deep Graph Convolutional Network Accelerators

Jan 25, 2023

Abstract:Graph convolutional networks (GCNs) are becoming increasingly popular as they overcome the limited applicability of prior neural networks. A GCN takes as input an arbitrarily structured graph and executes a series of layers which exploit the graph's structure to calculate their output features. One recent trend in GCNs is the use of deep network architectures. As opposed to the traditional GCNs which only span around two to five layers deep, modern GCNs now incorporate tens to hundreds of layers with the help of residual connections. From such deep GCNs, we find an important characteristic that they exhibit very high intermediate feature sparsity. We observe that with deep layers and residual connections, the number of zeros in the intermediate features sharply increases. This reveals a new opportunity for accelerators to exploit in GCN executions that was previously not present. In this paper, we propose SGCN, a fast and energy-efficient GCN accelerator which fully exploits the sparse intermediate features of modern GCNs. SGCN suggests several techniques to achieve significantly higher performance and energy efficiency than the existing accelerators. First, SGCN employs a GCN-friendly feature compression format. We focus on reducing the off-chip memory traffic, which often is the bottleneck for GCN executions. Second, we propose microarchitectures for seamlessly handling the compressed feature format. Third, to better handle locality in the existence of the varying sparsity, SGCN employs sparsity-aware cooperation. Sparsity-aware cooperation creates a pattern that exhibits multiple reuse windows, such that the cache can capture diverse sizes of working sets and therefore adapt to the varying level of sparsity. We show that SGCN achieves 1.71x speedup and 43.9% higher energy efficiency compared to the existing accelerators.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge