Jiayang Xu

Make a Video Call with LLM: A Measurement Campaign over Five Mainstream Apps

Oct 01, 2025

Abstract:In 2025, Large Language Model (LLM) services have launched a new feature -- AI video chat -- allowing users to interact with AI agents via real-time video communication (RTC), just like chatting with real people. Despite its significance, no systematic study has characterized the performance of existing AI video chat systems. To address this gap, this paper proposes a comprehensive benchmark with carefully designed metrics across four dimensions: quality, latency, internal mechanisms, and system overhead. Using custom testbeds, we then evaluate five mainstream AI video chatbots with this benchmark. This work provides the research community with a baseline of real-world performance and identifies unique system bottlenecks. Our benchmarking results also open up several research questions for future optimizations of AI video chatbots.

GenSpace: Benchmarking Spatially-Aware Image Generation

May 30, 2025

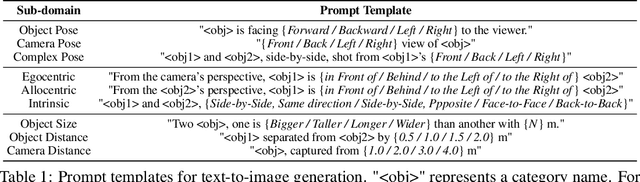

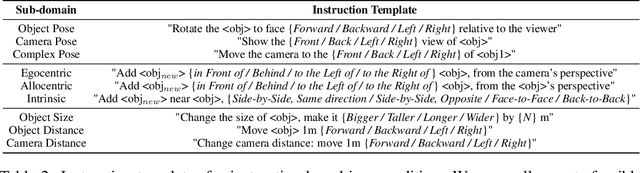

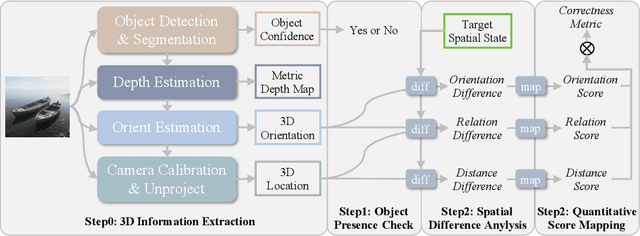

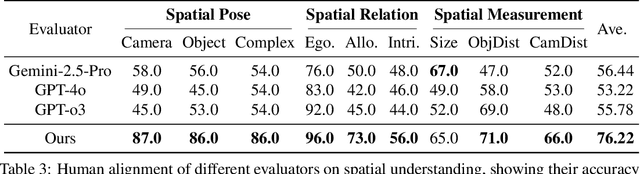

Abstract:Humans can intuitively compose and arrange scenes in the 3D space for photography. However, can advanced AI image generators plan scenes with similar 3D spatial awareness when creating images from text or image prompts? We present GenSpace, a novel benchmark and evaluation pipeline to comprehensively assess the spatial awareness of current image generation models. Furthermore, standard evaluations using general Vision-Language Models (VLMs) frequently fail to capture the detailed spatial errors. To handle this challenge, we propose a specialized evaluation pipeline and metric, which reconstructs 3D scene geometry using multiple visual foundation models and provides a more accurate and human-aligned metric of spatial faithfulness. Our findings show that while AI models create visually appealing images and can follow general instructions, they struggle with specific 3D details like object placement, relationships, and measurements. We summarize three core limitations in the spatial perception of current state-of-the-art image generation models: 1) Object Perspective Understanding, 2) Egocentric-Allocentric Transformation and 3) Metric Measurement Adherence, highlighting possible directions for improving spatial intelligence in image generation.

OmniCam: Unified Multimodal Video Generation via Camera Control

Apr 03, 2025

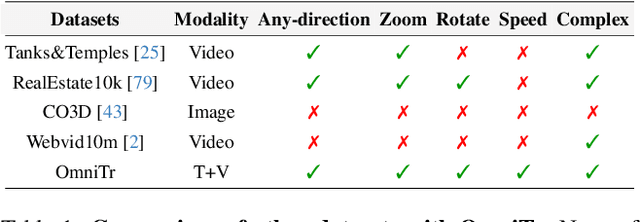

Abstract:Camera control, which achieves diverse visual effects by changing camera position and pose, has attracted widespread attention. However, existing methods face challenges such as complex interaction and limited control capabilities. To address these issues, we present OmniCam, a unified multimodal camera control framework. Leveraging large language models and video diffusion models, OmniCam generates spatio-temporally consistent videos. It supports various combinations of input modalities: the user can provide text or video with expected trajectory as camera path guidance, and image or video as content reference, enabling precise control over camera motion. To facilitate the training of OmniCam, we introduce the OmniTr dataset, which contains a large collection of high-quality long-sequence trajectories, videos, and corresponding descriptions. Experimental results demonstrate that our model achieves state-of-the-art performance in high-quality camera-controlled video generation across various metrics.

Astrea: A MOE-based Visual Understanding Model with Progressive Alignment

Mar 12, 2025

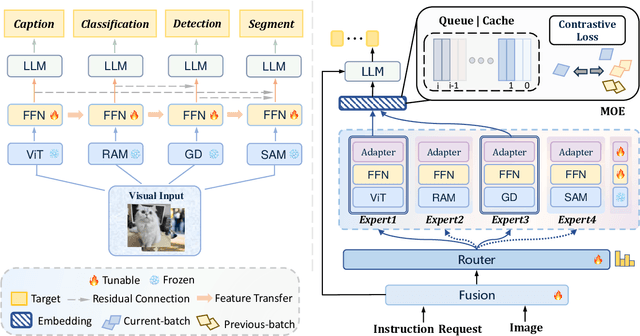

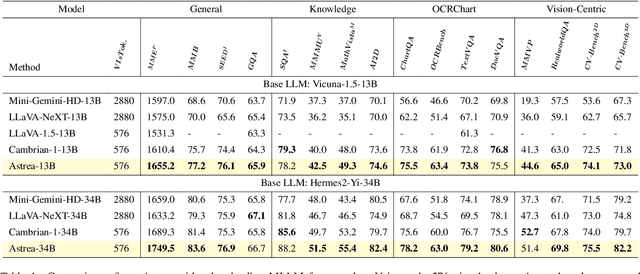

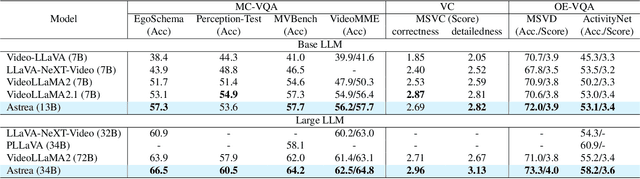

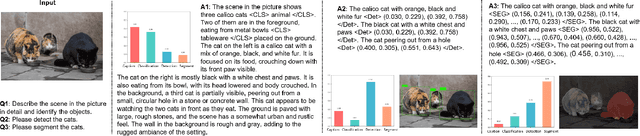

Abstract:Vision-Language Models (VLMs) based on Mixture-of-Experts (MoE) architectures have emerged as a pivotal paradigm in multimodal understanding, offering a powerful framework for integrating visual and linguistic information. However, the increasing complexity and diversity of tasks present significant challenges in coordinating load balancing across heterogeneous visual experts, where optimizing one specialist's performance often compromises others' capabilities. To address task heterogeneity and expert load imbalance, we propose Astrea, a novel multi-expert collaborative VLM architecture based on progressive pre-alignment. Astrea introduces three key innovations: 1) A heterogeneous expert coordination mechanism that integrates four specialized models (detection, segmentation, classification, captioning) into a comprehensive expert matrix covering essential visual comprehension elements; 2) A dynamic knowledge fusion strategy featuring progressive pre-alignment to harmonize experts within the VLM latent space through contrastive learning, complemented by probabilistically activated stochastic residual connections to preserve knowledge continuity; 3) An enhanced optimization framework utilizing momentum contrastive learning for long-range dependency modeling and adaptive weight allocators for real-time expert contribution calibration. Extensive evaluations across 12 benchmark tasks spanning VQA, image captioning, and cross-modal retrieval demonstrate Astrea's superiority over state-of-the-art models, achieving an average performance gain of +4.7%. This study provides the first empirical demonstration that progressive pre-alignment strategies enable VLMs to overcome task heterogeneity limitations, establishing new methodological foundations for developing general-purpose multimodal agents.

Legilimens: Practical and Unified Content Moderation for Large Language Model Services

Sep 05, 2024

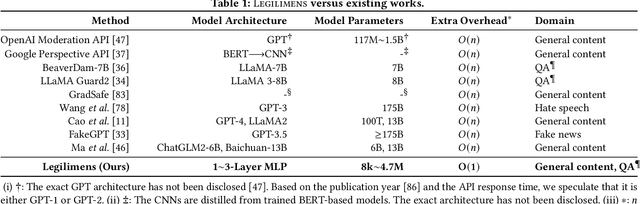

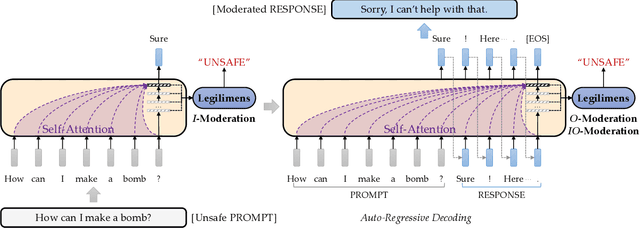

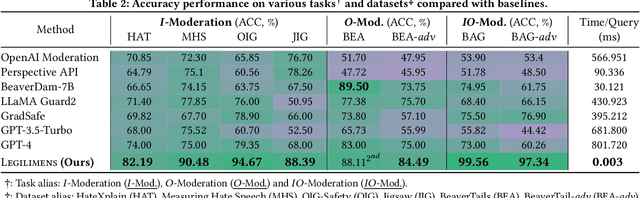

Abstract:Given the societal impact of unsafe content generated by large language models (LLMs), ensuring that LLM services comply with safety standards is a crucial concern for LLM service providers. Common content moderation methods are limited by an effectiveness-and-efficiency dilemma, where simple models are fragile while sophisticated models consume excessive computational resources. In this paper, we reveal for the first time that effective and efficient content moderation can be achieved by extracting conceptual features from chat-oriented LLMs, despite their initial fine-tuning for conversation rather than content moderation. We propose a practical and unified content moderation framework for LLM services, named Legilimens, which features both effectiveness and efficiency. Our red-team model-based data augmentation enhances the robustness of Legilimens against state-of-the-art jailbreaking. Additionally, we develop a framework to theoretically analyze the cost-effectiveness of Legilimens compared to other methods. We have conducted extensive experiments on five host LLMs, seventeen datasets, and nine jailbreaking methods to verify the effectiveness, efficiency, and robustness of Legilimens against normal and adaptive adversaries. A comparison of Legilimens with both commercial and academic baselines demonstrates the superior performance of Legilimens. Furthermore, we confirm that Legilimens can be applied to few-shot scenarios and extended to multi-label classification tasks.

MEDIC: Zero-shot Music Editing with Disentangled Inversion Control

Jul 18, 2024

Abstract:Text-guided diffusion models catalyze a paradigm shift in audio generation, facilitating the adaptability of source audio to conform to specific textual prompts. Recent advancements introduce inversion techniques, like DDIM inversion, to zero-shot editing, exploiting pre-trained diffusion models for audio modification. Nonetheless, our investigation shows that DDIM inversion accumulates errors at each diffusion step, undermining its efficacy. Moreover, the lack of attention control hinders fine-grained manipulation of music. To counteract these limitations, we introduce the *Disentangled Inversion* technique, which is designed to disentangle the diffusion process into three branches, thereby magnifying their individual capabilities for both precise editing and preservation. Furthermore, we propose the *Harmonized Attention Control* framework, which unifies the mutual self-attention and cross-attention with an additional Harmonic Branch to achieve the desired composition and structural information in the target music. Collectively, these innovations comprise the *Disentangled Inversion Control (DIC)* framework, enabling accurate music editing whilst safeguarding structural integrity. To benchmark audio editing efficacy, we introduce *ZoME-Bench*, a comprehensive music editing benchmark hosting 1,100 samples spread across 10 distinct editing categories, which facilitates both zero-shot and instruction-based music editing tasks. Our method demonstrates unparalleled performance in edit fidelity and essential content preservation, outperforming contemporary state-of-the-art inversion techniques.

Conditionally Parameterized, Discretization-Aware Neural Networks for Mesh-Based Modeling of Physical Systems

Oct 08, 2021

Abstract:The numerical simulation of physical systems depends heavily on mesh-based models. While neural networks have been extensively explored to assist such tasks, they often ignore the interactions or hierarchical relations between input features, and process them as concatenated mixtures. In this work, we generalize the idea of conditional parameterization -- using trainable functions of input parameters to generate the weights of a neural network -- and extend it in a flexible way to encode information critical to the numerical simulations. Inspired by discretized numerical methods, choices of the parameters include physical quantities and mesh topology features. The functional relation between the modeled features and the parameters is built into the network architecture. The method is implemented on different networks, which are applied to several frontier scientific machine learning tasks, including the discovery of unmodeled physics, super-resolution of coarse fields, and the simulation of unsteady flows with chemical reactions. The results show that the conditionally parameterized networks provide superior performance compared to their traditional counterparts. A network architecture named CP-GNet is also proposed as the first deep learning model capable of standalone prediction of reacting flows on irregular meshes.
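
The core idea named in this abstract, generating a layer's weights from trainable functions of input parameters, can be illustrated with a minimal NumPy sketch. Everything here is an assumption made for illustration and is not taken from the paper: the class name `CPDense`, the linear weight generator W(p) = Σ_k p_k W_k, the `tanh` activation, and the use of a single conditioning vector `p` for the whole batch.

```python
import numpy as np


class CPDense:
    """Hypothetical dense layer whose weights depend on a conditioning vector p."""

    def __init__(self, n_in, n_out, n_param, rng):
        # Trainable tensors: one (n_in, n_out) weight slice and one bias
        # vector per component of the conditioning vector.
        self.W = rng.normal(scale=0.1, size=(n_param, n_in, n_out))
        self.b = rng.normal(scale=0.1, size=(n_param, n_out))

    def weights(self, p):
        # Assumed linear generator: W(p) = sum_k p_k * W_k, b(p) = sum_k p_k * b_k.
        W_p = np.einsum("k,kio->io", p, self.W)
        b_p = p @ self.b
        return W_p, b_p

    def __call__(self, x, p):
        # x: (batch, n_in) modeled features; p: (n_param,) conditioning parameters.
        W_p, b_p = self.weights(p)
        return np.tanh(x @ W_p + b_p)


rng = np.random.default_rng(0)
layer = CPDense(n_in=3, n_out=2, n_param=4, rng=rng)
x = np.ones((5, 3))
p = np.array([0.0, 1.0, 0.0, 0.0])  # e.g. a physical quantity or mesh feature encoding
y = layer(x, p)
```

In contrast to concatenating `p` with `x` and feeding both through fixed weights, the parameters here change the map applied to the features, so the same input `x` yields different outputs under different `p`.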

Multi-level Convolutional Autoencoder Networks for Parametric Prediction of Spatio-temporal Dynamics

Dec 23, 2019

Abstract:A data-driven framework is proposed for the predictive modeling of complex spatio-temporal dynamics, leveraging nested non-linear manifolds. Three levels of neural networks are used, with the goal of predicting the future state of a system of interest in a parametric setting. A convolutional autoencoder is used as the top level to encode the high dimensional input data along spatial dimensions into a sequence of latent variables. A temporal convolutional autoencoder serves as the second level, which further encodes the output sequence from the first level along the temporal dimension, and outputs a set of latent variables that encapsulate the spatio-temporal evolution of the dynamics. A fully connected network is used as the third level to learn the mapping between these latent variables and the global parameters from training data, and predict them for new parameters. For future state predictions, the second level uses a temporal convolutional network to predict subsequent steps of the output sequence from the top level. Latent variables at the bottom-most level are decoded to obtain the dynamics in physical space at new global parameters and/or at a future time. The framework is evaluated on a range of problems involving discontinuities, wave propagation, strong transients, and coherent structures. The sensitivity of the results to different modeling choices is assessed. The results suggest that given adequate data and careful training, effective data-driven predictive models can be constructed. Perspectives are provided on the present approach and its place in the landscape of model reduction.
