Jianbing Shen

Towards Geometry-Aware and Motion-Guided Video Human Mesh Recovery

Jan 29, 2026

Abstract:Existing video-based 3D Human Mesh Recovery (HMR) methods often produce physically implausible results, stemming from their reliance on flawed intermediate 3D pose anchors and their inability to effectively model complex spatiotemporal dynamics. To overcome these deep-rooted architectural problems, we introduce HMRMamba, a new paradigm for HMR that pioneers the use of Structured State Space Models (SSMs) for their efficiency and long-range modeling prowess. Our framework is distinguished by two core contributions. First, the Geometry-Aware Lifting Module, featuring a novel dual-scan Mamba architecture, creates a robust foundation for reconstruction. It directly grounds the 2D-to-3D pose lifting process with geometric cues from image features, producing a highly reliable 3D pose sequence that serves as a stable anchor. Second, the Motion-guided Reconstruction Network leverages this anchor to explicitly process kinematic patterns over time. By injecting this crucial temporal awareness, it significantly enhances the final mesh's coherence and robustness, particularly under occlusion and motion blur. Comprehensive evaluations on 3DPW, MPI-INF-3DHP, and Human3.6M benchmarks confirm that HMRMamba sets a new state-of-the-art, outperforming existing methods in both reconstruction accuracy and temporal consistency while offering superior computational efficiency.

From Human Intention to Action Prediction: A Comprehensive Benchmark for Intention-driven End-to-End Autonomous Driving

Dec 13, 2025

Abstract:Current end-to-end autonomous driving systems operate at a level of intelligence akin to following simple steering commands. However, achieving genuinely intelligent autonomy requires a paradigm shift: moving from merely executing low-level instructions to understanding and fulfilling high-level, abstract human intentions. This leap from a command-follower to an intention-fulfiller, as illustrated in our conceptual framework, is hindered by a fundamental challenge: the absence of a standardized benchmark to measure and drive progress on this complex task. To address this critical gap, we introduce Intention-Drive, the first comprehensive benchmark designed to evaluate the ability to translate high-level human intent into safe and precise driving actions. Intention-Drive features two core contributions: (1) a new dataset of complex scenarios paired with corresponding natural language intentions, and (2) a novel evaluation protocol centered on the Intent Success Rate (ISR), which assesses the semantic fulfillment of the human's goal beyond simple geometric accuracy. Through an extensive evaluation of a spectrum of baseline models on Intention-Drive, we reveal a significant performance deficit, showing that the baseline models struggle to achieve the comprehensive scene and intention understanding required for this advanced task.

TransBridge: Boost 3D Object Detection by Scene-Level Completion with Transformer Decoder

Dec 12, 2025

Abstract:3D object detection is essential in autonomous driving, providing vital information about moving objects and obstacles. Detecting objects in distant regions with only a few LiDAR points is still a challenge, and numerous strategies have been developed to address point cloud sparsity through densification. This paper presents a joint completion and detection framework that improves the detection feature in sparse areas while keeping costs unchanged. Specifically, we propose TransBridge, a novel transformer-based up-sampling block that fuses the features from the detection and completion networks. The detection network can benefit from acquiring implicit completion features derived from the completion network. Additionally, we design the Dynamic-Static Reconstruction (DSRecon) module to produce dense LiDAR data for the completion network, meeting the requirement for dense point cloud ground truth. Furthermore, we employ the transformer mechanism to establish connections between channels and spatial relations, resulting in a high-resolution feature map used for completion purposes. Extensive experiments on the nuScenes and Waymo datasets demonstrate the effectiveness of the proposed framework. The results show that our framework consistently improves end-to-end 3D object detection, with mean average precision (mAP) gains ranging from 0.7 to 1.5 points across multiple methods, indicating its generalization ability. For the two-stage detection framework, it also boosts the mAP by up to 5.78 points.

Sim4Seg: Boosting Multimodal Multi-disease Medical Diagnosis Segmentation with Region-Aware Vision-Language Similarity Masks

Nov 10, 2025

Abstract:Despite significant progress in pixel-level medical image analysis, existing medical image segmentation models rarely explore medical segmentation and diagnosis tasks jointly. However, it is crucial for patients that models can provide explainable diagnoses along with medical segmentation results. In this paper, we introduce a medical vision-language task named Medical Diagnosis Segmentation (MDS), which aims to understand clinical queries for medical images and generate the corresponding segmentation masks as well as diagnostic results. To facilitate this task, we first present the Multimodal Multi-disease Medical Diagnosis Segmentation (M3DS) dataset, containing diverse multimodal multi-disease medical images paired with their corresponding segmentation masks and diagnosis chain-of-thought, created via an automated diagnosis chain-of-thought generation pipeline. Moreover, we propose Sim4Seg, a novel framework that improves the performance of diagnosis segmentation by taking advantage of the Region-Aware Vision-Language Similarity to Mask (RVLS2M) module. To improve overall performance, we investigate a test-time scaling strategy for MDS tasks. Experimental results demonstrate that our method outperforms the baselines in both segmentation and diagnosis.

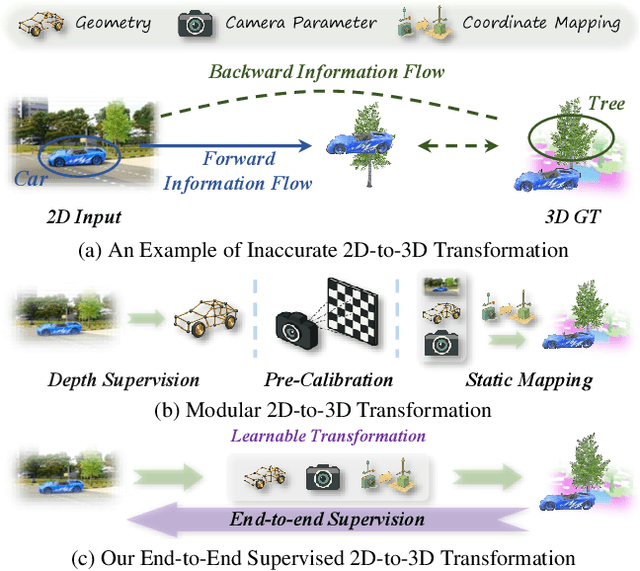

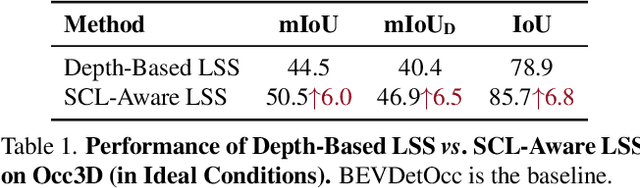

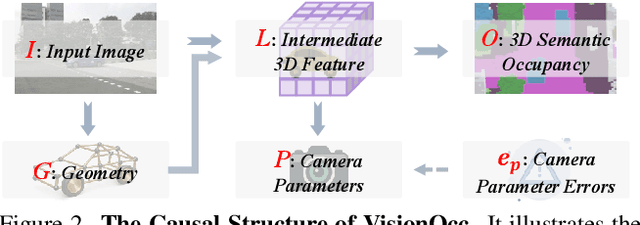

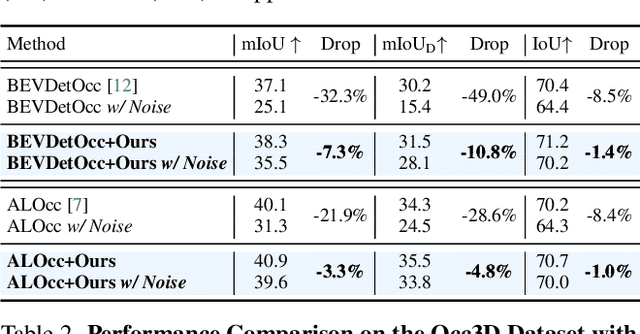

Semantic Causality-Aware Vision-Based 3D Occupancy Prediction

Sep 10, 2025

Abstract:Vision-based 3D semantic occupancy prediction is a critical task in 3D vision that integrates volumetric 3D reconstruction with semantic understanding. Existing methods, however, often rely on modular pipelines. These modules are typically optimized independently or use pre-configured inputs, leading to cascading errors. In this paper, we address this limitation by designing a novel causal loss that enables holistic, end-to-end supervision of the modular 2D-to-3D transformation pipeline. Grounded in the principle of 2D-to-3D semantic causality, this loss regulates the gradient flow from 3D voxel representations back to the 2D features. Consequently, it renders the entire pipeline differentiable, unifying the learning process and making previously non-trainable components fully learnable. Building on this principle, we propose the Semantic Causality-Aware 2D-to-3D Transformation, which comprises three components guided by our causal loss: Channel-Grouped Lifting for adaptive semantic mapping, Learnable Camera Offsets for enhanced robustness against camera perturbations, and Normalized Convolution for effective feature propagation. Extensive experiments demonstrate that our method achieves state-of-the-art performance on the Occ3D benchmark, demonstrating significant robustness to camera perturbations and improved 2D-to-3D semantic consistency.
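The abstract names Normalized Convolution as the feature-propagation component but does not spell out its form. As a rough illustration only, the sketch below shows the classical one-dimensional version of normalized convolution, where each sample carries a confidence weight and the filtered signal is divided by the filtered confidence. The function name, the `eps` guard, and the zero fallback for unsupported positions are assumptions made for this example, not details from the paper.

```python
def normalized_convolution_1d(values, confidence, kernel, eps=1e-8):
    """Classical normalized convolution on a 1D signal (illustrative sketch).

    values:     list of floats, possibly with unreliable or missing entries
    confidence: list of non-negative weights, 0 marks a missing entry
    kernel:     odd-length list of non-negative filter weights
    """
    if len(values) != len(confidence):
        raise ValueError("values and confidence must have the same length")
    if len(kernel) % 2 == 0:
        raise ValueError("kernel length must be odd")

    half = len(kernel) // 2
    n = len(values)
    out = []
    for i in range(n):
        num = 0.0  # kernel applied to confidence-weighted values
        den = 0.0  # kernel applied to the confidence alone
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < n:
                num += w * confidence[j] * values[j]
                den += w * confidence[j]
        # Assumed fallback: emit 0.0 where no confident neighbor exists.
        out.append(num / den if den > eps else 0.0)
    return out


# The middle sample is missing (confidence 0) and is filled from its neighbors.
filled = normalized_convolution_1d([1.0, 0.0, 3.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0])
```

Dividing by the filtered confidence is what lets sparse inputs propagate into empty neighbors without being diluted by the missing entries.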

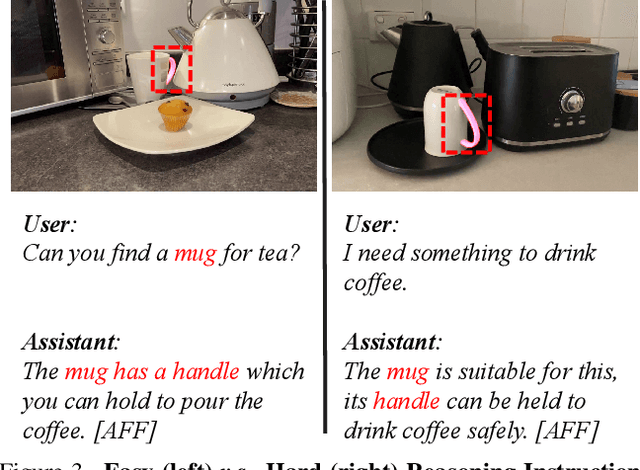

RAGNet: Large-scale Reasoning-based Affordance Segmentation Benchmark towards General Grasping

Jul 31, 2025

Abstract:General robotic grasping systems require accurate object affordance perception in diverse open-world scenarios following human instructions. However, current studies lack reasoning-based large-scale affordance prediction data, which raises considerable concern about their open-world effectiveness. To address this limitation, we build a large-scale grasping-oriented affordance segmentation benchmark with human-like instructions, named RAGNet. It contains 273k images, 180 categories, and 26k reasoning instructions. The images cover diverse embodied data domains, such as wild, robot, ego-centric, and even simulation data. They are carefully annotated with an affordance map, while the difficulty of language instructions is largely increased by removing their category name and only providing functional descriptions. Furthermore, we propose a comprehensive affordance-based grasping framework, named AffordanceNet, which consists of a VLM pre-trained on our massive affordance data and a grasping network that conditions on an affordance map to grasp the target. Extensive experiments on affordance segmentation benchmarks and real-robot manipulation tasks show that our model has a powerful open-world generalization ability. Our data and code are available at https://github.com/wudongming97/AffordanceNet.

MAM: Modular Multi-Agent Framework for Multi-Modal Medical Diagnosis via Role-Specialized Collaboration

Jun 24, 2025

Abstract:Recent advancements in medical Large Language Models (LLMs) have showcased their powerful reasoning and diagnostic capabilities. Despite their success, current unified multimodal medical LLMs face limitations in knowledge update costs, comprehensiveness, and flexibility. To address these challenges, we introduce the Modular Multi-Agent Framework for Multi-Modal Medical Diagnosis (MAM). Inspired by our empirical findings highlighting the benefits of role assignment and diagnostic discernment in LLMs, MAM decomposes the medical diagnostic process into specialized roles: a General Practitioner, Specialist Team, Radiologist, Medical Assistant, and Director, each embodied by an LLM-based agent. This modular and collaborative framework enables efficient knowledge updates and leverages existing medical LLMs and knowledge bases. Extensive experimental evaluations conducted on a wide range of publicly accessible multimodal medical datasets, incorporating text, image, audio, and video modalities, demonstrate that MAM consistently surpasses the performance of modality-specific LLMs. Notably, MAM achieves significant performance improvements ranging from 18% to 365% compared to baseline models. Our code is released at https://github.com/yczhou001/MAM.

Self-Rewarding Large Vision-Language Models for Optimizing Prompts in Text-to-Image Generation

May 22, 2025

Abstract:Text-to-image models are powerful for producing high-quality images based on given text prompts, but crafting these prompts often requires specialized vocabulary. To address this, existing methods train rewriting models with supervision from large amounts of manually annotated data and trained aesthetic assessment models. To alleviate the dependence on data scale for model training and the biases introduced by trained models, we propose a novel prompt optimization framework, designed to rephrase a simple user prompt into a sophisticated prompt for a text-to-image model. Specifically, we employ large vision-language models (LVLMs) as the solver to rewrite the user prompt, and concurrently employ LVLMs as a reward model to score the aesthetics and alignment of the images generated by the optimized prompt. Instead of laborious human feedback, we exploit the prior knowledge of the LVLM to provide rewards, i.e., AI feedback. Simultaneously, the solver and the reward model are unified into one model and iterated in reinforcement learning to achieve self-improvement by giving a solution and judging itself. Results on two popular datasets demonstrate that our method outperforms other strong competitors.

Towards Better Cephalometric Landmark Detection with Diffusion Data Generation

May 09, 2025

Abstract:Cephalometric landmark detection is essential for orthodontic diagnostics and treatment planning. Nevertheless, the scarcity of samples in data collection and the extensive effort required for manual annotation have significantly impeded the availability of diverse datasets. This limitation has restricted the effectiveness of deep learning-based detection methods, particularly those based on large-scale vision models. To address these challenges, we have developed an innovative data generation method capable of producing diverse cephalometric X-ray images along with corresponding annotations without human intervention. To achieve this, our approach begins by constructing new cephalometric landmark annotations using anatomical priors. Then, we employ a diffusion-based generator to create realistic X-ray images that correspond closely with these annotations. To achieve precise control in producing samples with different attributes, we introduce a novel prompt cephalometric X-ray image dataset. This dataset includes real cephalometric X-ray images and detailed medical text prompts describing the images. By leveraging these detailed prompts, our method improves the generation process to control different styles and attributes. Facilitated by the large, diverse generated data, we introduce large-scale vision detection models into the cephalometric landmark detection task to improve accuracy. Experimental results demonstrate that training with the generated data substantially enhances the performance. Compared to methods without using the generated data, our approach improves the Success Detection Rate (SDR) by 6.5%, attaining a notable 82.2%. All code and data are available at: https://um-lab.github.io/cepha-generation
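For readers unfamiliar with the metric, the Success Detection Rate is usually computed as the fraction of landmarks whose predicted position falls within a fixed radius of the ground truth. The abstract does not state the radius or the pixel spacing behind the 82.2% figure, so the 2 mm default and the `mm_per_pixel` parameter in this minimal sketch are assumptions, and the function name is hypothetical.

```python
import math


def success_detection_rate(pred, truth, radius_mm=2.0, mm_per_pixel=1.0):
    """Fraction of landmarks predicted within radius_mm of the ground truth.

    pred, truth:  equal-length lists of (x, y) landmark positions in pixels
    radius_mm:    assumed success threshold (2 mm is a common choice)
    mm_per_pixel: assumed image spacing used to convert pixels to millimetres
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    if not pred:
        raise ValueError("at least one landmark is required")

    hits = 0
    for (px, py), (tx, ty) in zip(pred, truth):
        error_mm = math.hypot(px - tx, py - ty) * mm_per_pixel
        if error_mm <= radius_mm:
            hits += 1
    return hits / len(pred)


# Radial errors of 1, 3, 0 and 5 pixels; two of the four fall within 2 mm at 1 mm/pixel.
predicted = [(0, 0), (10, 10), (5, 5), (3, 4)]
annotated = [(0, 1), (10, 13), (5, 5), (0, 0)]
sdr = success_detection_rate(predicted, annotated)
```

Because the result depends directly on the chosen radius, SDR values are only comparable when reported at the same threshold.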

Geometry-aware Temporal Aggregation Network for Monocular 3D Lane Detection

Apr 29, 2025

Abstract:Monocular 3D lane detection aims to estimate the 3D position of lanes from frontal-view (FV) images. However, current monocular 3D lane detection methods suffer from two limitations: inaccurate geometric information of the predicted 3D lanes and difficulties in maintaining lane integrity. To address these issues, we seek to fully exploit the potential of multiple input frames. First, we aim at enhancing the ability to perceive the geometry of scenes by leveraging temporal geometric consistency. Second, we strive to improve the integrity of lanes by revealing more instance information from temporal sequences. Therefore, we propose a novel Geometry-aware Temporal Aggregation Network (GTA-Net) for monocular 3D lane detection. On one hand, we develop the Temporal Geometry Enhancement Module (TGEM), which exploits geometric consistency across successive frames, facilitating effective geometry perception. On the other hand, we present the Temporal Instance-aware Query Generation (TIQG), which strategically incorporates temporal cues into query generation, thereby enabling the exploration of comprehensive instance information. Experiments demonstrate that our GTA-Net achieves SoTA results, surpassing existing monocular 3D lane detection solutions.
