Guodong Long

Bi-level Personalization for Federated Foundation Models: A Task-vector Aggregation Approach

Sep 16, 2025

Abstract:Federated foundation models represent a new paradigm to jointly fine-tune pre-trained foundation models across clients. It is still a challenge to fine-tune foundation models for a small group of new users or specialized scenarios, which typically involve limited data compared to the large-scale data used in pre-training. In this context, the trade-off between personalization and federation becomes more sensitive. To tackle these challenges, we propose a bi-level personalization framework for federated fine-tuning on foundation models. Specifically, we conduct personalized fine-tuning at the client level using each client's private data, and then conduct a personalized aggregation at the server level using similar users, as measured by client-specific task vectors. Given the personalization information gained from client-level fine-tuning, the server-level personalized aggregation can gain group-wise personalization information while mitigating the disturbance from irrelevant clients or clients with conflicting interests and non-IID data. The effectiveness of the proposed algorithm has been demonstrated by extensive experimental analysis on benchmark datasets.
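
The server-level step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the cosine similarity measure, the `top_k` neighbour selection, the similarity-weighted average, and all function names are assumptions made for illustration. The only ideas taken from the abstract are that a task vector captures what client-level fine-tuning changed and that each client is aggregated with similar clients only.

```python
import math

def task_vector(finetuned, pretrained):
    # Task vector: fine-tuned weights minus the shared pre-trained weights.
    return [f - p for f, p in zip(finetuned, pretrained)]

def cosine(u, v):
    # Cosine similarity (an assumed choice of similarity measure).
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0

def personalized_aggregate(pretrained, client_weights, top_k=2):
    # Hypothetical server step: for each client, average the task vectors of
    # its top_k most similar clients (itself included), weighted by similarity.
    vectors = [task_vector(w, pretrained) for w in client_weights]
    personalized = []
    for i, v_i in enumerate(vectors):
        sims = [(cosine(v_i, v_j), j) for j, v_j in enumerate(vectors)]
        ranked = sorted(sims, reverse=True)[:top_k]
        # Drop dissimilar or conflicting clients (non-positive similarity).
        neighbours = [(s, j) for s, j in ranked if s > 0]
        if not neighbours:
            # Fall back to the client's own update (e.g. a zero task vector).
            neighbours = [(1.0, i)]
        total = sum(s for s, _ in neighbours)
        merged = [
            sum(s * vectors[j][d] for s, j in neighbours) / total
            for d in range(len(pretrained))
        ]
        personalized.append([p + m for p, m in zip(pretrained, merged)])
    return personalized

if __name__ == "__main__":
    pretrained = [0.0, 0.0]
    clients = [[1.0, 0.0], [0.9, 0.1], [-1.0, 0.0]]
    for model in personalized_aggregate(pretrained, clients):
        print(model)
```

In this toy run the third client's update points the opposite way from the other two, so it is aggregated only with itself, while the first two clients share information.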

FeDaL: Federated Dataset Learning for Time Series Foundation Models

Aug 06, 2025

Abstract:Dataset-wise heterogeneity introduces significant domain biases that fundamentally degrade the generalization of Time Series Foundation Models (TSFMs), yet this challenge remains underexplored. This paper rethinks the development of TSFMs using the paradigm of federated learning. We propose a novel Federated Dataset Learning (FeDaL) approach to tackle heterogeneous time series by learning dataset-agnostic temporal representations. Specifically, the distributed architecture of federated learning is a natural way to decompose heterogeneous TS datasets into shared generalized knowledge and preserved personalized knowledge. Moreover, based on the TSFM architecture, FeDaL explicitly mitigates both local and global biases by adding two complementary mechanisms: Domain Bias Elimination (DBE) and Global Bias Elimination (GBE). FeDaL's cross-dataset generalization has been extensively evaluated on real-world datasets spanning eight tasks, including both representation learning and downstream time series analysis, against 54 baselines. We further analyze federated scaling behavior, showing how data volume, client count, and join rate affect model performance under decentralization.

Learn to Preserve Personality: Federated Foundation Models in Recommendations

Jun 13, 2025

Abstract:A core learning challenge for existing Foundation Models (FM) is striking a trade-off between generalization and personalization, a dilemma that has been highlighted by various parameter-efficient adaptation techniques. Federated foundation models (FFM) provide a structural means to decouple shared knowledge from individual-specific adaptations via decentralized processes. Recommendation systems offer a perfect testbed for FFMs, given their reliance on rich implicit feedback reflecting unique user characteristics. This position paper discusses a novel learning paradigm where FFMs not only harness their generalization capabilities but are specifically designed to preserve the integrity of user personality, illustrated thoroughly within recommendation contexts. We envision future personal agents, powered by personalized adaptive FMs, guiding user decisions on content. Such an architecture promises a user-centric, decentralized system where individuals maintain control over their personalized agents.

Federated Adapter on Foundation Models: An Out-Of-Distribution Approach

May 02, 2025

Abstract:As foundation models gain prominence, Federated Foundation Models (FedFM) have emerged as a privacy-preserving approach to collaboratively fine-tune models in federated learning (FL) frameworks using distributed datasets across clients. A key challenge for FedFM, given the versatile nature of foundation models, is addressing out-of-distribution (OOD) generalization, where unseen tasks or clients may exhibit distribution shifts leading to suboptimal performance. Although numerous studies have explored OOD generalization in conventional FL, these methods are inadequate for FedFM due to the challenges posed by large parameter scales and increased data heterogeneity. To address these challenges, we propose FedOA, which employs adapter-based parameter-efficient fine-tuning methods for efficacy and introduces personalized adapters with feature distance-based regularization to align distributions and guarantee OOD generalization for each client. Theoretically, we demonstrate that the conventional aggregated global model in FedFM inherently retains OOD generalization capabilities, and our proposed method enhances the personalized model's OOD generalization through regularization informed by the global model, with proven convergence under general non-convex settings. Empirically, the effectiveness of the proposed method is validated on benchmark datasets across various NLP tasks.

WALL-E 2.0: World Alignment by NeuroSymbolic Learning improves World Model-based LLM Agents

Apr 22, 2025

Abstract:Can we build accurate world models out of large language models (LLMs)? How can world models benefit LLM agents? The gap between the prior knowledge of LLMs and the specified environment's dynamics usually bottlenecks LLMs' performance as world models. To bridge the gap, we propose a training-free "world alignment" that learns an environment's symbolic knowledge complementary to LLMs. The symbolic knowledge covers action rules, knowledge graphs, and scene graphs, which are extracted by LLMs from exploration trajectories and encoded into executable code to regulate LLM agents' policies. We further propose an RL-free, model-based agent "WALL-E 2.0" through the model-predictive control (MPC) framework. Unlike classical MPC, which requires costly optimization on the fly, we adopt an LLM agent as an efficient look-ahead optimizer of future steps' actions by interacting with the neurosymbolic world model. While the LLM agent's strong heuristics make it an efficient planner in MPC, the quality of its planned actions is also secured by the accurate predictions of the aligned world model. Together, they considerably improve learning efficiency in a new environment. On open-world challenges in Mars (Minecraft-like) and ALFWorld (embodied indoor environments), WALL-E 2.0 significantly outperforms existing methods, e.g., surpassing baselines in Mars by 16.1%-51.6% in success rate and by at least 61.7% in score. In ALFWorld, it achieves a new record success rate of 98% after only 4 iterations.

FedMerge: Federated Personalization via Model Merging

Apr 09, 2025

Abstract:One global model in federated learning (FL) might not be sufficient to serve many clients with non-IID tasks and distributions. While there have been advances in FL to train multiple global models for better personalization, they only provide limited choices to clients, so local finetuning is still indispensable. In this paper, we propose a novel "FedMerge" approach that can create a personalized model per client by simply merging multiple global models with automatically optimized and customized weights. In FedMerge, a few global models can serve many non-IID clients, even without further local finetuning. We formulate this problem as a joint optimization of global models and the merging weights for each client. Unlike existing FL approaches where the server broadcasts one or multiple global models to all clients, the server in FedMerge only needs to send a customized, merged model to each client. Moreover, instead of periodically interrupting the local training and re-initializing it to a global model, the merged model aligns better with each client's task and data distribution, smoothing the local-global gap between consecutive rounds caused by client drift. We evaluate FedMerge on three different non-IID settings applied to different domains with diverse tasks and data types, in which FedMerge consistently outperforms existing FL approaches, including clustering-based and mixture-of-experts (MoE) based methods.
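
The merging step this abstract describes, building one personalized model per client from a few global models, might look roughly like the sketch below. It assumes flat parameter lists and softmax-normalized per-client weights; both choices and the function names are illustrative, not taken from the paper. The joint optimization of the global models and merging weights is not shown, so the logits here are simply given.

```python
import math

def softmax(logits):
    # Numerically stable softmax so merging weights are positive and sum to 1
    # (the normalization is an assumption, not stated in the abstract).
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def merge_for_client(global_models, client_logits):
    # Hypothetical server-side merge: a weighted sum of the global models
    # using this client's own merging weights.
    weights = softmax(client_logits)
    dim = len(global_models[0])
    return [
        sum(w * model[d] for w, model in zip(weights, global_models))
        for d in range(dim)
    ]

if __name__ == "__main__":
    global_models = [[0.0, 2.0], [4.0, 6.0]]
    # Equal logits give an equal-weight average of the two global models.
    print(merge_for_client(global_models, [0.0, 0.0]))
    # A strongly skewed logit vector makes the merge close to the first model.
    print(merge_for_client(global_models, [50.0, -50.0]))
```

Under this reading, the server keeps only a few global models plus a small weight vector per client, and each client receives a single merged model rather than the full set.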

DPC: Dual-Prompt Collaboration for Tuning Vision-Language Models

Mar 17, 2025

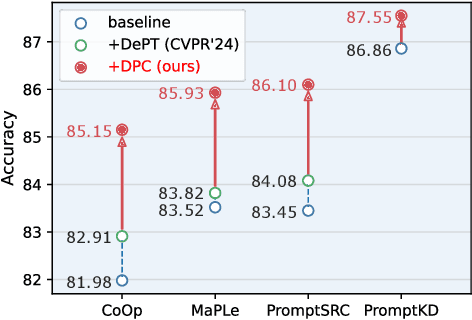

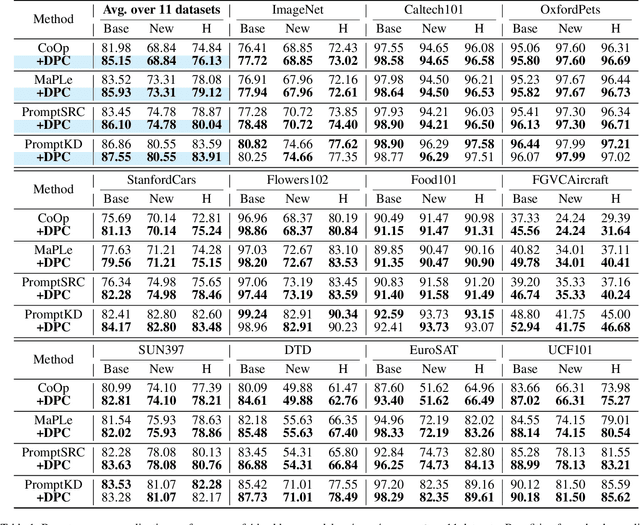

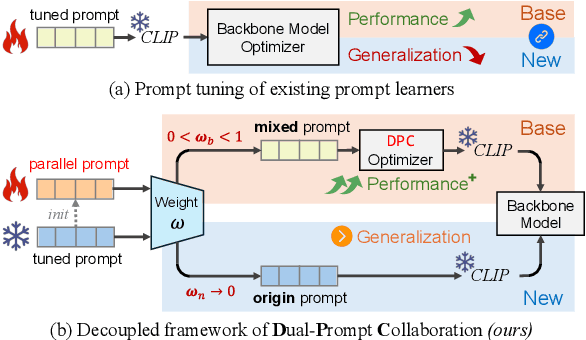

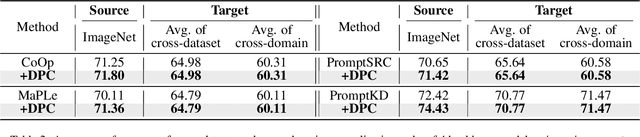

Abstract:The Base-New Trade-off (BNT) problem universally exists during the optimization of CLIP-based prompt tuning, where continuous fine-tuning on base (target) classes leads to a simultaneous decrease of generalization ability on new (unseen) classes. Existing approaches attempt to regulate the prompt tuning process to balance BNT by appending constraints. However, imposed on the same target prompt, these constraints fail to fully avert the mutual exclusivity between the optimization directions for base and new classes. As a novel solution to this challenge, we propose the plug-and-play Dual-Prompt Collaboration (DPC) framework, the first to decouple the optimization processes of base and new tasks at the prompt level. Specifically, we clone a learnable parallel prompt based on the backbone prompt, and introduce a variable Weighting-Decoupling framework to independently control the optimization directions of dual prompts specific to base or new tasks, thus avoiding the conflict in generalization. Meanwhile, we propose a Dynamic Hard Negative Optimizer, utilizing dual prompts to construct a more challenging optimization task on base classes for enhancement. For interpretability, we prove the feature channel invariance of the prompt vector during the optimization process, providing theoretical support for the Weighting-Decoupling of DPC. Extensive experiments on multiple backbones demonstrate that DPC can significantly improve base performance without introducing any external knowledge beyond the base classes, while maintaining generalization to new classes. Code is available at: https://github.com/JREion/DPC.

Multifaceted User Modeling in Recommendation: A Federated Foundation Models Approach

Dec 22, 2024

Abstract:Multifaceted user modeling aims to uncover fine-grained patterns and learn representations from user data, revealing their diverse interests and characteristics, such as profile, preference, and personality. Recent studies on foundation model-based recommendation have emphasized the Transformer architecture's remarkable ability to capture complex, non-linear user-item interaction relationships. This paper aims to advance foundation model-based recommender systems by introducing enhancements to multifaceted user modeling capabilities. We propose a novel Transformer layer designed specifically for recommendation, using the self-attention mechanism to capture sequential user-item interaction patterns. Specifically, we design a group gating network to identify user groups, enabling hierarchical discovery across different layers, thereby capturing the multifaceted nature of user interests through multiple Transformer layers. Furthermore, to broaden the data scope and further enhance multifaceted user modeling, we extend the framework to a federated setting, enabling the use of private datasets while ensuring privacy. Experimental validations on benchmark datasets demonstrate the superior performance of our proposed method. Code is available.

Federated Foundation Models on Heterogeneous Time Series

Dec 12, 2024

Abstract:Training general-purpose time series foundation models from scratch, with robust generalization capabilities across diverse applications, is still an open challenge. Efforts are primarily focused on fusing cross-domain time series datasets to extract shared subsequences as tokens for training models on the Transformer architecture. However, due to significant statistical heterogeneity across domains, this cross-domain fusing approach does not work as effectively as it does for text and images. To tackle this challenge, this paper proposes a novel federated learning approach, namely FFTS, to address the heterogeneity in training time series foundation models. Specifically, each data-holding organization is treated as an independent client in a collaborative learning framework with federated settings, and client-specific local models are trained to preserve the unique characteristics of each dataset. Moreover, a new regularization mechanism is applied on both the client side and the server side to align the shared knowledge across heterogeneous datasets from different domains. Extensive experiments on benchmark datasets demonstrate the effectiveness of the proposed federated learning approach. The newly learned time series foundation models achieve superior generalization capabilities on cross-domain time series analysis tasks, including forecasting, imputation, and anomaly detection.

DMGNN: Detecting and Mitigating Backdoor Attacks in Graph Neural Networks

Oct 18, 2024

Abstract:Recent studies have revealed that GNNs are highly susceptible to multiple adversarial attacks. Among these, graph backdoor attacks pose one of the most prominent threats, where attackers cause models to misclassify by learning the backdoored features with injected triggers and modified target labels during the training phase. Based on the features of the triggers, these attacks can be categorized into out-of-distribution (OOD) and in-distribution (ID) graph backdoor attacks: triggers with notable differences from the clean sample feature distributions constitute OOD backdoor attacks, whereas the triggers in ID backdoor attacks are nearly identical to the clean sample feature distributions. Existing methods can successfully defend against OOD backdoor attacks by comparing the feature distribution of triggers and clean samples but fail to mitigate stealthy ID backdoor attacks. Due to the lack of proper supervision signals, the main task accuracy is negatively affected when defending against ID backdoor attacks. To bridge this gap, we propose DMGNN, a defense against both OOD and ID graph backdoor attacks that eliminates trigger stealthiness to guarantee defense effectiveness and improve model performance. Specifically, DMGNN can easily identify the hidden ID and OOD triggers by predicting label transitions based on counterfactual explanation. To further filter the diversity of generated explainable graphs and erase the influence of the trigger features, we present a reverse sampling pruning method to screen and discard the triggers directly at the data level. Extensive experimental evaluations on open graph datasets demonstrate that DMGNN far outperforms the state-of-the-art (SOTA) defense methods, reducing the attack success rate to 5% with almost negligible degradation in model performance (within 3.5%).
