Gunar Schirner

Grasp-HGN: Grasping the Unexpected

Aug 11, 2025

Abstract:For transradial amputees, robotic prosthetic hands promise to regain the capability to perform daily living activities. To advance next-generation prosthetic hand control design, it is crucial to address current shortcomings in robustness to out-of-lab artifacts, and generalizability to new environments. Due to the fixed number of objects to interact with in existing datasets, contrasted with the virtually infinite variety of objects encountered in the real world, current grasp models perform poorly on unseen objects, negatively affecting users' independence and quality of life. To address this: (i) we define semantic projection, the ability of a model to generalize to unseen object types, and show that conventional models like YOLO, despite 80% training accuracy, drop to 15% on unseen objects. (ii) we propose Grasp-LLaVA, a Grasp Vision Language Model enabling human-like reasoning to infer the suitable grasp type estimate based on the object's physical characteristics, resulting in a significant 50.2% accuracy over unseen object types compared to 36.7% accuracy of an SOTA grasp estimation model. Lastly, to bridge the performance-latency gap, we propose Hybrid Grasp Network (HGN), an edge-cloud deployment infrastructure enabling fast grasp estimation on edge and accurate cloud inference as a fail-safe, effectively expanding the latency vs. accuracy Pareto. HGN with confidence calibration (DC) enables dynamic switching between edge and cloud models, improving semantic projection accuracy by 5.6% (to 42.3%) with 3.5x speedup over the unseen object types. Over a real-world sample mix, it reaches 86% average accuracy (12.2% gain over edge-only), and 2.2x faster inference than Grasp-LLaVA alone.
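The dynamic edge/cloud switching described in the abstract can be illustrated with a minimal sketch. It assumes the edge model returns a calibrated probability per grasp type and that the cloud model is called only when the top edge probability falls below a threshold. The function name `route_grasp`, the `cloud_model` callable, and the default threshold of 0.8 are hypothetical and are not taken from the paper.

```python
from typing import Callable, Sequence, Tuple


def route_grasp(
    edge_probs: Sequence[float],
    cloud_model: Callable[[], int],
    threshold: float = 0.8,
) -> Tuple[int, str]:
    """Pick a grasp type from the edge model, falling back to the cloud.

    edge_probs  -- assumed calibrated class probabilities from the edge model
    cloud_model -- hypothetical callable returning the cloud model's grasp index
    threshold   -- assumed confidence cutoff; not a value from the paper
    """
    if not edge_probs:
        raise ValueError("edge_probs must contain at least one probability")

    # Index of the most confident edge prediction.
    best = max(range(len(edge_probs)), key=lambda i: edge_probs[i])

    # Confident enough: answer locally and skip the slower cloud call.
    if edge_probs[best] >= threshold:
        return best, "edge"

    # Otherwise defer to the (slower, more accurate) cloud model.
    return cloud_model(), "cloud"
```

With this rule, a lower threshold sends fewer samples to the cloud (faster on average), while a higher threshold sends more (slower, but relying more on the stronger model).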

Mitigation of Radar Range Deception Jamming Using Random Finite Sets

Aug 21, 2024

Abstract:This paper presents a radar target tracking framework for addressing main-beam range deception jamming attacks using random finite sets (RFSs). Our system handles false alarms and detections with false range information through multiple hypothesis tracking (MHT) to resolve data association uncertainties. We focus on range gate pull-off (RGPO) attacks, where the attacker adds positive delays to the radar pulse, thereby mimicking the target trajectory while appearing at a larger distance from the radar. The proposed framework incorporates knowledge about the spatial behavior of the attack into the assumed RFS clutter model and uses only position information without relying on additional signal features. We present an adaptive solution that estimates the jammer-induced biases to improve tracking accuracy as well as a simpler non-adaptive version that performs well when accurate priors on the jamming range are available. Furthermore, an expression for RGPO attack detection is derived, where the adaptive solution offers superior performance. The presented strategies provide tracking resilience against multiple RGPO attacks in terms of position estimation accuracy and jamming detection without degrading tracking performance in the absence of jamming.
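To make the effect of an RGPO delay concrete, the following small sketch converts an added delay into the extra range at which the false target appears, using the standard two-way radar relation (range offset = c × delay / 2). The function name and the example numbers are illustrative assumptions and do not come from the paper.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def apparent_range_m(true_range_m: float, added_delay_s: float) -> float:
    """Range at which a delayed (deceptive) echo appears to the radar.

    The delay is divided by two because radar range is derived from the
    round-trip travel time of the pulse.
    """
    if added_delay_s < 0:
        raise ValueError("RGPO as described here adds positive delays only")
    return true_range_m + SPEED_OF_LIGHT_M_PER_S * added_delay_s / 2.0
```

For example, an added delay of one microsecond shifts the false target roughly 150 m farther from the radar than the true target.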

Reinforcement Learning-Based Model Matching to Reduce the Sim-Real Gap in COBRA

Jun 19, 2024

Abstract:This paper employs a reinforcement learning-based model identification method aimed at enhancing the accuracy of the dynamics for our snake robot, called COBRA. Leveraging gradient information and iterative optimization, the proposed approach refines the parameters of COBRA's dynamical model such as coefficient of friction and actuator parameters using experimental and simulated data. Experimental validation on the hardware platform demonstrates the efficacy of the proposed approach, highlighting its potential to address the sim-to-real gap in robot implementation.

Dynamic Posture Manipulation During Tumbling for Closed-Loop Heading Angle Control

May 08, 2024

Abstract:Passive tumbling uses natural forces like gravity for efficient travel. But without an active means of control, passive tumblers must rely entirely on external forces. Northeastern University's COBRA is a snake robot that can morph into a ring, which employs passive tumbling to traverse down slopes. However, due to its articulated joints, it is also capable of dynamically altering its posture to manipulate the dynamics of the tumbling locomotion for active steering. This paper presents a modelling and control strategy based on collocation optimization for real-time steering of COBRA's tumbling locomotion. We validate our approach using Matlab simulations.

Loco-Manipulation with Nonimpulsive Contact-Implicit Planning in a Slithering Robot

Apr 12, 2024

Abstract:Object manipulation has been extensively studied in the context of fixed base and mobile manipulators. However, the overactuated locomotion modality employed by snake robots allows for a unique blend of object manipulation through locomotion, referred to as loco-manipulation. The following work presents an optimization approach to solving the loco-manipulation problem based on non-impulsive implicit contact path planning for our snake robot COBRA. We present the mathematical framework and show high-fidelity simulation results and experiments to demonstrate the effectiveness of our approach.

Non-impulsive Contact-Implicit Motion Planning for Morpho-functional Loco-manipulation

Apr 12, 2024

Abstract:Object manipulation has been extensively studied in the context of fixed base and mobile manipulators. However, the overactuated locomotion modality employed by snake robots allows for a unique blend of object manipulation through locomotion, referred to as loco-manipulation. The following work presents an optimization approach to solving the loco-manipulation problem based on non-impulsive implicit contact path planning for our snake robot COBRA. We present the mathematical framework and show high-fidelity simulation results for fixed-shape lateral rolling trajectories that demonstrate the object manipulation.

Multistatic-Radar RCS-Signature Recognition of Aerial Vehicles: A Bayesian Fusion Approach

Mar 08, 2024

Abstract:Radar Automated Target Recognition (RATR) for Unmanned Aerial Vehicles (UAVs) involves transmitting Electromagnetic Waves (EMWs) and performing target type recognition on the received radar echo, crucial for defense and aerospace applications. Previous studies highlighted the advantages of multistatic radar configurations over monostatic ones in RATR. However, fusion methods in multistatic radar configurations often suboptimally combine classification vectors from individual radars probabilistically. To address this, we propose a fully Bayesian RATR framework employing Optimal Bayesian Fusion (OBF) to aggregate classification probability vectors from multiple radars. OBF, based on expected 0-1 loss, updates a Recursive Bayesian Classification (RBC) posterior distribution for target UAV type, conditioned on historical observations across multiple time steps. We evaluate the approach using simulated random walk trajectories for seven drones, correlating target aspect angles to Radar Cross Section (RCS) measurements in an anechoic chamber. Comparing against single radar Automated Target Recognition (ATR) systems and suboptimal fusion methods, our empirical results demonstrate that the OBF method integrated with RBC significantly enhances classification accuracy compared to other fusion methods and single radar configurations.
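The idea of recursively updating a class posterior from several radars' classification probability vectors can be sketched as follows. This is a generic product-rule Bayesian update that assumes the radars' outputs are conditionally independent given the target class and can be treated as likelihood terms. It is not the paper's OBF formulation, and the function name and data layout are hypothetical.

```python
from typing import List, Sequence


def recursive_fusion(
    prior: Sequence[float],
    radar_probs_over_time: Sequence[Sequence[Sequence[float]]],
) -> List[float]:
    """Recursively fuse per-radar class probability vectors.

    prior                 -- initial probability for each target class
    radar_probs_over_time -- [time step][radar][class] probability vectors
    """
    posterior = list(prior)
    for step in radar_probs_over_time:
        # Multiply in each radar's evidence (assumed conditionally independent).
        for probs in step:
            posterior = [p * q for p, q in zip(posterior, probs)]
        # Renormalize so the posterior sums to one after every time step.
        total = sum(posterior)
        if total == 0.0:
            raise ValueError("all classes have zero posterior mass")
        posterior = [p / total for p in posterior]
    return posterior
```

The posterior from one time step acts as the prior for the next, which is what makes the update recursive.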

Segmentation and Classification of EMG Time-Series During Reach-to-Grasp Motion

Apr 19, 2021

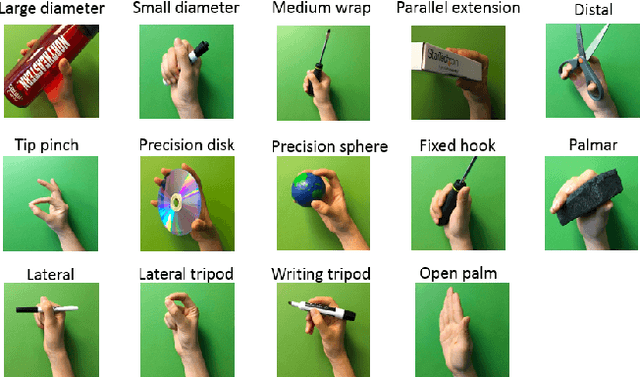

Abstract:The electromyography (EMG) signals have been widely utilized in human-robot interaction for extracting user hand and arm motion instructions. A major challenge of the online interaction with robots is the reliable EMG recognition from real-time data. However, previous studies mainly focused on using steady-state EMG signals with a small number of grasp patterns to implement classification algorithms, which is insufficient to generate robust control regarding the dynamic muscular activity variation in practice. Introducing more EMG variability during training and validation could implement a better dynamic-motion detection, but only limited research focused on such grasp-movement identification, and all of those assessments on the non-static EMG classification require supervised ground-truth labels of the movement status. In this study, we propose a framework for classifying EMG signals generated from continuous grasp movements with variations on dynamic arm/hand postures, using an unsupervised motion status segmentation method. We collected data from large gesture vocabularies with multiple dynamic motion phases to encode the transitions from one intent to another based on common sequences of the grasp movements. Two classifiers were constructed for identifying the motion-phase label and grasp-type label, where the dynamic motion phases were segmented and labeled in an unsupervised manner. The proposed framework was evaluated in real-time with the accuracy variation over time presented, which was shown to be efficient due to the high degree of freedom of the EMG data.

Multimodal Fusion of EMG and Vision for Human Grasp Intent Inference in Prosthetic Hand Control

Apr 08, 2021

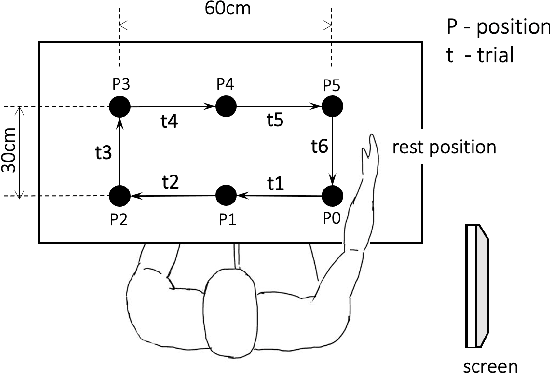

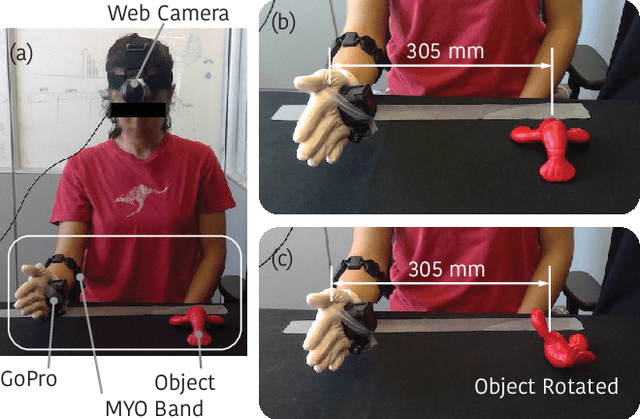

Abstract:For lower arm amputees, robotic prosthetic hands offer the promise to regain the capability to perform fine object manipulation in activities of daily living. Current control methods based on physiological signals such as EEG and EMG are prone to poor inference outcomes due to motion artifacts, variability of skin-electrode junction impedance over time, muscle fatigue, and other factors. Visual evidence is also susceptible to its own artifacts, most often due to object occlusion, lighting changes, variable shapes of objects depending on view-angle, among other factors. Multimodal evidence fusion using physiological and vision sensor measurements is a natural approach due to the complementary strengths of these modalities. In this paper, we present a Bayesian evidence fusion framework for grasp intent inference using eye-view video, gaze, and EMG from the forearm processed by neural network models. We analyze individual and fused performance as a function of time as the hand approaches the object to grasp it. For this purpose, we have also developed novel data processing and augmentation techniques to train neural network components. Our experimental data analyses demonstrate that EMG and visual evidence show complementary strengths, and as a consequence, fusion of multimodal evidence can outperform each individual evidence modality at any given time. Specifically, results indicate that, on average, fusion improves the instantaneous upcoming grasp type classification accuracy while in the reaching phase by 13.66% and 14.8%, relative to EMG and visual evidence individually. An overall fusion accuracy of 95.3% among 13 labels (compared to a chance level of 7.7%) is achieved, and more detailed analyses indicate that the correct grasp is inferred sufficiently early and with high confidence compared to the top contender, in order to allow successful robot actuation to close the loop.

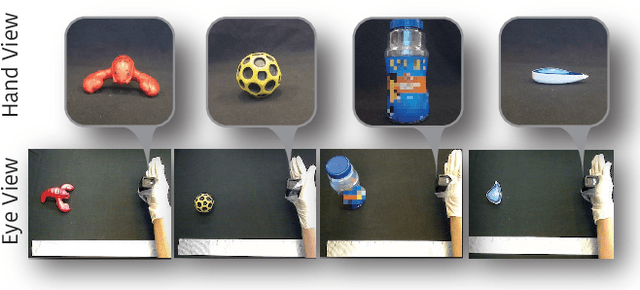

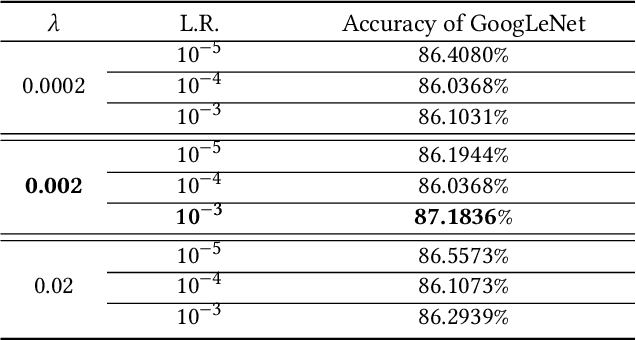

From Hand-Perspective Visual Information to Grasp Type Probabilities: Deep Learning via Ranking Labels

Mar 08, 2021

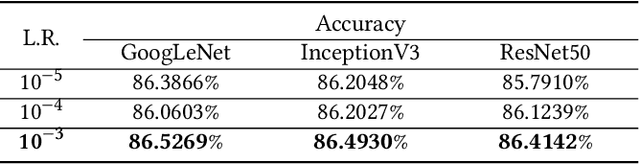

Abstract:Limb deficiency severely affects the daily lives of amputees and drives efforts to provide functional robotic prosthetic hands to compensate for this deprivation. Convolutional neural network-based computer vision control of the prosthetic hand has received increased attention as a method to replace or complement physiological signals due to its reliability by training visual information to predict the hand gesture. Mounting a camera into the palm of a prosthetic hand has proved to be a promising approach to collect visual data. However, the grasp type labelled from the eye and hand perspective may differ as object shapes are not always symmetric. Thus, to represent this difference in a realistic way, we employed a dataset containing synchronous images from eye- and hand-view, where the hand-perspective images are used for training while the eye-view images are only for manual labelling. Electromyogram (EMG) activity and movement kinematics data from the upper arm are also collected for multi-modal information fusion in future work. Moreover, in order to include human-in-the-loop control and combine the computer vision with physiological signal inputs, instead of making absolute positive or negative predictions, we build a novel probabilistic classifier according to the Plackett-Luce model. To predict the probability distribution over grasps, we exploit the statistical model over label rankings to solve the permutation domain problems via a maximum likelihood estimation, utilizing the manually ranked lists of grasps as a new form of label. We indicate that the proposed model is applicable to the most popular and productive convolutional neural network frameworks.
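For readers unfamiliar with the Plackett-Luce model mentioned in the abstract, the following sketch computes the log-likelihood of one ranked list of grasps under the standard Plackett-Luce form, where each position is chosen with probability proportional to exp(score) among the items not yet ranked. The function name and the use of raw scores (for instance, network logits) are assumptions for illustration, not the paper's implementation.

```python
import math
from typing import Sequence


def plackett_luce_log_likelihood(
    scores: Sequence[float], ranking: Sequence[int]
) -> float:
    """Log-probability of `ranking` (best first) under Plackett-Luce.

    scores  -- one real-valued score per grasp type (assumed model output)
    ranking -- grasp indices ordered from most to least preferred
    """
    remaining = list(ranking)
    log_lik = 0.0
    for item in ranking:
        # Log-sum-exp over the items still available, shifted for stability.
        m = max(scores[j] for j in remaining)
        log_norm = m + math.log(sum(math.exp(scores[j] - m) for j in remaining))
        # Probability of picking `item` next among the remaining items.
        log_lik += scores[item] - log_norm
        remaining.remove(item)
    return log_lik
```

Maximum likelihood estimation then amounts to choosing the scores (or the network weights that produce them) that maximize the sum of this quantity over all manually ranked lists.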
