Cagdas D. Onal

Technical Report: A Contact-aware Feedback CPG System for Learning-based Locomotion Control in a Soft Snake Robot

Sep 06, 2023

Abstract: Integrating contact-awareness into a soft snake robot and efficiently controlling its locomotion in response to contact information present significant challenges. This paper aims to solve the contact-aware locomotion problem of a soft snake robot by developing bio-inspired contact-aware locomotion controllers. To provide effective contact information for the controllers, we develop a scale-covered sensor structure mimicking natural snakes' *scale sensilla*. In the design of the control framework, our core contribution is the development of a novel sensory feedback mechanism for the Matsuoka central pattern generator (CPG) network. This mechanism allows the Matsuoka CPG system to work like a "spinal cord" in the overall contact-aware control scheme: it simultaneously takes stimuli, including tonic input signals from the "brain" (a goal-tracking locomotion controller) and sensory feedback signals from the "reflex arc" (the contact-reactive controller), and generates rhythmic signals that actuate the soft snake robot to slither through densely placed obstacles. In the design of the "reflex arc", we develop two types of reactive controllers: 1) a reinforcement learning (RL) sensor regulator that learns to manipulate the sensory feedback inputs of the CPG system, and 2) a local reflexive sensor-CPG network that directly connects sensor readings to the CPG's feedback inputs in a special topology. These two reactive controllers respectively facilitate two different contact-aware locomotion control schemes. The two control schemes are tested and evaluated on the soft snake robot, showing promising performance in contact-aware locomotion tasks. The experimental results also further verify the benefit of the Matsuoka CPG system in bio-inspired robot controller design.
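For readers unfamiliar with the Matsuoka CPG mentioned in the abstract, the following is a minimal sketch of a generic two-neuron Matsuoka oscillator in its standard textbook form, integrated with forward Euler. It is not the network or feedback mechanism from the report: the function name `simulate_matsuoka`, all parameter values, and the simple additive `feedback` term are assumptions chosen only to show how a constant tonic input plus mutual inhibition and adaptation yields a rhythmic output.

```python
def simulate_matsuoka(tonic=1.0, feedback=(0.0, 0.0), steps=4000, dt=0.005,
                      tau_r=0.25, tau_a=0.5, beta=2.5, a=2.5):
    """Euler-integrate a two-neuron Matsuoka oscillator.

    Returns the output y1 - y2 at every step. All defaults are
    illustrative assumptions, not values from the report.
    """
    u = [0.1, 0.0]  # membrane states; asymmetric start leaves the symmetric equilibrium
    v = [0.0, 0.0]  # adaptation (fatigue) states
    out = []
    for _ in range(steps):
        # Firing rates are the rectified membrane states.
        y = [max(0.0, u[0]), max(0.0, u[1])]
        # Each neuron is driven by the tonic input and its own feedback term,
        # and inhibited by its own fatigue and by the other neuron's firing.
        du = [(-u[i] - beta * v[i] - a * y[1 - i] + tonic + feedback[i]) / tau_r
              for i in range(2)]
        dv = [(-v[i] + y[i]) / tau_a for i in range(2)]
        for i in range(2):
            u[i] += dt * du[i]
            v[i] += dt * dv[i]
        out.append(y[0] - y[1])
    return out


if __name__ == "__main__":
    signal = simulate_matsuoka()
    print(min(signal), max(signal))
```

With these assumed parameters the mutual-inhibition weight `a` exceeds `1 + tau_r / tau_a` while staying below `1 + beta`, which is the usual condition for sustained oscillation in this two-neuron form. Passing a nonzero `feedback` tuple biases one neuron relative to the other, which is one simple way to picture sensory input reshaping the rhythm.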

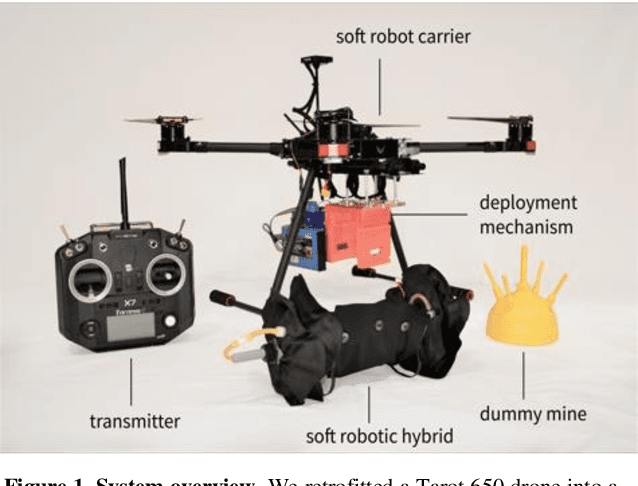

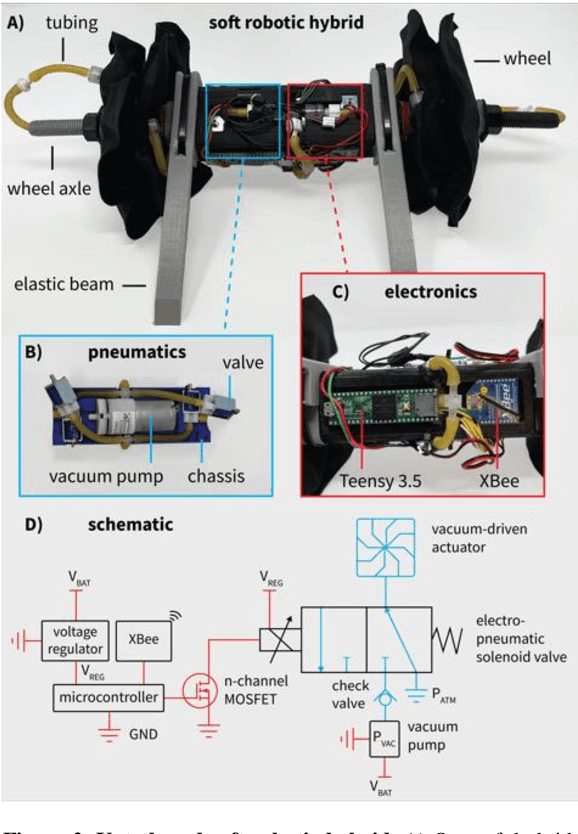

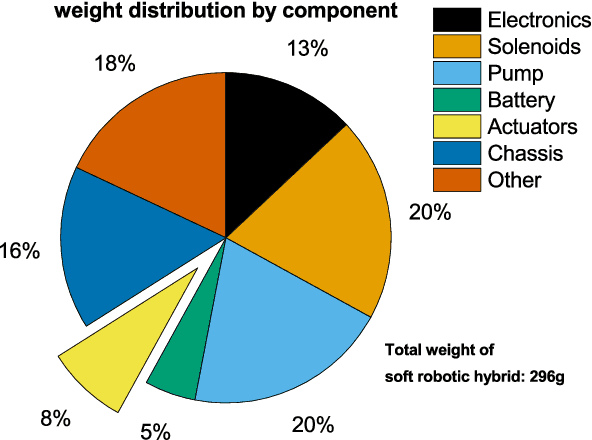

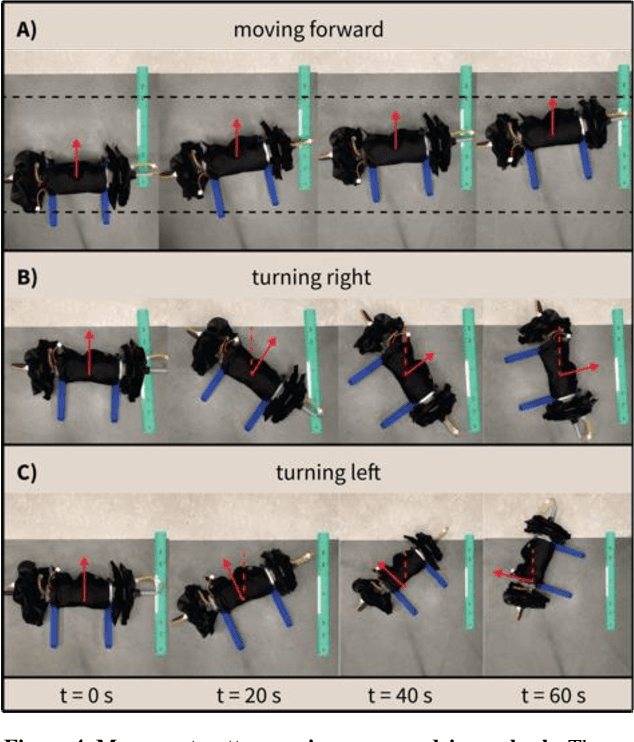

Air-Releasable Soft Robots for Explosive Ordnance Disposal

Feb 07, 2022

Abstract: The demining of landmines using drones is challenging; air-releasable payloads are typically non-intelligent (e.g., water balloons or explosives), and deploying them at even low altitudes (~6 m) is inherently inaccurate due to complex deployment trajectories and the drone pilot's constrained visual awareness. Soft robotics offers a unique approach for aerial demining, namely due to the robust, low-cost, and lightweight designs of soft robots. Instead of non-intelligent payloads, we propose the use of air-releasable soft robots for demining. We developed a full system consisting of an unmanned aerial vehicle retrofitted to a soft robot carrier, including a custom-made deployment mechanism, and an air-releasable, lightweight (296 g), untethered soft hybrid robot with integrated electronics that incorporates a new type of vacuum-based flasher roller actuator system. We demonstrate a deployment cycle in which the drone drops the soft robotic hybrid from an altitude of 4.5 m, after which the robot approaches a dummy landmine. By deploying soft robots at points of interest, we can transition soft robotic technologies from the laboratory to real-world environments.

From Hand-Perspective Visual Information to Grasp Type Probabilities: Deep Learning via Ranking Labels

Mar 08, 2021

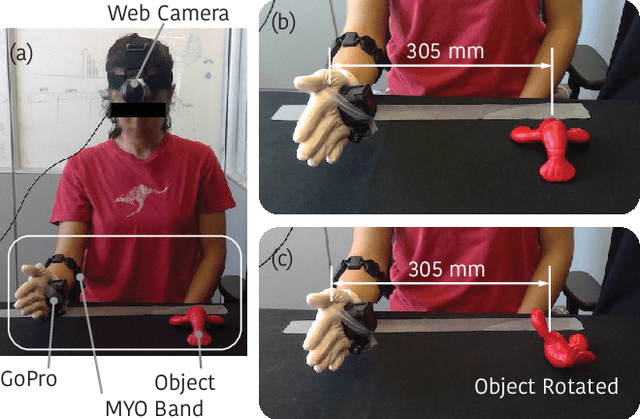

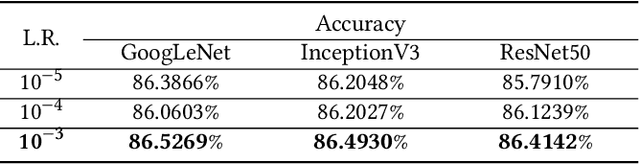

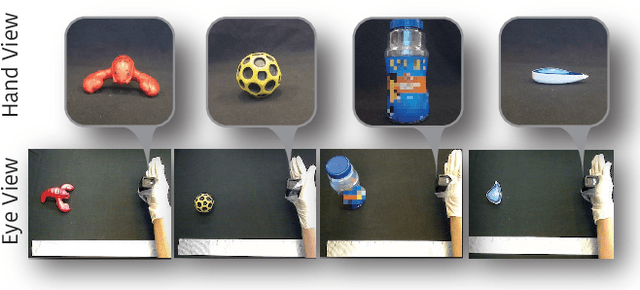

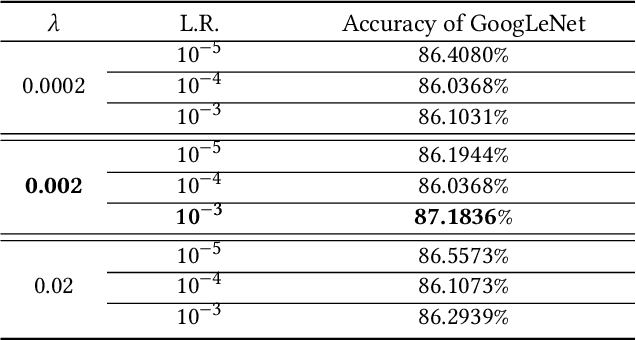

Abstract: Limb deficiency severely affects the daily lives of amputees and drives efforts to provide functional robotic prosthetic hands to compensate for this deprivation. Convolutional neural network-based computer vision control of the prosthetic hand has received increased attention as a method to replace or complement physiological signals, owing to its reliability in predicting the hand gesture from visual information. Mounting a camera in the palm of a prosthetic hand has proven to be a promising approach to collecting visual data. However, the grasp type labelled from the eye perspective and from the hand perspective may differ, as object shapes are not always symmetric. Thus, to represent this difference in a realistic way, we employed a dataset containing synchronous images from the eye and hand views, where the hand-perspective images are used for training while the eye-view images are used only for manual labelling. Electromyogram (EMG) activity and movement kinematics data from the upper arm are also collected for multi-modal information fusion in future work. Moreover, in order to include human-in-the-loop control and combine computer vision with physiological signal inputs, instead of making absolute positive or negative predictions, we build a novel probabilistic classifier according to the Plackett-Luce model. To predict the probability distribution over grasps, we exploit the statistical model over label rankings to solve the permutation domain problems via maximum likelihood estimation, utilizing the manually ranked lists of grasps as a new form of label. We show that the proposed model is applicable to the most popular and productive convolutional neural network frameworks.
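As a rough illustration of the Plackett-Luce model named in the abstract, the sketch below computes the log-probability of one ranked list of grasps given a vector of per-grasp scores, along with the implied first-choice distribution. The function names `plackett_luce_log_prob` and `top_choice_probs` and the example scores are hypothetical; in a setting like the paper's the scores would presumably come from a network's output layer, and training would maximize this log-probability over the manually ranked labels, but none of that pipeline is reproduced here.

```python
import math
from itertools import permutations


def plackett_luce_log_prob(scores, ranking):
    """Log-probability of `ranking` (item indices, best first) under Plackett-Luce.

    At each position the chosen item competes, via a softmax over scores,
    against every item that has not been ranked yet.
    """
    log_prob = 0.0
    for k, item in enumerate(ranking):
        tail = [scores[j] for j in ranking[k:]]
        m = max(tail)  # subtract the max for numerical stability
        log_norm = m + math.log(sum(math.exp(t - m) for t in tail))
        log_prob += scores[item] - log_norm
    return log_prob


def top_choice_probs(scores):
    """Probability that each item is ranked first (a softmax over the scores)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


if __name__ == "__main__":
    scores = [1.0, -0.5, 2.0]  # hypothetical scores for three grasp types
    # The probabilities of all 3! rankings should sum to 1.
    total = sum(math.exp(plackett_luce_log_prob(scores, list(p)))
                for p in permutations(range(3)))
    print(total, top_choice_probs(scores))
```

The negative of `plackett_luce_log_prob`, summed over a labelled dataset, is the quantity a maximum likelihood fit would minimize.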
