Cagdas Onal

Reinforcement Learning of a CPG-regulated Locomotion Controller for a Soft Snake Robot

Jul 11, 2022

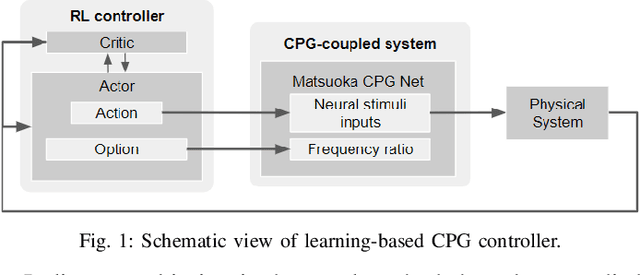

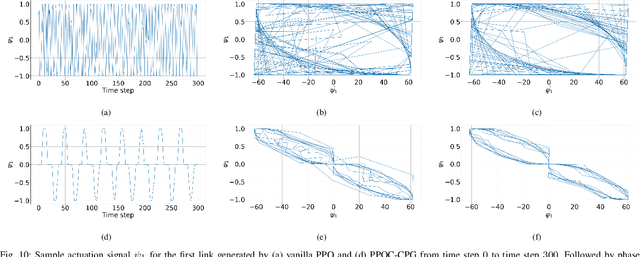

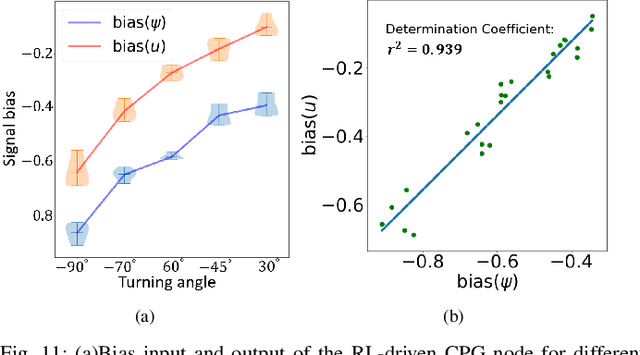

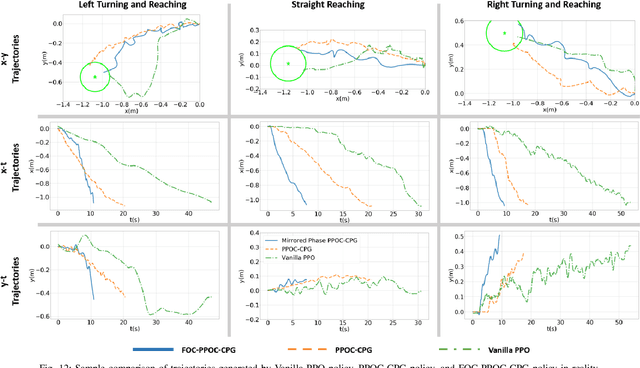

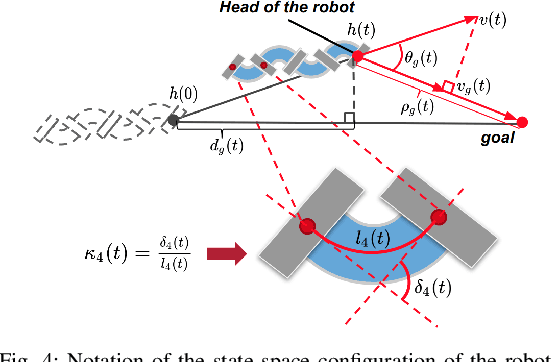

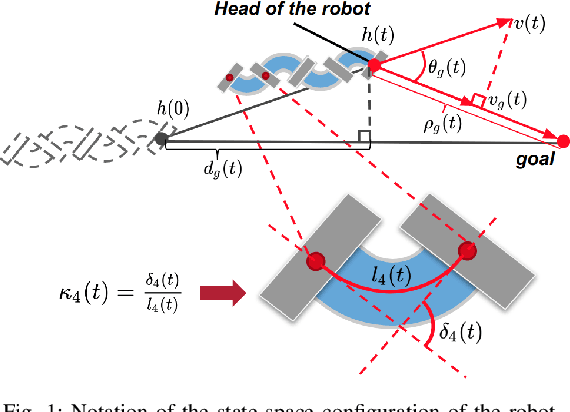

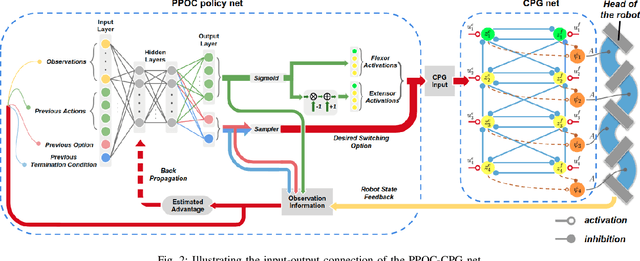

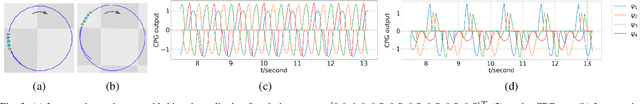

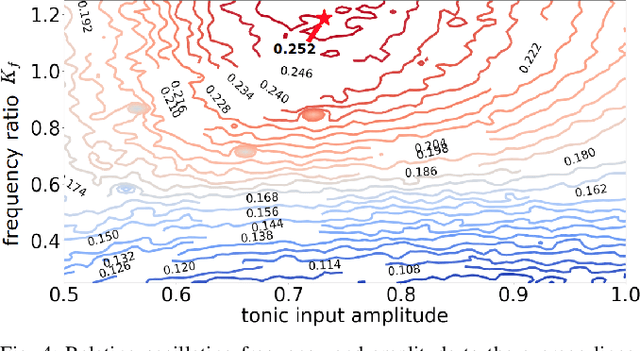

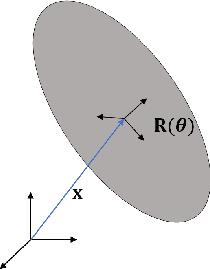

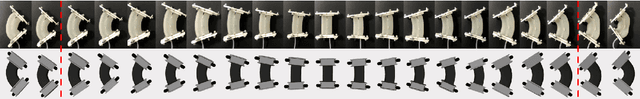

Abstract: In this work, we present a learning-based goal-tracking control method for soft robot snakes. Inspired by biological snakes, our controller is composed of two key modules: a reinforcement learning (RL) module that learns goal-tracking behaviors under the stochastic dynamics of the soft snake robot, and a central pattern generator (CPG) system built from Matsuoka oscillators that generates stable and diverse locomotion patterns. Within this framework, we comprehensively discuss the maneuverability of the soft snake robot, including steering and speed control during serpentine locomotion, and show that this maneuverability can be mapped to control of the oscillation patterns of the CPG system. Through a theoretical analysis of the oscillation properties of the Matsuoka CPG system, we show that the key to realizing free mobility of our soft snake robot is to properly constrain and control certain coefficients of the Matsuoka CPG system, including the tonic inputs and the frequency ratio. Based on this analysis, we systematically formulate the controllable coefficients of the CPG system for the RL agent to operate. Through experimental validation, we show that the control policy learned in simulation can be applied directly to control our real snake robot in goal-tracking tasks, despite the physical gap between simulation and the real world. The experimental results also show that our method's adaptability and robustness to the sim-to-real transition are significantly improved compared to our previous approach and a baseline RL method (PPO).
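
To make the role of the tonic inputs and the frequency coefficient more concrete, here is a minimal Python sketch of a single two-neuron Matsuoka oscillator in its standard textbook form. It is not the authors' CPG network or parameterization: the parameter values, the forward-Euler integration, and `k_f` (a hypothetical time-scale factor that divides both time constants) are illustrative assumptions. Because the equations are positively homogeneous in the states and tonic inputs, scaling both tonic inputs by the same factor scales the limit-cycle amplitude by that factor. Dividing both time constants by `k_f` speeds the oscillation up by `k_f` while leaving Matsuoka's oscillation condition, which depends only on their ratio, unchanged.

```python
import numpy as np


def simulate_matsuoka(u1, u2, tau_r=0.1, tau_a=0.2, b=2.5, w=2.0,
                      k_f=1.0, dt=1e-3, steps=20000):
    """Forward-Euler simulation of a two-neuron Matsuoka oscillator.

    u1, u2 : tonic inputs to the two mutually inhibiting neurons
    tau_r  : rise time constant;  tau_a : adaptation time constant
    b      : adaptation (self-inhibition) gain;  w : mutual inhibition weight
    k_f    : hypothetical time-scale factor dividing both time constants
    Returns the oscillator output y1 - y2 at every time step.
    """
    tr, ta = tau_r / k_f, tau_a / k_f
    # Matsuoka's condition for sustained oscillation: 1 + tau_r/tau_a < w < 1 + b.
    if not (1 + tr / ta < w < 1 + b):
        raise ValueError("parameters are outside the oscillatory regime")

    u = np.array([u1, u2], dtype=float)
    x = np.array([0.1, 0.0])  # inner states; small asymmetry breaks the symmetric equilibrium
    v = np.zeros(2)           # adaptation (fatigue) states
    out = np.empty(steps)
    for k in range(steps):
        y = np.maximum(x, 0.0)  # neuron outputs are the rectified inner states
        # y[::-1] swaps the neurons, so each one is inhibited by the other's output.
        dx = (-x - w * y[::-1] - b * v + u) / tr
        dv = (-v + y) / ta
        x = x + dt * dx
        v = v + dt * dv
        out[k] = y[0] - y[1]
    return out
```

For example, after the initial transient, `simulate_matsuoka(2.0, 2.0)` produces roughly twice the peak-to-peak output of `simulate_matsuoka(1.0, 1.0)`, and passing `k_f=2.0` roughly doubles the number of oscillation cycles over the same time window.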

Learning Contact-aware CPG-based Locomotion in a Soft Snake Robot

May 10, 2021

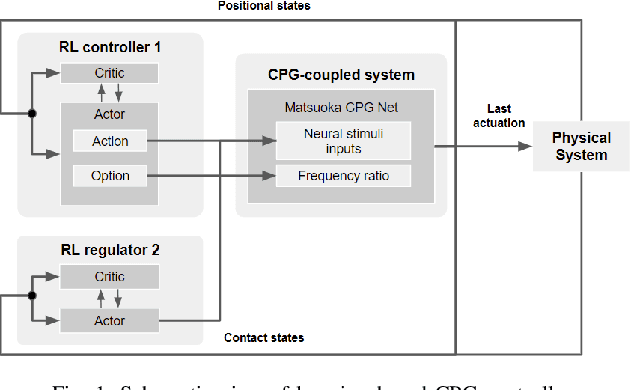

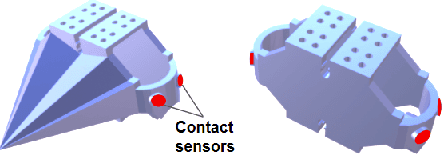

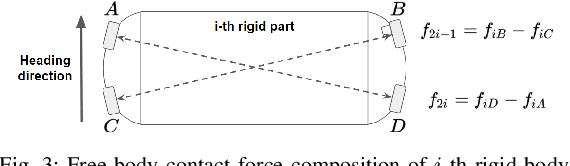

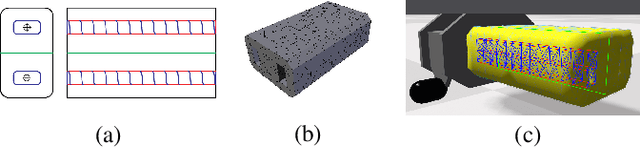

Abstract: In this paper, we present a model-free, learning-based control scheme that improves the contact-aware locomotion performance of a soft snake robot in cluttered environments. The control scheme includes two cooperative controllers: a bio-inspired controller (C1) that controls both the steering and velocity of the soft snake robot, and an event-triggered regulator (R2) that controls the steering of the snake in anticipation of, and during, obstacle contacts. The outputs of the two controllers are combined to form the input to a Matsuoka CPG network, which generates smooth, rhythmic actuation inputs for the soft snake. To enable stable and efficient learning with two controllers, we use a game-theoretic process, fictitious play, to train C1 and R2 with a shared potential-field-based reward function for goal-tracking tasks. The proposed approach is evaluated in simulation and shows a significant improvement in locomotion performance in an environment with obstacles compared to two baseline controllers.
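
Fictitious play is a classical procedure in which each player repeatedly best-responds to the empirical frequency of the other player's past actions. Because C1 and R2 share one reward, their interaction resembles an identical-interest game. The sketch below illustrates only that idea on a toy two-player matrix game with a shared payoff; it is not the authors' training loop, which trains neural-network policies with RL rather than choosing discrete actions from a payoff table. The function name and the pseudo-count initialization are hypothetical choices.

```python
import numpy as np


def fictitious_play(payoff, iters=500):
    """Simultaneous fictitious play in a two-player identical-interest game.

    payoff[i, j] is the shared payoff when the row player picks action i and
    the column player picks action j. Returns both players' empirical
    action frequencies after `iters` rounds.
    """
    n, m = payoff.shape
    counts_row = np.ones(n)  # pseudo-counts: start from uniform beliefs
    counts_col = np.ones(m)
    for _ in range(iters):
        belief_col = counts_col / counts_col.sum()  # row player's belief about column player
        belief_row = counts_row / counts_row.sum()  # column player's belief about row player
        a = np.argmax(payoff @ belief_col)  # row best response to empirical column mix
        b = np.argmax(belief_row @ payoff)  # column best response to empirical row mix
        counts_row[a] += 1
        counts_col[b] += 1
    return counts_row / counts_row.sum(), counts_col / counts_col.sum()


# Usage: a coordination game with a shared payoff.
row_freq, col_freq = fictitious_play(np.array([[4.0, 0.0], [0.0, 2.0]]))
```

With this payoff matrix, both players' empirical strategies concentrate on the first action; with different initial beliefs, fictitious play can instead settle on the other pure equilibrium, which is one reason initialization matters in such coupled training.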

Multimodal Fusion of EMG and Vision for Human Grasp Intent Inference in Prosthetic Hand Control

Apr 08, 2021

Abstract: For lower-arm amputees, robotic prosthetic hands offer the promise of regaining the capability to perform fine object manipulation in activities of daily living. Current control methods based on physiological signals such as EEG and EMG are prone to poor inference outcomes due to motion artifacts, variability of skin-electrode junction impedance over time, muscle fatigue, and other factors. Visual evidence is susceptible to its own artifacts, most often due to object occlusion, lighting changes, and view-angle-dependent object shape, among other factors. Multimodal evidence fusion using physiological and vision sensor measurements is a natural approach given the complementary strengths of these modalities. In this paper, we present a Bayesian evidence fusion framework for grasp intent inference using eye-view video, gaze, and forearm EMG, processed by neural network models. We analyze individual and fused performance as a function of time as the hand approaches the object to grasp it. For this purpose, we have also developed novel data processing and augmentation techniques to train the neural network components. Our experimental data analyses demonstrate that EMG and visual evidence have complementary strengths, and as a consequence, fusion of multimodal evidence can outperform each individual evidence modality at any given time. Specifically, results indicate that, on average, fusion improves the instantaneous classification accuracy of the upcoming grasp type during the reaching phase by 13.66% and 14.8% relative to EMG and visual evidence alone, respectively. An overall fusion accuracy of 95.3% among 13 labels (compared to a chance level of 7.7%) is achieved, and more detailed analysis indicates that the correct grasp is inferred sufficiently early, and with sufficiently high confidence relative to the top contender, to allow successful robot actuation to close the loop.
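
A common way to realize Bayesian evidence fusion is to assume the modalities are conditionally independent given the class, so that per-modality posteriors combine as P(c | e_EMG, e_vis) ∝ P(c | e_EMG) P(c | e_vis) / P(c). The sketch below implements that rule in log space for two modalities. The conditional-independence assumption, the function name, and the example probabilities are illustrative and not necessarily the exact fusion model of the paper, which combines eye-view video, gaze, and EMG. It also assumes strictly positive probabilities, as produced by softmax classifiers.

```python
import numpy as np


def fuse_posteriors(p_emg, p_vis, prior):
    """Fuse two per-class posteriors, assuming the EMG and vision evidence are
    conditionally independent given the grasp class:
        P(c | e_emg, e_vis) ∝ P(c | e_emg) * P(c | e_vis) / P(c)
    All probabilities must be strictly positive.
    """
    p_emg = np.asarray(p_emg, dtype=float)
    p_vis = np.asarray(p_vis, dtype=float)
    prior = np.asarray(prior, dtype=float)
    # Log space avoids underflow when there are many classes or tiny probabilities.
    log_post = np.log(p_emg) + np.log(p_vis) - np.log(prior)
    log_post -= log_post.max()  # shift for numerical stability before exponentiating
    post = np.exp(log_post)
    return post / post.sum()


prior = np.full(3, 1 / 3)  # uniform prior over three hypothetical grasp classes
fused = fuse_posteriors([0.6, 0.3, 0.1], [0.5, 0.1, 0.4], prior)
print(np.round(fused, 3))  # ≈ [0.811 0.081 0.108]
```

With a uniform prior, the division by P(c) is a constant that drops out after normalization, so the rule reduces to a normalized product of the two posteriors; the fused confidence in the first class exceeds that of either modality alone because both modalities favor it.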

Learning to Locomote with Deep Neural-Network and CPG-based Control in a Soft Snake Robot

Jan 13, 2020

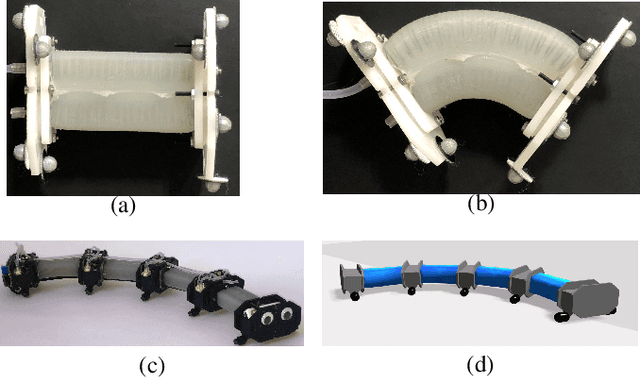

Abstract: In this paper, we present a new locomotion control method for soft robot snakes. Inspired by biological snakes, our control architecture is composed of two key modules: a deep reinforcement learning (RL) module for achieving adaptive goal-reaching behaviors with changing goals, and a central pattern generator (CPG) system with Matsuoka oscillators for generating stable and diverse behavior patterns. The two modules are interconnected into a closed-loop system: the RL module, acting as the "brain", regulates the input of the CPG system based on state feedback from the robot. The output of the CPG system is then translated into pressure inputs to the pneumatic actuators of a soft snake robot. Since the oscillation frequency and wave amplitude of the Matsuoka oscillator can be independently controlled on different time scales, we adapt the option-critic framework to improve learning performance, measured by optimality and data efficiency. We verify the performance of the proposed control method in experiments with both simulated and real snake robots.

A Validated Physical Model For Real-Time Simulation of Soft Robotic Snakes

Apr 05, 2019

Abstract: In this work, we present a framework that can accurately represent soft robotic actuators in a multiphysics environment in real time. We propose a constraint-based dynamics model of a one-dimensional (1D) pneumatic soft actuator that accounts for internal pressure forces, as well as actuator latency and damping during inflation and deflation, and we demonstrate its accuracy on a full soft robotic snake composed of multiple 1D actuators. We verify our model's accuracy in static deformation experiments and in open-loop dynamic locomotion control experiments. To achieve real-time performance, we leverage the parallel computation power of GPUs, enabling interactive control and feedback.
