Reinforcement Learning of a CPG-regulated Locomotion Controller for a Soft Snake Robot


Jul 11, 2022

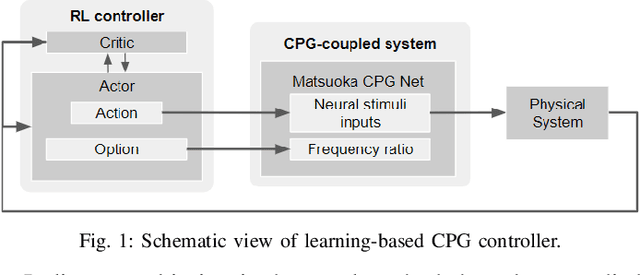

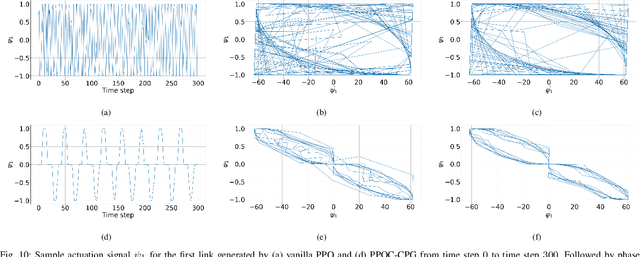

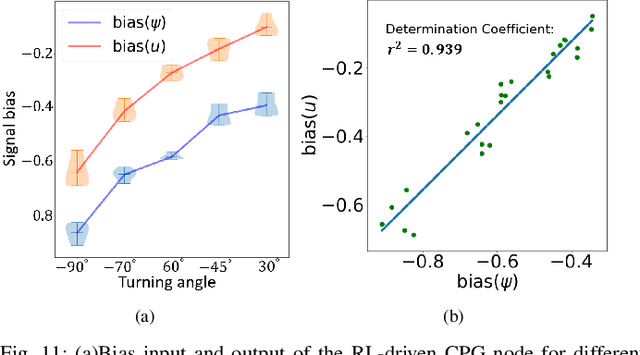

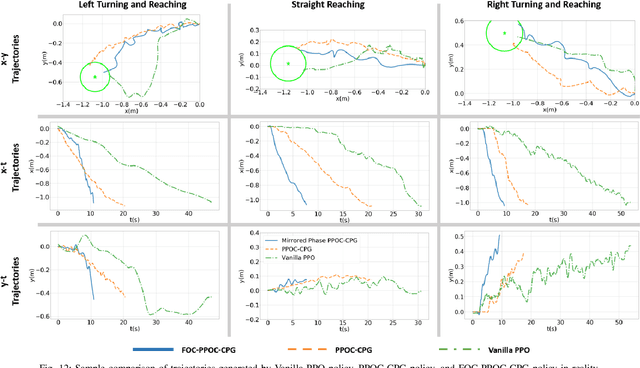

In this work, we present a learning-based goal-tracking control method for soft snake robots. Inspired by biological snakes, our controller is composed of two key modules: a reinforcement learning (RL) module that learns goal-tracking behaviors under the stochastic dynamics of the soft snake robot, and a central pattern generator (CPG) system built from Matsuoka oscillators that generates stable and diverse locomotion patterns. Based on the proposed framework, we comprehensively discuss the maneuverability of the soft snake robot, including steering and speed control during serpentine locomotion. This maneuverability can be mapped to control of the oscillation patterns of the CPG system. Through a theoretical analysis of the oscillation properties of the Matsuoka CPG system, this work shows that the key to achieving free mobility of our soft snake robot is to properly constrain and control certain coefficients of the Matsuoka CPG system, including the tonic inputs and the frequency ratio. Based on this analysis, we systematically formulate the controllable coefficients of the CPG system for the RL agent to operate. Through experimental validation, we show that a control policy learned in the simulated environment can be applied directly to our real snake robot to perform goal-tracking tasks, despite the physical gap between simulation and the real world. The experimental results also show that our method's adaptability and robustness to the sim-to-real transition are significantly improved compared with our previous approach and a baseline RL method (proximal policy optimization, PPO).
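The abstract ties speed and steering to a few coefficients of the Matsuoka CPG, namely the tonic inputs and the frequency ratio. As a rough illustration only, the sketch below simulates a single two-neuron Matsuoka oscillator (an extensor/flexor half-center) with tonic inputs `u_e`, `u_f` and a frequency ratio `k_f`. It assumes that `k_f` scales both time constants. The names `MatsuokaParams`, `simulate`, and `count_sign_changes`, and every numeric value, are hypothetical choices for this example, not the paper's implementation. The `oscillation_condition` check is the commonly cited condition from Matsuoka's 1985 analysis, 1 + tau_r/tau_a < a < 1 + b.

```python
"""Minimal sketch of a two-neuron Matsuoka oscillator (half-center CPG).

Assumptions (not taken from the paper): the frequency ratio k_f is modeled as a
common scale on both time constants, and all numeric values are illustrative.
"""
from dataclasses import dataclass
from typing import List


@dataclass
class MatsuokaParams:
    tau_r: float = 0.25  # rise (membrane) time constant, hypothetical value
    tau_a: float = 0.5   # adaptation time constant, hypothetical value
    a: float = 2.0       # mutual inhibition weight between the two neurons
    b: float = 2.5       # self-adaptation (fatigue) weight


def oscillation_condition(p: MatsuokaParams) -> bool:
    """Matsuoka's condition for sustained oscillation: 1 + tau_r/tau_a < a < 1 + b."""
    return 1.0 + p.tau_r / p.tau_a < p.a < 1.0 + p.b


def simulate(p: MatsuokaParams, u_e: float, u_f: float, k_f: float,
             t_end: float = 30.0, dt: float = 0.005) -> List[float]:
    """Forward-Euler integration; returns the output psi = y_e - y_f per step.

    u_e, u_f: tonic inputs to the extensor and flexor neurons.
    k_f: frequency ratio; scaling both time constants by k_f rescales time,
         so a smaller k_f gives a faster oscillation of the same shape.
    """
    x_e, x_f = 0.5, 0.0  # asymmetric start breaks the symmetric equilibrium
    v_e, v_f = 0.0, 0.0  # adaptation states
    tr, ta = k_f * p.tau_r, k_f * p.tau_a
    out: List[float] = []
    for _ in range(int(t_end / dt)):
        y_e, y_f = max(0.0, x_e), max(0.0, x_f)  # rectified firing rates
        dx_e = (-x_e - p.a * y_f - p.b * v_e + u_e) / tr
        dx_f = (-x_f - p.a * y_e - p.b * v_f + u_f) / tr
        dv_e = (-v_e + y_e) / ta
        dv_f = (-v_f + y_f) / ta
        x_e += dt * dx_e
        x_f += dt * dx_f
        v_e += dt * dv_e
        v_f += dt * dv_f
        out.append(max(0.0, x_e) - max(0.0, x_f))
    return out


def count_sign_changes(signal: List[float]) -> int:
    """Count sign changes of a signal, skipping samples that are exactly zero."""
    changes, last = 0, 0.0
    for s in signal:
        if s != 0.0:
            if last != 0.0 and (s > 0) != (last > 0):
                changes += 1
            last = s
    return changes


if __name__ == "__main__":
    p = MatsuokaParams()
    print("oscillation condition holds:", oscillation_condition(p))
    for k_f in (1.0, 0.5):
        psi = simulate(p, u_e=1.0, u_f=1.0, k_f=k_f)
        print(f"k_f={k_f}: sign changes={count_sign_changes(psi)}, "
              f"peak |psi|={max(abs(s) for s in psi):.3f}")
```

Because `k_f` multiplies both time constants, their ratio, and therefore the oscillation condition, is unchanged. Only the time scale changes, so halving `k_f` should roughly double the number of sign changes of psi over the same window. With these initial states, |psi| also stays at or below the tonic input of 1.0. In an RL setup along the lines the abstract describes, a policy might output `u_e`, `u_f`, and `k_f` within bounds that keep this condition satisfied. That interface is hypothetical here.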
