Renato Gasoto

Isaac Lab: A GPU-Accelerated Simulation Framework for Multi-Modal Robot Learning

Nov 06, 2025

Abstract: We present Isaac Lab, the natural successor to Isaac Gym, which extends the paradigm of GPU-native robotics simulation into the era of large-scale multi-modal learning. Isaac Lab combines high-fidelity GPU parallel physics, photorealistic rendering, and a modular, composable architecture for designing environments and training robot policies. Beyond physics and rendering, the framework integrates actuator models, multi-frequency sensor simulation, data collection pipelines, and domain randomization tools, unifying best practices for reinforcement and imitation learning at scale within a single extensible platform. We highlight its application to a diverse set of challenges, including whole-body control, cross-embodiment mobility, contact-rich and dexterous manipulation, and the integration of human demonstrations for skill acquisition. Finally, we discuss upcoming integration with the differentiable, GPU-accelerated Newton physics engine, which promises new opportunities for scalable, data-efficient, and gradient-based approaches to robot learning. We believe Isaac Lab's combination of advanced simulation capabilities, rich sensing, and data-center scale execution will help unlock the next generation of breakthroughs in robotics research.

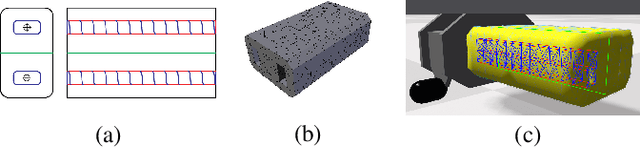

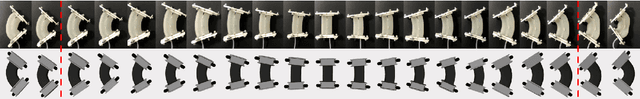

Learning to Locomote with Deep Neural-Network and CPG-based Control in a Soft Snake Robot

Jan 13, 2020

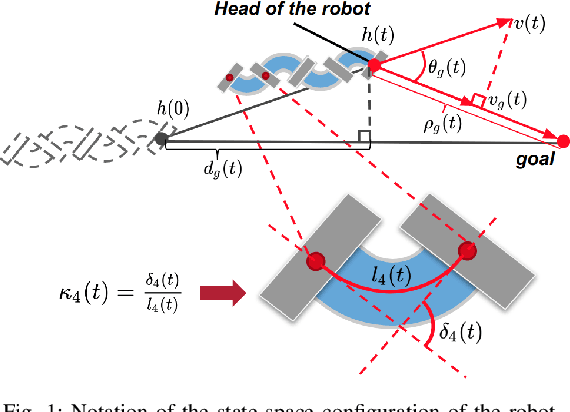

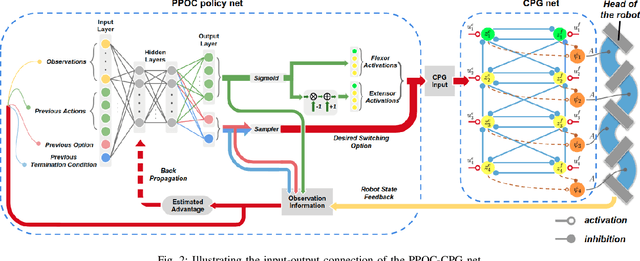

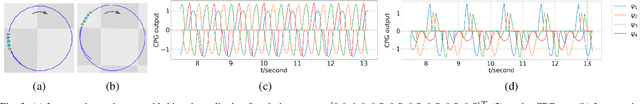

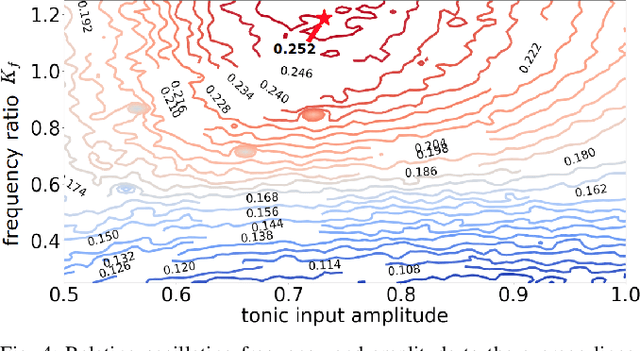

Abstract: In this paper, we present a new locomotion control method for soft robot snakes. Inspired by biological snakes, our control architecture is composed of two key modules: a deep reinforcement learning (RL) module for achieving adaptive goal-reaching behaviors with changing goals, and a central pattern generator (CPG) system with Matsuoka oscillators for generating stable and diverse behavior patterns. The two modules are interconnected into a closed-loop system: the RL module, acting as the "brain", regulates the input of the CPG system based on state feedback from the robot. The output of the CPG system is then translated into pressure inputs to the pneumatic actuators of a soft snake robot. Since the oscillation frequency and wave amplitude of the Matsuoka oscillator can be independently controlled under different time scales, we adapt the option-critic framework to improve the learning performance measured by optimality and data efficiency. We verify the performance of the proposed control method in experiments with both simulated and real snake robots.
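To make the CPG component concrete, here is a minimal sketch of a single two-neuron Matsuoka oscillator in its standard textbook form, integrated with forward Euler. The abstract does not give the paper's exact formulation, so the function name `simulate_matsuoka`, the parameter values, and the constant tonic input `u` are illustrative assumptions; in the described architecture, the RL module would presumably supply the input instead of a constant.

```python
def simulate_matsuoka(u=1.0, tau_r=0.5, tau_a=1.0, a=2.0, b=2.5,
                      dt=0.01, steps=5000):
    """Simulate a standard two-neuron Matsuoka oscillator (forward Euler).

    All parameter values are assumptions chosen to satisfy the usual
    oscillation conditions 1 + tau_r / tau_a < a < 1 + b; they are not
    taken from the paper.
    """
    # Membrane states (x) and adaptation states (v) of the two neurons.
    # A small asymmetry in x1 breaks the symmetric equilibrium.
    x1, x2 = 0.1, 0.0
    v1, v2 = 0.0, 0.0
    outputs = []
    for _ in range(steps):
        # Rectified firing rates.
        y1, y2 = max(0.0, x1), max(0.0, x2)
        # Mutual inhibition (weight a), self-adaptation (gain b), tonic input u.
        dx1 = (-x1 - a * y2 - b * v1 + u) / tau_r
        dx2 = (-x2 - a * y1 - b * v2 + u) / tau_r
        dv1 = (-v1 + y1) / tau_a
        dv2 = (-v2 + y2) / tau_a
        x1 += dt * dx1
        x2 += dt * dx2
        v1 += dt * dv1
        v2 += dt * dv2
        # The oscillator output is the difference of the two firing rates.
        outputs.append(y1 - y2)
    return outputs


if __name__ == "__main__":
    signal = simulate_matsuoka()
    tail = signal[len(signal) // 2:]
    print("output range after transient:", min(tail), max(tail))
```

In a setup like the one the abstract describes, an output signal of this kind would be mapped to pressure commands for one actuator, with several coupled oscillators producing the travelling wave along the body; that mapping and coupling are not shown here.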

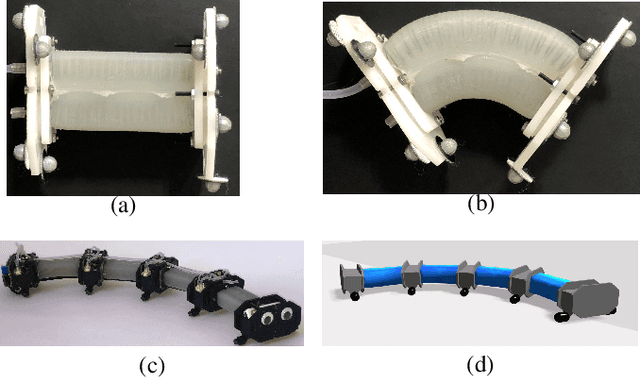

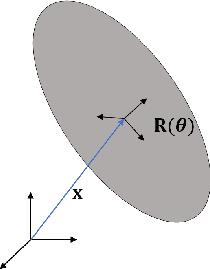

A Validated Physical Model For Real-Time Simulation of Soft Robotic Snakes

Apr 05, 2019

Abstract: In this work, we present a framework capable of accurately representing soft robotic actuators in a multiphysics environment in real time. We propose a constraint-based dynamics model of a 1-dimensional pneumatic soft actuator that accounts for internal pressure forces, as well as the effects of actuator latency and damping under inflation and deflation, and demonstrate its accuracy on a full soft robotic snake composed of multiple 1D actuators. We verify our model's accuracy in static deformation and dynamic locomotion open-loop control experiments. To achieve real-time performance, we leverage the parallel computation power of GPUs to allow interactive control and feedback.
