Learning to Locomote with Deep Neural-Network and CPG-based Control in a Soft Snake Robot


Jan 13, 2020

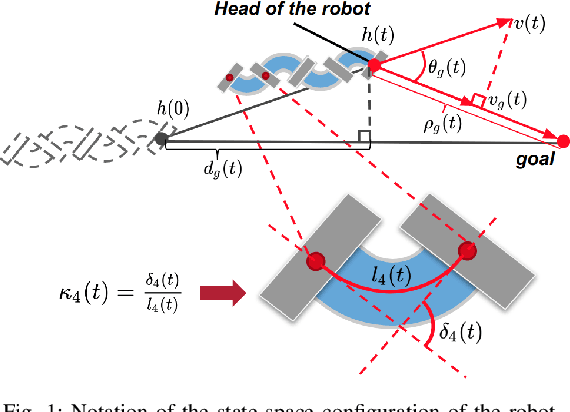

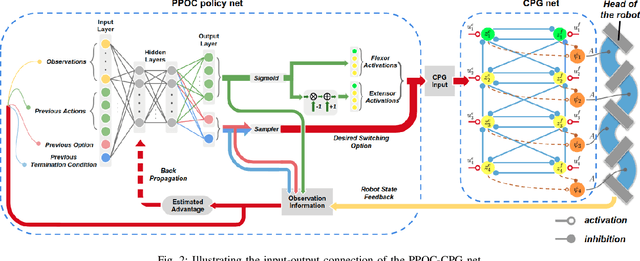

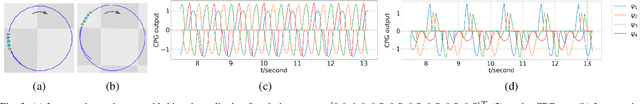

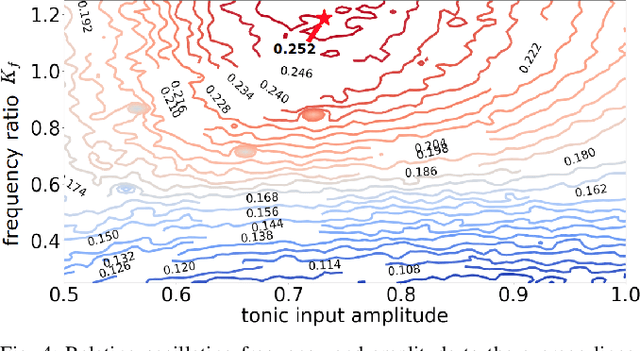

In this paper, we present a new locomotion control method for soft snake robots. Inspired by biological snakes, our control architecture is composed of two key modules: a deep reinforcement learning (RL) module for achieving adaptive goal-reaching behaviors with changing goals, and a central pattern generator (CPG) system with Matsuoka oscillators for generating stable and diverse behavior patterns. The two modules are interconnected into a closed-loop system: the RL module, acting as the "brain", regulates the input of the CPG system based on state feedback from the robot. The output of the CPG system is then translated into pressure inputs to the pneumatic actuators of a soft snake robot. Since the oscillation frequency and wave amplitude of the Matsuoka oscillator can be controlled independently and on different time scales, we adapt the option-critic framework to improve learning performance, measured by optimality and data efficiency. We verify the performance of the proposed control method in experiments with both simulated and real snake robots.
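To illustrate why a Matsuoka oscillator lets frequency and amplitude be set separately, here is a minimal sketch of the textbook two-neuron Matsuoka oscillator (mutual inhibition plus self-adaptation), integrated with forward Euler. This is not the authors' implementation: the function name `matsuoka` and all parameter values (`tau_r`, `tau_a`, `beta`, `w`, `dt`) are hypothetical choices that satisfy the standard oscillation condition 1 + tau_r/tau_a < w < 1 + beta. In this model the tonic input scales the output amplitude, while scaling both time constants together scales the period.

```python
import numpy as np


def matsuoka(tonic, tau_r=0.25, tau_a=0.5, beta=2.5, w=2.5, dt=0.005, steps=10000):
    """Simulate a two-neuron Matsuoka oscillator with forward Euler.

    Hypothetical parameters; returns the oscillator output y1 - y2 per step.
    tonic: constant excitatory input (sets amplitude; must be > 0).
    tau_r, tau_a: rise and adaptation time constants (set frequency).
    beta: self-inhibition (adaptation) gain; w: mutual-inhibition weight.
    """
    x = np.array([0.1, 0.0])  # membrane states; asymmetric start breaks symmetry
    v = np.zeros(2)           # adaptation (fatigue) states
    out = np.empty(steps)
    for k in range(steps):
        y = np.maximum(0.0, x)        # rectified firing rates
        inhib = w * y[::-1]           # each neuron is inhibited by the other
        dx = (-x - beta * v - inhib + tonic) / tau_r
        dv = (-v + y) / tau_a
        x = x + dt * dx
        v = v + dt * dv
        out[k] = y[0] - y[1]          # antagonistic output, e.g. left vs. right
    return out


def tail_stats(out):
    """Peak amplitude and zero-crossing count over the second half (after transients)."""
    tail = out[len(out) // 2:]
    signs = np.sign(tail)
    signs = signs[signs != 0]         # ignore samples where both neurons are silent
    crossings = int(np.sum(signs[1:] != signs[:-1]))
    return float(np.max(np.abs(tail))), crossings


amp1, n1 = tail_stats(matsuoka(1.0))
amp2, _ = tail_stats(matsuoka(2.0))                       # double the tonic input
_, n_slow = tail_stats(matsuoka(1.0, tau_r=0.5, tau_a=1.0))  # double both time constants
print(amp2 / amp1, n1, n_slow)
```

Because the dynamics are piecewise linear and positively homogeneous in the states and the tonic input, doubling `tonic` roughly doubles the steady-state amplitude, while doubling both time constants roughly halves the oscillation frequency without changing the amplitude. How the paper maps the CPG output to chamber pressures is not specified here, so any such mapping added to this sketch would be an assumption.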
