Grey Nearing

Google Research, Tel-Aviv, Israel

A Physically Driven Long Short Term Memory Model for Estimating Snow Water Equivalent over the Continental United States

Apr 28, 2025

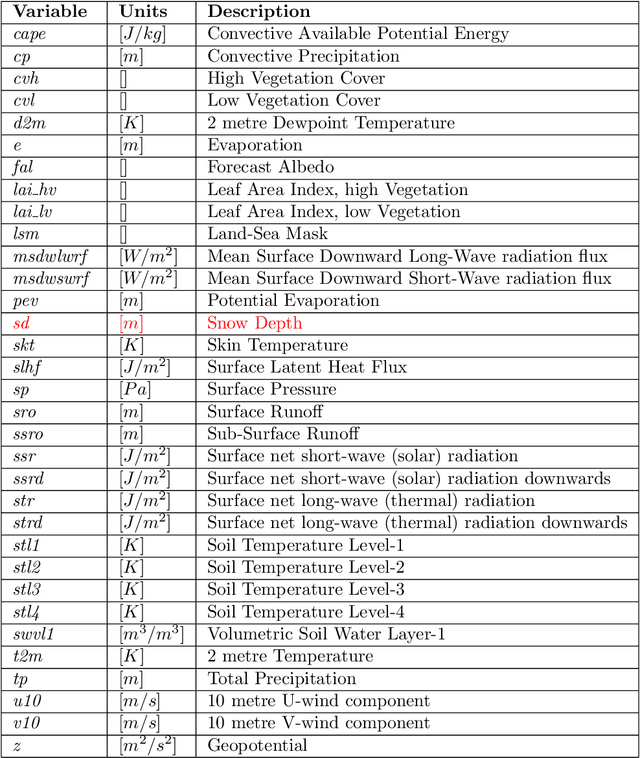

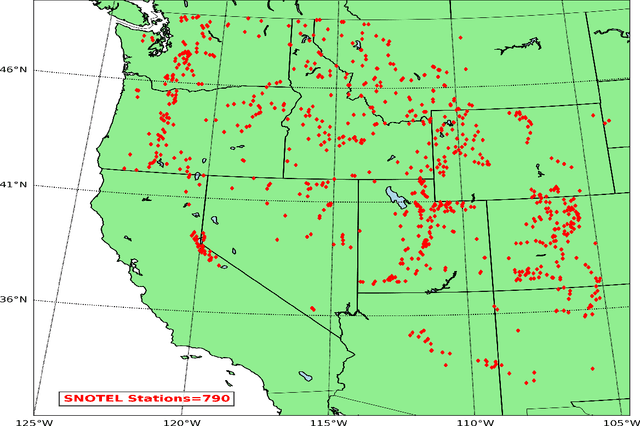

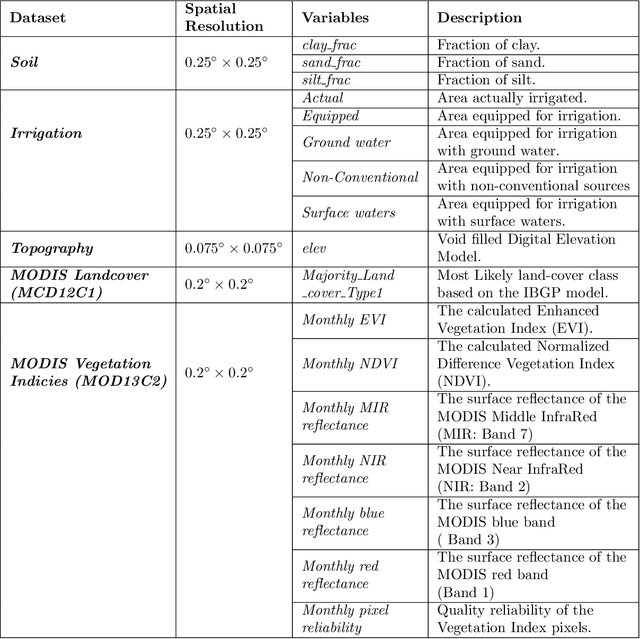

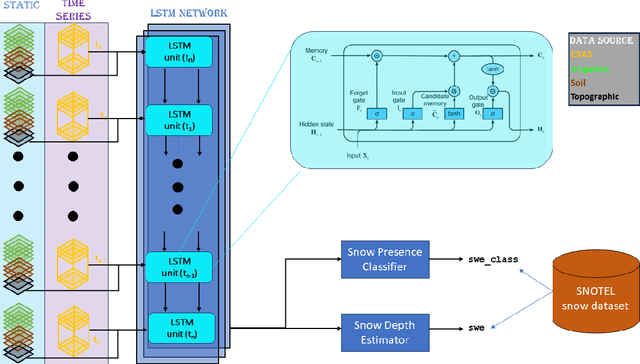

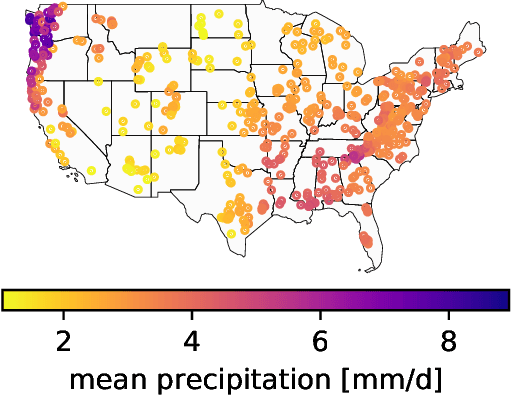

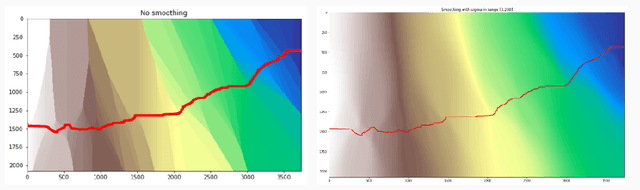

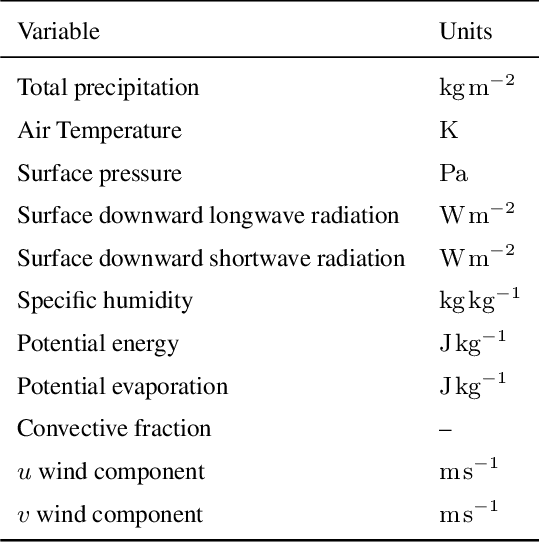

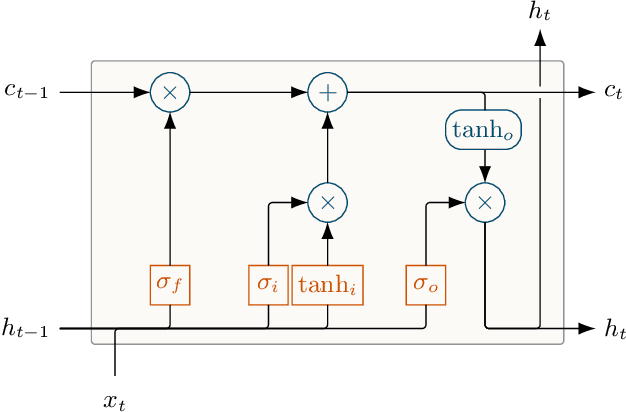

Abstract: Snow is an essential input for various land surface models. Seasonal snow estimates are available as snow water equivalent (SWE) from process-based reanalysis products or locally from in situ measurements. While the reanalysis products are computationally expensive and available at only fixed spatial and temporal resolutions, the in situ measurements are highly localized and sparse. To address these issues and enable the analysis of the effect of a large suite of physical, morphological, and geological conditions on the presence and amount of snow, we build a Long Short-Term Memory (LSTM) network, which is able to estimate the SWE based on time series input of the various physical/meteorological factors as well as static spatial/morphological factors. Specifically, this model breaks down the SWE estimation into two separate tasks: (i) a classification task that indicates the presence/absence of snow on a specific day and (ii) a regression task that indicates the height of the SWE on a specific day in the case of snow presence. The model is trained using physical/in situ SWE measurements from the SNOw TELemetry (SNOTEL) snow pillows in the western United States. We will show that trained LSTM models have a classification accuracy of $\geq 93\%$ for the presence of snow and a coefficient of correlation of $\sim 0.9$ concerning their SWE estimates. We will also demonstrate that the models can generalize both spatially and temporally to previously unseen data.
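
The two-task split described in this abstract can be illustrated with a minimal sketch of how a presence classifier and a SWE regressor might be combined into one daily estimate. The abstract does not specify the combination rule, so the function name `combine_heads`, the 0.5 probability threshold, and the clipping of negative regression outputs are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SweEstimate:
    snow_present: bool  # output of the classification task
    swe: float          # output of the regression task, zero when no snow


def combine_heads(p_snow: float, swe_regression: float,
                  threshold: float = 0.5) -> SweEstimate:
    """Combine a snow-presence probability with a regressed SWE value.

    Hypothetical rule: the regression value is used only when the
    classifier indicates snow; otherwise SWE is set to zero.
    The threshold of 0.5 is an assumption, not a value from the paper.
    """
    snow_present = p_snow >= threshold
    # Clip negative regression outputs, since SWE cannot be negative.
    swe = max(swe_regression, 0.0) if snow_present else 0.0
    return SweEstimate(snow_present, swe)
```

Gating the regression output on the classifier keeps snow-free days at exactly zero, which a single regression head tends to approximate only loosely.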

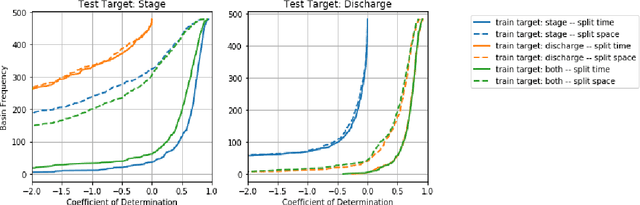

Fine Flood Forecasts: Incorporating local data into global models through fine-tuning

Apr 17, 2025

Abstract: Floods are the most common form of natural disaster and accurate flood forecasting is essential for early warning systems. Previous work has shown that machine learning (ML) models are a promising way to improve flood predictions when trained on large, geographically-diverse datasets. This requirement of global training can result in a loss of ownership for national forecasters who cannot easily adapt the models to improve performance in their region, preventing ML models from being operationally deployed. Furthermore, traditional hydrology research with physics-based models suggests that local data -- which in many cases is only accessible to local agencies -- is valuable for improving model performance. To address these concerns, we demonstrate a methodology of pre-training a model on a large, global dataset and then fine-tuning that model on data from individual basins. This results in performance increases, validating our hypothesis that there is extra information to be captured in local data. In particular, we show that performance increases are most significant in watersheds that underperform during global training. We provide a roadmap for national forecasters who wish to take ownership of global models using their own data, aiming to lower the barrier to operational deployment of ML-based hydrological forecast systems.

AI Increases Global Access to Reliable Flood Forecasts

Aug 10, 2023

Abstract: Floods are one of the most common and impactful natural disasters, with a disproportionate impact in developing countries that often lack dense streamflow monitoring networks. Accurate and timely warnings are critical for mitigating flood risks, but accurate hydrological simulation models typically must be calibrated to long data records in each watershed where they are applied. We developed an Artificial Intelligence (AI) model to predict extreme hydrological events at timescales up to 7 days in advance. This model significantly outperforms current state of the art global hydrology models (the Copernicus Emergency Management Service Global Flood Awareness System) across all continents, lead times, and return periods. AI is especially effective at forecasting in ungauged basins, which is important because only a few percent of the world's watersheds have stream gauges, with a disproportionate number of ungauged basins in developing countries that are especially vulnerable to the human impacts of flooding. We produce forecasts of extreme events in South America and Africa that achieve reliability approaching the current state of the art in Europe and North America, and we achieve reliability at between 4 and 6-day lead times that are similar to current state of the art nowcasts (0-day lead time). Additionally, we achieve accuracies over 10-year return period events that are similar to current accuracies over 2-year return period events, meaning that AI can provide warnings earlier and over larger and more impactful events. The model that we develop in this paper has been incorporated into an operational early warning system that produces publicly available (free and open) forecasts in real time in over 80 countries. This work using AI and open data highlights a need for increasing the availability of hydrological data to continue to improve global access to reliable flood warnings.

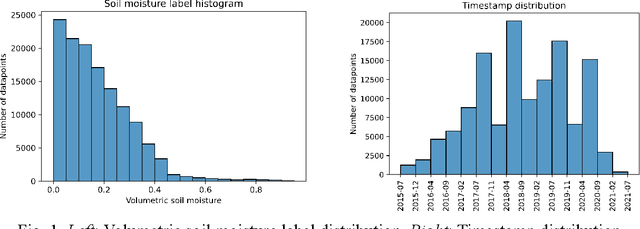

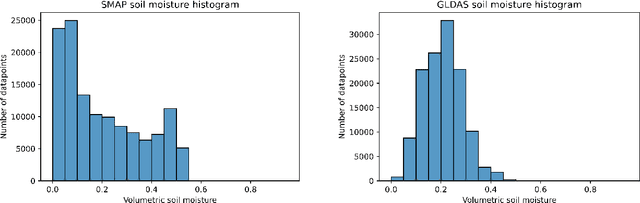

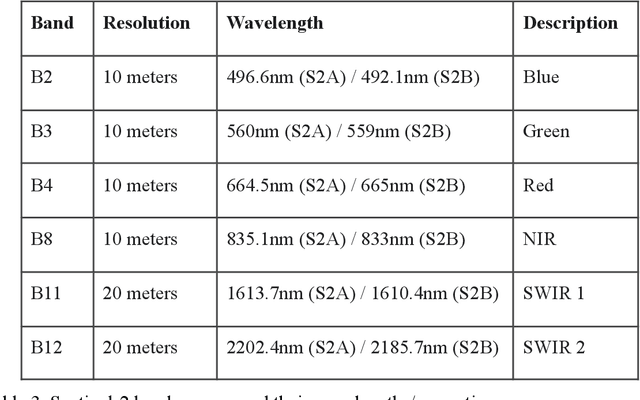

A Machine Learning Data Fusion Model for Soil Moisture Retrieval

Jun 20, 2022

Abstract: We develop a deep learning based convolutional-regression model that estimates the volumetric soil moisture content in the top ~5 cm of soil. Input predictors include Sentinel-1 (active radar), Sentinel-2 (optical imagery), and SMAP (passive radar) as well as geophysical variables from SoilGrids and modelled soil moisture fields from GLDAS. The model was trained and evaluated on data from ~1300 in-situ sensors globally over the period 2015 - 2021 and obtained an average per-sensor correlation of 0.727 and ubRMSE of 0.054, and can be used to produce a soil moisture map at a nominal 320 m resolution. These results are benchmarked against 13 other soil moisture works at different locations, and an ablation study was used to identify important predictors.
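
The two per-sensor metrics quoted in this abstract can be sketched as follows. The code uses the standard definitions of the Pearson correlation and the unbiased RMSE (the RMSE computed after removing the mean from both series); the function names and the sample values in the usage note are illustrative assumptions, and the paper's own evaluation code may differ in details such as masking or averaging.

```python
import math


def _mean(values):
    return sum(values) / len(values)


def pearson_r(pred, obs):
    """Pearson correlation between predictions and observations."""
    mp, mo = _mean(pred), _mean(obs)
    cov = sum((p - mp) * (o - mo) for p, o in zip(pred, obs))
    var_p = sum((p - mp) ** 2 for p in pred)
    var_o = sum((o - mo) ** 2 for o in obs)
    if var_p == 0 or var_o == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_p * var_o)


def ubrmse(pred, obs):
    """Unbiased RMSE: RMSE after subtracting each series' own mean."""
    mp, mo = _mean(pred), _mean(obs)
    squared = [((p - mp) - (o - mo)) ** 2 for p, o in zip(pred, obs)]
    return math.sqrt(_mean(squared))
```

For example, a prediction series that equals the observations plus a constant offset has a ubRMSE of (numerically) zero and a correlation of one, because ubRMSE ignores a constant bias by construction.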

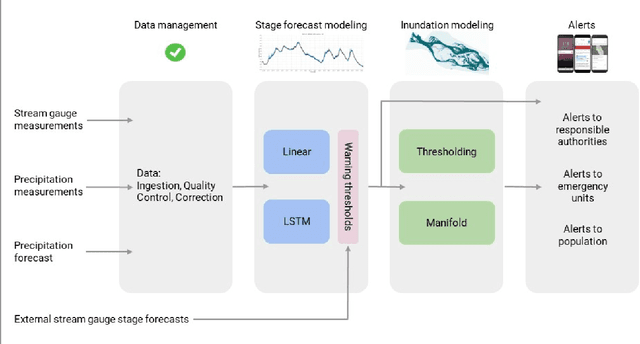

Flood forecasting with machine learning models in an operational framework

Nov 04, 2021

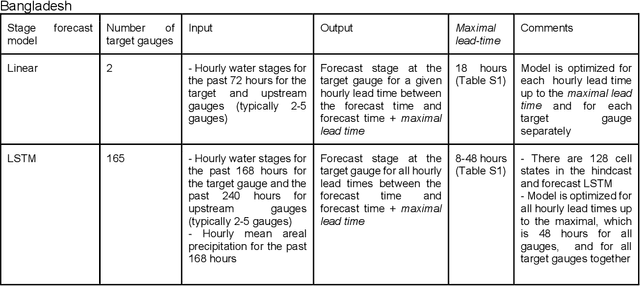

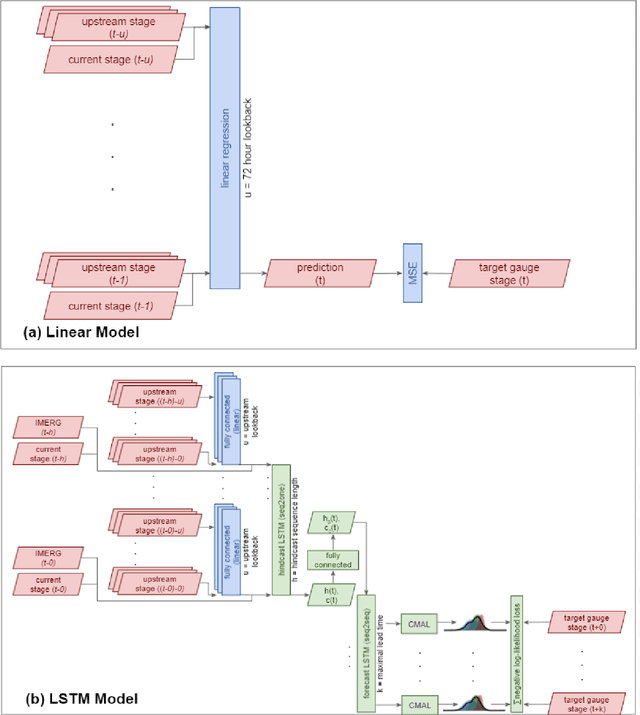

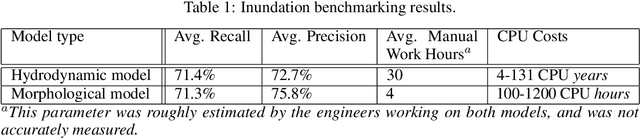

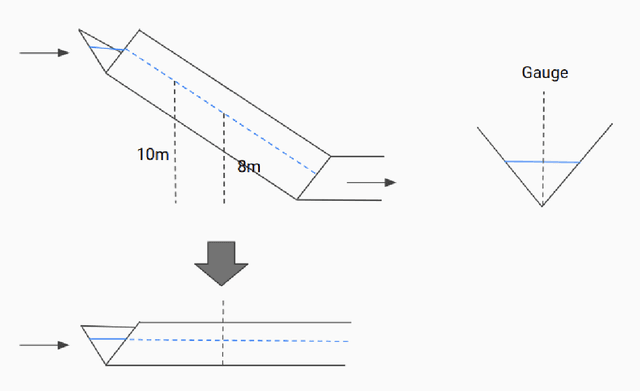

Abstract: The operational flood forecasting system by Google was developed to provide accurate real-time flood warnings to agencies and the public, with a focus on riverine floods in large, gauged rivers. It became operational in 2018 and has since expanded geographically. This forecasting system consists of four subsystems: data validation, stage forecasting, inundation modeling, and alert distribution. Machine learning is used for two of the subsystems. Stage forecasting is modeled with the Long Short-Term Memory (LSTM) networks and the Linear models. Flood inundation is computed with the Thresholding and the Manifold models, where the former computes inundation extent and the latter computes both inundation extent and depth. The Manifold model, presented here for the first time, provides a machine-learning alternative to hydraulic modeling of flood inundation. When evaluated on historical data, all models achieve sufficiently high-performance metrics for operational use. The LSTM showed higher skills than the Linear model, while the Thresholding and Manifold models achieved similar performance metrics for modeling inundation extent. During the 2021 monsoon season, the flood warning system was operational in India and Bangladesh, covering flood-prone regions around rivers with a total area of 287,000 km², home to more than 350M people. More than 100M flood alerts were sent to affected populations, to relevant authorities, and to emergency organizations. Current and future work on the system includes extending coverage to additional flood-prone locations, as well as improving modeling capabilities and accuracy.

MC-LSTM: Mass-Conserving LSTM

Feb 08, 2021

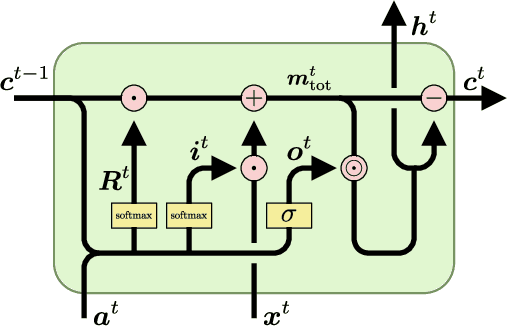

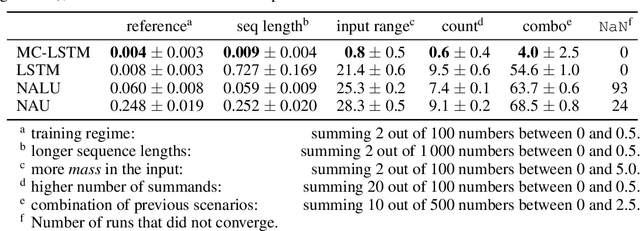

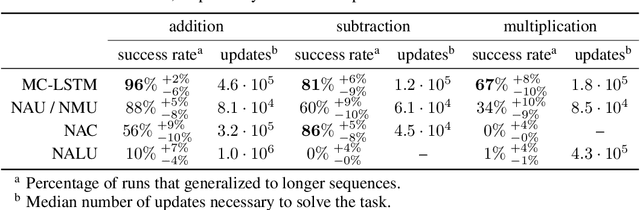

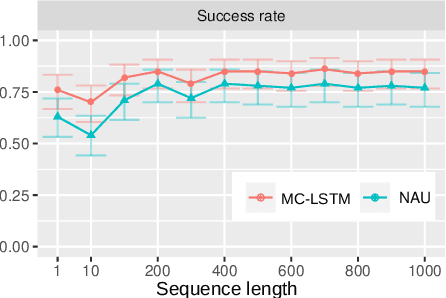

Abstract: The success of Convolutional Neural Networks (CNNs) in computer vision is mainly driven by their strong inductive bias, which is strong enough to allow CNNs to solve vision-related tasks with random weights, meaning without learning. Similarly, Long Short-Term Memory (LSTM) has a strong inductive bias towards storing information over time. However, many real-world systems are governed by conservation laws, which lead to the redistribution of particular quantities -- e.g. in physical and economical systems. Our novel Mass-Conserving LSTM (MC-LSTM) adheres to these conservation laws by extending the inductive bias of LSTM to model the redistribution of those stored quantities. MC-LSTMs set a new state-of-the-art for neural arithmetic units at learning arithmetic operations, such as addition tasks, which have a strong conservation law, as the sum is constant over time. Further, MC-LSTM is applied to traffic forecasting, modelling a pendulum, and a large benchmark dataset in hydrology, where it sets a new state-of-the-art for predicting peak flows. In the hydrology example, we show that MC-LSTM states correlate with real-world processes and are therefore interpretable.
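
The conservation idea in this abstract can be illustrated with a simplified sketch of a single mass-conserving update. It is not the published implementation: the gates here are fixed, hand-picked numbers rather than learned functions of the inputs, and the names `mc_step`, `redistribution`, `input_gate`, and `output_gate` are assumptions. The sketch relies on two normalization constraints: each column of the redistribution matrix sums to one, and the input gate sums to one, so stored and incoming mass is only moved between cells or released as output, never created or destroyed.

```python
def mc_step(cells, x, redistribution, input_gate, output_gate):
    """One mass-conserving update over a list of memory cells.

    cells:          mass currently stored in each cell
    x:              scalar mass entering the system at this step
    redistribution: matrix R where R[j][k] is the fraction of cell k's
                    mass moved to cell j (each column sums to 1)
    input_gate:     fractions of x assigned to each cell (sums to 1)
    output_gate:    fraction of each cell's mass released, in [0, 1]
    Returns (new_cells, outflow).
    """
    n = len(cells)
    # Redistribute the stored mass among the cells.
    moved = [sum(redistribution[j][k] * cells[k] for k in range(n))
             for j in range(n)]
    # Add the incoming mass, split according to the input gate.
    total = [moved[j] + input_gate[j] * x for j in range(n)]
    # Release part of each cell's mass; the remainder stays stored.
    outflow = [output_gate[j] * total[j] for j in range(n)]
    new_cells = [total[j] - outflow[j] for j in range(n)]
    return new_cells, outflow
```

With these constraints, the mass stored after the step plus the mass released equals the mass stored before the step plus the input, which is the kind of balance a rainfall-runoff model is expected to respect.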

Uncertainty Estimation with Deep Learning for Rainfall-Runoff Modelling

Dec 15, 2020

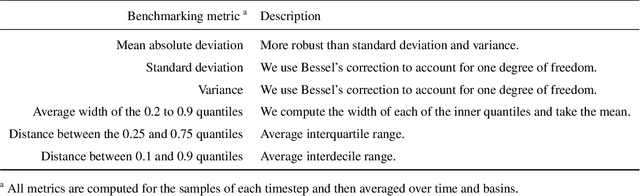

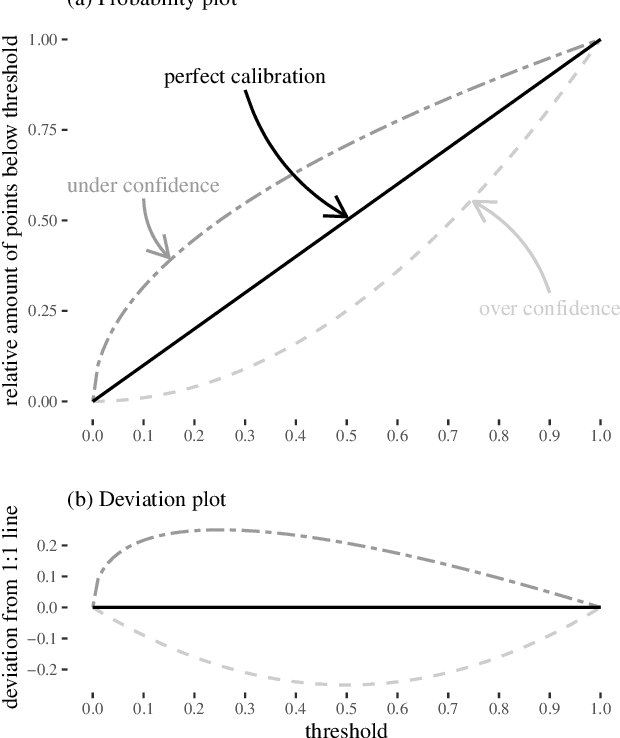

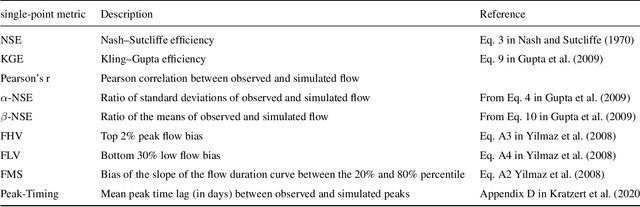

Abstract: Deep Learning is becoming an increasingly important way to produce accurate hydrological predictions across a wide range of spatial and temporal scales. Uncertainty estimations are critical for actionable hydrological forecasting, and while standardized community benchmarks are becoming an increasingly important part of hydrological model development and research, similar tools for benchmarking uncertainty estimation are lacking. We establish an uncertainty estimation benchmarking procedure and present four Deep Learning baselines, out of which three are based on Mixture Density Networks and one is based on Monte Carlo dropout. Additionally, we provide a post-hoc model analysis to put forward some qualitative understanding of the resulting models. Most importantly, however, we show that accurate, precise, and reliable uncertainty estimation can be achieved with Deep Learning.

ML-based Flood Forecasting: Advances in Scale, Accuracy and Reach

Dec 06, 2020

Abstract: Floods are among the most common and deadly natural disasters in the world, and flood warning systems have been shown to be effective in reducing harm. Yet the majority of the world's vulnerable population does not have access to reliable and actionable warning systems, due to core challenges in scalability, computational costs, and data availability. In this paper we present two components of flood forecasting systems which were developed over the past year, providing access to these critical systems to 75 million people who didn't have this access before.

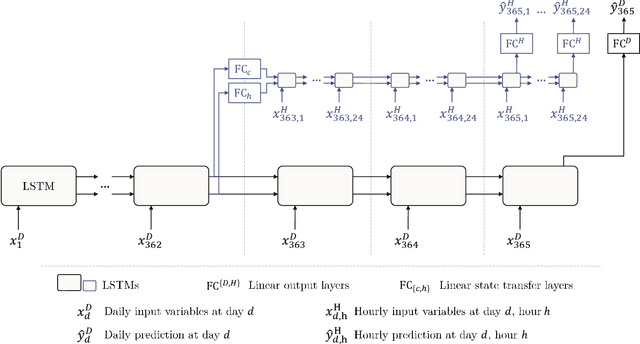

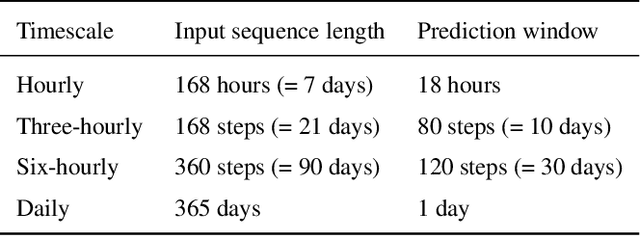

Rainfall-Runoff Prediction at Multiple Timescales with a Single Long Short-Term Memory Network

Oct 15, 2020

Abstract: Long Short-Term Memory Networks (LSTMs) have been applied to daily discharge prediction with remarkable success. Many practical scenarios, however, require predictions at more granular timescales. For instance, accurate prediction of short but extreme flood peaks can make a life-saving difference, yet such peaks may escape the coarse temporal resolution of daily predictions. Naively training an LSTM on hourly data, however, entails very long input sequences that make learning hard and computationally expensive. In this study, we propose two Multi-Timescale LSTM (MTS-LSTM) architectures that jointly predict multiple timescales within one model, as they process long-past inputs at a single temporal resolution and branch out into each individual timescale for more recent input steps. We test these models on 516 basins across the continental United States and benchmark against the US National Water Model. Compared to naive prediction with a distinct LSTM per timescale, the multi-timescale architectures are computationally more efficient with no loss in accuracy. Beyond prediction quality, the multi-timescale LSTM can process different input variables at different timescales, which is especially relevant to operational applications where the lead time of meteorological forcings depends on their temporal resolution.

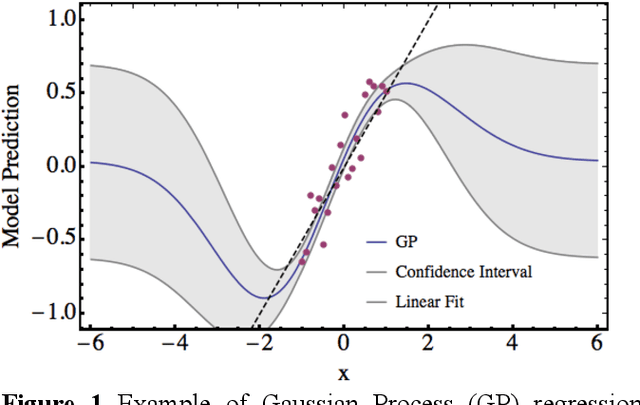

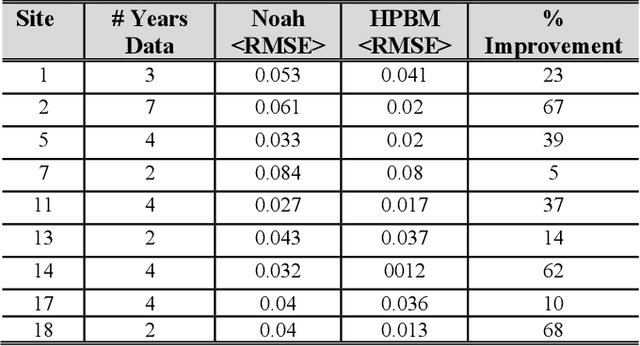

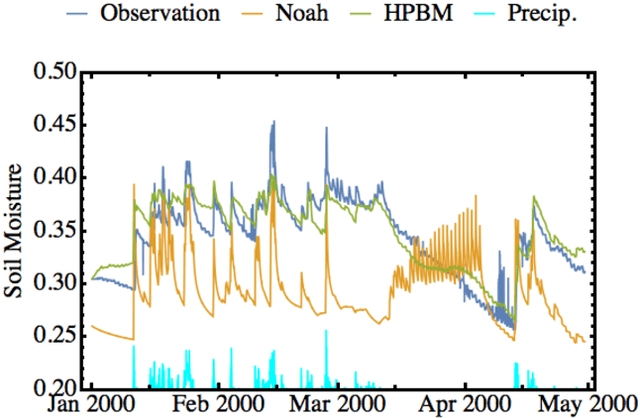

Combining Parametric Land Surface Models with Machine Learning

Feb 14, 2020

Abstract: A hybrid machine learning and process-based-modeling (PBM) approach is proposed and evaluated at a handful of AmeriFlux sites to simulate the top-layer soil moisture state. The Hybrid-PBM (HPBM) employed here uses the Noah land-surface model integrated with Gaussian Processes. It is designed to correct the model only in climatological situations similar to the training data; otherwise, it reverts to the PBM. In this way, our approach avoids bad predictions in scenarios where similar training data is not available and incorporates our physical understanding of the system. Here we assume an autoregressive model and obtain out-of-sample results with upwards of a 3-fold reduction in the RMSE using a one-year leave-one-out cross-validation at each of the selected sites. A path is outlined for using hybrid modeling to build global land-surface models with the potential to significantly outperform the current state-of-the-art.
