Martin Gauch

AI Increases Global Access to Reliable Flood Forecasts

Aug 10, 2023

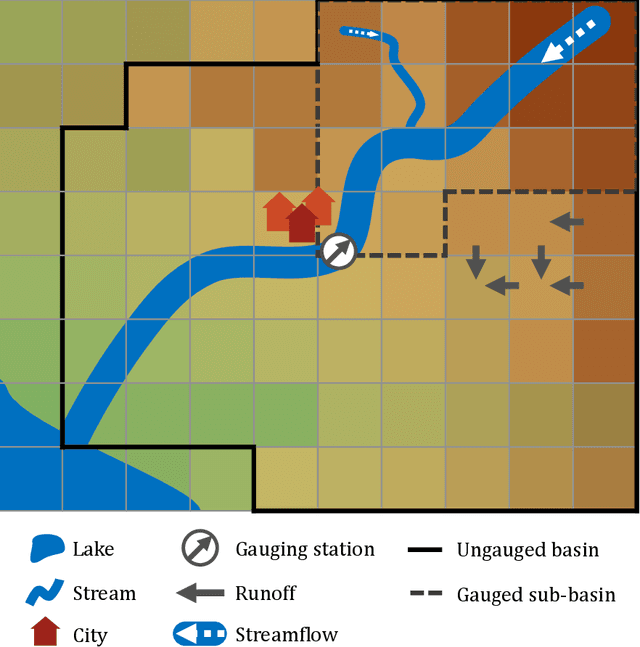

Abstract: Floods are one of the most common and impactful natural disasters, with a disproportionate impact in developing countries that often lack dense streamflow monitoring networks. Accurate and timely warnings are critical for mitigating flood risks, but accurate hydrological simulation models typically must be calibrated to long data records in each watershed where they are applied. We developed an Artificial Intelligence (AI) model to predict extreme hydrological events at timescales up to 7 days in advance. This model significantly outperforms the current state-of-the-art global hydrology model (the Copernicus Emergency Management Service Global Flood Awareness System) across all continents, lead times, and return periods. AI is especially effective at forecasting in ungauged basins, which is important because only a few percent of the world's watersheds have stream gauges, with a disproportionate number of ungauged basins in developing countries that are especially vulnerable to the human impacts of flooding. We produce forecasts of extreme events in South America and Africa that achieve reliability approaching the current state of the art in Europe and North America, and we achieve reliability at 4- to 6-day lead times that is similar to that of current state-of-the-art nowcasts (0-day lead time). Additionally, we achieve accuracies over 10-year return period events that are similar to current accuracies over 2-year return period events, meaning that AI can provide warnings earlier and for larger and more impactful events. The model that we develop in this paper has been incorporated into an operational early warning system that produces publicly available (free and open) forecasts in real time in over 80 countries. This work using AI and open data highlights a need for increasing the availability of hydrological data to continue to improve global access to reliable flood warnings.

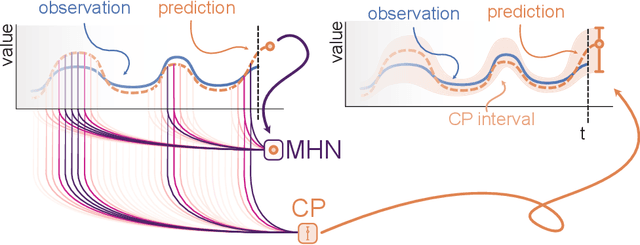

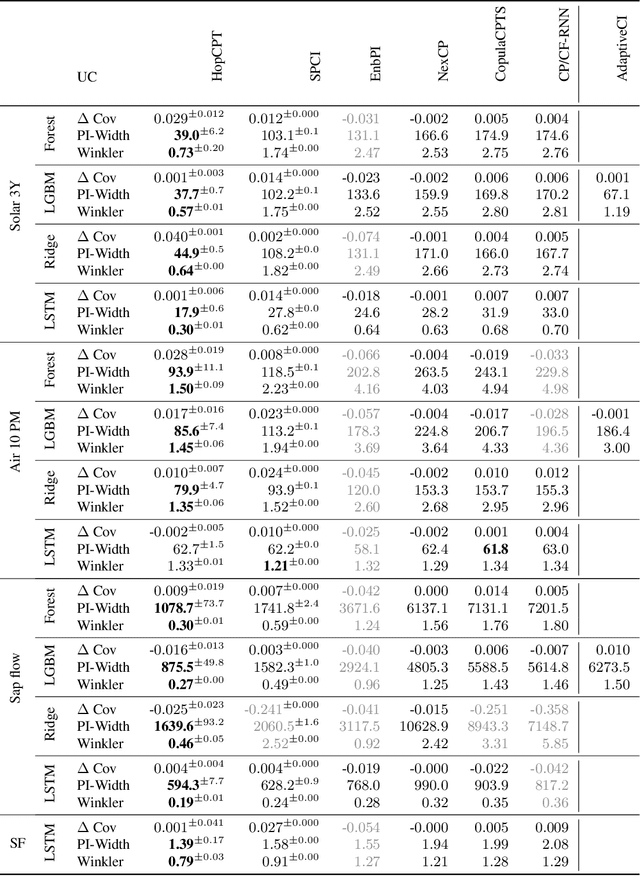

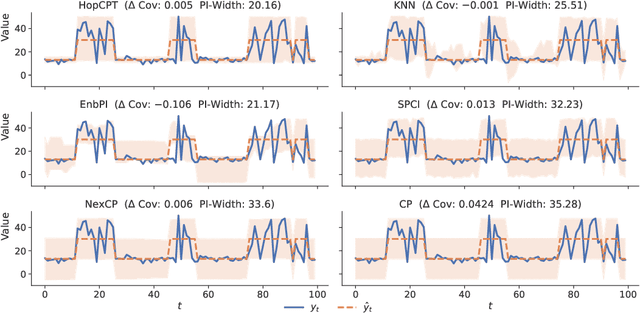

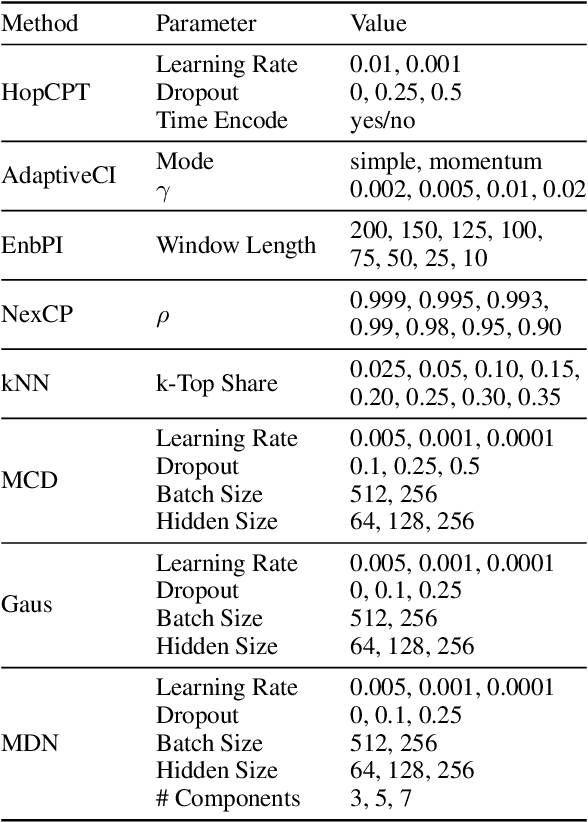

Conformal Prediction for Time Series with Modern Hopfield Networks

Mar 22, 2023

Abstract: Conformal prediction methods for quantifying uncertainty are attracting growing interest and have already been successfully applied to various domains. However, they are difficult to apply to time series because the autocorrelative structure of time series violates basic assumptions required by conformal prediction. We propose HopCPT, a novel conformal prediction approach for time series that not only copes with temporal structures but leverages them. We show that our approach is theoretically well justified for time series where temporal dependencies are present. In experiments, we demonstrate that our new approach outperforms state-of-the-art conformal prediction methods on multiple real-world time series datasets from four different domains.

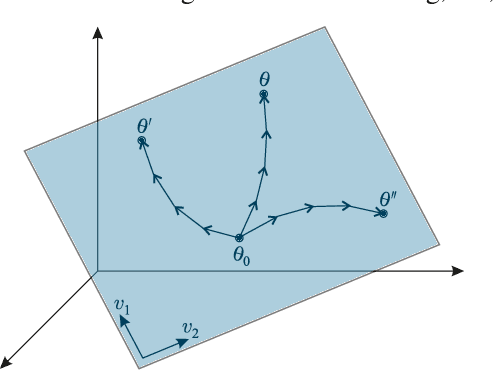

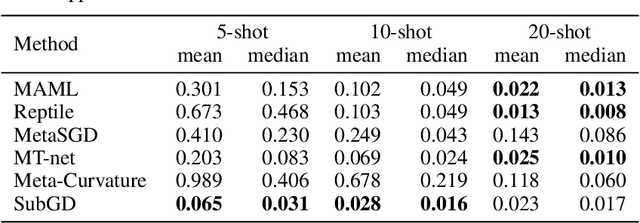

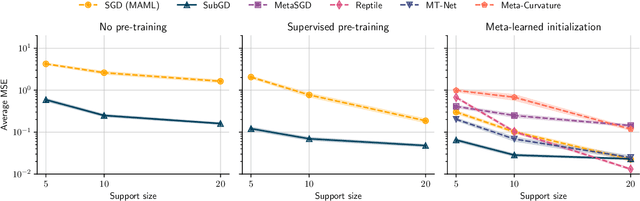

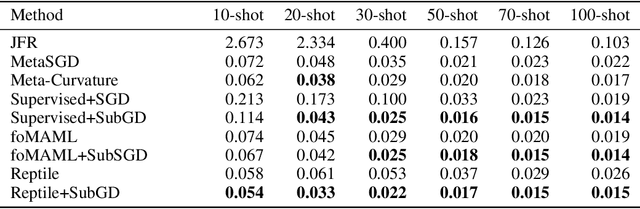

Few-Shot Learning by Dimensionality Reduction in Gradient Space

Jun 07, 2022

Abstract: We introduce SubGD, a novel few-shot learning method based on the recent finding that stochastic gradient descent updates tend to live in a low-dimensional parameter subspace. In experimental and theoretical analyses, we show that models confined to a suitable predefined subspace generalize well for few-shot learning. A suitable subspace fulfills three criteria across the given tasks: it (a) allows the training error to be reduced by gradient flow, (b) leads to models that generalize well, and (c) can be identified by stochastic gradient descent. SubGD identifies these subspaces from an eigendecomposition of the auto-correlation matrix of update directions across different tasks. We demonstrate that low-dimensional suitable subspaces can be identified for few-shot learning of dynamical systems whose varying properties are described by one or a few parameters of the analytical system description. Such systems are ubiquitous among real-world applications in science and engineering. We experimentally corroborate the advantages of SubGD on three distinct dynamical systems problem settings, significantly outperforming popular few-shot learning methods both in terms of sample efficiency and performance.
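The subspace identification step described in this abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function names `fit_subspace` and `project_gradient`, the toy data, and the choice to simply project gradients onto the top-k eigenvectors are all assumptions made for illustration, and the actual method may differ in details such as how eigenvalues are used to scale updates.

```python
import numpy as np


def fit_subspace(update_directions, k):
    """Return the top-k eigenpairs of the second-moment matrix of the updates.

    update_directions: array of shape (n_tasks, n_params), one flattened
    update direction per task (hypothetical input format).
    """
    U = np.asarray(update_directions, dtype=float)
    # Uncentered second-moment ("auto-correlation") matrix of the updates.
    C = U.T @ U / U.shape[0]
    # eigh handles symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(C)
    order = np.argsort(eigvals)[::-1][:k]
    return eigvals[order], eigvecs[:, order]


def project_gradient(grad, basis):
    """Project a gradient onto the span of the orthonormal basis columns."""
    return basis @ (basis.T @ grad)


# Toy data (assumption): updates from 20 tasks that vary only along
# 2 hidden directions of a 10-dimensional parameter space.
rng = np.random.default_rng(0)
hidden, _ = np.linalg.qr(rng.normal(size=(10, 2)))
updates = rng.normal(size=(20, 2)) @ hidden.T

eigvals, basis = fit_subspace(updates, k=2)
grad = rng.normal(size=10)
restricted = project_gradient(grad, basis)
```

In this toy setting the recovered basis spans the same two-dimensional subspace as the hidden directions, so projecting any of the original updates leaves it unchanged, while an arbitrary gradient is restricted to that subspace.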

Uncertainty Estimation with Deep Learning for Rainfall-Runoff Modelling

Dec 15, 2020

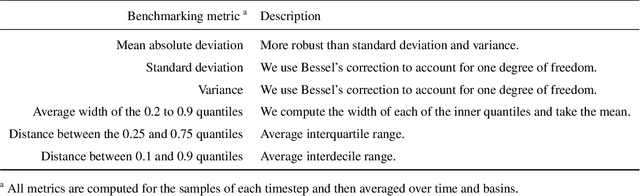

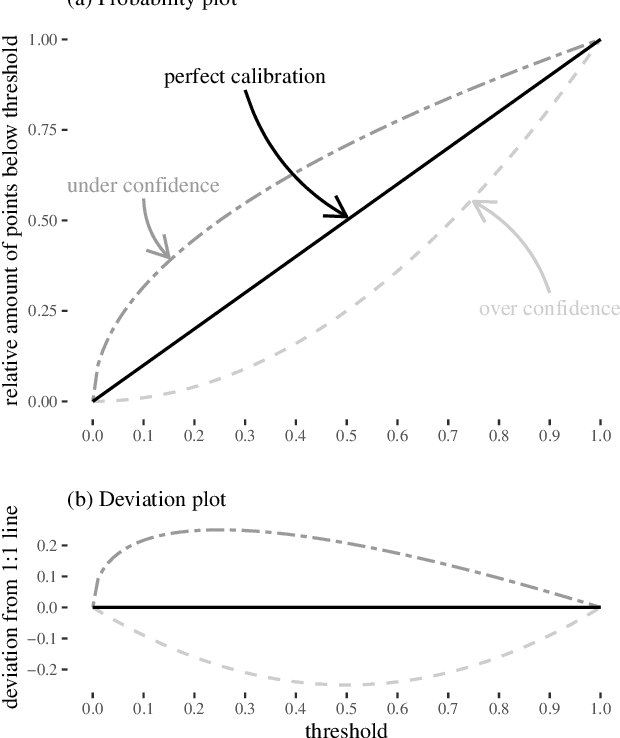

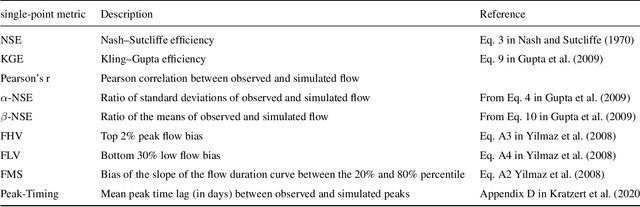

Abstract: Deep Learning is becoming an increasingly important way to produce accurate hydrological predictions across a wide range of spatial and temporal scales. Uncertainty estimations are critical for actionable hydrological forecasting, and while standardized community benchmarks are becoming an increasingly important part of hydrological model development and research, similar tools for benchmarking uncertainty estimation are lacking. We establish an uncertainty estimation benchmarking procedure and present four Deep Learning baselines, three of which are based on Mixture Density Networks and one on Monte Carlo dropout. Additionally, we provide a post-hoc model analysis to offer some qualitative understanding of the resulting models. Most importantly, however, we show that accurate, precise, and reliable uncertainty estimation can be achieved with Deep Learning.

Rainfall-Runoff Prediction at Multiple Timescales with a Single Long Short-Term Memory Network

Oct 15, 2020

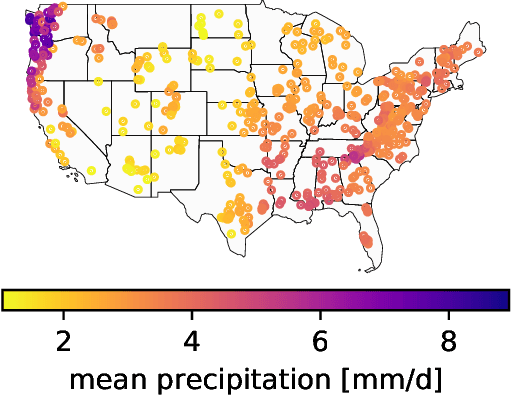

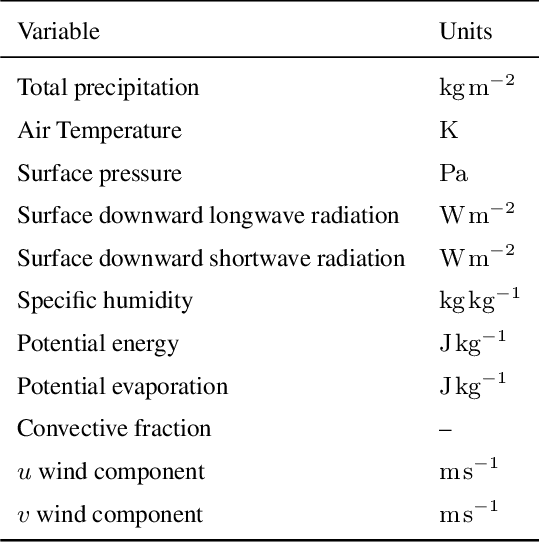

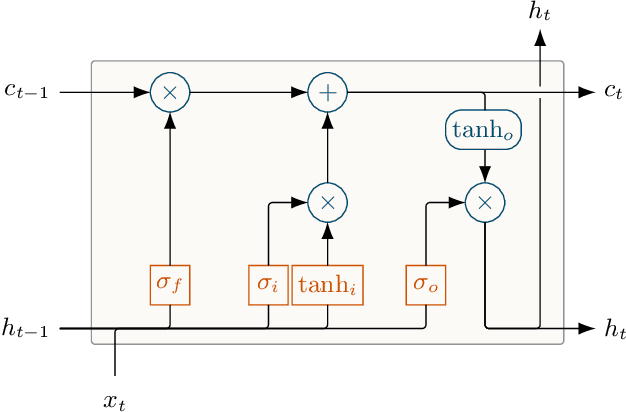

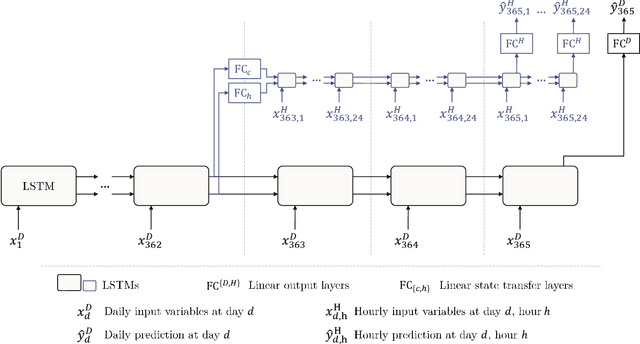

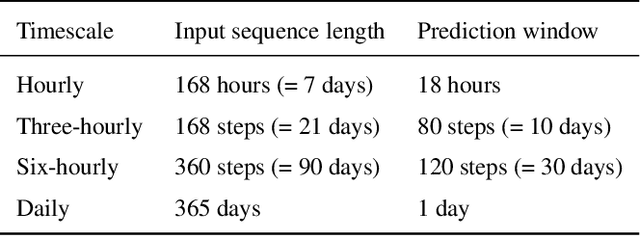

Abstract: Long Short-Term Memory Networks (LSTMs) have been applied to daily discharge prediction with remarkable success. Many practical scenarios, however, require predictions at more granular timescales. For instance, accurate prediction of short but extreme flood peaks can make a life-saving difference, yet such peaks may escape the coarse temporal resolution of daily predictions. Naively training an LSTM on hourly data, however, entails very long input sequences that make learning hard and computationally expensive. In this study, we propose two Multi-Timescale LSTM (MTS-LSTM) architectures that jointly predict multiple timescales within one model, as they process long-past inputs at a single temporal resolution and branch out into each individual timescale for more recent input steps. We test these models on 516 basins across the continental United States and benchmark against the US National Water Model. Compared to naive prediction with a distinct LSTM per timescale, the multi-timescale architectures are computationally more efficient with no loss in accuracy. Beyond prediction quality, the multi-timescale LSTM can process different input variables at different timescales, which is especially relevant to operational applications where the lead time of meteorological forcings depends on their temporal resolution.

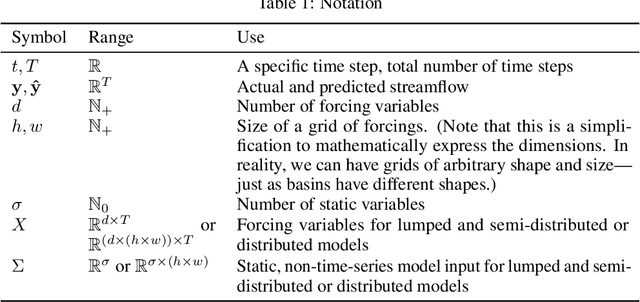

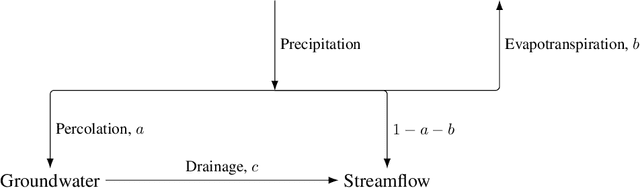

A Data Scientist's Guide to Streamflow Prediction

Jun 05, 2020

Abstract: In recent years, the paradigms of data-driven science have become essential components of physical sciences, particularly in geophysical disciplines such as climatology. The field of hydrology is one of these disciplines where machine learning and data-driven models have attracted significant attention. This offers significant potential for data scientists' contributions to hydrologic research. As in every interdisciplinary research effort, an initial mutual understanding of the domain is key to successful work later on. In this work, we focus on the element of hydrologic rainfall-runoff models and their application to forecast floods and predict streamflow, the volume of water flowing in a river. This guide aims to help interested data scientists gain an understanding of the problem, the hydrologic concepts involved, and the details that come up along the way. We have captured lessons that we have learned while "coming up to speed" on streamflow prediction and hope that our experiences will be useful to the community.

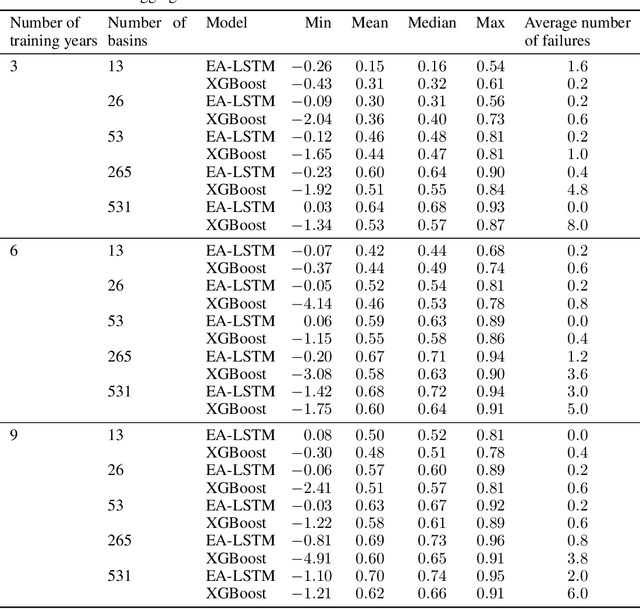

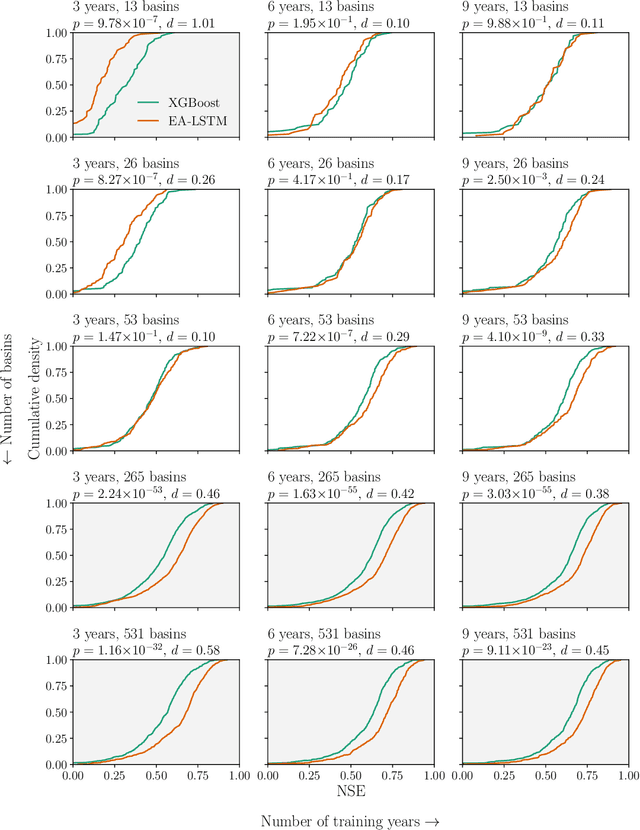

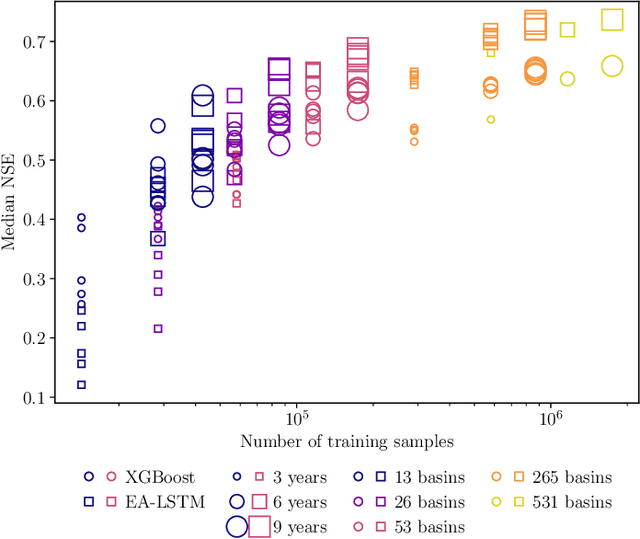

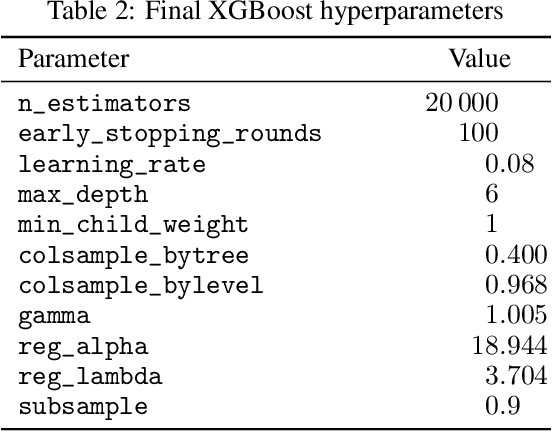

The Proper Care and Feeding of CAMELS: How Limited Training Data Affects Streamflow Prediction

Nov 17, 2019

Abstract: Accurate streamflow prediction largely relies on historical records of both meteorological data and streamflow measurements. For many regions around the world, however, such data are scarce or not available at all. To select an appropriate model for a region with a given amount of historical data, it is therefore indispensable to know a model's sensitivity to limited training data, both in terms of geographic diversity and different spans of time. In this study, we provide decision support for tree- and LSTM-based models. We feed the models meteorological measurements from the CAMELS dataset, and individually restrict the training period length and the number of basins used in training. Our findings show that tree-based models provide more accurate predictions on small datasets, while LSTMs are superior given sufficient training data. This is perhaps not surprising, as neural networks are known to be data-hungry; however, we are able to characterize each model's strengths under different conditions, including the "breakeven point" when LSTMs begin to overtake tree-based models.
