Dana Weitzner

Google Research, Tel-Aviv, Israel

On the Relation Between Linear Diffusion and Power Iteration

Oct 16, 2024

Abstract: Recently, diffusion models have gained popularity due to their impressive generative abilities. These models learn the implicit distribution given by the training dataset, and sample new data by transforming random noise through the reverse process, which can be thought of as gradual denoising. In this work, we examine the generation process as a "correlation machine", where random noise is repeatedly enhanced in correlation with the implicit given distribution. To this end, we explore the linear case, where the optimal denoiser in the MSE sense is known to be the PCA projection. This enables us to connect the theory of diffusion models to the spiked covariance model, where the dependence of the denoiser on the noise level and the amount of training data can be expressed analytically, in the rank-1 case. In a series of numerical experiments, we extend this result to general low rank data, and show that low frequencies emerge earlier in the generation process, where the denoising basis vectors are more aligned to the true data with a rate depending on their eigenvalues. This model allows us to show that the linear diffusion model converges in mean to the leading eigenvector of the underlying data, similarly to the prevalent power iteration method. Finally, we empirically demonstrate the applicability of our findings beyond the linear case, in the Jacobians of a deep, non-linear denoiser, used in general image generation tasks.
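For readers unfamiliar with the power iteration method that the abstract compares against, the following is a minimal sketch in plain Python. It is not the paper's code: the helper names and the toy covariance matrix are hypothetical, chosen only to show how random noise, repeatedly multiplied by a covariance matrix and renormalized, aligns with the leading eigenvector.

```python
import math
import random


def mat_vec(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def normalize(vector):
    """Scale a vector to unit Euclidean norm."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def power_iteration(matrix, num_steps=200, seed=0):
    """Estimate the leading eigenvector and eigenvalue of a symmetric matrix."""
    rng = random.Random(seed)
    # Start from random Gaussian noise.
    v = normalize([rng.gauss(0.0, 1.0) for _ in matrix])
    for _ in range(num_steps):
        # Each multiplication amplifies the component along the leading
        # eigenvector relative to all other components.
        v = normalize(mat_vec(matrix, v))
    # For a unit vector, the Rayleigh quotient estimates the eigenvalue.
    eigenvalue = sum(x * y for x, y in zip(v, mat_vec(matrix, v)))
    return v, eigenvalue


# Toy covariance matrix with one dominant direction (hypothetical values).
covariance = [
    [4.0, 1.0, 0.0],
    [1.0, 3.0, 0.0],
    [0.0, 0.0, 1.0],
]
vector, value = power_iteration(covariance)
print(vector, value)
```

The convergence rate depends on the ratio between the second-largest and largest eigenvalues, which is in the same spirit as the abstract's remark that alignment occurs at a rate depending on the eigenvalues.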

On The Relationship Between Universal Adversarial Attacks And Sparse Representations

Nov 14, 2023

Abstract: The prominent success of neural networks, mainly in computer vision tasks, is increasingly shadowed by their sensitivity to small, barely perceivable adversarial perturbations in image input. In this work, we aim at explaining this vulnerability through the framework of sparsity. We show the connection between adversarial attacks and sparse representations, with a focus on explaining the universality and transferability of adversarial examples in neural networks. To this end, we show that sparse coding algorithms, and the neural network-based learned iterative shrinkage thresholding algorithm (LISTA) among them, suffer from this sensitivity, and that common attacks on neural networks can be expressed as attacks on the sparse representation of the input image. The phenomenon that we observe holds true also when the network is agnostic to the sparse representation and dictionary, and thus can provide a possible explanation for the universality and transferability of adversarial attacks. The code is available at https://github.com/danawr/adversarial_attacks_and_sparse_representations.
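As background for the sparse coding algorithms mentioned in the abstract, here is a minimal sketch of the classical iterative shrinkage thresholding algorithm (ISTA) in plain Python. It is not taken from the linked repository. LISTA unrolls a fixed number of such iterations into network layers and learns the matrices and thresholds instead of fixing them. The function names, the `step` parameter, and the toy data are assumptions made for illustration; `step` is assumed to be no larger than the reciprocal of the largest eigenvalue of DᵀD.

```python
import math


def soft_threshold(values, threshold):
    """Shrink each entry toward zero by `threshold`, zeroing small entries."""
    return [math.copysign(max(abs(v) - threshold, 0.0), v) for v in values]


def ista(dictionary, signal, lam, step, num_steps=100):
    """Approximately minimize 0.5 * ||D x - y||^2 + lam * ||x||_1 over x.

    `dictionary` is a list of rows of D, `signal` is y.
    """
    n_rows = len(dictionary)
    n_atoms = len(dictionary[0])
    code = [0.0] * n_atoms
    for _ in range(num_steps):
        # Residual D x - y of the current sparse code.
        residual = [
            sum(d * c for d, c in zip(row, code)) - s
            for row, s in zip(dictionary, signal)
        ]
        # Gradient of the quadratic term: D^T (D x - y).
        gradient = [
            sum(dictionary[i][j] * residual[i] for i in range(n_rows))
            for j in range(n_atoms)
        ]
        # Gradient step followed by the shrinkage (soft-thresholding) step.
        code = soft_threshold(
            [c - step * g for c, g in zip(code, gradient)], step * lam
        )
    return code


# Toy example with an identity dictionary (hypothetical values). In this
# special case the minimizer is the soft-thresholded signal itself.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
sparse_code = ista(identity, [3.0, 0.5, -2.0], lam=1.0, step=1.0)
print(sparse_code)
```

In this sketch, a small change in the input can move an entry across the threshold and switch an atom on or off, which gives some intuition for the sensitivity of sparse representations that the abstract discusses.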

AI Increases Global Access to Reliable Flood Forecasts

Aug 10, 2023

Abstract: Floods are one of the most common and impactful natural disasters, with a disproportionate impact in developing countries that often lack dense streamflow monitoring networks. Accurate and timely warnings are critical for mitigating flood risks, but accurate hydrological simulation models typically must be calibrated to long data records in each watershed where they are applied. We developed an Artificial Intelligence (AI) model to predict extreme hydrological events at timescales up to 7 days in advance. This model significantly outperforms current state of the art global hydrology models (the Copernicus Emergency Management Service Global Flood Awareness System) across all continents, lead times, and return periods. AI is especially effective at forecasting in ungauged basins, which is important because only a few percent of the world's watersheds have stream gauges, with a disproportionate number of ungauged basins in developing countries that are especially vulnerable to the human impacts of flooding. We produce forecasts of extreme events in South America and Africa that achieve reliability approaching the current state of the art in Europe and North America, and we achieve reliability at between 4 and 6-day lead times that are similar to current state of the art nowcasts (0-day lead time). Additionally, we achieve accuracies over 10-year return period events that are similar to current accuracies over 2-year return period events, meaning that AI can provide warnings earlier and over larger and more impactful events. The model that we develop in this paper has been incorporated into an operational early warning system that produces publicly available (free and open) forecasts in real time in over 80 countries. This work using AI and open data highlights a need for increasing the availability of hydrological data to continue to improve global access to reliable flood warnings.

Neural (Tangent Kernel) Collapse

May 25, 2023

Abstract: This work bridges two important concepts: the Neural Tangent Kernel (NTK), which captures the evolution of deep neural networks (DNNs) during training, and the Neural Collapse (NC) phenomenon, which refers to the emergence of symmetry and structure in the last-layer features of well-trained classification DNNs. We adopt the natural assumption that the empirical NTK develops a block structure aligned with the class labels, i.e., samples within the same class have stronger correlations than samples from different classes. Under this assumption, we derive the dynamics of DNNs trained with mean squared (MSE) loss and break them into interpretable phases. Moreover, we identify an invariant that captures the essence of the dynamics, and use it to prove the emergence of NC in DNNs with block-structured NTK. We provide large-scale numerical experiments on three common DNN architectures and three benchmark datasets to support our theory.

Flood forecasting with machine learning models in an operational framework

Nov 04, 2021

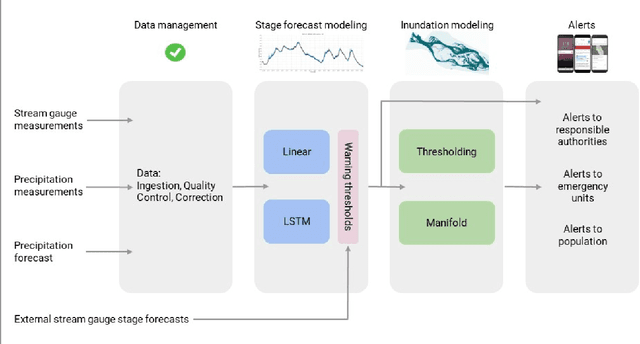

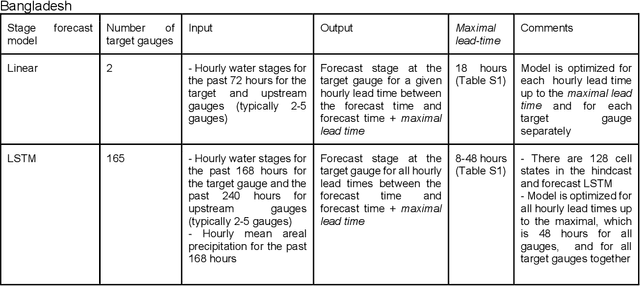

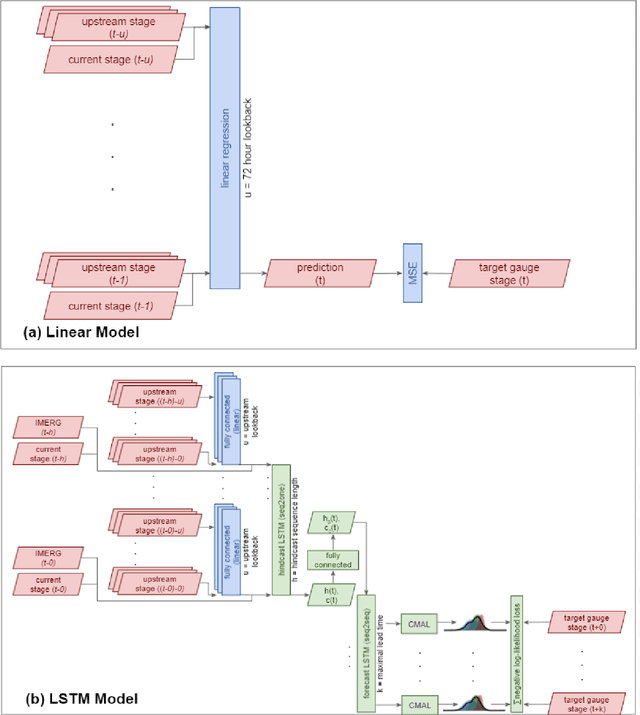

Abstract: The operational flood forecasting system by Google was developed to provide accurate real-time flood warnings to agencies and the public, with a focus on riverine floods in large, gauged rivers. It became operational in 2018 and has since expanded geographically. This forecasting system consists of four subsystems: data validation, stage forecasting, inundation modeling, and alert distribution. Machine learning is used for two of the subsystems. Stage forecasting is modeled with the Long Short-Term Memory (LSTM) networks and the Linear models. Flood inundation is computed with the Thresholding and the Manifold models, where the former computes inundation extent and the latter computes both inundation extent and depth. The Manifold model, presented here for the first time, provides a machine-learning alternative to hydraulic modeling of flood inundation. When evaluated on historical data, all models achieve sufficiently high-performance metrics for operational use. The LSTM showed higher skills than the Linear model, while the Thresholding and Manifold models achieved similar performance metrics for modeling inundation extent. During the 2021 monsoon season, the flood warning system was operational in India and Bangladesh, covering flood-prone regions around rivers with a total area of 287,000 km², home to more than 350M people. More than 100M flood alerts were sent to affected populations, to relevant authorities, and to emergency organizations. Current and future work on the system includes extending coverage to additional flood-prone locations, as well as improving modeling capabilities and accuracy.

Separable Joint Blind Deconvolution and Demixing

Feb 04, 2021

Abstract: Blind deconvolution and demixing is the problem of reconstructing convolved signals and kernels from the sum of their convolutions. This problem arises in many applications, such as blind MIMO. This work presents a separable approach to blind deconvolution and demixing via convex optimization. Unlike previous works, our formulation allows separation into smaller optimization problems, which significantly improves complexity. We develop recovery guarantees, which comply with those of the original non-separable problem, and demonstrate the method performance under several normalization constraints.

Face Authentication from Grayscale Coded Light Field

May 31, 2020

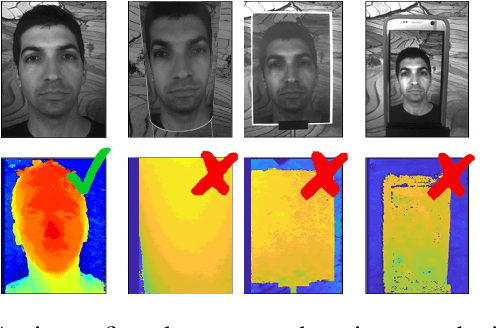

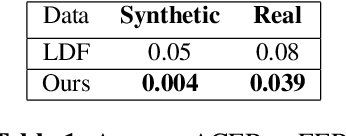

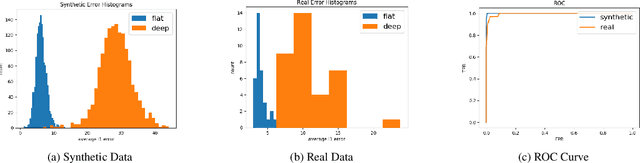

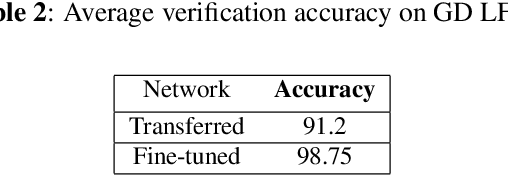

Abstract: Face verification is a fast-growing authentication tool for everyday systems, such as smartphones. While current 2D face recognition methods are very accurate, it has been suggested recently that one may wish to add a 3D sensor to such solutions to make them more reliable and robust to spoofing, e.g., using a 2D print of a person's face. Yet, this requires an additional relatively expensive depth sensor. To mitigate this, we propose a novel authentication system, based on slim grayscale coded light field imaging. We provide a reconstruction-free fast anti-spoofing mechanism, working directly on the coded image. It is followed by a multi-view, multi-modal face verification network that given grayscale data together with a low-res depth map achieves competitive results to the RGB case. We demonstrate the effectiveness of our solution on a simulated 3D (RGBD) version of LFW, which will be made public, and a set of real faces acquired by a light field computational camera.
